Volume 26, Number 2

Wen-Li Chang and Jerry Chih-Yuan Sun*

Institute of Education, National Yang Ming Chiao Tung University; *Corresponding author

Quality MOOCs (massive open online courses) ensure open learning under the top-down guidance of established criteria and standards. With an evaluative approach, course providers can use these guiding frameworks to design and refine courses while fostering students’ targeted open learning competency. This study explores the openness embedded in MOOC course design and the anticipated core competency, drawing on interviews with in-service teachers preparing MOOC lessons. The findings suggest that an evaluative approach remains necessary throughout the cyclical practice of course design, with teachers using prior experience as their primary foundation while also referencing national and international frameworks for course refinement. However, the teachers’ heavy reliance on early experience has resulted in an unstable foundation, in which a bottom-up experiential perspective dominates instead of being balanced with top-down standards. From the teachers’ perspective, task completion is prioritized as the primary learning outcome, even though open learning offers students extensive opportunities to go beyond in-class task challenges. Future studies should address this imbalance with a more diverse respondent pool and continue efforts to triangulate data through mixed-method approaches.

Keywords: course evaluation, criteria and standards, competency-based instruction, open learning, MOOCs, quality MOOCs, teacher perspective

Open education surged in 2008 and gained momentum in 2012, as summarized in Yousef and Sumner’s (2021) evolution timeline. According to Ossiannilsson (2021), the COVID-19 pandemic made 2020 a further milestone in the progression of open learning, which has increasingly taken the form of massive open online courses (MOOCs). MOOCs have become widely recognized as affordable and flexible tools that offer valuable opportunities to learn and access knowledge, support personalized learning environments, and deliver quality educational experiences. Reflecting this ongoing trend toward open learning, Pelletier et al. (2021), in the EDUCAUSE Horizon Report, emphasized MOOCs’ expanding contribution to microcredentialing within a widened scope of competency-based instruction in university settings. In response, the design and implementation of online curricula have broadened, not only to scale up student achievement but also to ensure online course quality through dynamism and inclusiveness. As MOOCs continue to expand in reach, their alignment with quality standards becomes crucial, both for enhancing individual learning experiences and for addressing broader goals such as educational equity and lifelong learning opportunities on a global scale.

In an ideal competency-based instructional setting for developing skills through MOOCs, embedding secure and reliable evaluation into open learning is essential for consolidating the fundamental process of aligning teaching with learning (Johnstone & Soares, 2014). Criteria and standards should therefore be widely adopted for ongoing assessment at all levels (individual, organizational, and governmental), supporting self- and co-assessment of MOOC teaching and learning while responding to an overall paradigm shift toward contextual and personalized assessment (Chiang, 2007; Sadhasivam, 2014; Zulkifli et al., 2020). Queirós (2018) and Sandeen (2013) emphasized the role of assessment in maximizing the effectiveness of MOOCs and encouraged standard assessment methods for validating student learning. Parallel efforts focus on assessing course quality by comparing course arrangements with predetermined national and international standards, with the aim of optimizing learning conditions for each student and moving beyond the common reliance on scholarly reputation and prestige as indicators of quality. In this sense, the precise and evaluative nature of competency-based instruction should be realized in MOOC assessment, which ideally expands inclusive educational opportunities for students from diverse demographic and socioeconomic backgrounds (Mazoué, 2013).

This study is grounded in competency-based instruction principles and draws on constructivist theories to examine how teacher evaluation practices influence MOOC learning outcomes. By aligning instructional design with learner-centred frameworks, the study highlights the role of teacher insights in enhancing both instructional quality and student engagement. Open learning competency refers to the ability to self-regulate within a flexible learning environment, equipping learners to navigate and engage with online resources effectively. Through this instructional approach, quality MOOCs are expected to provide equitable access to education and advance lifelong learning, both of which have gained significance in the post-pandemic landscape. Whereas top-down standards often rely on predefined criteria and benchmarks, the bottom-up experiential perspective highlights practical teaching insights gained from first-hand experience, emphasizing the role of teachers in shaping MOOC design according to real-world dynamics.

This study draws on first-hand interview responses to explore teachers’ perspectives on designing and evaluating MOOCs on ewant, one of the pioneering MOOC platforms in Taiwan. While global platforms such as Coursera and edX emphasize consistency through institution-driven frameworks, ewant balances teacher autonomy with competency standards. Building on related research, such as the quality criteria proposed by Ferreira et al. (2022) based on the ENQA (European Association for Quality Assurance in Higher Education), this study continues that line of discussion. It seeks to deepen understanding of teachers’ knowledge and acceptance of existing standards, and of the relationship between teachers’ understanding of evaluation standards and their perceived core competency for open learning. The study also uses the interview itself as an evaluative measure and a reflective opportunity within open teaching and learning contexts. The specific research questions addressed are as follows.

In response to ENQA’s efforts to establish quality criteria for e-learning provision (Grifoll et al., 2010), the United Nations Educational, Scientific and Cultural Organization (UNESCO) proposed sets of criteria for measuring course quality in three settings: learning in general, online learning, and MOOCs specifically (Patru & Balaji, 2016). The OpenupEd framework was highlighted in particular for the quality assurance of any MOOC; it clearly states course-level quality labels, with a focus on learning outcomes, course content and materials, teaching and learning strategies, and assessment methods. Studies of quality assurance frameworks, including OpenupEd, highlight the importance of top-down standardization for consistent quality metrics (Rosewell & Jansen, 2014). Similarly, earlier research on quality assurance criteria for online courses, such as Wang and Chou (2013), proposed standards covering course content, learning assistance, information credibility and currency, technique and connections, website interface design, and general openness.

Acosta et al. (2020) likewise encouraged expert-based evaluation following international standards, namely the Web Content Accessibility Guidelines (WCAG), to generate guiding principles for developing perceivable, operable, understandable, and robust MOOCs. From this expert perspective, the study focused on quality courses that help students develop a clear notion and proper use of MOOCs with their own sense of direction (i.e., knowing what, how, and why) during online open learning, ideally bringing meaningfulness and learner efficacy. The research on teacher perspectives also emphasized the significance of incorporating experiential insights to create adaptable learning environments (Acosta et al., 2020). By integrating teacher insights with learner-centred competency frameworks, the research pointed to the evolving role of educators in shaping flexible yet robust MOOC quality standards. As an essential addition to course evaluation, Su et al. (2021) measured student perceptions of content material, instructional effect, interaction process, and the operational learning system. Recent studies on MOOC evaluation continue to explore the importance of learner-centred, competency-based frameworks (e.g., Steffens, 2015) and teacher perspectives (e.g., Koukis & Jimoyiannis, 2019).

Beyond technical, organizational, and social aspects, this pedagogical aspect of quality assessment is considered a necessary factor in evaluating web-based educational software or programs for teaching and learning within the triple Student-Teacher-Institution framework of interdependent actors (Lopes et al., 2015). Their systematic review showed that the relevant studies focused clearly on learning process quality and on infrastructural functionality, flexibility, and adaptability, rather than on community-based interactions and/or management cost and efficiency. Best practices for scalable interaction and formative feedback in MOOCs, as emphasized by studies including Kasch et al. (2021) and the OpenupEd quality framework (Rosewell & Jansen, 2014), further illustrate that maintaining quality at scale requires a combination of automated, peer, and content-based interactions. Continuing this line of inquiry into MOOCs, Stracke and Trisolini (2021) retained the mixed combination of aspects and broadened the pedagogical dimension by further considering instructional design, learner perspective, theoretical framework, MOOC classification, overall context, and evaluation.

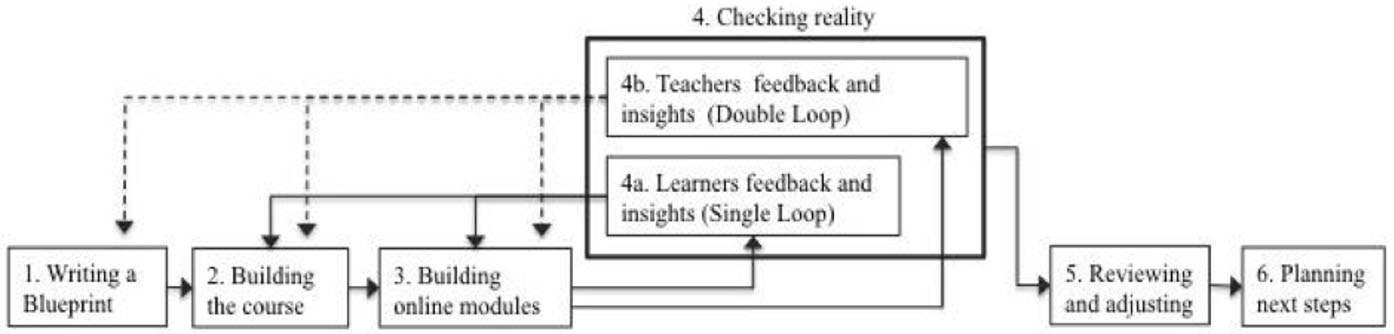

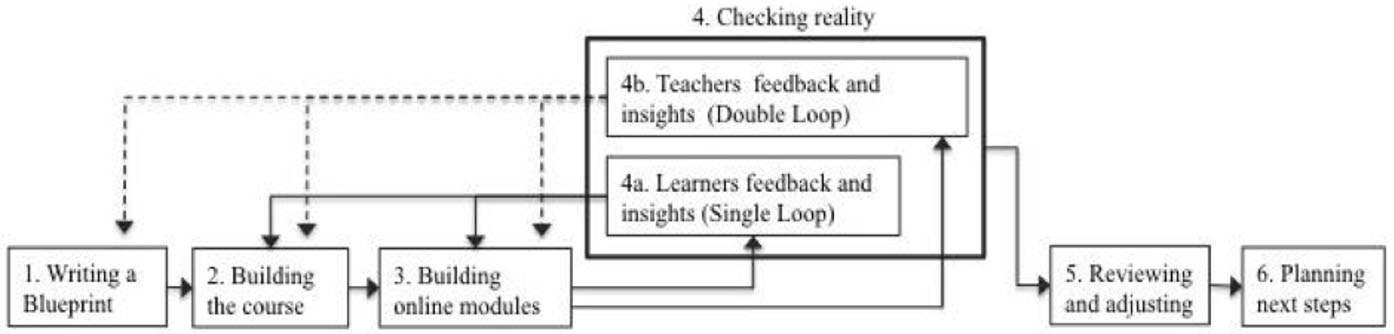

Prior research has significantly advanced the classification of quality evaluation aspects. To refine the definition of quality further, Hovhannisyan and Koppel (2019) argued against treating it as an objective attribute and instead framed it as a measure relative to a specific purpose, with primary considerations of quality from the learners’ point of view, within a MOOC pedagogical framework, in relation to input elements, and on the basis of outcome measures. To place teachers and students at the forefront of assessing MOOC quality, Cirulli et al. (2017) proposed a double-loop evaluation cycle for MOOC design, highlighting the need to balance student and teacher feedback and insights when checking against reality and aligning open learning outcomes with objectives. Figure 1 shows how evaluation interacts with course development and student achievement.

Figure 1

Double-Loop Evaluation Cycle of MOOC Design

Note. From “A Double-Loop Evaluation Process for MOOC Design and Its Pilot Application in the University Domain,” by F. Cirulli, G. Elia, and G. Solazzo, 2017, Knowledge Management & E-Learning, 9(4), p. 440 (https://doi.org/10.34105/j.kmel.2017.09.027). CC BY 4.0.

The Ministry of Education (MOE) in Taiwan established a national framework for evaluating locally produced MOOCs, encouraging course providers and class participants to consider teaching content and learning outcomes carefully, as illustrated in Table 1. Under these government-proposed standards, online courses are examined mainly from an expert perspective. Through instructional design, visual support, and integrated technology, courses should meet the objectives of open learning, maintain attention, facilitate progress, and enhance learning efficiency and motivation, thereby increasing learning engagement in an accessible environment. The MOE is evidently broadening local perspectives on open course evaluation and has extended its evaluation methodology to include a wide range of criteria and standards, although a thoroughly mixed perspective combining teacher and student viewpoints is still lacking in course evaluation and in the overall open teaching and learning process.

Table 1

Summary of MOE Standards for MOOC Evaluation

| Dimension | Criteria | Description |
| --- | --- | --- |
| Teaching content | Instructional design | Aligns with learner needs/objectives |
|  | Visual quality | Maintains learning attention |
|  | Learning efficiency | Facilitates learning progress |
|  | Technology integration | Enhances learning efficiency and motivation |
| Learning outcomes | Course accessibility and learning engagement | Measures enrolment rate, time-of-use rate, and completion rate |

Note. MOE = Ministry of Education in Taiwan; MOOC = massive open online course. Adapted from Criteria for Evaluating Benchmark MOOC Courses (Evaluation Method section), by eLearning Movement Office, 2021, Ministry of Education, Taiwan. In the public domain.

This review synthesizes previous research on how top-down standards and bottom-up perspectives shape MOOC quality and underlines the need for further alignment between these approaches, given the ongoing challenge of balancing quality with flexibility in MOOC design. The present study builds on these insights to examine the role of teachers in ensuring MOOC quality, particularly through teacher-driven evaluations that address both learner needs and established standards. Such teacher-driven quality assurance can allow for more tailored feedback and adaptable learning pathways in line with competency-focused frameworks. The same challenge is evident on the ewant platform, where balancing teacher-driven flexibility with established quality metrics remains essential for supporting diverse learning needs and effective quality assurance; by examining ewant’s approach in this broader context, the study also considers how its findings may apply to other platforms and regions.

The target open learning context is the local ewant platform, which has recruited in-service English language teachers for a 2023 English-medium-instruction (EMI) MOOC program. The program aims to scaffold students as they complete a concurrent, personal online micro-credentialing process on a global MOOC platform (FutureLearn), where English is the primary language of instruction. For students to understand English-taught course content with the help of the ewant learning scaffold, the English-as-a-second/foreign-language (ESL/EFL) learning objective is both medium- and content-focused, and it therefore extends to the multifaceted competencies students need on their way to earning digital certificates. In this dual-track online learning program, students who succeed on both tracks receive certificates of achievement from ewant and FutureLearn at the end of the two-month program.

The four teacher respondents (A, B, C, and D) currently work in local public schools at different educational levels, from primary to higher education, and in different regions of Taiwan, from the north to the centre of the country. Table 2 provides detailed background information about the participants. All four have accumulated extensive professional experience as English language educators, with an average of ten years in the field. Their teaching background includes the use and integration of web-based digital technology in class, especially planning and producing video content shared both synchronously and asynchronously on web-based video channels such as YouTube. Although two of the teachers (A and B) are new to delivering lessons on a MOOC platform, the respondents have up to three years of practical experience in designing and managing online classes, primarily because of the COVID-19 pandemic.

Table 2

Demographic Information About Teacher Respondents

| English Teacher | A | B | C | D |
| --- | --- | --- | --- | --- |
| Position | Full-time primary school teacher in northern Taiwan | Full-time secondary school teacher in northern Taiwan | Full-time secondary school teacher in central Taiwan | Part-time college teacher in central Taiwan |
| Age | Middle-aged | Middle-aged | Middle-aged | Middle-aged |
| First Language (L1) | Chinese | Chinese | Chinese | Chinese |
| Education Background | Graduate-level education major | Graduate-level education major | Graduate-level education major | Graduate-level education major |
| MOOC Experience | MOOC learner | MOOC learner | MOOC teacher and learner | MOOC teacher and learner |
| ewant Experience | Novice | Novice | Experienced | Experienced |

Following a qualitative methodology, this study used a semi-structured interview approach and applied thematic analysis to interpret teachers’ perspectives on MOOC evaluation and competency-based instruction. Interview data were collected from the four teacher respondents and then analysed and interpreted. Each respondent took part in a one-on-one online meeting with the researcher. All meetings were conducted in Chinese, and the researcher translated the responses. To ensure accuracy and consistency with the original meaning, back-translation was used and the translations were cross-checked with the interviewees. These in-depth interviews, each lasting 1.5 to 2 hours, provided qualitative data for thematic analysis, capturing detailed views on competency-based instruction and key elements of quality MOOCs. Each interview took place during the preparatory stage of the dual-track EMI MOOC program.

The primary research tool was a semi-structured interview guide, designed and administered to explore teacher perspectives on key elements of quality MOOCs and their relationship with the perceived core competency for effective open learning. With permission from all teacher respondents, the interview sessions were video recorded using Google Meet for verbatim transcription and subsequently analysed with a focus on the proposed research questions. Given the exploratory nature of the research, a sample of four experienced teachers was used, supplemented by triangulation through multiple data coders. This approach allowed for a detailed exploration of teacher perspectives on MOOC quality, with triangulation enhancing the reliability of the findings despite the small sample size. The interviews served as a foundation for analysing the practical implications of teacher insights for competency-based instruction.

The semi-structured interview questions were developed to collect expert responses indicating the teachers’ accumulated knowledge and acceptance of existing MOOC standards. Their self-reports on quality course design also offer an indirect path to their personal interpretations of the competency required in the open learning process. The interview questions mainly concern MOOC teaching content and learning outcomes, following the Taiwan MOE quality evaluation standards for MOOCs (see the Appendix for the full questions). Centred on these government-mandated core concepts, the questions were intended to examine, in an evaluative manner, teachers’ notions and perceptions at the current course design and preparation stage, and to ground further discussion of how the teachers’ views responded to existing governmental or institutional standards.

Lincoln and Guba (1985) stated that trustworthiness is one measure for evaluating a qualitative study and that it involves the study being credible, transferable, dependable, and confirmable. This study drew on multiple sources of data (the teacher respondents), presented in the interview excerpts and quotes in the following section, to support the truthfulness of the findings. Credibility was enhanced through member checking, in which the respondents were asked to review the findings for accuracy. By including detailed descriptions of the research context, participant characteristics, and data collection methods, the study supports transferability, that is, the applicability of its findings to other settings. The study also sought to establish dependability and reproducibility by providing a complete account of the research process, documented through transcription. To minimize potential researcher bias and achieve confirmability, the study engaged multiple independent investigators for data triangulation.

In the research context of using MOOCs to pave the way for effective open learning, ewant courses are perceived as one of the popular options among the various locally developed and produced MOOCs. The collected interview responses show teachers’ perceptions of the key components of quality MOOCs (research question 1). Although the teacher respondents may have had their own ewant experience prior to this research (as teachers, students, or both), they all described the platform as highly accessible and easy to find online through ordinary web searches over a secure Internet connection. Teacher A remarked, “No difficulty at all [in searching for the ewant platform]. I just googled the name, and there it was.” Teacher D noted, “Yes, [I] reached the site soon after I entered the search keywords.”

Although some respondents considered ewant’s interface design unattractive, the teacher respondents stated that they had little difficulty navigating the platform, even on their first visit to the site for personal learning purposes. They often mentioned searching for topics and courses, emphasizing the overall ease of use and the wide course variety. Teacher A told us, “Not fancy how it looks, personally... but not to the point of saying no, thank you.” Teacher C remarked on the wide variety of course topics:

I was not expecting to have so many course options, to be honest. Now, upon all these topics that came to me right at my first search, I don’t know where to continue... of course, in a good way. I want them all, if possible.

Teachers noted the importance of easy navigation and a wide course variety for student engagement, while some expressed a desire for more dynamic instructional features to better accommodate diverse learning preferences. Teacher D stated, “From entering the search keywords to landing [on] a course or two I like, I’ll say, yeah, pretty good. The design in general can be a bit outdated... but I’m okay with it.” This emphasis reflects a broader commitment to enhancing accessibility and inclusiveness in MOOCs and reveals teachers’ interest in balancing functionality with educational quality. The findings suggest that teachers’ approach to MOOC evaluation aligns closely with broader goals in open education, supporting course-specific improvements and lifelong learning principles by promoting adaptable, competency-based learning pathways. They are consistent with previous qualitative studies on MOOC evaluation (e.g., Haavind & Sistek-Chandler, 2015), which emphasize the importance of balancing experiential insights with standardized quality benchmarks, and they reinforce the role of teacher-driven perspectives as a valuable component of quality assurance that bridges practical insights and formal standards.

In their shared assignment to provide ewant courses for dual-track open learning, the teacher respondents found the role transition fairly manageable, moving from learning through ewant to teaching lessons while still learning with ewant. Clearly aware of their teaching responsibility, they drew on their previous MOOC learning experiences and emphasized the pedagogical use of technology-assisted class or learning management resources (e.g., calendars, announcements, notifications, and discussion forums) in addition to the teacher’s real-time guidance. They believed that these basic settings, by encouraging social interaction and optimizing learning conditions on the site, would ensure greater student motivation and engagement and, ideally, lead to self-regulation in open learning or in learning in general. Teacher B described it this way: “Like I was a student studying MOOCs, [and] now [I’m] a teacher ... to-be, but I know that it’ll be pretty similar, especially the part about time management and self-discipline.” Teacher D spoke of how the learning tools would help with self-regulation:

It matters to get MOOC students to think, and think all the time.... Students should think and reflect, and then think again, as in an ongoing cycle. And MOOC teachers should help by allowing the students to think with digital learning support, in different forms such as collaborative worksheets [and] individualized, calendar-based reminders.... They really help, at least to me... to prepare, to recap, or simply to catch up when feeling lost.

Given that a motivating learning environment encourages students’ persistent efforts, and that learner engagement facilitates behavioural, emotional, and (meta)cognitive commitment, half the teacher respondents moved beyond a focus on course display and settings alone to a parallel emphasis on student orientation and self-directed exploration of course content. At this stage, the design and implementation of class activities for the dual-track MOOC program received the greatest attention, with the aim of promoting not only teacher-led content presentation (incorporating step-wise guidance from the more experienced) but also learner-centred content acquisition and critical thinking (encouraging higher-order participation by novices). This balance between teacher and student efforts helps ensure and maintain course quality, as an effective open learning process demands. Teacher C stated:

So, early on, I was wondering how this dual track of learning works.... I’ve sorted it through and kind of figured out, I guess. The purpose ... should be the learning objectives of students... to develop open learning strategies using the scaffolding MOOC and to obtain the certificate of achievement from the target MOOC.... Students using this worksheet are able to navigate the target MOOC site with focus on information required for completing the gap-fill task.

As defined by the teacher respondents, the quality of an ewant course, or of a MOOC in general, lies in its adaptiveness to and open inclusion of teacher and student needs and of basic and advanced learning scopes. This closely aligns with existing criteria and standards for course evaluation, which place class participants at the forefront, mainly concerning learner needs, attention, progress, motivation, outcomes, and overall efficiency (to the level of strategic use), as suggested in both local and global frameworks (Grifoll et al., 2010; Patru & Balaji, 2016). The ENQA and MOE standards both emphasize core quality criteria for online courses, including course structure, accessibility, and assessment, which are essential for enhancing the learner experience (Rosewell & Jansen, 2014).

In relation to research question 2, however, the government-mandated MOE evaluation standards appeared to have had little contact with the teacher respondents: they were rarely known or referred to, whether as success criteria for quality MOOCs or as sample guidelines for the teachers’ own course design and development. The balance between teachers’ personal experience and formal standards affects MOOC quality in distinct ways across educational levels, highlighting the need for flexible evaluation practices. This tension suggests that a one-size-fits-all approach may not suit diverse educational contexts, underscoring the importance of adaptable standards that can accommodate both teachers’ experiential insights and formal quality criteria (Su et al., 2021).

Using the ENQA and MOE standards as a reference, the study sheds light on teachers’ perceptions of quality, revealing both alignment and gaps between these frameworks and the practical challenges teachers encounter in MOOC evaluation and content alignment. Meanwhile, a relatively low acceptance level was observed when national and international course frameworks were discussed, although the respondents’ limited MOOC experience may have contributed to this. In this regard, Teacher A told us:

Sorry, but [I’ve] never heard of these norms and standards .... From what I’m reading about here ... [I’m] not sure if I do understand [the evaluation items] ... the so-called “learning outcomes” can be hard to define, right? And I see the need to add some other points for consideration. You think?

While a quality MOOC should facilitate class interaction and provide learning support and feedback for a positive learning loop, a competent and effective open learner should be independent and self-directed in their online learning journey. Teachers emphasized that developing students’ self-regulation and independent learning skills is crucial for success in MOOCs. By designing activities that encourage students to take initiative and reflect on their learning, teachers aim to build these competencies. This aligns with prior research showing that MOOCs are not only about content delivery but also about nurturing lifelong learning skills (e.g., Buhl & Andreasen, 2018; Steffens, 2015), which points to the broader educational potential of MOOCs. To cultivate competency for effective open learning, students are therefore encouraged to respond flexibly but responsibly to web-based open resources and practice opportunities, and teachers are to ensure openness through content that is current, relevant to real life, accurate, authentic, and purposeful. Half of the teacher respondents raised this concept themselves and insisted on differentiated and personalized design of class material (by theme and form) rather than basing their self-generated handouts and worksheets entirely on convenient formats or templates (e.g., sample text material provided by an experienced teacher from the previous semester). Teacher B explained:

No need to have all of the lessons taught using first-hand, teacher-made videos, I believe. Just as students learn differently, the class material and learning tasks provided should be differentiated so as to accommodate varied needs and styles. Some lessons can be simply text-based, some with audio and video contents, and some ... mixed, maybe.

Teacher D agreed, commenting on tailoring video content to specific contexts:

Sources of instructional videos can be students’ own recommendations or their own options to make, among teacher recommendations. Those already-existing online videos from outside sources, such as YouTube, can be of great help, as the videos are widely accessible to most students, and the videos can be watched in a way that better meets students’ different learning preferences [than some self-made, low-quality ones]. You know, like at a lower speed or with captions or even subtitles on.

Overall, the respondents preferred a learner-centred, competency-based open learning environment, and most stated that they believed quality MOOCs are very likely to have these characteristics. Such attributes enable students to grow into competent and effective open learners (ideally, by the end of the dual-track MOOC program). Whether on the proposed MOOC learning track or not, students are expected to be able to transfer context-appropriate knowledge, skills, attitudes, and values from the scaffolded open learning context to the real world, where learning support is not guaranteed. In other words, the transfer of knowledge and skills is fundamental for strategic open learners, who are often cast in multiple roles (e.g., constant assessor, critical thinker, careful planner, and monitor) and take on different tasks (e.g., learning what, learning how to learn); transfer ability is therefore an essential part of the core competency for effective open learning.

Indeed, providing structured and extensive training on the flexible transfer of all four aspects (knowledge, skills, attitudes, and values) may be a practical way to realize the precise and adaptive nature of competency-based instruction in quality MOOCs. Yet the teacher respondents who possessed expertise in course design did not highlight the need to do so at the course preparation stage, let alone the need for practice in evaluation and assessment, which is widely recognized as an effective tool for training learners to transfer learning to new contexts. More than half of the teacher respondents began their design with student orientation, presumably hoping (though none stated this explicitly) to establish a fixed, linear instructional order in which the guided students would go on to explore course content and engage with new, interest-led material. Just as most teacher respondents paid little attention to evaluation criteria, as mentioned above, the evaluative approach that should support a successful learning process appears to receive equally little attention, both in its realization through class activities and in its alignment with teaching and learning objectives. Teacher C gave an example:

There would be a pilot in my own homeroom class this semester at school, and tomorrow would be the first class-time. It would be the time for me to test my own design, yeah, as a pilot. Unfinished though, I mean the overall course design, but [the pilot is] sure to be a lesson that helps students with their orientation for the [ewant] site. Let me repeat myself. In this first class, I’ll get the students to know better about the site, using my self-developed worksheet that engages them in a gap-fill task.

An evaluative approach to course design and preparation can still be emphasized in the later development and implementation of MOOCs, and introducing it at that stage does not necessarily hinder the cultivation of the core competency for effective open learning (i.e., flexible transfer of knowledge, skills, attitudes, and values, as manifested in content learning, critical thinking, and even problem solving). However, the absence of assessment and evaluation at an early stage, where they are necessary, is likely to hinder the ideal alignment of teaching and learning for effective and meaningful competency building. Because such an evaluative approach, whether based on widely accepted criteria or personal standards, creates opportunities for demonstration, feedback, and reinforcement of the importance of transfer ability, the lack of these opportunities may reduce the flexibility available for guiding students toward becoming effective open learners.

The MOOC platform has introduced openness to education, mainly through teaching and learning resources and practice opportunities. However, the teacher respondents, whose limited knowledge or low acceptance of formal criteria and standards is clearly observed, tend to plan and design their open courses with a heavy reliance on prior experience, which comprises hidden standards and personal guidelines. This reliance could narrow the focus of these MOOC programs to task completion, diverging from an ideal, learner-centred process approach to students’ open learning journey. While teachers prioritize task completion, they also value encouraging critical thinking, which highlights MOOCs’ potential to foster deeper engagement and improved learning outcomes on a larger scale. Several teachers also reflected on the role of MOOCs in reaching underserved communities, noting that high-quality design and accessible course structures are essential for enabling wider participation. This perspective aligns with the view that MOOCs, when supported by balanced quality standards, can be powerful tools for providing equitable educational access. A recommended pedagogical strategy is to combine task-focused assignments with reflective activities, embedding evaluation directly into the learning process for both teachers and students.

Additionally, using competency-based instruction as a framework not only aids in structuring course evaluations but also provides a basis for enhancing instructional quality across different educational settings. This approach supports a consistent method for assessing and improving course content, ensuring that MOOCs meet both educational standards and the diverse needs of learners globally. To optimize general MOOC learning conditions, teachers are encouraged to seize every possible evaluative opportunity by opening up their course preparation to perspectives from experts with diverse backgrounds and from stakeholders at different levels of involvement, ranging from individual to institutional (e.g., students, colleagues, administrators, and policy-makers).

These insights from the case study of Taiwan’s ewant platform for competency-based MOOC design may be applicable across regions facing similar challenges in balancing task-oriented learning with the competency-building opportunities found in MOOCs. By acknowledging the need for adaptable evaluation methods that can harmonize regional standards with teacher-driven perspectives, this study provides a valuable pedagogical framework for global MOOC platforms. The similarities between ewant and other MOOC platforms highlight the importance of developing evaluation frameworks that align competency-based learning with standardization requirements. By addressing these shared challenges, platforms can better support flexible learning pathways and adapt to global educational needs, enhancing MOOC effectiveness across diverse settings.

To maintain course quality, a broadly defined evaluative approach that treats personal teaching and learning experience as foundational is recommended. A positive attitude toward evaluation criteria and standards (national or international) is also encouraged for ongoing, whole-scale assessment that enhances the precise nature of competency-based instruction, especially in the widely open learning context. Cirulli et al.’s (2017) double-loop evaluation cycle offers a pedagogical blueprint for placing the actual MOOC participants back at the centre of their personalized MOOC teaching and learning process, whether for micro-credentialing or open learning in general. The learning process is likely to be optimized when both teachers and students are involved and when both national and international frameworks are carefully considered, because such a double- or multiple-loop design strengthens the progressive nature of ongoing evaluation and effective self-paced open learning. Meanwhile, ongoing discussions of open educational resources (OER) are advancing, with an emphasis on how competency-based standards in MOOCs can facilitate diverse lifelong learning journeys. The integration of adaptive learning technology and learner analytics can further support this approach by enabling teachers to implement competency-based instruction effectively. Through real-time, data-driven insights, these tools allow educators to monitor student progress closely and adjust instructional strategies to address diverse learner needs, ultimately enhancing the impact of teacher-driven evaluation practices in MOOCs.

The teachers’ responses demonstrate their overall perception that competent open learners develop core knowledge, skills, attitudes, and values along their MOOC learning journey. In examining these responses, several thematic areas emerge as critical components of quality MOOCs: course accessibility, content depth, and learner engagement. Each of these highlights the importance of creating learning conditions that support diverse student needs and expectations. Teachers value a balance between foundational knowledge tasks and opportunities for critical thinking, as this dual approach helps students become adaptable and reflective learners. These thematic areas also reflect the teachers’ belief that a successful MOOC integrates both aesthetic appeal and substantive content to enhance engagement and learning outcomes. Each of these optimal conditions necessarily admits a flexible definition, evolving with societal openness and with individual differences that are reflected in or shaped by the adopted criteria and standards.

The study’s findings further reveal a complex interplay between teachers’ perceptions and established standards, highlighting that teachers often rely on personal teaching experience over formal standards, which can lead to a task-focused rather than a fully competency-based approach. Although frameworks such as the MOE and ENQA standards provide reference points, many teachers remain unfamiliar with these benchmarks, resulting in inconsistencies when aligning MOOC content with quality requirements. This dynamic points to the need for a balanced approach that incorporates both teacher-driven insights and competency-based standards, allowing for both teacher autonomy and adherence to established quality frameworks. Therefore, the exploration of teacher (or participant) perspectives, along with the underlying evaluative approach that adapts to shifting assessment paradigms, requires continued practice and reinforcement toward inclusive and sustainable online educational models. The integration of teacher perspectives into competency-based evaluation aligns this study with recent advancements in the field, offering a model that supports both teacher autonomy and standardized quality measures. This approach provides a pathway for future MOOC designs to accommodate diverse learning environments globally.

For future research, the qualitative methodology adopted here (i.e., interviews) should be combined with quantitative measures in a mixed-methods design to strengthen data triangulation. This would address the limitations of a sample whose small size and limited diversity challenge its representativeness. Additionally, because interviews are an effective way to engage front-line practitioners (teachers) as respondents while encouraging openness toward the open learning trend, participants in different roles, including students, teachers, administrators, and policy-makers, should all be invited to join in-depth discussions and share their thoughts and ideas. By involving a broader range of perspectives, future research could deepen understanding of the factors that contribute to effective open learning environments and create a framework that balances top-down standards with teacher-led insights. Following these directions, the data collected should support continued, meaningful efforts to re-examine existing criteria and standards and to fulfil the broadened definition of competency, as an overall response to open learning trends that serves cyclical evaluation and improvement for both students and teachers.

This research was supported by the National Science and Technology Council (formerly the Ministry of Science and Technology) in Taiwan through grant numbers NSTC 112-2410-H-A49-019-MY3 and MOST 111-2410-H-A49-018-MY4. We would like to thank National Yang Ming Chiao Tung University (NYCU)’s ILTM (Interactive Learning Technology and Motivation, see: http://ILTM.lab.nycu.edu.tw) lab members and the teachers for participating in the study, as well as the reviewers for providing valuable comments. (Jerry Chih-Yuan Sun is the corresponding author.)

Acosta, T., Acosta-Vargas, P., Zambrano-Miranda, J., & Lujan-Mora, S. (2020). Web accessibility evaluation of videos published on YouTube by worldwide top-ranking universities. IEEE Access, 8, 110994-111011. https://doi.org/10.1109/access.2020.3002175

Buhl, M., & Andreasen, L. B. (2018). Learning potentials and educational challenges of massive open online courses (MOOCs) in lifelong learning. International Review of Education, 64, 151-160. https://doi.org/10.1007/s11159-018-9716-z

Chiang, W. T. (2007). Beyond measurement: Exploring paradigm shift in assessment. Journal of Educational Practice and Research, 20(1), 173-200.

Cirulli, F., Elia, G., & Solazzo, G. (2017). A double-loop evaluation process for MOOC design and its pilot application in the university domain. Knowledge Management & E-Learning, 9(4), 433-448. https://doi.org/10.34105/j.kmel.2017.09.027

eLearning Movement Office. (2021). Criteria for evaluating benchmark MOOC courses. Ministry of Education, Taiwan.

Ferreira, C., Arias, A. R., & Vidal, J. (2022). Quality criteria in MOOC: Comparative and proposed indicators. PLOS ONE, 17(12), Article e0278519. https://doi.org/10.1371/journal.pone.0278519

Grifoll, J., Huertas, E., Prades, A., Rodriguez, S., Rubin, Y., Mulder, F., & Ossiannilsson, E. (2010). Quality assurance of e-learning. ENQA Workshop report 14. ENQA. https://www.aqu.cat/doc/doc_39790988_1.pdf

Haavind, S., & Sistek-Chandler, C. (2015, July). The emergent role of the MOOC instructor: A qualitative study of trends toward improving future practice. International Journal on E-learning, 14(3), 331-350. https://www.learntechlib.org/primary/p/150663/

Hovhannisyan, G., & Koppel, K. (2019). Students at the forefront of assessing quality of MOOCs. In EADTU, G. Ubachs, L. Konings, & B. Nijsten (Eds.), The 2019 OpenupEd trend report on MOOCs (pp. 29-32). EADTU. https://openuped.eu/images/Publications/The_2019_OpenupEd_Trend_Report_on_MOOCs.pdf

Johnstone, S. M., & Soares, L. (2014). Principles for developing competency-based education programs. Change: The Magazine of Higher Learning, 46(2), 12-19. https://doi.org/10.1080/00091383.2014.896705

Kasch, J., Van Rosmalen, P., & Kalz, M. (2021). Educational scalability in MOOCs: Analyzing instructional designs to find best practices. Computers & Education, 161, Article 104054. https://doi.org/10.1016/j.compedu.2020.104054

Koukis, N., & Jimoyiannis, A. (2019). MOOCs for teacher professional development: Exploring teachers’ perceptions and achievements. Interactive Technology and Smart Education, 16(1), 74-91. https://doi.org/10.1108/ITSE-10-2018-0081

Lincoln, Y. S., & Guba, E. G. (1985). Naturalistic inquiry. Sage.

Lopes, A. M. Z., Pedro, L. Z., Isotani, S., & Bittencourt, I. I. (2015). Quality evaluation of web-based educational software: A systematic mapping. In D. G. Sampson, R. Huang, G.-J. Hwang, T.-C. Liu, N.-S. Chen, Kinshuk, & C.-C. Tsai (Eds.), 2015 IEEE 15th International Conference on Advanced Learning Technologies (pp. 250-252). IEEE. https://doi.org/10.1109/ICALT.2015.88

Mazoué, J. G. (2013, January 28). The MOOC model: Challenging traditional education. EDUCAUSE Review Online. EDUCAUSE.

Ossiannilsson, E. (2021). MOOCs for lifelong learning, equity, and liberation. In D. Cvetković (Ed.), MOOC (Massive Open Online Courses). IntechOpen. https://dx.doi.org/10.5772/intechopen.99659

Patru, M., & Balaji, V. (2016). Making sense of MOOCs: A guide for policy-makers in developing countries. UNESCO and Commonwealth of Learning. https://doi.org/10.56059/11599/2356

Pelletier, K., Brown, M., Brooks, D. C., McCormack, M., Reeves, J., Arbino, N., Bozkurt, A., Crawford, S., Czerniewicz, L., Gibson, R., Linder, K., Mason, J., & Mondelli, V. (2021). 2021 EDUCAUSE Horizon Report: Teaching and Learning Edition. EDUCAUSE. https://library.educause.edu/-/media/files/library/2021/4/2021hrteachinglearning.pdf

Queirós, R. (Ed.). (2018). Emerging trends, techniques, and tools for massive open online course (MOOC) management. IGI Global.

Rosewell, J., & Jansen, D. (2014). The OpenupEd quality label: Benchmarks for MOOCs. INNOQUAL: The International Journal for Innovation and Quality in Learning, 2(3), 88-100. http://www.openuped.eu/images/docs/OpenupEd_Q-label_for_MOOCs_INNOQUAL-160-587-1-PB.pdf

Sadhasivam, J. (2014). Educational paradigm shift: Are we ready to adopt MOOC? International Journal of Emerging Technologies in Learning (Online), 9(4), 50-55. https://doi.org/10.3991/ijet.v9i4.3756

Sandeen, C. (2013). Assessment’s place in the new MOOC world. Research & Practice in Assessment, 8, 5-12. https://www.rpajournal.com/assessments-place-in-the-new-mooc-world/

Steffens, K. (2015). Competences, learning theories and MOOCs: Recent developments in lifelong learning. European Journal of Education, 50(1), 41-59. https://doi.org/10.1111/ejed.12102

Stracke, C. M., & Trisolini, G. (2021). A systematic literature review on the quality of MOOCs. Sustainability, 13(11), Article 5817. https://doi.org/10.3390/su13115817

Su, P.-Y., Guo, J.-H., & Shao, Q.-G. (2021). Construction of the quality evaluation index system of MOOC platforms based on the user perspective. Sustainability, 13(20), Article 11163. https://doi.org/10.3390/su132011163

Wang, Y. R., & Chou, C. (2013). 开放式课程网站评鉴面向与指标:专家访谈研究 [A study of the evaluation dimensions and criteria for OpenCourseWare websites: Expert interview research]. In GCCCE 2013: The 17th Global Chinese Conference on Computers in Education (pp. 482-485). https://www.gcsce.net/proceedingsFile/2013/GCSCE%202013%20Proceedings.pdf

Yousef, A. M. F., & Sumner, T. (2021). Reflections on the last decade of MOOC research. Computer Applications in Engineering Education, 29(4), 648-665. https://doi.org/10.1002/cae.22334

Zulkifli, N., Hamzah, M. I., & Bashah, N. H. (2020). Challenges to teaching and learning using MOOC. Creative Education, 11(3), 197-205. http://dx.doi.org/10.4236/ce.2020.113014

Your prior MOOC experiences

Your current task to design and teach an ewant course

Your awareness of and attitude toward existing standards and criteria

Teacher Perspective on MOOC Evaluation and Competency-Based Open Learning by Wen-Li Chang and Jerry Chih-Yuan Sun is licensed under a Creative Commons Attribution 4.0 International License.