Volume 26, Number 2

Chrissy Spencer¹, Aakanksha Angra², Kata Dósa³, and Abigail Jones¹

¹School of Biological Sciences, Georgia Institute of Technology; ²American Society of Hematology; ³Centre for Teaching and Learning, Corvinus University of Budapest

The high cost of commercial textbooks in higher education creates barriers to equitable access to learning materials and negatively impacts student performance. Open educational resources (OER) offer a cost-effective alternative, but their impact on student learning remains a critical question. This study directly compared student outcomes between OER and commercial textbooks in a controlled reciprocal design. Forty undergraduate participants completed reading tasks and knowledge assessments using both textbook types, focusing on topics in DNA structure and function and population ecology. Results showed no significant differences in learning gains between OER and commercial textbooks, consistent with prior research. However, participants spent significantly less time on task when using the shorter, learning objective-aligned OER readings, particularly for jargon-heavy DNA content. These findings highlight the potential of OER to reduce cognitive load and improve efficiency without compromising learning outcomes. Future research should explore the role of textbook alignment, length, and student preparation strategies in optimizing learning with OER, particularly in flipped classroom contexts. This study supports OER adoption as a cost-saving measure that maintains academic integrity while enhancing accessibility and efficiency.

Keywords: open educational resources, OER, normalized learning gains, student learning outcomes, think-aloud semi-structured interview, undergraduate introductory biology

Textbooks remain central to educational practices in the United States (Crawford & Snider, 2000; Hilton, 2020; Seaman & Seaman, 2024). However, the high cost of textbooks in higher education creates significant barriers for students (Anderson & Cuttler, 2020; Brandle et al., 2019; Goldrick-Rab, 2016; Hendricks et al., 2017; Hilton et al., 2014; Katz, 2019; Martin et al., 2017; Wiley et al., 2012). Surveys indicate that students who attempt to complete course assignments without the required textbooks underperform academically (Florida Virtual Campus, 2012, 2022; Goldrick-Rab, 2016; Nusbaum et al., 2020). These hidden costs exacerbate inequities in access to education (Blessinger & Bliss, 2016; Bossu et al., 2012; Hockings et al., 2012; Lane, 2008, 2012; Willems & Bossu, 2012).

Open educational resources (OER) provide a solution to this issue. OER are adaptable learning materials, free to use and repurpose, that improve access and equity (Blessinger & Bliss, 2016; Bossu et al., 2012; Lane, 2008, 2012; Smith & Casserly, 2006; Willems & Bossu, 2012). Cost is the first element of COUP, a student-centered framework for research on the efficacy of OER that combines cost with outcomes, usage, and perceptions (Bliss, Robinson, et al., 2013). Cost also remains a pillar of the SCOPE model (Clinton-Lisell et al., 2023), which expands upon COUP by incorporating social justice and reconceptualizing usage as engagement. Most studies of OER engagement and perceptions rely on student or faculty survey data. Surveys reveal that students appreciate the lower costs, and they yield useful insights into student usage and perceptions of OER (Bliss, Hilton, et al., 2013; Bliss, Robinson, et al., 2013; Cuttler, 2019; Grissett & Huffman, 2019; Hendricks et al., 2017; Illowsky et al., 2016; Jhangiani et al., 2018; Martin et al., 2017). Faculty awareness of OER is increasing (Seaman & Seaman, 2024), but real-time comparisons between OER and commercial materials remain vital for understanding student engagement and outcomes (Hilton, 2020).

Parallel to cost, usage, and perceptions, faculty considering OER adoption are guided by the principle of “do no harm” to student learning outcomes when replacing a textbook (Fisher, 2018; Lovett et al., 2008; Ryan, 2019), a principle of central importance in this research. The SCOPE framework developed by Clinton-Lisell and colleagues (2023) expanded the definition of cost beyond financial considerations to include emotional, social-political, time, and academic costs (such as course withdrawal rates and cognitive load). Time and cognitive load are of particular interest to our study. Cognitive load theory (Sweller, 1988) suggests that the perceived difficulty of an academic task, coupled with the required time investment, is directly related to students’ goal setting, their willingness to invest mental effort in learning, and their likelihood of persisting with learning (Feldon et al., 2019). By this reasoning, an OER that requires less time on task and that students perceive as less difficult than a commercial textbook should yield better learning outcomes.

Comparisons of student learning outcomes after course adoptions of OER indicate no significant average effect on academic achievement (Clinton & Khan, 2019; Hendricks et al., 2017; Hilton, 2016; Tlili et al., 2023; Vander Waal Mills et al., 2019). The meta-analyses of learning efficacy by Tlili and colleagues (2023) and by Clinton and Khan (2019), drawing on up to 25 published studies, found variation in student learning outcomes across studies. Despite this variation, Clinton and Khan (2019) detected no effect on learning or assessment scores after a switch from a non-OER to an OER textbook. Refining our understanding of how OER affects learning achievement, Tlili and colleagues (2023) found a statistically significant but negligible effect on learning gains, moderated by subject matter, education level, and geographical location. While researchers have found both gains (Colvard et al., 2018; Grewe & Davis, 2017; Jhangiani et al., 2018; Smith et al., 2020) and losses (Delgado et al., 2019) in learning after a switch to OER, the majority of studies support the meta-analytic finding of no effect (Clinton et al., 2019; Croteau, 2017; Fialkowski et al., 2020; Grissett & Huffman, 2019; Hendricks et al., 2017; Kersey, 2019; Nusbaum et al., 2020; Vander Waal Mills et al., 2019). In short, the effect of converting to an OER textbook on student learning is complex.

In a study that examined three OER learning gains studies, Griggs and Jackson (2017) also indicated that textbook format and preparedness generate variation in student learning. The variation and complexity mapped in the two meta-analyses (Clinton & Khan, 2019; Tlili et al., 2023) may share some of these sources of variance. In addition to textbook type, studies on OER efficacy address the length (Dennen & Bagdy, 2019), quality, and content of readings. Finally, a comparison of non-OER to OER requires that both types of teaching resources be aligned with the course learning objectives (Fink, 2013; Wiggins & McTighe, 2006). Our research question addressed whether an instructor-curated, focused OER textbook would yield better student performance on reading questions, less total time on task, and equivalent learning gains relative to the same student using a commercial textbook. To control for the sources of variation described above, we conducted a controlled experiment that gave each student equal exposure to both OER and commercial textbooks. We hypothesized that students using OER would perform better on reading questions, take less time to answer reading questions, and show learning gains at least equivalent to those achieved with the non-OER text.

We recruited undergraduate, non-biology majors to participate in a non-classroom study to directly compare learning from an OER and a commercial textbook in a within-subjects counterbalanced experiment. Study participants answered five short incoming knowledge evaluation (IKE) questions using either an OER or non-OER reading, then repeated the process with the other textbook type on a different subject-matter topic. We examined learning gains in a semi-structured interview.

Each reading question set, or IKE, consisted of five multiple-choice questions that were based on the learning objectives and covered in the readings. IKE questions were written to require understanding, application, or synthesis of ideas, with one exception where the answer was almost verbatim in both texts. An example IKE question on population ecology was:

The exponential equation of population growth describes

An example IKE question on DNA was:

A newly discovered bacterial species has 35% G in its DNA. What is the % A?
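For reference, the arithmetic this item targets follows from complementary base pairing (Chargaff’s rules). This assumes the species’ genome is double-stranded DNA, so that %A = %T and %G = %C:

```latex
\%\mathrm{C} = \%\mathrm{G} = 35\%
\qquad
\%\mathrm{A} + \%\mathrm{T} = 100\% - 2 \times 35\% = 30\%
\qquad
\%\mathrm{A} = \%\mathrm{T} = \tfrac{30\%}{2} = 15\%
```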

The readings used in the study came from two textbooks. The first was Biological Principles (Choi et al., 2015), a faculty-authored and curated OER text for an introductory biology course for science majors at a US university. The second was Biological Science, 5th edition (Freeman et al., 2014), a commercial textbook published by Pearson Education. Biological Principles was written from the outline of learning objectives authored by course faculty. The professionally edited commercial textbook Biological Science was the second-most-assigned textbook in US college-level biology courses (Ballen & Greene, 2017). Freeman et al.’s Biological Science was the required reading in the course before implementation of the OER textbook. Readings from both textbooks were provided electronically to study participants; however, learning objectives were not provided to the participants.

We selected readings from these textbooks on DNA structure and function and on population ecology. The readings included the information necessary to complete the five short IKE questions, as well as content not assessed on the IKE. The commercial readings had higher word counts and more figures and images (Table 1). The commercial ecology reading also had more equations in boxes. In addition, the commercial readings included topics beyond the learning objectives. Each OER reading also included one video of about 12 to 13 minutes. Some participants accessed only parts of one or both texts, and some participants did not view the video.

Table 1

Word, Image, and Equation Counts for Commercial and OER Textbooks by Subject

| Content | Ecology: OER | Ecology: Commercial | DNA: OER | DNA: Commercial |
|---|---|---|---|---|
| Word count | 724 | 9,193 | 878 | 6,676 |
| Number of figures | 2 | 17 (+ 3 photos) | 5 | 20 (+ 1 photo) |
| Number of equations | 2 | 2 | 0 | 0 |
| Boxes | 0 | 1 | 0 | 0 |
| Number of equations in boxes | 0 | 10 | 0 | 0 |
| Number of videos | 1 | 0 | 1 | 0 |
| Length of video | 11 min 53 s | | 12 min 58 s | |

Note. OER = open educational resource.

Study participants (N = 40) were undergraduate students at a doctoral-granting research university (R1) in the southeastern United States. Their pre-surveys indicated that they had no prior exposure to college-level biology coursework, including AP credit. We distributed participants evenly among four textbook-by-topic groups as we scheduled their interviews. Participants were compensated, and recruitment continued until 40 participants had completed the interview.

In a within-subjects counterbalanced design, each participant completed a think-aloud, semi-structured interview that contained two main tasks and several additional elements:

Specifics of the interview elements are addressed in the next section.

In their interviews, participants used an open education textbook resource and a commercial textbook resource to complete reading tasks on DNA or ecology content. Each participant completed one task with an OER textbook and one task with a traditional commercial textbook. If their first task was on the DNA topic, then their second task was on the ecology topic, and vice versa. This design allowed for a direct comparison of the same student in two different textbook environments. Each participant completed two reading tasks and a “Draw DNA” pre/post-task prompt. We compared IKE scores and time on task between the Biological Principles OER and the non-OER textbook.

In the one-on-one interview session, each participant completed two reading tasks, one in each of two textbook environments: open education or commercial. During each reading task, participants completed five multiple-choice IKE questions. They had simultaneous access to the assigned textbook-by-topic online reading and to the Internet. Participants accessed the readings and the Internet using pre-opened browser tabs on a laptop provided for the interview. Tasks were introduced as formative, with no mention of grades for correct answers. IKE scores were recorded in Qualtrics. Reading task learning change scores were calculated from the proportion correctly answered (Marx & Cummings, 2007; Theobald & Freeman, 2014). We recorded the duration of each reading task in minutes using the screen-recording software Camtasia (https://www.techsmith.com/camtasia/). All interviews were conducted by AA over two months in spring 2018 and lasted up to two hours.

To confirm minimal prior knowledge for each topic, participants completed a pre- and post-assessment. We prompted participants to “Draw DNA” while narrating aloud. The “Draw DNA” pretest provided an independent metric of prior biology content knowledge. The “Draw DNA” posttest documented knowledge recall after both reading tasks were completed. The pre- and post-task drawings and narration were captured with LiveScribe software (https://livescribe.com). We used an inductive approach to quantify student prior knowledge about DNA. Each drawing, together with its verbal explanation, was scored for knowledge of DNA structure and function. We awarded 0 or 1 point per concept, out of 9 possible points. The knowledge types and categories are shown in Table 2. For the post-task “Draw DNA,” we applied the same scoring rubric. We adjusted the post score upward to include pretest concepts that were not explicitly restated in the posttest. We assumed these correct concepts were not forgotten. Rather, participants likely omitted them in favor of newly learned or recalled information when responding to the open-ended prompt. We also administered a “Draw Ecology” prompt, but those data were not readily scorable in a quantitative analysis.

Table 2

Categories of Knowledge About DNA Structure and Function

| Knowledge type | Knowledge category |
|---|---|
| DNA structure | a double helix that includes lines like ladder rungs |
| | rungs on ladder represent two “things” (e.g., bases) |
| | two things pair in specific ways (e.g., base pairs of A = T and G = C) |
| | four different units (e.g., A, T, G, C or similar) |
| | chemical bonds (e.g., hydrogen) |
| | 5′ to 3′ directionality or reference to “antiparallel” structure |
| | nucleotide base with a backbone (e.g., sugar and/or phosphate) |
| DNA function | processes (e.g., mutation, replication, transcription, recombination) |
| | codes for genetic information and/or traits |

We analyzed initial content knowledge using the pre-task “Draw DNA” prompt (interview element 1), learning change scores from the “Draw DNA” task (elements 1 and 7), and IKE performance and duration for each reading task (elements 3 and 5).

Three raters (CS, KD, and AJ) independently scored participants’ DNA content knowledge before and after they completed the reading tasks. Each rater scored all 40 pre-task “Draw DNA” entries. We then used interrater disagreements on 14 of the 40 entries to revise the scoring rubric. Each rater again independently scored all 40 pre-task “Draw DNA” entries. We calibrated how raters interpreted the revised rubric, which informed the post-task rubric (Table 2). Finally, each rater independently re-scored the pre-task entries a third time and then scored the post-task “Draw DNA” entries. Rater agreement was assessed using Krippendorff’s alpha (Hayes & Krippendorff, 2007). We analyzed DNA pretest knowledge scores using the lm() function in R, with textbook and task order as explanatory variables. All statistical analyses were performed using R (Version 4.0.5).
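The agreement statistic can be illustrated with a minimal Python sketch of Krippendorff’s alpha. The study does not state which distance metric it used. This sketch assumes the interval (squared-difference) metric, a common choice for numeric rubric scores. The function name and example scores are hypothetical, and the authors ran their analysis in R, not with this code.

```python
from itertools import combinations


def krippendorff_alpha_interval(units):
    """Krippendorff's alpha with the interval metric, delta(a, b) = (a - b)**2.

    units: one list per scored entry (e.g., one drawing), holding the score
    each rater gave; None marks a missing rating.
    """
    # Only units with at least two ratings are pairable.
    pairable = [[v for v in u if v is not None] for u in units]
    pairable = [u for u in pairable if len(u) >= 2]
    values = [v for u in pairable for v in u]
    n = len(values)
    # Observed disagreement: squared differences within each unit,
    # weighted by 1 / (m_u - 1), where m_u is that unit's number of ratings.
    observed = sum(
        sum((a - b) ** 2 for a, b in combinations(u, 2)) / (len(u) - 1)
        for u in pairable
    )
    # Expected disagreement: squared differences among all pairable values.
    expected = sum((a - b) ** 2 for a, b in combinations(values, 2))
    if expected == 0:
        raise ValueError("alpha is undefined when every rating is identical")
    # alpha = 1 - D_o / D_e, which simplifies to 1 - (n - 1) * observed / expected.
    return 1.0 - (n - 1) * observed / expected


# Hypothetical scores from three raters for four drawings.
scores = [[3, 3, 4], [5, 5, 5], [0, 1, 0], [6, 6, 7]]
print(round(krippendorff_alpha_interval(scores), 3))  # 0.956
```

Values near 1 indicate near-perfect agreement, 0 indicates agreement no better than chance, and negative values indicate systematic disagreement.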

The post-task score was adjusted for participants who demonstrated DNA knowledge categories (Table 2) in the pre-task drawing but did not include them again in the post-task drawing. In these cases, we calculated an adjusted post-“Draw DNA” score that credited those points. We calculated normalized learning change scores as the ratio of the pre- to post-task gain in DNA knowledge score to the maximum possible gain: c = (post − pre) / (postmax − pre), where postmax = 9 is the maximum possible rubric score (Marx & Cummings, 2007; Theobald & Freeman, 2014). The normalized change scores, which are equivalent to learning gains (Hake, 1998; Theobald & Freeman, 2014), were analyzed using lm(). We tested for differences between textbooks, for differences between task orders, and for interaction effects.
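The score adjustment and the normalized change formula can be expressed as a short Python sketch. It covers only the post ≥ pre case, which is the case the formula above describes. This case applies here because every participant’s score increased. Representing rubric concepts as sets of labels, and the helper names, are illustrative assumptions.

```python
def adjusted_post_concepts(pre_concepts, post_concepts):
    """Concepts credited on the adjusted posttest: everything shown in the
    posttest plus pretest concepts that were not restated (assumed retained)."""
    return set(pre_concepts) | set(post_concepts)


def normalized_change(pre, post, post_max=9):
    """Normalized change c = (post - pre) / (post_max - pre), for post >= pre."""
    if post < pre:
        raise ValueError("this sketch only covers post >= pre")
    if pre == post_max:
        raise ValueError("c is undefined when the pretest is already at the maximum")
    return (post - pre) / (post_max - pre)


# Hypothetical single participant.
pre = {"double helix", "base pairing"}
post = {"double helix", "four units", "codes for traits"}
post_score = len(adjusted_post_concepts(pre, post))  # "base pairing" is still credited
print(normalized_change(len(pre), post_score))  # (4 - 2) / (9 - 2) = 2/7
```

Averaging individual c values need not equal the c computed from group means. The reported mean scores of 3.02 and 5.72 give (5.72 − 3.02) / (9 − 3.02) ≈ 0.452. By contrast, the reported mean of the individual scores is 0.462.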

We matched the 10 IKE questions to learning objectives. Then, using the Blooming Biology Tool (Crowe et al., 2008), three researchers and one co-author (CS) independently scored each question according to which level of Bloom’s taxonomy of educational goals would be required to answer it: know, comprehend, apply, analyze, synthesize, or create (Anderson & Krathwohl, 2001).

IKE performance and time on task were analyzed using mixed models with repeated measures (by participant) using the lmer() function in R. The full models included fixed effects of textbook, topic, and task order, with participant as a random effect. To identify the most parsimonious, best-fitting models, we used Akaike’s information criterion (AIC) to compare full models with less parameterized models. We present results from a type III analysis of variance with Satterthwaite’s method for the model with the lowest AIC. When log transformation better approximated a normal data distribution, we completed analyses with both untransformed and log-transformed data. The response variables, IKE performance and time on task, were standardized as z scores. Some participants showed unusually high prior DNA content knowledge, so we completed the mixed model analysis both with and without them. As an alternative analysis, we conducted a fixed-effects analysis of variance (aov()) on the time on task data. In it, we replaced the random effect of “participant” with “taskorder,” the order in which participants used each textbook (i.e., OER first or commercial first).
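The log transformation followed by z-score standardization can be sketched in a few lines of Python. The paper does not state the logarithm base. This sketch assumes the natural log. The choice does not affect the result, because changing the base rescales every logged value by the same positive constant, and z scores are unchanged by such rescaling. The sample standard deviation (n − 1 denominator) is used, as in R’s scale(). The function name and durations are hypothetical.

```python
import math
from statistics import mean, stdev


def log_z_scores(times_min):
    """Log-transform positive durations (minutes), then standardize to z scores."""
    logged = [math.log(t) for t in times_min]
    mu, sd = mean(logged), stdev(logged)  # stdev uses the n - 1 denominator
    return [(x - mu) / sd for x in logged]


# Hypothetical reading-task durations in minutes.
print(log_z_scores([4.5, 9.0, 12.0, 20.0]))
```

Logging first and standardizing second is the only workable order, because z scores can be negative and have no logarithm.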

We completed a power analysis for the general linear model (GLM) using the pwr.f2.test() function. We set treatment number u = 4 (numerator degrees of freedom), denominator degrees of freedom v = 40 − 2 − 1 = 37, a significance level of 0.05, and power of 80%. This analysis determined the effect size (f²) required to detect significant differences in our data.
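As a cross-check of this calculation, here is a dependency-free Python sketch that follows the approach of R’s pwr::pwr.f2.test(). It assumes that function’s convention for the noncentrality parameter, λ = f²(u + v + 1). Power is computed as the probability that a noncentral F(u, v, λ) exceeds the central F critical value. The incomplete beta function is hand-rolled with the standard continued-fraction method. All function names are hypothetical, and the result should be compared with the f² of 0.32 that the authors report, not treated as a replacement for it.

```python
import math

_TINY = 1e-300  # guards against division by zero in Lentz's method

def _betacf(a, b, x, max_iter=500, eps=1e-14):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > _TINY else _TINY)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        even = m * (b - m) * x / ((qam + m2) * (a + m2))
        odd = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        for aa in (even, odd):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > _TINY else _TINY)
            c = 1.0 + aa / c
            c = c if abs(c) > _TINY else _TINY
            h *= d * c
        if abs(d * c - 1.0) < eps:
            break
    return h

def betainc_reg(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b

def f_cdf(x, d1, d2, ncp=0.0, max_terms=1000):
    """F distribution CDF; for ncp > 0, a Poisson(ncp/2) mixture of betas."""
    if x <= 0.0:
        return 0.0
    y = d1 * x / (d1 * x + d2)
    half = ncp / 2.0
    if half == 0.0:
        return betainc_reg(d1 / 2.0, d2 / 2.0, y)
    total = 0.0
    for j in range(max_terms):
        w = math.exp(-half + j * math.log(half) - math.lgamma(j + 1))
        total += w * betainc_reg(d1 / 2.0 + j, d2 / 2.0, y)
        if j > half and w < 1e-16:
            break
    return total

def f_critical(sig_level, d1, d2):
    """Upper critical value of the central F distribution, by bisection."""
    lo, hi = 0.0, 1.0
    while f_cdf(hi, d1, d2) < 1.0 - sig_level:
        hi *= 2.0
    for _ in range(100):
        mid = (lo + hi) / 2.0
        if f_cdf(mid, d1, d2) < 1.0 - sig_level:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0

def f2_power(f2, u, v, crit):
    """Power of the F test with noncentrality lambda = f2 * (u + v + 1)."""
    return 1.0 - f_cdf(crit, u, v, ncp=f2 * (u + v + 1))

def f2_for_power(u, v, sig_level=0.05, power=0.80):
    """Smallest effect size f2 reaching the target power, by bisection."""
    crit = f_critical(sig_level, u, v)
    lo, hi = 0.0, 1.0
    while f2_power(hi, u, v, crit) < power:
        hi *= 2.0
    for _ in range(60):
        mid = (lo + hi) / 2.0
        if f2_power(mid, u, v, crit) < power:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0

# Settings reported for this study: u = 4, v = 40 - 2 - 1 = 37.
print(round(f2_for_power(u=4, v=37), 3))
```

Bisection is used for both the critical value and f² because power increases monotonically with f². It is slower than a root-finding library but keeps the sketch free of dependencies.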

If participants simply guessed at IKE questions, we would predict that limited time on task would generate low scores. To test for this, we screened for a relationship between time on task and IKE performance with Kendall-Theil Sen Siegel nonparametric linear regression using mblm(). Because mblm() is not robust to ties in the ranked data, we also used rank-based estimation regression with rfit() in R.
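The two median-based slope estimators behind the “Kendall-Theil Sen Siegel” label can be sketched in plain Python. Theil-Sen takes the median of all pairwise slopes. Siegel’s repeated-medians variant first takes, for each point, the median slope to every other point, and then takes the median of those medians. This sketch illustrates the estimators only. It is not a reimplementation of R’s mblm(), which, as we understand it, offers both variants, and it omits significance testing. The function names are hypothetical. Pairs with tied x values are skipped because their slope is undefined. The intercept is taken as the median of y − slope·x, an assumed choice, since implementations differ.

```python
from itertools import combinations
from statistics import median


def theil_sen(x, y):
    """Theil-Sen estimator: median of all pairwise slopes."""
    slopes = [(y[j] - y[i]) / (x[j] - x[i])
              for i, j in combinations(range(len(x)), 2) if x[j] != x[i]]
    slope = median(slopes)
    intercept = median([yi - slope * xi for xi, yi in zip(x, y)])
    return slope, intercept


def siegel_repeated_medians(x, y):
    """Siegel's estimator: median over points of each point's median slope."""
    point_medians = []
    for i in range(len(x)):
        s = [(y[j] - y[i]) / (x[j] - x[i])
             for j in range(len(x)) if j != i and x[j] != x[i]]
        if s:
            point_medians.append(median(s))
    slope = median(point_medians)
    intercept = median([yi - slope * xi for xi, yi in zip(x, y)])
    return slope, intercept


# Hypothetical data on the line y = 2x + 1, with one wild outlier.
x = [1, 2, 3, 4, 5]
y = [3, 5, 7, 9, 100]
print(theil_sen(x, y), siegel_repeated_medians(x, y))
```

Both estimators ignore the outlier and recover the slope of 2. With coarse outcomes such as a five-question IKE score, many pairs share a y value (giving zero slopes) or an x value (giving undefined slopes). This is how ties arise in practice.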

The three raters showed high agreement when scoring pre-task DNA content knowledge (Krippendorff’s α = 0.927; 40 entries, 3 raters). For the post-task “Draw DNA” scores, raters had similarly high agreement (Krippendorff’s α = 0.901; 40 entries, 3 raters). Given the strong agreement between raters, we analyzed DNA content knowledge using the average of the raters’ scores for the “Draw DNA” data. Before the reading tasks, study participants had an average DNA content knowledge score of 3.02 ± 0.25. Scores ranged from 0 to 6.66, and two participants scored above 5 of the 9 possible DNA content knowledge points. Pre-task DNA content knowledge did not differ between textbook treatments or task order groups (textbook F = 0.999, p = .324; task order F = 0.018, p = .895). Adjusted post-“Draw DNA” scores were on average 5.72 ± 0.26 (mean ± standard error). All participants increased their DNA knowledge score between the initial and final assessments.

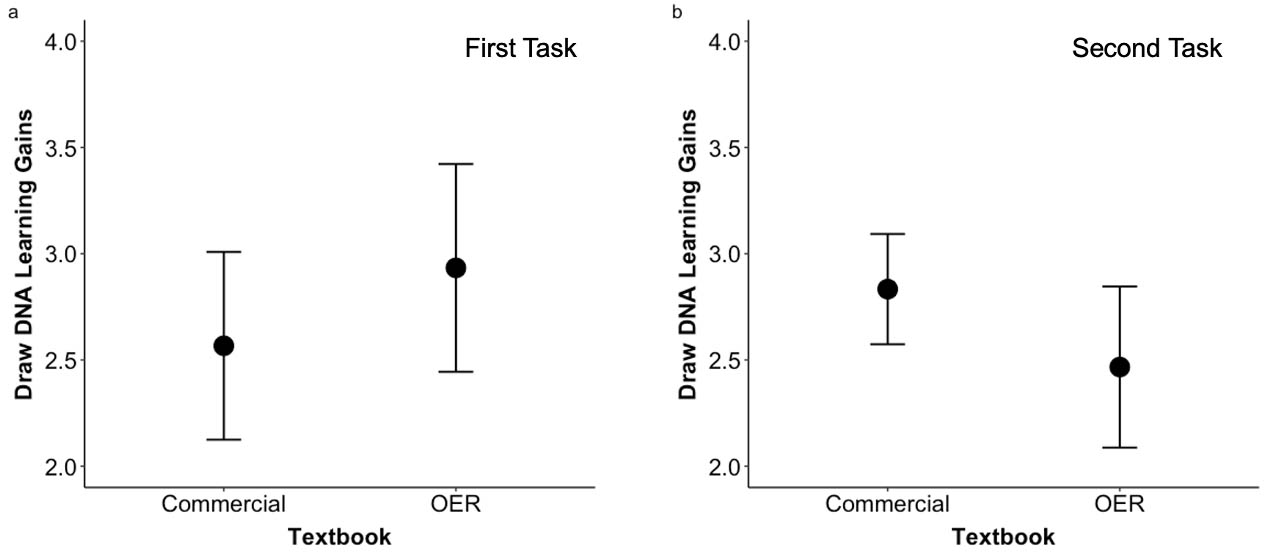

Learning gains from “Draw DNA,” calculated as normalized learning change scores, averaged 0.462 ± 0.029 (mean ± standard error; see Figure 1 for DNA learning gains after the first and second tasks). Most of the raw score increase is attributable to increased recall of DNA structure knowledge (M = 2.1 ± 0.167 points) rather than knowledge about the function of DNA (M = 0.6 ± 0.077 points). “Draw DNA” learning gains did not differ significantly by textbook (F = 0.095, p = .760) or by the order in which textbooks were presented (F = 0.011, p = .917). There was also no interaction effect between textbook type and task order (F = 1.055, p = .311).

Figure 1

Learning Gains for Participants From the “Draw DNA” Pre- and Posttest

Note. Panel a: Participant scores after the first task. Panel b: Participant scores after the second task. OER = open educational resource.

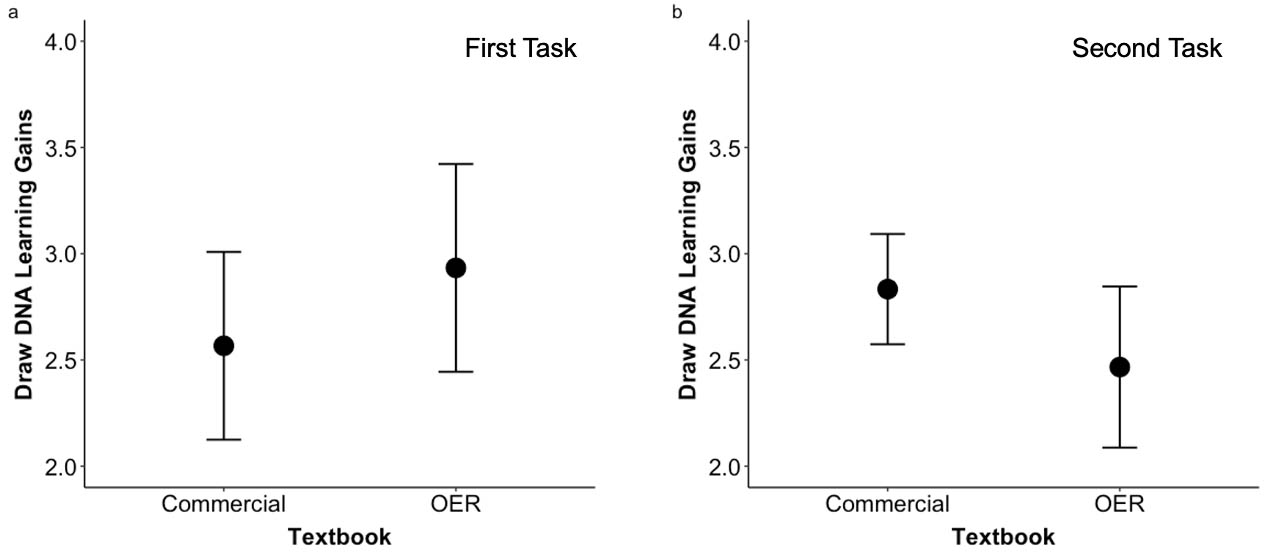

Of the 10 IKE questions, two ecology and three DNA questions required lower-order cognitive skills, while the remaining five required higher-order skills. Participants taking the ecology IKE scored a median of 4 out of 5 possible points and a mean of 4.2, and 45% scored 5. For the five DNA questions, the median was 4 and the mean was 3.8, and 18% scored full marks. The model with the lowest AIC was IKE_ZScore ∼ Textbook + Topic, with the random effect of participant omitted. Participants showed no significant differences in IKE performance between commercial and open education textbooks, F(1,77) = 2.09, p = .153. See Figure 2. Participants performed significantly better on ecology than on DNA questions, F(1,77) = 4.98, p = .029. Removing two outlier participants with high prior content knowledge on the pretest did not alter these results (data not shown). Models fitted with linear mixed effects were all singular, indicating that the estimated random-effect variance lay on the boundary of the feasible parameter space (i.e., was estimated as zero). We therefore also applied the AIC to a fixed-effects analysis of variance model (aov()), omitting the random effect of participant. This analysis revealed the same result as the mixed effect model.

Figure 2

Student Performance on the Five IKE Reading Questions

Note. IKE = incoming knowledge evaluation.

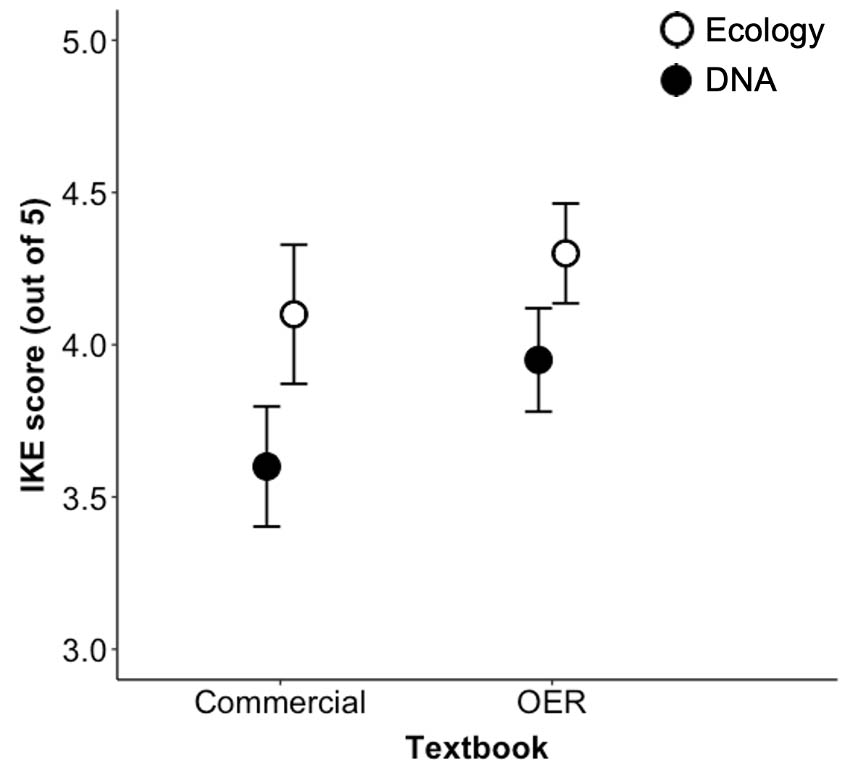

Participants spent significantly more time on the DNA task when using the commercial textbook (log-transformed, z-scored data: F(1,38) = 21.55, p < .001). See Figure 3. Participant also had a significant effect on time on task (likelihood ratio test statistic = 15.81, df = 1, p < .001).

Figure 3

Time on Reading Task When Using Each Type of Textbook

We conducted a fixed-effects analysis of variance on the time on task data, replacing the random effect of participant with the order in which the participant used each textbook (i.e., OER first or commercial first). Data for this analysis were log-transformed to normalize the distribution and standardized as z scores. As with the mixed model analysis, we found significant effects of textbook (F = 8.894, p = .039) and topic (F = 8.209, p = .0055), but no significant effect of task order (F = 1.051, p = .3037). A Tukey’s honestly significant difference (HSD) analysis of interactions showed that the DNA topic was more time-consuming overall. This was especially true when DNA was paired with the commercial textbook, F(1,72) = 4.22, p = .044, or when DNA was the first of the two tasks each participant completed, F(1,72) = 7.85, p = .007.

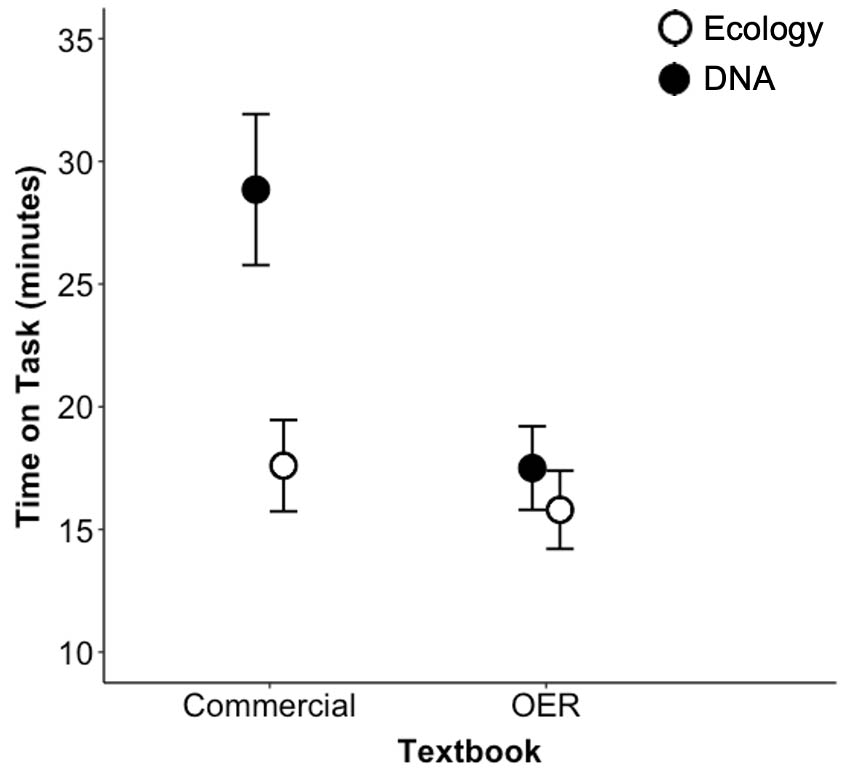

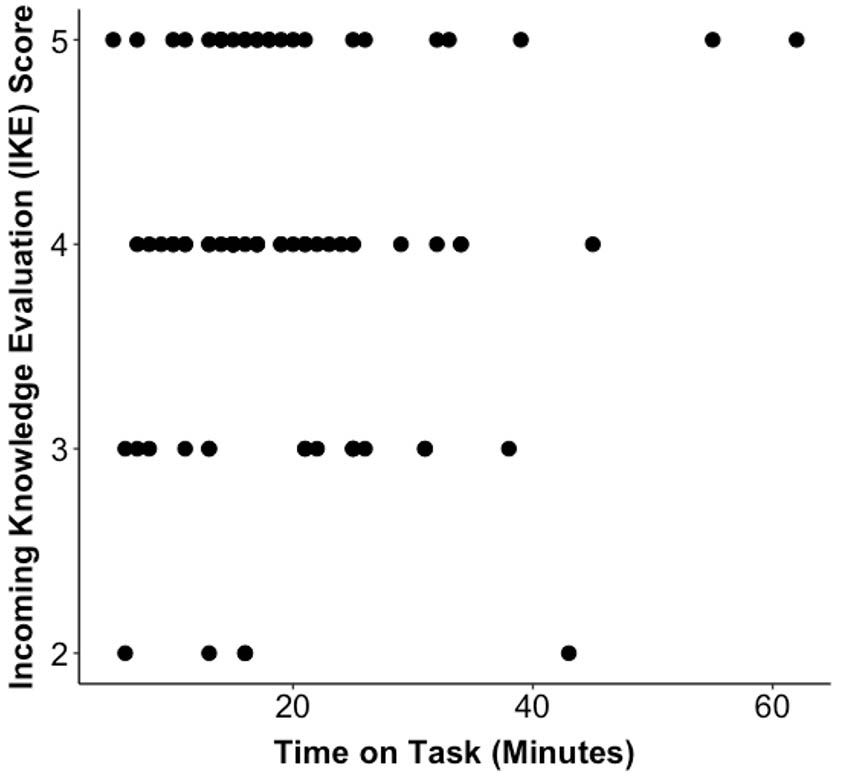

There was no predictive relationship between time on task and IKE score according to either a rank-based estimation regression (Figure 4; t = 0, p = 1) or a Kendall-Theil Sen Siegel nonparametric linear regression (V = 289, p = .886).

Figure 4

No Relationship Between Time on Task and the IKE Score

Note. IKE = incoming knowledge evaluation.

Given the within-subjects counterbalanced design with a sample size of 10 in each of 4 treatment groups, a power analysis for a GLM with 80% power and a 5% significance level indicated that a large effect size (f² = 0.32) would be necessary to detect significant differences in time on task and IKE scores.

Student learning outcomes from both the reading task IKE and the posttest learning gains did not decline with the switch from commercial to OER textbooks, in agreement with the majority of previous studies (Clinton et al., 2019; Clinton & Khan, 2019; Croteau, 2017; Fialkowski et al., 2020; Grissett & Huffman, 2019; Hendricks et al., 2017; Kersey, 2019; Nusbaum et al., 2020; Tlili et al., 2023; Vander Waal Mills et al., 2019). Given that the OER readings and IKE questions were aligned with the same learning objectives, we expected IKE performance to increase. Instead, the IKE scores showed only a non-significant trend toward higher performance when using the Biological Principles OER textbook. Our sample size was too small to detect moderate differences in learning gains between the commercial and OER textbooks.

Significant differences emerged in time spent on DNA content, with longer times for the commercial textbook (Figure 3). Participants completed ecology tasks more quickly, regardless of textbook type, and performed better on ecology IKE questions than on DNA questions (Figure 2). The relative ease of the ecology content on population growth may stem from greater familiarity, more intuitive concepts, or reduced cognitive load (Feldon et al., 2019). Jargon-rich commercial DNA chapters likely increased cognitive load, reducing efficiency (Ou et al., 2022). Alternatively, students may have been better primed for ecology by prior education, or the ecology questions themselves may have been less challenging.

Longer time on task on the less-focused commercial readings suggests their length and complexity increased cognitive load, making it harder to retrieve relevant concepts for IKE questions. The commercial readings (Freeman et al., 2014) contained more jargon than OER readings, adding to comprehension challenges (Hsu, 2014). Future research should examine how factors such as concept density, sentence length, and jargon impact cognitive load and learning.

The shorter, objective-focused OER readings likely explain the faster completion of the DNA task with the OER. Brevity, though underexplored in OER research, appears beneficial for engagement (Dennen & Bagdy, 2019; Howard & Whitmore, 2020). The OER was designed directly from course learning objectives. The commercial text (Freeman et al., 2014), by contrast, included additional material and presented concepts in a different order. Shorter, learning objective-focused OER are not the norm in OER adoption, but brevity and focus motivated the shift to OER in this course’s textbook transformation. Future studies should explore how brevity and focus in OER affect student learning.

Pre-class preparation is key in flipped classrooms (Bassett et al., 2020; Heiner et al., 2014; Sappington et al., 2002). Shorter, directed readings improve engagement and reduce off-task preparation time, which may benefit learning (Baier et al., 2011). This contrasts with comprehensive, unfocused textbook chapters that can overwhelm students (Bloom et al., 1956; Fink, 2013).

One result seems counterintuitive: students did not perform better with a reading aligned to the reading questions than with less well-aligned course materials. This result raises questions about the value of a textbook and suggests a direction for future research on how reading structure can best help students prepare for class. One possibility is that students do not read effectively when preparing for class. In fact, we have ample anecdotal evidence of this from students enrolled in the course. Some students read passively to study instead of using retrieval practice or other deep-learning strategies. Unpublished survey data from the course indicate that a subset of students skip the preparatory reading altogether, a pattern noted by other researchers (Gorzycki et al., 2020; Parlette & Howard, 2010). These students omit the opening step in retrieval practice and instead direct their time elsewhere (Aagaard et al., 2014; Berry et al., 2010). Students may not see skipping the reading as a cost if they believe they will have the opportunity to review and learn more in class. Additionally, cognitively higher-order learning objectives may exceed most students’ ability to learn deeply from a first read alone, especially in a student population in which reading has declined (Gorzycki et al., 2020; Parlette & Howard, 2010). A few study participants completed the second task too quickly to have used the text more than cursorily, but this was not common among the 40 study participants. This “phone-it-in” behavior may be more common among students in a course with readings and low-stakes reading quizzes. A next step is to analyze how students use the textbook as a learning resource when completing a reading quiz. New AI-enhanced digital textbooks present alternative strategies for textbook implementation and efficacy (Koć-Januchta et al., 2022).

Our finding that the OER, at minimum, did no harm to student learning enriches the OER literature on learning outcomes. These results invite new research into the quality and alignment of textbooks and into how students engage with their reading materials. This study directly compared the same participants using OER and commercial texts. The results provide additional evidence that OER implementation saves students money while (a) not detracting from student learning of content specific to course-defined student learning objectives and (b) requiring less student time on the first pass at understanding course content. Future directions for OER research include examining how readings align with learning objectives (Fink, 2013; Wiggins & McTighe, 2006). Other directions are how reading length influences student motivation and the cognitive load of learning new ideas, and how students approach pre-class readings to prepare for deeper learning in a flipped classroom. The evidence we present on learning outcomes for the same student in OER and non-OER textbook environments deepens the discussion of how students learn from OER and points to future research directions important to student learning.

We thank James Cawthorne, Julia Ciaccia, Heather Fedesco, Stephanie Halmo, Troy Hilley, Jasmine Martin, Brent Minter, Shana Kerr, Jung Choi, Paula Lemons, Lisa Limeri, Taraji Long, Jessica Meredith, Alison Onstine, Madeline Tincher, Emily Weigel, Joyce Weinsheimer, Emma Whitson, Georgia Tech’s Science Education Research Group Experience (SERGE), UGA’s Biology Education Research Group (BERG), and the students who participated in the study. Funding and support came from Affordable Learning Georgia, Bio@Tech, the Open Education Group, and the Georgia Tech College of Sciences Open Education Materials Grant (to CS). ChatGPT contributed to shortening the manuscript.

This study was approved by the Georgia Tech Institutional Review Board, project number IRB H17494. Participants were remunerated for their time.

Aagaard, L., Conner, T. W., & Skidmore, R. L. (2014). College textbook reading assignments and class time activity. Journal of the Scholarship of Teaching and Learning, 14(3), 132-145. https://doi.org/10.14434/josotl.v14i3.5031

Anderson, L. W., & Krathwohl, D. R. (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom’s taxonomy of educational objectives. Longman.

Anderson, T., & Cuttler, C. (2020). Open to open? An exploration of textbook preferences and strategies to offset textbook costs for online versus on-campus students. The International Review of Research in Open and Distributed Learning, 21(1), 40-60. https://doi.org/10.19173/irrodl.v20i5.4141

Baier, K., Hendricks, C., Warren-Gorden, K., Hendricks, J. E., & Cochran, L. (2011). College students’ textbook reading, or not! American Reading Forum annual yearbook, 31. https://www.researchgate.net/profile/Cindy-Hendricks/publication/266008859_College_students%27_textbook_reading_or_not/links/577d427b08aed39f598f6fed/College-students-textbook-reading-or-not.pdf

Ballen, C. J., & Greene, H. W. (2017). Walking and talking the tree of life: Why and how to teach about biodiversity. PLOS Biology, 15(3), Article e2001630. https://doi.org/10.1371/journal.pbio.2001630

Bassett, K., Olbricht, G. R., & Shannon, K. B. (2020). Student preclass preparation by both reading the textbook and watching videos online improves exam performance in a partially flipped course. CBE-Life Sciences Education, 19(3), Article ar32. https://doi.org/10.1187/cbe.19-05-0094

Berry, T., Cook, L., Hill, N., & Stevens, K. (2010). An exploratory analysis of textbook usage and study habits: Misperceptions and barriers to success. College Teaching, 59(1), 31-39. https://doi.org/10.1080/87567555.2010.509376

Blessinger, P., & Bliss, T. J. (Eds.). (2016). Open education: International perspectives in higher education. Open Book Publishers. https://doi.org/10.11647/OBP.0103

Bliss, T. J., Hilton, J., III, Wiley, D., & Thanos, K. (2013). The cost and quality of online open textbooks: Perceptions of community college faculty and students. First Monday, 18(1). https://doi.org/10.5210/fm.v18i1.3972

Bliss, T. J., Robinson, T. J., Hilton, J., & Wiley, D. A. (2013). An OER COUP: College teacher and student perceptions of open educational resources. Journal of Interactive Media in Education, 2013(1), Article 4. https://doi.org/10.5334/2013-04

Bloom, B. S., Engelhart, M. D., Furst, E. J., Hill, W. H., & Krathwohl, D. R. (1956). Taxonomy of educational objectives. Longmans, Green and Co.

Bossu, C., Bull, D., & Brown, M. (2012). Opening up Down Under: The role of open educational resources in promoting social inclusion in Australia. Distance Education, 33(2), 151-164. https://doi.org/10.1080/01587919.2012.692050

Brandle, S., Katz, S., Hays, A., Beth, A., Cooney, C., DiSanto, J., Miles, L., & Morrison, A. (2019). But what do the students think: Results of the CUNY cross-campus zero-textbook cost student survey. Open Praxis, 11(1), 85-101. https://doi.org/10.5944/openpraxis.11.1.932

Choi, J. C., Spencer, C. C., Kerr, S. C., Weigel, E., & Montoya, J. (2015). Biological principles. https://bioprinciples.biosci.gatech.edu

Clinton, V., & Khan, S. (2019). Efficacy of open textbook adoption on learning performance and course withdrawal rates: A meta-analysis. AERA Open, 5(3), Article 2332858419872212. https://doi.org/10.1177/2332858419872212

Clinton, V., Legerski, E., & Rhodes, B. (2019). Comparing student learning from and perceptions of open and commercial textbook excerpts: A randomized experiment. Frontiers in Education, 4, Article 110. https://doi.org/10.3389/feduc.2019.00110

Clinton-Lisell, V. E., Roberts-Crews, J., & Gwozdz, L. (2023). SCOPE of open education: A new framework for research. The International Review of Research in Open and Distributed Learning, 24(4), 135-153. https://doi.org/10.19173/irrodl.v24i4.7356

Colvard, N. B., Watson, C. E., & Park, H. (2018). The impact of open educational resources on various student success metrics. International Journal of Teaching and Learning in Higher Education, 30(2), 262-276. https://www.isetl.org/ijtlhe/pdf/IJTLHE3386.pdf

Crawford, D. B., & Snider, V. E. (2000). Effective mathematics instruction: The importance of curriculum. Education and Treatment of Children, 23(2), 122-142. https://www.jstor.org/stable/42940521

Croteau, E. (2017). Measures of student success with textbook transformations: The Affordable Learning Georgia initiative. Open Praxis, 9(1), 93-108. https://doi.org/10.5944/openpraxis.9.1.505

Crowe, A., Dirks, C., & Wenderoth, M. P. (2008). Biology in Bloom: Implementing Bloom’s taxonomy to enhance student learning in biology. CBE-Life Sciences Education, 7(4), 368-381. https://doi.org/10.1187/cbe.08-05-0024

Cuttler, C. (2019). Students’ use and perceptions of the relevance and quality of open textbooks compared to traditional textbooks in online and traditional classroom environments. Psychology Learning & Teaching, 18(1), 65-83. https://doi.org/10.1177/1475725718811300

Delgado, H., Delgado, M., & Hilton, J., III. (2019). On the efficacy of open educational resources: Parametric and nonparametric analyses of a university calculus class. The International Review of Research in Open and Distributed Learning, 20(1). https://doi.org/10.19173/irrodl.v20i1.3892

Dennen, V. P., & Bagdy, L. M. (2019). From proprietary textbook to custom OER solution: Using learner feedback to guide design and development. Online Learning, 23(3). https://doi.org/10.24059/olj.v23i3.2068

Feldon, D. F., Callan, G., Juth, S., & Jeong, S. (2019). Cognitive load as motivational cost. Educational Psychology Review, 31(2), 319-337. https://doi.org/10.1007/s10648-019-09464-6

Fialkowski, M. K., Calabrese, A., Tillinghast, B., Titchenal, C. A., Meinke, W., Banna, J. C., & Draper, J. (2020). Open educational resource textbook impact on students in an introductory nutrition course. Journal of Nutrition Education and Behavior, 52(4), 359-368. https://doi.org/10.1016/j.jneb.2019.08.006

Fink, L. D. (2013). Creating significant learning experiences: An integrated approach to designing college courses. Wiley.

Fisher, M. R. (2018). Evaluation of cost savings and perceptions of an open textbook in a community college science course. The American Biology Teacher, 80(6), 410-415. https://doi.org/10.1525/abt.2018.80.6.410

Florida Virtual Campus. (2012). 2012 Florida Student Textbook Survey. https://www.oerknowledgecloud.org/record2629

Florida Virtual Campus. (2022). 2022 Student textbook and instructional materials survey: Results and findings. https://www.flbog.edu/wp-content/uploads/2023/03/Textbook-Survey-Report.pdf

Freeman, S., Quillin, K., & Allison, L. (2014). Biological science (5th ed.). Pearson.

Goldrick-Rab, S. (2016). Paying the price: College costs, financial aid, and the betrayal of the American dream. University of Chicago Press.

Gorzycki, M., Desa, G., Howard, P. J., & Allen, D. D. (2020). “Reading is important,” but “I don’t read”: Undergraduates’ experiences with academic reading. Journal of Adolescent & Adult Literacy, 63(5), 499-508. https://doi.org/10.1002/jaal.1020

Grewe, K., & Davis, W. P. (2017). The impact of enrollment in an OER course on student learning outcomes. The International Review of Research in Open and Distributed Learning, 18(4). https://doi.org/10.19173/irrodl.v18i4.2986

Griggs, R. A., & Jackson, S. L. (2017). Studying open versus traditional textbook effects on students’ course performance: Confounds abound. Teaching of Psychology, 44(4), 306-312. https://doi.org/10.1177/0098628317727641

Grissett, J. O., & Huffman, C. (2019). An open versus traditional psychology textbook: Student performance, perceptions, and use. Psychology Learning & Teaching, 18(1), 21-35. https://doi.org/10.1177/1475725718810181

Hake, R. R. (1998). Interactive-engagement versus traditional methods: A six-thousand-student survey of mechanics test data for introductory physics courses. American Journal of Physics, 66(1), 64-74. https://doi.org/10.1119/1.18809

Hayes, A. F., & Krippendorff, K. (2007). Answering the call for a standard reliability measure for coding data. Communication Methods and Measures, 1(1), 77-89. https://doi.org/10.1080/19312450709336664

Heiner, C. E., Banet, A. I., & Wieman, C. (2014). Preparing students for class: How to get 80% of students reading the textbook before class. American Journal of Physics, 82(10), 989-996. https://doi.org/10.1119/1.4895008

Hendricks, C., Reinsberg, S. A., & Rieger, G. W. (2017). The adoption of an open textbook in a large physics course: An analysis of cost, outcomes, use, and perceptions. The International Review of Research in Open and Distributed Learning, 18(4). https://doi.org/10.19173/irrodl.v18i4.3006

Hilton, J., III. (2016). Open educational resources and college textbook choices: A review of research on efficacy and perceptions. Educational Technology Research and Development, 64(4), 573-590. https://doi.org/10.1007/s11423-016-9434-9

Hilton, J., III. (2020). Open educational resources, student efficacy, and user perceptions: A synthesis of research published between 2015 and 2018. Educational Technology Research and Development, 68(3), 853-876. https://doi.org/10.1007/s11423-019-09700-4

Hilton, J. L., III, Robinson, T. J., Wiley, D., & Ackerman, J. D. (2014). Cost-savings achieved in two semesters through the adoption of open educational resources. The International Review of Research in Open and Distributed Learning, 15(2). https://doi.org/10.19173/irrodl.v15i2.1700

Hockings, C., Brett, P., & Terentjevs, M. (2012). Making a difference—Inclusive learning and teaching in higher education through open educational resources. Distance Education, 33(2), 237-252. https://doi.org/10.1080/01587919.2012.692066

Howard, V. J., & Whitmore, C. B. (2020). Evaluating student perceptions of open and commercial psychology textbooks. Frontiers in Education, 5, Article 139. https://doi.org/10.3389/feduc.2020.00139

Hsu, W. (2014). Measuring the vocabulary load of engineering textbooks for EFL undergraduates. English for Specific Purposes, 33, 54-65. https://doi.org/10.1016/j.esp.2013.07.001

Illowsky, B. S., Hilton, J., III, Whiting, J., & Ackerman, J. D. (2016). Examining student perception of an open statistics book. Open Praxis, 8(3), 265-276. https://doi.org/10.5944/openpraxis.8.3.304

Jhangiani, R. S., Dastur, F. N., Le Grand, R., & Penner, K. (2018). As good or better than commercial textbooks: Students’ perceptions and outcomes from using open digital and open print textbooks. The Canadian Journal for the Scholarship of Teaching and Learning, 9(1), Article 5. https://doi.org/10.5206/cjsotl-rcacea.2018.1.5

Katz, S. (2019). Student textbook purchasing: The hidden cost of time. Journal of Perspectives in Applied Academic Practice, 7(1), 12-18. https://jpaap.ac.uk/JPAAP/article/view/349/530

Kersey, S. (2019). The effectiveness of open educational resources in college calculus: A quantitative study. Open Praxis, 11(2), 185-193. https://doi.org/10.5944/openpraxis.11.2.935

Koć-Januchta, M. M., Schönborn, K. J., Roehrig, C., Chaudhri, V. K., Tibell, L. A. E., & Heller, H. C. (2022). “Connecting concepts helps put main ideas together”: Cognitive load and usability in learning biology with an AI-enriched textbook. International Journal of Educational Technology in Higher Education, 19, Article 11. https://doi.org/10.1186/s41239-021-00317-3

Lane, A. (2008). Widening participation in education through open educational resources. In T. Iiyoshi & M. S. V. Kumar (Eds.), Opening up education: The collective advancement of education through open technology, open content, and open knowledge. The MIT Press.

Lane, A. (2012). A review of the role of national policy and institutional mission in European distance teaching universities with respect to widening participation in higher education study through open educational resources. Distance Education, 33(2), 135-150. https://doi.org/10.1080/01587919.2012.692067

Lovett, M., Meyer, O., & Thille, C. (2008). The Open Learning Initiative: Measuring the effectiveness of the OLI statistics course in accelerating student learning. Journal of Interactive Media in Education, 2008(1), Article 13. https://doi.org/10.5334/2008-14

Martin, M. T., Belikov, O. M., Hilton, J., III, Wiley, D., & Fischer, L. (2017). Analysis of student and faculty perceptions of textbook costs in higher education. Open Praxis, 9(1), 79-91. https://doi.org/10.5944/openpraxis.9.1.432

Marx, J. D., & Cummings, K. (2007). Normalized change. American Journal of Physics, 75(1), 87-91. https://doi.org/10.1119/1.2372468

Nusbaum, A. T., Cuttler, C., & Swindell, S. (2020). Open educational resources as a tool for educational equity: Evidence from an introductory psychology class. Frontiers in Education, 4, Article 152. https://doi.org/10.3389/feduc.2019.00152

Ou, W. J.-A., Henriques, G. J. B., Senthilnathan, A., Ke, P.-J., Grainger, T. N., & Germain, R. M. (2022). Writing accessible theory in ecology and evolution: Insights from cognitive load theory. BioScience, 72(3), 300-313. https://doi.org/10.1093/biosci/biab133

Parlette, M., & Howard, V. (2010). Pleasure reading among first-year university students. Evidence Based Library and Information Practice, 5(4), 53-69. https://doi.org/10.18438/B8C61M

Ryan, D. N. (2019). A comparison of academic outcomes in courses taught with open educational resources and publisher content [Doctoral dissertation, Old Dominion University]. ODU Digital Commons. https://digitalcommons.odu.edu/efl_etds/200

Sappington, J., Kinsey, K., & Munsayac, K. (2002). Two studies of reading compliance among college students. Teaching of Psychology, 29(4), 272-274. https://doi.org/10.1207/S15328023TOP2904_02

Seaman, J. E., & Seaman, J. (2024). Approaching a new normal? Educational resources in U.S. higher education, 2024. Bay View Analytics.

Smith, M. S., & Casserly, C. M. (2006). The promise of open educational resources. Change: The Magazine of Higher Learning, 38(5), 8-17. https://doi.org/10.3200/CHNG.38.5.8-17

Smith, N. D., Grimaldi, P. J., & Basu Mallick, D. (2020). Impact of zero cost books adoptions on student success at a large, urban community college. Frontiers in Education, 5, Article 579580. https://doi.org/10.3389/feduc.2020.579580

Sweller, J. (1988). Cognitive load during problem solving: Effects on learning. Cognitive Science, 12(2), 257-285. https://doi.org/10.1207/s15516709cog1202_4

Theobald, R., & Freeman, S. (2014). Is it the intervention or the students? Using linear regression to control for student characteristics in undergraduate STEM education research. CBE-Life Sciences Education, 13(1), 41-48. https://doi.org/10.1187/cbe-13-07-0136

Tlili, A., Garzón, J., Salha, S., Huang, R., Xu, L., Burgos, D., Denden, M., Farrell, O., Farrow, R., Bozkurt, A., Amiel, T., McGreal, R., López-Serrano, A., & Wiley, D. (2023). Are open educational resources (OER) and practices (OEP) effective in improving learning achievement? A meta-analysis and research synthesis. International Journal of Educational Technology in Higher Education, 20(1), Article 54. https://doi.org/10.1186/s41239-023-00424-3

Vander Waal Mills, K. E., Gucinski, M., & Vander Waal, K. (2019). Implementation of open textbooks in community and technical college biology courses: The good, the bad, and the data. CBE-Life Sciences Education, 18(3), Article ar44. https://doi.org/10.1187/cbe.19-01-0022

Wiggins, G., & McTighe, J. (2006). Understanding by design (2nd ed.). ASCD.

Wiley, D., Hilton, J. L., III, Ellington, S., & Hall, T. (2012). A preliminary examination of the cost savings and learning impacts of using open textbooks in middle and high school science classes. The International Review of Research in Open and Distributed Learning, 13(3), 262-276. https://doi.org/10.19173/irrodl.v13i3.1153

Willems, J., & Bossu, C. (2012). Equity considerations for open educational resources in the glocalization of education. Distance Education, 33(2), 185-199. https://doi.org/10.1080/01587919.2012.692051

Undergraduate Learning Gains and Learning Efficiency in a Focused Open Education Resource by Chrissy Spencer, Aakanksha Angra, Kata Dósa, and Abigail Jones is licensed under a Creative Commons Attribution 4.0 International License.