Volume 26, Number 1

Sajida Bhanu Panwale and Selvaraj Vijayakumar

B.S. Abdurrahman Crescent Institute of Science and Technology, India

This study aimed to evaluate the utility of artificial intelligence (AI) in improving the persuasive communication skills of online Master of Business Administration (MBA) students. In particular, this study investigated the influence of personalization through AI using the Google Gemini platform on conventional and online instructional approaches. This quasi-experimental study used a pretest and posttest design to compare two groups of MBA students pursuing persuasive online communication. The experimental group (n = 32) interacted with the AI-based personalized learning materials, whereas the control group (n = 32) used standard instructor-designed online modules. During the 12-week intervention period, the experimental group was provided with customized practice activities. Conversely, the control group was offered conventional online learning material. The effectiveness of both approaches was evaluated using pretests and posttests. The results of Tukey’s Honestly Significant Difference (HSD) test provided insight into the areas where AI-based personalized learning had a statistically significant impact. These results support the conclusions derived from an analysis of variance and further validate the study’s research hypotheses. This study demonstrates the advantages of incorporating AI into language development for remote learners and offers valuable insights for integrating AI-driven technologies into distance education.

Keywords: learner agency, adaptive technology, micro-learning, disruptive innovation, distributed learning

The increasing complexity of online learning platforms necessitates customized language development for part-time Master of Business Administration (MBA) students, who must juggle professional, personal, and academic responsibilities. Effective communication is essential for their academic success and professional growth (Randolph, 2008). MBA students need to develop several key communication skills, including clear articulation of ideas, coherent organization of arguments, persuasive engagement of the audience, and effective leadership communication. These skills are crucial for delivering impactful presentations, negotiating successfully, and writing compelling professional documents (DiBenedetto & Bembenutty, 2011).

The target learners often face challenges such as organizing and presenting arguments coherently, engaging and maintaining the interest of their audience, and effectively leading teams and managing projects (McGraw & Tidwell, 2001). Inadequate communication skills can lead to lower academic performance, reduced participation, and hindered career progression (Randolph, 2008). Additionally, poor communication can exacerbate stress and anxiety, negatively impacting overall well-being and academic outcomes (Francis, 2012).

Effective communication skills are critical for success in both academic and professional settings, especially in MBA programs where students must demonstrate leadership, project management, and stakeholder engagement. Deficiencies in these skills can create obstacles in managing responsibilities effectively, thereby contributing to increased stress and potentially impacting overall performance and career development (Ongus et al., 2017). Therefore, addressing these communication challenges through tailored language development programs is vital. Such programs can enhance students’ ability to balance their diverse responsibilities and succeed in both academic and professional endeavours.

This study’s theoretical framework is based on the principles of individualized learning and adaptive education. Customized educational settings that adjust to the specific requirements of each student can significantly improve the results of the learning process (Wang & Mendori, 2012). This study uses these ideas in the context of language acquisition, harnessing the power of artificial intelligence (AI) to offer tailored feedback and flexible learning trajectories. Through this study, we intend to facilitate learners in achieving their communication objectives and succeeding in their academic pursuits by providing customizable elements that create a personalized and engaging language-learning experience.

This study examines how AI can improve the persuasive communication abilities of MBA students in online environments. AI technologies can assist students in overcoming communication hurdles by providing tailored feedback and adaptive learning routes to address specific issues. The use of AI in this particular context is intended to provide MBA students with the essential abilities to express ideas with clarity, logically organize arguments, and effectively interact with their audience. These skills are directly pertinent and vital to the MBA curriculum and future professional endeavours.

In distance learning, instructor-led materials frequently fall short of students’ varied needs, particularly in terms of language development. These materials typically adopt a one-size-fits-all approach that hinders effective language acquisition and student progress (Shevchenko et al., 2021). This approach fails to consider learners’ learning styles, prior knowledge, and language proficiency levels, resulting in a gap between the educational content and learners’ specific needs. The lack of flexibility in conventional materials, which strictly adhere to a predetermined syllabus and pace, often clashes with a learner’s unique educational journey, potentially leading to feelings of frustration and disengagement, especially for those who require additional support or progress at a different pace (Tomasik et al., 2020). Traditional approaches also lack personalized feedback on language output and skill development, the kind of feedback that helps learners identify areas for growth and improve their language skills.

One of the limitations of traditional learning materials is that they frequently adhere to teacher-centric models that do not provide personalized feedback mechanisms. This lack of feedback can make it difficult for learners to identify and rectify their linguistic weaknesses, which may ultimately impede their language development (Paterson et al., 2020). This model limits learner autonomy, reduces motivation, and restricts collaborative learning opportunities, which are crucial for language development (Palincsar & Herrenkohl, 2002). These challenges, including the absence of tailored instruction, limited adaptability, insufficient personalized feedback, restricted learner agency, and limited collaborative learning opportunities, result in a mismatch between the educational content and learner requirements.

AI has shown remarkable potential in overcoming these limitations and personalizing language learning (Huang et al., 2023). AI-powered tools offer customized, engaging learning experiences and enhance language acquisition. These technologies analyse learner data to create tailored pathways aligned with each learner’s distinct objectives and requirements. In doing so, they depart from the traditional one-size-fits-all approach and foster effective language development. Moreover, AI-powered systems offer immediate feedback on language construction and skill progression (Liang et al., 2021). AI-driven technologies offering personalized feedback, simulations, and interactive games have the potential to revolutionize language learning and motivate and engage students (Crompton & Burke, 2023). Using AI capabilities, language learning can be reimagined as a dynamic and interactive experience.

Moreover, AI can facilitate collaborative learning by connecting learners from diverse backgrounds, thereby promoting social interaction and knowledge exchange (Wang et al., 2023). Therefore, this study aimed to compare AI-based personalized language learning with traditional instructor-designed courses, focusing on enhancing persuasive communication abilities in online MBA students using a quasi-experimental methodology. This study sought to assess whether AI-based customized language learning improves persuasive communication abilities more efficiently than traditional techniques.

Given the experimental nature of this study, the following hypotheses were formulated for examination:

Wang and Mendori (2012) examined a customizable online language-learning support system. This system is especially good at making concepts easier to understand and showing how AI can be used to accommodate each student’s individual learning preferences and knowledge levels. Another study addressed the issue of personalizing online courses by proposing a methodology through the application of natural language processing technologies (Lund et al., 2023). The shift to remote digital learning underscores the importance of personalized feedback in student-centred learning (Istenič, 2021). This study indicated that tailored feedback is necessary for remote students to learn effectively. Understanding how AI can deliver personalized feedback and enhance persuasive communication skills in online MBA programs is essential.

Artificial neural networks, intelligent tutoring systems, and natural language processing have been widely applied in personalized language learning (PLL), according to a comprehensive study by Chen et al. (2021). These tools have been shown to improve language learning and learner satisfaction, suggesting that they may help online MBA students improve their persuasive communication skills. Similarly, Sánchez-Villalon and Ortega (2007) investigated the potential of web-based learning, particularly in the context of personal learning environments (PLE). They proposed an alternative solution using online learning environments (OLE) and a writing e-learning appliance (AWLA) that integrated various language and communication tools. This approach promotes learner-created pathways, breaking down the barriers to traditional learning and potentially enhancing the persuasive communication skills of MBA students through technology-supported, personalized learning.

Obari et al. (2020) investigated the possibility of using AI tools, such as smart speakers and smartphone apps, to improve Japanese undergraduates’ command of English. According to their data, learners exposed to AI materials performed better than those exposed to conventional online resources. In summary, several studies have shown that AI-driven personalized language instruction can enhance language proficiency and student satisfaction, surpassing the effectiveness of conventional instructor-led language classes. The issues of addressing the effects of peer pressure, preserving student motivation, and incorporating diversity continue to require further attention and development.

Maghsudi et al. (2021) found that it is crucial to devise a personalized learning plan that considers learners’ strengths and weaknesses to facilitate knowledge acquisition. This method, which educational institutions are increasingly adopting, uses AI and big data analysis to identify and cater to individual student characteristics. Although these methods can suggest optimal content and curricula, some challenges need to be addressed, such as the absence of peer interaction and maintaining learner motivation. According to Chiu, Moorhouse, et al. (2023), automated data-driven personalized feedback within intelligent tutoring systems (ITS) improved student performance significantly by 22.95%. This study demonstrated the superiority of ITS in promoting learning compared with other computer-based instructional methods.

AI-powered personalized learning resources have garnered considerable traction because of their competence in meeting the varied requirements of learners and complementing classroom teaching (Zhao, 2022). Research has found that adaptive learning can personalize instruction based on students’ backgrounds and interests, resulting in improved problem-solving efficiency. Personalized interventions have been shown to benefit struggling students and positively impact learning outcomes.

Despite advancements in online education, real-time interaction remains challenging. ITS offer a promising solution by providing real-time personalized learning guidance and resource recommendations. Previous research has highlighted several challenges in the development of ITS, including learner modelling and human-computer interaction (Chiu, Xia, et al., 2023). In summary, these studies collectively indicate that AI-based personalized language learning can significantly improve student learning outcomes and educational competencies, resulting in higher course completion rates than traditional instructor-designed courses. In essence, the data suggest that AI has the potential to transform personalized learning experiences by addressing the distinct needs of students.

The impacts of AI-based personalized learning and standard instructor-designed modules in an online MBA course were compared using a quasi-experimental pretest–posttest methodology. A quasi-experimental design, ideal for educational research, allows the examination of educational interventions in a natural setting (Shadish, Cook, & Campbell, 2002). The experimental and control groups were established through two complete classes, allowing for a comparison of the two teaching approaches while controlling for outside factors. The experimental design was deemed appropriate for assessing the impact of AI-based tools on students’ final grades, as it simulates the practical application of these technologies (Chen et al., 2021; Wang & Mendori, 2012).

Ethical considerations played a significant role in the present study. To ensure that the study adhered to ethical norms for research involving human participants, particularly in an educational context, the university’s Institutional Review Board (IRB) provided ethical approval prior to the study (Istenič, 2021; Lund et al., 2023). With assurances of privacy and security in data processing, all participants provided their informed consent. The intervention was designed to avoid disrupting participants’ regular learning processes or academic performance. The study strictly followed the intervention research protocol by protecting participants’ integrity and upholding the institution’s academic standards. The study was conducted over 12 weeks.

This study involved 64 part-time MBA students enrolled in an online course during the 2023 academic year at B. S. Abdur Rahman Crescent University, Chennai, India. All participants were non-native English speakers with diverse educational backgrounds and work experiences. Course requirements and practical considerations necessitated a non-random assignment of participants to experimental or control groups using purposive sampling. The groups were formed based on enrolment order and received either AI-based personalized learning materials (experimental group) or traditional instructor-designed online modules (control group).

Communication skills were assessed using a comprehensive rubric focusing on fluency, accuracy, organization, and overall effectiveness. These criteria are widely used in language proficiency studies, as evidenced by Chen et al. (2021), among others. Andrade (2000) and Moskal (2000) discussed the use of rubrics in promoting thinking and learning, thus supporting the assessment approach of the current study.

This rubric was designed to be used for both pre- and post-assessments. Each element was assessed on a scale of 0 to 2.5, resulting in a potential overall score of 10 for each sales pitch presentation. See Table 1.

Table 1

Assessment Tool—Standardised Rubric for Evaluating Sales Pitches

| Attribute | Excellent (2.1–2.5) | Good (1.6–2.0) | Satisfactory (1.1–1.5) | Needs improvement (0.6–1.0) | Poor (0–0.5) |
| --- | --- | --- | --- | --- | --- |
| Fluency | Speech flows smoothly and naturally | There are minor hesitations, but it still flows well | Some hesitations affect flow | Frequent hesitations disrupt the flow | Extremely choppy and disjointed speech |
| Accuracy | Error-free grammatical usage | Minor grammatical errors are present | Noticeable grammatical errors | Frequent grammatical mistakes | Speech is heavily laden with errors |
| Organization | Highly logical and well-structured | Mostly clear structure and logic | Some disorganization is evident | Lacks clear structure and logic | Completely disorganized |
| Overall effectiveness | Highly persuasive and engaging | Generally engaging and persuasive | Moderately engaging and persuasive | Limited in engagement and persuasion | Not engaging or persuasive |
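The scoring scheme in Table 1 can be illustrated with a short sketch. The function and variable names are hypothetical and are not part of the study’s instruments; the band boundaries are taken from Table 1, and the sketch assumes scores are recorded to one decimal place.

```python
# Hypothetical helper names; only the band boundaries come from Table 1.
CRITERIA = ("fluency", "accuracy", "organization", "overall_effectiveness")

# (lower bound, label), checked from the highest band to the lowest.
BANDS = (
    (2.1, "Excellent"),
    (1.6, "Good"),
    (1.1, "Satisfactory"),
    (0.6, "Needs improvement"),
    (0.0, "Poor"),
)


def band(score: float) -> str:
    """Return the Table 1 band for one criterion score (0 to 2.5)."""
    if not 0.0 <= score <= 2.5:
        raise ValueError(f"score {score} is outside the 0-2.5 range")
    for lower, label in BANDS:
        if score >= lower:
            return label
    return "Poor"


def total_score(scores: dict) -> float:
    """Sum the four criterion scores into a total out of 10."""
    missing = [c for c in CRITERIA if c not in scores]
    if missing:
        raise ValueError(f"missing criteria: {missing}")
    return round(sum(scores[c] for c in CRITERIA), 2)


# Made-up scores for one sales pitch, for illustration only.
example = {
    "fluency": 2.0,
    "accuracy": 1.5,
    "organization": 2.5,
    "overall_effectiveness": 1.0,
}
print(total_score(example), {c: band(example[c]) for c in CRITERIA})
```

Four criteria, each capped at 2.5, give the maximum total of 10 described for each sales pitch presentation.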

To guarantee content validity of the rubric, a panel of three educators in business communication and online learning scrutinized the initial draft. Their input aided in enhancing the rubric to capture the crucial aspects of an effective sales pitch with greater precision. The rubric then underwent pilot testing with a selection of sales pitches from a prior course. This process enabled the refinement of the scoring criteria to ensure clarity and measurability. To assess the inter-rater reliability, two independent raters evaluated the sample presentations using the rubric. High agreement between their ratings (Cohen’s kappa > 0.8) confirmed the dependability of the rubric. During implementation, two independent raters scored each presentation, and any discrepancies in scoring were addressed through discussion to ensure consistency and fairness in the evaluation.
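As an illustration of the inter-rater check, the following minimal sketch computes Cohen’s kappa for two raters who assign categorical labels to the same presentations. It assumes the raters’ scores have been converted to rubric bands; the ratings shown are made up and are not the study’s data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over labels.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (p_o - p_e) / (1 - p_e)


# Hypothetical band ratings for six pilot presentations.
rater_1 = ["Good", "Good", "Excellent", "Poor", "Good", "Excellent"]
rater_2 = ["Good", "Good", "Excellent", "Poor", "Satisfactory", "Excellent"]
kappa = cohens_kappa(rater_1, rater_2)
print(round(kappa, 2))
```

Kappa corrects raw agreement for the agreement expected by chance, which is why it is preferred over a simple percentage of matching scores.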

The research project lasted over 12 weeks, during which the experimental and control groups were subjected to diverse educational resources. These resources are compared in Table 2 and discussed in the next sections.

Table 2

Pedagogical Framework—A Comparison

| Aspect | AI-based personalized learning (experimental) | Instructor-designed online modules (control) |
| --- | --- | --- |
| Platform | Google Gemini AI platform | Moodle LMS |
| Content development | Customized based on individual pretest performance and learning preferences | Standardized video lectures, readings, and discussion forums |
| Learning path | Interactive lessons, practice activities, personalized feedback | Fixed curriculum without personalization |
| Delivery mode | Adaptive LMS allows personalized access and progress tracking | The same LMS used for delivering standardized content |
| Interaction monitoring | Analytics tools in LMS tracking engagement, plus AI platform insights | Analytics tools in LMS tracking engagement and participation |
| Additional features | Customized learning paths and progress tracking specific to each learner | The standard learning experience for all students |

Note. AI = artificial intelligence; LMS = learning management system.

The experimental group had a distinctive learning experience facilitated by the Google Gemini AI platform. This platform uses sophisticated machine learning algorithms to analyse the pretest results of each student, along with their individual learning preferences and engagement patterns. Based on this comprehensive analysis, the AI platform generated personalized learning paths for each participant of the experimental group. These paths comprised interactive lessons, practice activities, and targeted feedback, all of which were tailored to each student’s specific needs and progression. These materials were delivered through an adaptive learning management system (LMS), which not only enabled students to access the content at their convenience but also allowed them to monitor their progress. This approach was designed to offer a highly individualized learning experience, potentially enhancing the efficiency and effectiveness of skill acquisition.

In contrast, the control group received a more conventional form of online education. The learning materials for this group were developed by the course instructor and consisted of a series of standardized video lectures, readings, and discussion forums. These modules were hosted on the same LMS as the AI-based materials but lacked the adaptive and personalized features of the experimental group’s materials. Instead, they followed a fixed curriculum designed to cover the same educational content and objectives as the AI-based program, albeit without a personalized element. Thus, this group’s learning experience adhered to traditional online learning methodologies and served as a benchmark against which the efficacy of the AI-based approach could be evaluated.

The LMS was equipped with analytics designed to track various metrics to assess participants’ engagement with their respective learning materials. These metrics included the amount of time spent on each module, the degree of interaction with interactive elements, completion rates of lessons and activities, and participation levels in discussion forums. For the experimental group, an AI platform provided additional analytics that offered deeper insights into each student’s interaction with personalized learning elements, such as usage patterns and progression along custom learning paths. The objective of learning analytics was to provide a comprehensive comparison between the two educational approaches, assessing not only the effectiveness of AI-based personalized learning in an online setting but also the dynamics of student interaction and engagement with innovative educational technologies.

The personalized learning materials for the experimental group were developed using a machine learning algorithm integrated into the Google Gemini AI platform. This algorithm analyses a range of data points to create highly individualized learning paths for each student. Key data points included the initial assessment scores from the pretest, which provided a baseline for each student’s persuasive communication skills. Moreover, the algorithm considered variables such as students’ engagement patterns (time spent on various tasks and frequency of logins), interactions with different types of content (videos, readings, and interactive exercises), and responses to formative assessments embedded within the course. As the students progressed through the course, the algorithm continuously assessed their performance on ongoing assessments and activities in real time. Based on this data, the learning paths were adjusted to accommodate each student’s evolving requirements. If a student demonstrated improvements in specific areas, the algorithm would introduce more advanced concepts or challenging tasks to those areas. Conversely, if a student struggled with certain topics, the algorithm provided additional resources and exercises to reinforce learning in these areas. This adaptive approach enabled the learning experience to remain aligned with each student’s pace and learning style, aiming to maximize their engagement and educational outcomes.
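The adjustment logic described above (advance where a student improves, reinforce where a student struggles) can be sketched as a simple rule. This is an illustrative assumption only: the study does not report the platform’s actual rules, and the thresholds, function names, and step labels below are hypothetical.

```python
# Illustrative thresholds only; the platform's real decision rules are not reported.
ADVANCE_THRESHOLD = 0.8    # hypothetical share of recent formative items answered correctly
REINFORCE_THRESHOLD = 0.5  # hypothetical


def next_step(recent_scores):
    """Choose the next activity type for one skill area from recent formative scores (0 to 1)."""
    if not recent_scores:
        return "baseline"  # no new evidence yet: keep the path set from the pretest
    average = sum(recent_scores) / len(recent_scores)
    if average >= ADVANCE_THRESHOLD:
        return "advance"    # introduce more advanced concepts or harder tasks
    if average < REINFORCE_THRESHOLD:
        return "reinforce"  # add resources and exercises for this area
    return "continue"


def update_path(performance):
    """Map each skill area to its next step."""
    return {skill: next_step(scores) for skill, scores in performance.items()}


# Made-up formative results for one student.
student = {
    "fluency": [0.9, 0.85],
    "accuracy": [0.4, 0.3],
    "organization": [0.6, 0.7],
    "overall_effectiveness": [],
}
path = update_path(student)
print(path)
```

A production system would presumably weigh engagement patterns and content-type interactions as well; the sketch isolates only the performance-based branch.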

The control group received instructor-designed online modules that were created to be comparable in educational value to the AI-based materials used by the experimental group. These modules were designed by an expert in persuasive communication and covered the same topics and learning objectives as in the AI-based curriculum. The content included well-structured video lectures, relevant readings, and case studies organized around specific themes or skills in persuasive communication. Interactive elements such as discussion forums were also incorporated to provide students with opportunities to engage with peers and instructors. Formative assessments, such as quizzes and short writing assignments, were included at regular intervals to gauge the student’s understanding and retention of the material. While these modules lacked the adaptive and personalized features of AI-based materials, they were designed to be engaging and pedagogically sound, ensuring that all students had access to high-quality educational resources.

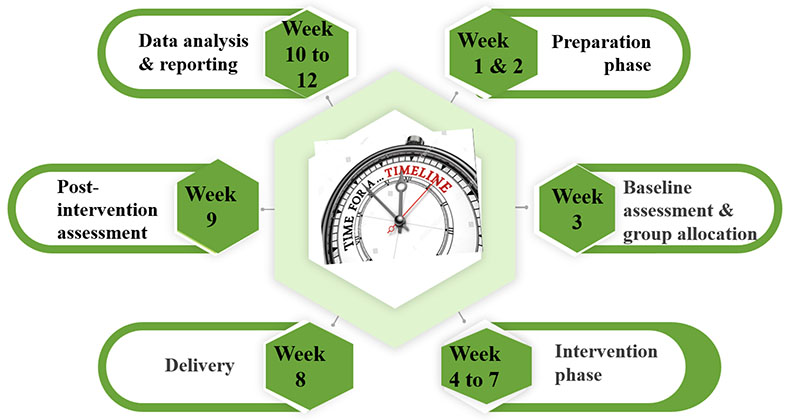

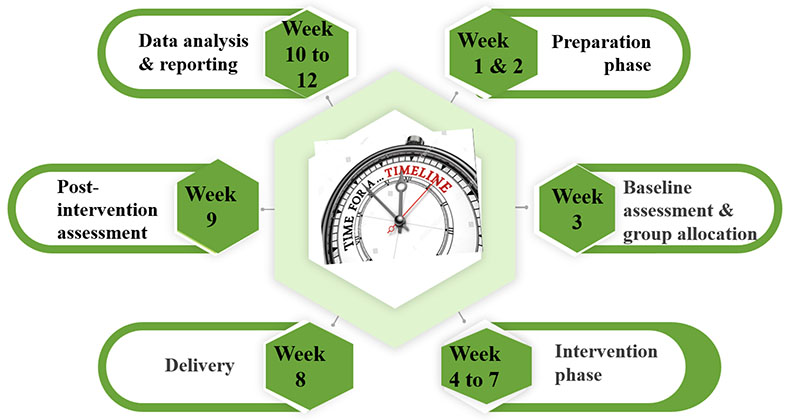

The study followed the procedure detailed in the previous section and ran for 12 weeks, according to the timeline shown in Figure 1. The steps are discussed in the sections that follow.

Figure 1

Timeline for Study

The planning and creation of the study were accomplished during the initial week, which entailed the development and refinement of the research materials and protocols to guarantee their suitability for the goals of the study. Following all required ethical standards, the researchers secured approval from the appropriate institutional review boards and ensured that participants provided informed consent. The following week was dedicated to conducting initial training sessions for the raters, emphasizing the employment of a standardized rubric to guarantee impartiality in the evaluations. The AI-driven learning platform and LMS were thoroughly evaluated and prepared to guarantee their full operational capacity and ability to meet the requirements of the study.

During the 3rd week, participants underwent a pretest fluency assessment, which served as a means of establishing a baseline measurement of their initial abilities. The information gathered from these assessments was then carefully analysed to create a baseline reference point for evaluating the results of the study. At the completion of the 3rd week, the participants were assigned to the control or experimental group based on purposive sampling, following the established criteria. This allocation was undertaken to ensure both groups accurately reflected the broader participant pool, thereby enhancing the validity of the findings.

From week 4 through week 7, the experimental group used the personalized AI-based learning materials, while the control group was provided with instructor-created online modules. The interactions of participants with both types of learning materials were observed and recorded, supplying information on their levels of engagement and usage patterns.

The distribution of educational resources was determined during the 8th week. Subsequently, participants were asked to share their thoughts and feelings about the resources they had used during feedback sessions. The feedback data were intended to complement the quantitative data gathered in this study.

At the beginning of the 9th week, assessments of posttest fluency were conducted with all participants. These assessments were designed to replicate the pretests, thereby ensuring data compatibility. After the assessments were completed, all data were stored safely for subsequent analysis.

During weeks 10 and 11, the researchers conducted an inferential statistical analysis of the pre- and posttest data. This analysis was critical for determining any statistically significant changes in the participants’ fluency resulting from the intervention. Moreover, the researchers analysed qualitative feedback to interpret the participants’ subjective experiences and the perceived impact of the intervention. The study was completed and finalized. This report integrates the results of both quantitative and qualitative analyses and provides a comprehensive overview of the study’s outcomes. Additionally, the research team prepared for the dissemination of these findings by selecting appropriate platforms and formats for sharing results with the academic community and other relevant stakeholders.

The analysis focused on four measures: fluency, accuracy, organization, and overall effectiveness. Furthermore, the analysis aimed to determine any differences in learning outcomes, particularly in the area of organization, between the experimental and control groups. The quantitative methodology employed in this study was essential for achieving the primary research objectives and gaining a comprehensive understanding of the effectiveness of AI-enhanced learning approaches in a distributed educational context. Through the use of inferential statistical methods, significant insights were obtained regarding the unique contributions of personalized learning tools to the development of key communication competencies.

A two-way analysis of variance (ANOVA) with repeated measures was deemed suitable because it allowed for the analysis of changes in the same subjects over two points in time, providing insights into both intra- and inter-group effects. To identify which sets of means differed significantly from one another, a post hoc analysis technique known as Tukey’s Honestly Significant Difference (HSD) test was employed. This post hoc analysis was crucial for providing a more detailed understanding of the impact of educational interventions on various parameters of persuasive communication skills. The use of Tukey’s HSD test in conjunction with other statistical methods resulted in a more comprehensive analysis. Table 3 presents the results of the inferential statistics. A discussion of each parameter follows.
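For a design with two groups and two time points, the group-by-time interaction in a mixed ANOVA is equivalent to a one-way ANOVA on gain scores (posttest minus pretest). The sketch below illustrates that equivalence in plain Python. It is not the authors’ analysis script, the function names are hypothetical, and the scores are made up for illustration.

```python
from statistics import mean


def gain_scores(pre, post):
    """Posttest minus pretest for each participant."""
    if len(pre) != len(post):
        raise ValueError("each participant needs both a pretest and a posttest score")
    return [after - before for before, after in zip(pre, post)]


def interaction_f(pre_a, post_a, pre_b, post_b):
    """F statistic and degrees of freedom for the group x time interaction (2 x 2 mixed design)."""
    gains_a = gain_scores(pre_a, post_a)
    gains_b = gain_scores(pre_b, post_b)
    n_a, n_b = len(gains_a), len(gains_b)
    mean_a, mean_b = mean(gains_a), mean(gains_b)
    grand_mean = mean(gains_a + gains_b)
    # Between-groups and within-groups sums of squares on the gain scores.
    ss_between = n_a * (mean_a - grand_mean) ** 2 + n_b * (mean_b - grand_mean) ** 2
    ss_within = sum((g - mean_a) ** 2 for g in gains_a) + sum(
        (g - mean_b) ** 2 for g in gains_b
    )
    df_within = n_a + n_b - 2
    if ss_within == 0:
        raise ValueError("gain scores have no within-group variance")
    f_value = ss_between / (ss_within / df_within)  # between-groups df is 1
    return f_value, (1, df_within)


# Made-up scores for four participants per group.
f_value, df = interaction_f(
    pre_a=[5, 6, 5, 4], post_a=[7, 7, 6, 6],   # hypothetical experimental group
    pre_b=[5, 5, 6, 4], post_b=[5, 6, 6, 5],   # hypothetical control group
)
print(round(f_value, 2), df)
```

With 32 participants per group, as in this study, the error degrees of freedom for this test would be 62.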

Table 3

Descriptive Statistics and Effect Sizes for Key Study Variables

| Parameter | Group | Pretest M (SD) | Posttest M (SD) | p | η² |
| --- | --- | --- | --- | --- | --- |
| Fluency | Control | 5.2 (0.8) | 5.6 (0.9) | <.05 | 0.08 |
| Fluency | Experimental | 5.3 (0.9) | 6.4 (0.7) | <.05 | 0.08 |
| Accuracy | Control | 4.9 (1.0) | 5.2 (1.1) | <.05 | 0.07 |
| Accuracy | Experimental | 5.0 (0.9) | 6.2 (0.8) | <.05 | 0.07 |
| Overall effectiveness | Control | 6.1 (1.1) | 6.5 (1.2) | <.05 | 0.09 |
| Overall effectiveness | Experimental | 6.2 (1.2) | 7.5 (1.0) | <.05 | 0.09 |
| Organization | Control | 5.8 (0.7) | 6.0 (0.8) | >.05 | 0.02 |
| Organization | Experimental | 5.9 (0.6) | 6.1 (0.7) | >.05 | 0.02 |
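The pretest-to-posttest gains implied by the means in Table 3 can be worked out directly. The short sketch below uses only the reported means; the dictionary layout and function name are illustrative.

```python
# Means copied from Table 3: (pretest, posttest) for each group.
TABLE_3 = {
    "Fluency": {"control": (5.2, 5.6), "experimental": (5.3, 6.4)},
    "Accuracy": {"control": (4.9, 5.2), "experimental": (5.0, 6.2)},
    "Overall effectiveness": {"control": (6.1, 6.5), "experimental": (6.2, 7.5)},
    "Organization": {"control": (5.8, 6.0), "experimental": (5.9, 6.1)},
}


def mean_gains(table):
    """Return posttest-minus-pretest gains per group and the difference in gains."""
    result = {}
    for parameter, groups in table.items():
        control = round(groups["control"][1] - groups["control"][0], 1)
        experimental = round(groups["experimental"][1] - groups["experimental"][0], 1)
        result[parameter] = {
            "control": control,
            "experimental": experimental,
            "difference": round(experimental - control, 1),
        }
    return result


gains = mean_gains(TABLE_3)
for parameter, row in gains.items():
    print(parameter, row)
```

The experimental group gained 1.1 to 1.3 points on fluency, accuracy, and overall effectiveness, against 0.3 to 0.4 for the control group, while both groups gained 0.2 on organization.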

The experimental group demonstrated considerable improvement in fluency, showing a statistically significant rise from pretest (M = 5.3) to posttest (M = 6.4), while the control group exhibited a smaller yet significant improvement (from 5.2 to 5.6). Thus, the experimental group, which was exposed to the AI-based learning approach, exhibited greater enhancement in fluency than the control group.

The accuracy parameter exhibited a similar trend. The average posttest score of the experimental group was 6.2 (SD = 0.8), which was a notable improvement from the pretest score of 5.0 (SD = 0.9). This improvement was statistically significant (p < .05) with an effect size of 0.07. The control group achieved a score of 5.2 (SD = 1.1) in the posttest, increasing from 4.9 (SD = 1.0) in the pretest. However, this improvement was smaller (0.3 points versus 1.2). These findings suggest that AI-based personalized learning materials are more effective than traditional methods in enhancing the accuracy of persuasive communication skills.

In terms of overall effectiveness, the experimental group achieved considerable improvement, with scores increasing from 6.2 (SD = 1.2) in the pretest to 7.5 (SD = 1.0) in the posttest. A modest effect size of 0.09 was associated with this improvement, which was statistically significant (p < .05). With scores rising from 6.1 (SD = 1.1) to 6.5 (SD = 1.2), the control group likewise showed improvement, but to a lesser degree. These results provide evidence that the AI-based learning method is more successful in improving overall persuasive communication abilities. Compared with the control group, the experimental group performed far better in terms of fluency, accuracy, and overall effectiveness. Small effect sizes and p values below .05 support these enhancements.

In terms of the organization parameter, there were no clear differences between the two groups. Both groups demonstrated marginal enhancement, with the experimental group improving from a mean score of 5.9 to 6.1 and the control group from 5.8 to 6.0. The p values were higher than .05, and the effect size was small. This lack of disparity between the two groups may be attributed to several factors. First, the nature of the content and instructional methods in both learning approaches may have been sufficiently similar to address the organizational aspects of communication, leaving little scope for AI-based personalization to exhibit a distinct advantage. Second, the inherent limitations of the study design, such as the duration of the intervention or the scope of the curriculum, may have affected the potential to observe significant differences in this particular area.

After a two-way ANOVA showed significant interactions, Tukey’s HSD test was used for post hoc comparisons to determine which group means were different. When comparing the pre- and posttest scores of the experimental group, it was clear they had made considerable gains in fluency, accuracy, and overall effectiveness. According to Tukey’s HSD test, the control group had a posttest mean score of 5.6, whereas the experimental group had a considerably higher mean score of 6.4. The mean difference between the two groups was 0.8 (p < .05). Similarly, the results showed a significant difference of 1.0 in mean accuracy between the experimental (M = 6.2) and control (M = 5.2) groups (p < .05). In terms of organization, however, Tukey’s HSD did not show any significant differences between the groups when comparing the pre- and posttest scores; both groups had similar results (6.1 for the experimental group and 6.0 for the control group; p > .05).
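To illustrate how a Tukey-style pairwise comparison is formed, the sketch below computes the studentized range statistic q for each pair of cells, using the accuracy means and standard deviations reported earlier in this section. It rests on several assumptions that are not confirmed by the study: each cell is taken to contain 32 scores, the four cells are treated as independent groups (which ignores the repeated-measures structure of pretest and posttest scores), and the within-cell mean square is approximated from the reported standard deviations. The names `cells`, `N_PER_CELL`, and `tukey_q` are hypothetical. The output is therefore a rough illustration, not a reproduction of the authors' analysis.

```python
import math
from itertools import combinations

# Accuracy (mean, SD) per cell as reported in the text.
cells = {
    "experimental pretest": (5.0, 0.9),
    "experimental posttest": (6.2, 0.8),
    "control pretest": (4.9, 1.0),
    "control posttest": (5.2, 1.1),
}
N_PER_CELL = 32  # assumption: every cell contains all 32 group members


def tukey_q(cells, n):
    """Return the studentized range statistic q for every pair of cells.

    With equal cell sizes, the pooled within-cell variance (MS within)
    is the plain average of the cell variances.
    """
    ms_within = sum(sd ** 2 for _, sd in cells.values()) / len(cells)
    standard_error = math.sqrt(ms_within / n)
    return {
        (a, b): abs(cells[a][0] - cells[b][0]) / standard_error
        for a, b in combinations(cells, 2)
    }


if __name__ == "__main__":
    df_within = len(cells) * (N_PER_CELL - 1)  # 4 * 31 = 124
    print(f"cells = {len(cells)}, df within = {df_within}")
    for (a, b), q in tukey_q(cells, N_PER_CELL).items():
        print(f"{a} vs {b}: q = {q:.2f}")
```

Each q value would then be compared with the critical value of the studentized range distribution for four cells and 124 degrees of freedom, taken from a statistical table or a statistics library. A pair whose q exceeds that critical value differs significantly at the chosen alpha level.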

The study results related to the first research hypothesis revealed that incorporating AI into personalized language learning significantly enhanced the persuasive communication skills of online MBA students. This was demonstrated through posttest improvements, wherein the experimental group exhibited a substantial improvement in fluency, accuracy, and overall effectiveness in their communication skills compared to the control group. These findings align with the current literature, which suggests that AI-based personalized learning environments can address learners’ individual needs more effectively, leading to improved language proficiency outcomes.

Regarding the second research hypothesis, the majority of the assessed parameters showed that AI-driven personalized learning had a beneficial impact on specific student learning outcomes. However, it failed to produce a significant effect on the organization aspect of persuasive communication skills. The posttest means of both the control and experimental groups increased only slightly. These findings suggest that while AI personalization may significantly enhance certain aspects of language learning, its influence on organizational skills is negligible, and developing these skills may require additional instructional strategies or support. Future research could benefit from a hybrid approach that integrates AI personalization with conventional methodologies to improve all aspects of persuasive communication more comprehensively.

In this study, the results were interpreted within the context of existing literature and theoretical frameworks on AI in education and language learning. The improvements in fluency, accuracy, and overall effectiveness among participants in the experimental group corroborate prior research, which has posited that AI-based personalized learning significantly enhances language acquisition (Liu et al., 2021). These findings align with the theoretical framework, suggesting that AI-driven personalization effectively caters to individual learning styles and needs.

Consistent with earlier studies showing that AI could improve certain language skills (Crawford et al., 2023), we found that both fluency and accuracy improved during our investigation. This consistency suggests that AI tools are particularly adept at identifying and addressing language problems. However, the lack of a significant difference in the organization parameter contrasts with some literature indicating that AI-based tools could also improve structural aspects of language learning (Long & McLaren, 2024). This discrepancy may have been the result of the specific AI tools used or the duration of the intervention.

The findings of this study have significant implications for online MBA programs. The integration of AI-based personalized learning tools can significantly enhance students’ communication skills. Adaptive algorithms capable of effectively targeting specific language skills are crucial for achieving this goal. However, the findings also point to the need for further research to develop tools that can enhance the organizational aspects of language learning. While AI-based tools significantly enhance learning outcomes, they should be integrated as part of a comprehensive educational strategy that includes traditional methods, especially in aspects where AI tools might not have a distinct advantage. This study adds to the expanding literature on the use of AI in classrooms and offers empirical evidence that personalized learning powered by AI is effective.

Several constraints that may have influenced the results of this study were identified. First, the small sample size of 64 participants was a limitation that could restrict the generalizability of the conclusions. Future studies with larger sample sizes may yield more robust data with broader applicability. Additionally, the AI algorithm used in the learning materials of the experimental group was designed specifically for this study, which raises questions regarding its replicability in different educational settings or subject areas. Furthermore, the homogeneity of the student population, comprising part-time MBA students from a private university in India, who were all non-native English speakers, might limit the generalizability of the findings.

To further understand the possibilities and constraints of AI in education, future studies should investigate a range of AI-based tools and algorithms in different educational contexts and subject types. Research could also be expanded to examine other aspects of communication, such as emotional intelligence, critical thinking, and argumentation. The results of this study highlight how personalized learning materials powered by AI can improve persuasive communication; however, these constraints underscore the necessity for additional studies to enhance and expand our comprehension of AI’s function in educational settings.

The findings from this study contribute to the expanding body of research on the use of AI in education, particularly in online MBA programs and language acquisition. Participants in the experimental group who interacted with personalized AI-based learning materials showed significant improvements in fluency, accuracy, and overall effectiveness. These results indicate that AI-driven tools can enhance communication skills, supporting the findings of Jadhav et al. (2023) regarding the effectiveness of AI in tailored education.

The implications of this study for distance education theory are significant as they demonstrate how AI can personalize learning experiences to effectively meet individual needs. This study highlights the necessity for further investigation into AI’s capabilities and limitations in various educational contexts and with different student populations. In practice, integrating AI-based tools into distance education can enhance learning outcomes; however, it is essential to complement these tools with traditional methods to address language learning comprehensively. Future research should address the limitations of this study, including the small sample size and the specificity of the AI system. Expanding research to include diverse student populations and educational settings, as proposed by Suen et al. (2020), and investigating a broader spectrum of AI tools will provide deeper insights into the diverse applications of AI in education.

Andrade, H. G. (2000). Using rubrics to promote thinking and learning. Educational Leadership, 57(5), 13-19.

Chen, X., Zou, D., Cheng, G., & Xie, H. (2021, July 1). Artificial intelligence-assisted personalized language learning: Systematic review and co-citation analysis. In M. Chang, N.-S. Chen, D. G. Sampson, & A. Tlili (Eds.), Proceedings: IEEE 21st International Conference on Advanced Learning Technologies (ICALT) (pp. 241-245). https://doi.org/10.1109/ICALT52272.2021.00079

Chiu, T. K. F., Moorhouse, B. L., Chai, C. S., & Ismailov, M. (2023, February 6). Teacher support and student motivation to learn with Artificial Intelligence (AI) based chatbots. Interactive Learning Environments, 1-17. https://doi.org/10.1080/10494820.2023.2172044

Chiu, T. K. F., Xia, Q., Zhou, X., Chai, C. S., & Cheng, M. (2023). Systematic literature review on opportunities, challenges, and future research recommendations of artificial intelligence in education. Computers and Education: Artificial Intelligence, 4, Article 100118. https://doi.org/10.1016/j.caeai.2022.100118

Crawford, J., Cowling, M., & Allen, K.-A. (2023). Leadership is needed for ethical ChatGPT: Character assessment and learning using artificial intelligence (AI). Journal of University Teaching & Learning Practice, 20(3), Article 02. https://doi.org/10.53761/1.20.3.02

Crompton, H., & Burke, D. (2023). Artificial intelligence in higher education: The state of the field. International Journal of Educational Technology in Higher Education, 20(1), Article 22. https://doi.org/10.1186/s41239-023-00392-8

DiBenedetto, M. K., & Bembenutty, H. (2011). Differences between full-time and part-time MBA students’ self-efficacy for learning and for employment: A self-regulatory perspective. The International Journal of Educational and Psychological Assessment, 7(1), 81-110. https://www.researchgate.net/publication/281466153_Differences_between_full-time_and_part-time_MBA_students'_self-efficacy_for_learning_and_for_employment_A_self-regulatory_perspective

Francis, R. (2012). Business communication courses in the MBA curriculum: A reality check (Corpus ID: 56263598). Semantic Scholar. https://www.semanticscholar.org/paper/Business-Communication-Courses-in-the-MBA-A-Reality-Francis/ebc9594a269889c3c14e91edd8d075c52676fd6b

Huang, X., Zou, D., Cheng, G., Chen, X., & Xie, H. (2023). Trends, research issues and applications of artificial intelligence in language education. Educational Technology & Society, 26(1), 112-131. https://www.jstor.org/stable/48707971

Istenič, A. (2021). Online learning under COVID-19: Re-examining the prominence of video-based and text-based feedback. Educational Technology Research and Development, 69(1), 117-121. https://doi.org/10.1007/s11423-021-09955-w

Jadhav, S. V., Shinde, S. R., Dalal, D. K., Deshpande, T. M., Dhakne, A. S., & Gaherwar, Y. M. (2023). Improve communication skills using AI. In P. B. Mane & A. R. Buchade (Chairs), Proceedings of the 2023 International Conference on Emerging Smart Computing and Informatics (ESCI) (pp. 1-5). IEEE. https://doi.org/10.1109/ESCI56872.2023.10099941

Liang, J.-C., Hwang, G.-J., Chen, M.-R. A., & Darmawansah, D. (2021). Roles and research foci of artificial intelligence in language education: An integrated bibliographic analysis and systematic review approach. Interactive Learning Environments, 31(7), 4270-4296. https://doi.org/10.1080/10494820.2021.1958348

Liu, C., Hou, J., Tu, Y.-F., Wang, Y., & Hwang, G.-J. (2021). Incorporating reflective thinking promoting mechanism into artificial intelligence-supported English writing environments. Interactive Learning Environments, 31(9), 5614-5632. https://doi.org/10.1080/10494820.2021.2012812

Long, S., & McLaren, M.-R. (2024). Belonging in remote higher education classrooms: The dynamic interaction of intensive modes of learning and arts-based pedagogies. Journal of University Teaching and Learning Practice, 21(2), Article 03. https://doi.org/10.53761/1.21.2.03

Lund, B. D., Wang, T., Mannuru, N. R., Nie, B., Shimray, S., & Wang, Z. (2023). ChatGPT and a new academic reality: Artificial Intelligence‐written research papers and the ethics of the large language models in scholarly publishing. Journal of the Association for Information Science and Technology, 74(5), 570-581. https://doi.org/10.1002/asi.24750

Maghsudi, S., Lan, A., Xu, J., & van der Schaar, M. (2021). Personalized education in the artificial intelligence era: What to expect next. IEEE Signal Processing Magazine, 38(3), 37-50. https://doi.org/10.1109/msp.2021.3055032

McGraw, P., & Tidwell, A. (2001). Teaching group process skills to MBA students: A short workshop. Education + Training, 43(3), 162-171. https://doi.org/10.1108/EUM0000000005461

Moskal, B. M. (2000). Scoring rubrics: What, when, and how? Practical Assessment, Research, and Evaluation, 7(3). https://doi.org/10.7275/a5vq-7q66

Obari, H., Lambacher, S., & Kikuchi, H. (2020). The impact of using AI and VR with blended learning on English as a foreign language teaching. In K.-M. Frederiksen, S. Larsen, L. Bradley, & S. Thouësny (Eds.), CALL for widening participation: Short papers from EUROCALL 2020 (pp. 253-258). Research-publishing.net. https://doi.org/10.14705/rpnet.2020.48.1197

Ongus, R. W., Gekara, M. M., & Nyamboga, C. M. (2017). Library and information service provision to part-time postgraduate students: A case study of Jomo Kenyatta Memorial Library, University of Nairobi, Kenya. Journal of Information and Knowledge, 54(1), 1-17. https://doi.org/10.17821/srels/2017/v54i1/108529

Palincsar, A. S., & Herrenkohl, L. R. (2002). Designing collaborative learning contexts. Theory Into Practice, 41(1), 26-32. https://doi.org/10.1207/s15430421tip4101_5

Paterson, C., Paterson, N., Jackson, W., & Work, F. (2020). What are students’ needs and preferences for academic feedback in higher education? A systematic review. Nurse Education Today, 85, Article 104236. https://doi.org/10.1016/j.nedt.2019.104236

Randolph, W. A. (2008). Educating part-time MBAs for the global business environment. Journal of College Teaching & Learning (TLC), 5(8). https://doi.org/10.19030/tlc.v5i8.1236

Sánchez-Villalon, P. P. S., & Ortega, M. (2007). AWLA and AIOLE for personal learning environments. International Journal of Continuing Engineering Education and Life-Long Learning, 17(6), 418-431. https://doi.org/10.1504/IJCEELL.2007.015591

Shadish, W. R., Cook, T. D., & Campbell, D. T. (2002). Experimental and quasi-experimental designs for generalized causal inference. Houghton Mifflin.

Shevchenko, V., Malysh, N., & Tkachuk-Miroshnychenko, O. (2021). Distance learning in Ukraine in COVID-19 emergency. Open Learning: The Journal of Open, Distance and E-Learning, 39(1), 4-19. https://doi.org/10.1080/02680513.2021.1967115

Suen, H.-Y., Hung, K.-E., & Lin, C.-L. (2020). Intelligent video interview agents used to predict communication skill and perceived personality traits. Human-Centric Computing and Information Sciences, 10(1), Article 3. https://doi.org/10.1186/s13673-020-0208-3

Tomasik, M. J., Helbling, L. A., & Moser, U. (2020). Educational gains of in‐person vs. distance learning in primary and secondary schools: A natural experiment during the COVID-19 pandemic school closures in Switzerland. International Journal of Psychology, 56(4), 566-576. https://doi.org/10.1002/ijop.12728

Wang, J., & Mendori, T. (2012). A customizable language learning support system using course-centered ontology and teaching method ontology. In T. Matsuo, K. Hashimoto, & S. Hirokawa (Eds.), 2012 IIAI International Conference on Advanced Applied Informatics (pp. 149-152). IEEE. https://doi.org/10.1109/IIAI-AAI.2012.38

Wang, X., Liu, Q., Pang, H., Tan, S. C., Lei, J., Wallace, M. P., & Li, L. (2023). What matters in AI-supported learning: A study of human-AI interactions in language learning using cluster analysis and epistemic network analysis. Computers & Education, 194, Article 104703. https://doi.org/10.1016/j.compedu.2022.104703

Zhao, X. (2022). Leveraging artificial intelligence (AI) technology for English writing: Introducing Wordtune as a digital writing assistant for EFL writers. RELC Journal, 54(3), 890-894. https://doi.org/10.1177/00336882221094089

Evaluating AI-Personalized Learning Interventions in Distance Education by Sajida Bhanu Panwale and Selvaraj Vijayakumar is licensed under a Creative Commons Attribution 4.0 International License.