Abbie H. Brown

East Carolina University, USA

Tim Green

California State University, Fullerton, USA

The authors report the results of a study that provides bases for comparison between the time necessary to participate in courses delivered asynchronously online and courses delivered in a traditional classroom setting. Weekly discussion threads from 21 sections of six courses offered as part of online, degree-granting, accredited, graduate programs were examined. The purpose of this research is to determine whether students are spending more or less time participating in an online course than in a traditional classroom.

The discussion size (i.e., the number of words per discussion) was determined using the automatic word count function in MS Word. Once the word counts for each course section were determined, the average words per discussion were calculated. The authors used 180 words per minute to calculate the average reading time, based on the work of Ziefle (1998) and Carver (1985, 1990), in order to determine the average minutes per week a student spent reading the discussions.

The study indicates that a typical graduate-level, online, asynchronous discussion requires about one hour a week of reading time. Assuming it takes under two hours to compose initial messages and responses to the discussion prompt, the time commitment for this participatory activity is similar to that of traditional, face-to-face courses.

Although these findings are informative, further research is recommended on the time spent on online course activities relative to the student hours earned, with a direct focus on student characteristics such as English language competency and student level.

Keywords: Online learning; distance education; threaded discussion; asynchronous communication

After more than ten years of teaching online courses for a variety of graduate programs as full-time faculty and as adjunct instructors, the authors have noted a common response to online, asynchronous instruction. Students regularly make remarks such as, “I learned an amazing amount in this course: I spent more time working on this course than I ever did in a face-to-face class” or “This course was too time consuming; I wish the instructor would keep in mind that many of us are busy professionals who work full-time.” These comments appear on the student satisfaction surveys administered at the end of a course, in face-to-face conversation (e.g., at conferences that both students and instructors attend), or in private communication with the instructor.

Statements like “the course took a great deal of time” seem to be made by some as an admonishment to the instructor and by others as a testimonial that the online instruction is better than a course with similar content delivered in a traditional, face-to-face environment. Regardless of whether the comment is intended as criticism or praise, many students assert that courses offered asynchronously online are more time consuming than traditional, face-to-face courses. To determine how best to address this observation, the authors set out to discover whether online asynchronous courses are in fact more time consuming for students than traditional, synchronous courses.

The authors address the following questions:

Reading and writing assignments are an established component of most traditional courses, and the amount of time spent on these activities during a course is relatively easy to plan based on experience. However, when there is no specific meeting time established for a course, it becomes challenging to determine how much time students spend in participatory activities.

At the universities where the authors teach, student hours (a postsecondary unit of measure derived from the Carnegie Unit [Shedd, 2003]) and semesters are used as the measure of time for both online and face-to-face course participation. At these institutions one student hour is assumed to represent one hour a week of meeting time during a standard semester. The problem the authors face is that all of their courses are delivered asynchronously online, making it difficult to determine how much time students are spending in weekly, participatory activity. The students’ participation is always constrained by the semester in that courses begin and end on specific dates. In the absence of synchronous meetings that set a finite amount of incremental (i.e., weekly) participation time, it is difficult to gauge whether asynchronous student participation (in the case of the authors’ classes, threaded discussion) is significantly more or less time consuming than participation in synchronous courses.

The objective of this research is to determine bases for comparison between the time needed to participate in distance, asynchronous courses (delivered using Internet-based course management systems, such as Blackboard or eCollege) and the time needed to participate in traditional, classroom courses.

Postsecondary institutions are offering an increasing number of distance learning opportunities. Traditional “brick and mortar” universities currently offer courses and entire graduate programs online (Lee & Nguyen, 2007). There are also a number of accredited virtual institutions; that is to say, all students in these institutions complete their work at a distance, and the institutions do not maintain any traditional campuses or classrooms. Walden University and Capella University are examples of this type of virtual institution. Programs based in part or in whole on a distance learning delivery model are particularly attractive to students with jobs, families, or both (Bourne, 1998; Schrire, 2006). Furthermore, instruction delivered in this manner is a viable method of supporting lifelong learning (Thompson, 1998).

A great many of these online courses are delivered asynchronously, using course management systems (CMS), alternatively referred to as learning management systems (LMS). A great deal of thought has gone into how best to make use of the CMS/LMS to offer a learning experience at a distance that is similar to that of a traditional classroom; the bulk of this effort has gone into addressing the technological challenges of the learning experience (e.g., developing appropriate software and addressing connectivity and hardware requirements) and developing a feeling of community among learners (Anderson, 2006). What has not been adequately addressed to date is whether students learning at a distance are receiving a similar experience in terms of time spent on the course activities.

Whether delivered in a traditional setting or delivered at a distance, the authors have observed that courses offered for graduate credit tend to consist of a combination of assigned readings, assigned papers and projects, quizzes and tests, and some form of weekly participatory activity. In a traditional course, this weekly participatory activity is the class meeting in which the instructor presents information and answers questions and may organize and facilitate small group activity or discussion (Brown & Green, 2007).

Distance courses that employ synchronous communication, such as videoconferencing or teleconferencing, can be compared to traditional classroom instruction relatively easily in terms of the time students spend in course participation: such courses can require similar amounts of time spent with the instructor and with classmates. The most obvious example is a three-credit course in which students meet via videoconferencing equipment for three hours each week during a semester. Courses delivered using asynchronous communication, however, do not lend themselves to such a direct comparison of the time students and instructors spend interacting with each other.

Courses delivered asynchronously most often use a CMS/LMS such as Blackboard or eCollege. Along with the traditional weekly readings and required assignments, students “attend” class through weekly seminars, which are in essence a series of messages responding to a prompt set by the instructor and organized in a section of the CMS/LMS most often referred to as the discussion area. This activity is known as a threaded discussion, in which participants can see all posted messages, organized by author, topic, or date/time, and can respond to specific threads within the larger discussion. Bourne (1998) suggests that this type of asynchronous discussion activity accounts for 40% of the overall course experience.

Threaded discussion has been identified as a useful tool in facilitating student metacognitive awareness and the development of self-regulatory processes and strategies (Vonderwell, Liang, & Alderman, 2007). Although threaded discussion is a limited medium in that it relies entirely on the generation and interpretation of text (Dennen, 2007), it can generate a sense of social presence without requiring any synchronous communication (Bender, 2003; Dennen, 2007).

The threaded discussion feature of CMS/LMS platforms supports many-to-many communication (Gunawardena & McIsaac, 2004). Typically, the discussion begins with a predetermined prompt. Students are required to respond individually to the initial prompt and to at least one, but usually two or more, classmates’ responses.

Published reports have examined how much time faculty spend developing and maintaining courses offered at a distance (Bourne, 1998; Cavanaugh, 2006), the amount and type of learning that asynchronous discussion can facilitate (Wu & Starr, 2004), and the interaction patterns among course participants (Hara, Bonk, & Angeli, 2000). Additionally, research has analyzed the content of asynchronous discussions (e.g., Gerber, Scott, Clements, & Sarama, 2005; Marra, Moore, & Klimczak, 2004; Rourke & Anderson, 2004). This research has focused primarily on the instructor’s influence on the type and amount of student discourse that takes place in asynchronous discussions (Gerber, Scott, Clements, & Sarama, 2005) and on protocols for analyzing student-to-student and instructor-to-student discourse in asynchronous discussions (Marra, Moore, & Klimczak, 2004; Rourke & Anderson, 2004).

Despite this research, there has been little or no recent examination of the time students spend participating in asynchronous courses. Harasim (1987) examines the amount of time students spent participating in an early asynchronous environment, but that study measures the time students spent at the computer, not the time spent reading the text generated by the discussion. Vonderwell and Sajit (2005) use qualitative text analysis to examine challenges related to the time students spend in weekly course participation in online learning and the phenomenon of “information overload.” However, little or no quantitative data are currently available on the text generated in weekly online course participation. By examining quantitative data on the amount of time students spend participating in an online learning environment, researchers and instructional designers may better determine how to provide a distance learning experience that is at least similar to a traditional classroom-based experience in terms of student hours.

This study is limited to a specific type of online instruction. All of the data collected for this study are from courses that use an LMS/CMS, such as Blackboard or eCollege, to organize and present the course content. Furthermore, each course uses the LMS/CMS discussion feature to provide regularly scheduled threaded discussions in which the course participants share ideas with each other. These threaded discussions are designed to perform the same function as the in-class activities conducted in traditional, face-to-face courses; in essence, they replace live, weekly class meetings. Traditional classroom activities are bounded by a specific time frame imposed by the course’s predetermined formal meeting times, but threaded discussion is not. Although the threaded discussion assignments for all courses examined had specific beginning and end dates that encompassed either one or two weeks, participants were welcome to participate at any time between the beginning and end dates, and there were no set meeting times and no time limits placed on participation during the discussion period.

All of the courses use a combination of required textbook reading, required readings presented via the Web, written assignments, and regular participation in threaded discussions. The discussions are preceded by a discussion prompt that includes a description of the topic, a set of questions that each student must address, and parameters for receiving full credit for the discussion assignment (e.g., a student must post at least 4 messages on at least 2 different days of the discussion). The instructor participates in each discussion. In all courses, each of the discussions is worth 3% to 5% of the overall course grade.
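Full-credit parameters such as the example above are mechanical enough to check automatically. The sketch below is a hypothetical illustration, not part of the study’s method: it assumes a minimal `Post` record (author and timestamp) exported from the LMS/CMS and checks the example rule of at least 4 messages on at least 2 different days. It cannot judge whether a post is substantive, which the instructors assessed themselves.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Post:
    """Hypothetical minimal record of one discussion message."""
    author: str
    posted_at: datetime


def meets_participation_rule(posts: list[Post], student: str,
                             min_posts: int = 4, min_days: int = 2) -> bool:
    """True if `student` posted at least `min_posts` messages spread over
    at least `min_days` distinct calendar days.

    Only counts posts and days; the substantiveness of each post is not checked.
    """
    own = [p for p in posts if p.author == student]
    distinct_days = {p.posted_at.date() for p in own}
    return len(own) >= min_posts and len(distinct_days) >= min_days


# Usage with made-up posts: four messages on two different days satisfy the rule.
posts = [
    Post("student_a", datetime(2007, 2, 13, 9, 0)),
    Post("student_a", datetime(2007, 2, 13, 20, 30)),
    Post("student_a", datetime(2007, 2, 15, 8, 15)),
    Post("student_a", datetime(2007, 2, 15, 21, 45)),
]
print(meets_participation_rule(posts, "student_a"))  # True
```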

Courses and course sections were selected for the data set based on their similarity in content and delivery. The authors examined the weekly discussion threads from courses offered as part of degree-granting, accredited, graduate programs in which all coursework is completed online. The courses are from four different institutions. Each course section was taught by one of the two authors, each of whom teaches both for the university at which he is employed full-time and for degree-granting programs that employ adjunct faculty on a part-time, as-needed basis. All of the courses were taught between fall 2005 and summer 2007.

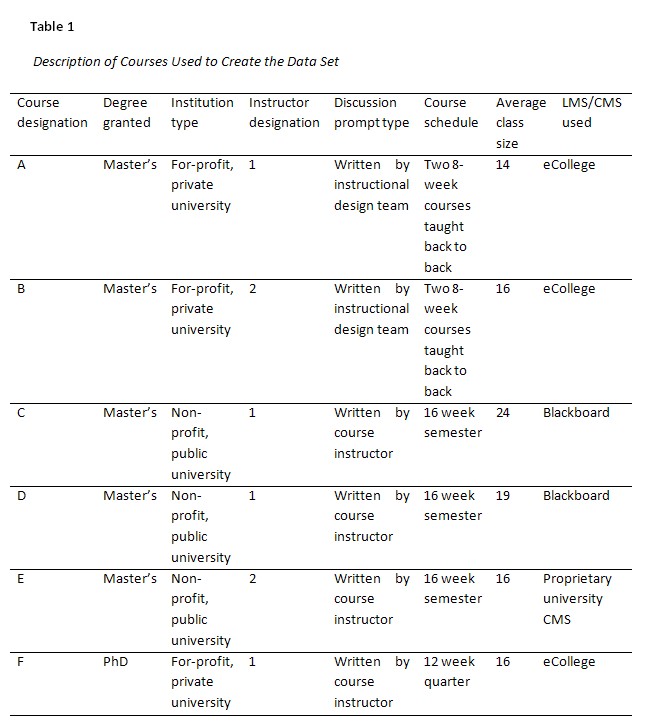

All of the courses used for the data set are part of graduate programs of study in instructional technology and/or curriculum studies; they are all part of completely online programs of study; and they are all graduate level (6000-8000 level). The courses are foundational in nature (e.g., foundations of instructional design, foundations of curriculum study). All course participants hold at least an undergraduate degree, and most are full-time, professional educators. Table 1 describes the differences among the courses in terms of the degree granted, institution type, discussion prompt type, course schedule, average class size, and LMS/CMS system used.

Applying these selection criteria yielded a total of 21 sections of six similar courses for the data set.

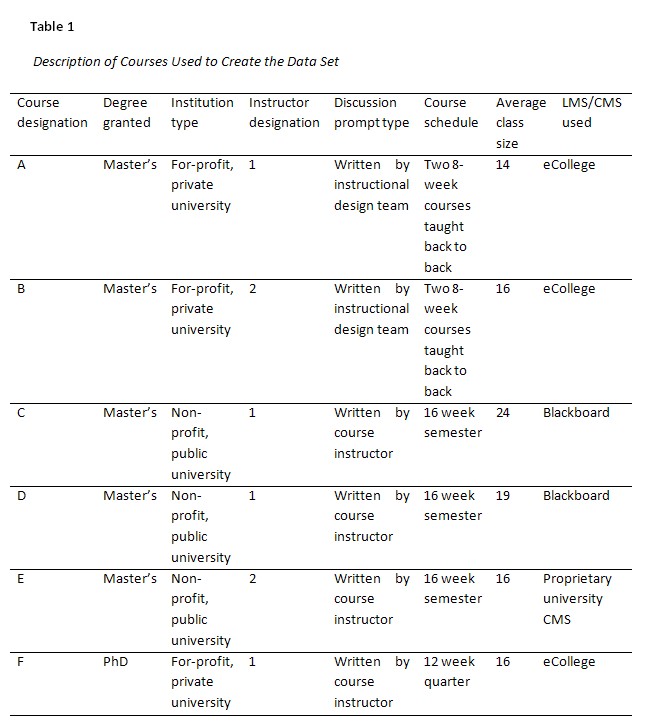

The authors examined five discussion threads from each of the 21 course sections, for a total of 105 individual discussion threads (see Table 2). Each discussion was held over a one-week or two-week period. The authors focused solely on the quantity of discourse in the asynchronous discussions rather than on its content; consequently, no quantitative content analysis techniques (e.g., Gerber, Scott, Clements, & Sarama, 2005; Marra, Moore, & Klimczak, 2004; Rourke & Anderson, 2004) were used to analyze the discourse. The authors used basic descriptive statistics to measure and analyze the discourse in order to determine the time it takes the average person to read the discussion text.

Although discussion topics and prompts vary in content, all of the discussions examined for this study have the following in common:

- Discussion participation counted toward the student’s overall course grade.

- Students were required to respond to the prompt and to classmates’ responses on multiple days during the discussion.

- Students were advised that discussion responses must be substantive to count toward a participation grade (e.g., agreeing with another’s post or offering simple encouragement such as “very good” would not count).

- Students were advised that the instructor would monitor the discussion daily and respond when appropriate. Both instructors consider it important to participate by posting messages that deal with administrative details of the discussion, including keeping students focused on the topic, as well as by adding content information.

To create the data set, five discussions were selected from each course. Five was chosen because it was the smallest number of content-focused discussions found in any of the courses; that is, every course had at least five discussions that focused on course content as opposed to social aspects of the course (e.g., “please introduce yourself”) or course evaluation (e.g., “please comment on whether you found the course engaging”).

The prompts used in the discussions that comprise the data set were written either by the instructors themselves or by a team of instructional designers who prepared the course without input from the instructors. Examples of the briefest and longest discussion prompts from the data set are provided below.

The briefest discussion prompt: A one-week discussion from program B

Report to the group the instructional goals and objectives you have developed for your instructional Web site project. Explain how the goals and objectives are influenced by the needs, task and learner analyses you conducted last week. Respond to at least two classmates’ postings with constructive feedback on their goals and objectives. You should post to this discussion a minimum of two days each week.

The longest discussion prompt: A two-week discussion from program C

In this discussion we explore the possibilities of learning online. We know learning online can work (that's why we're here!), but does it work equally well for all types of instruction? A question we need to consider is, under what circumstances is online learning an ideal situation and when does it present challenges? To begin to answer this question we must recognize two important variables: 1. the population of learners; and 2. the content of the instruction.

We will be using Bloom's Taxonomy of the three learning domains as a point of reference (please review the recommended Website on Bloom's Taxonomy mentioned in the Module 4 assignments area).

In this discussion we need to develop answers for three questions:

- What are the advantages and challenges to cognitive learning in an online setting?

- What are the advantages and challenges to affective learning in an online setting?

- What are the advantages and challenges to psychomotor learning in an online setting?

As we develop the answers to these questions we will need to consider whether these advantages and challenges are different for different groups of learners. Your work on your critical analysis paper will no doubt provide you with insights into a specific population of learners - please share with the class what you have discovered about the group you are studying and how they might approach the three learning domains.

Also, see what you can include from the textbook in this discussion. Which of the instructional models/strategies that you are reading about seem most appropriate for various populations of learners and various types of instructional content?

During this discussion, you are required to post at least 3 original messages and respond to at least 3 of your classmates’ posted messages. You must post your first message to this discussion by Thursday, February 15.

Five discussions from each course were identified as focused on course content and intended to require a similar amount of time on task per week. These discussions addressed subject matter (not introductions or end-of-course reflections) and took place between the second week and the penultimate week of the course.

The authors extracted the data from the six courses by accessing the completed discussions, copying their text, and pasting it into Microsoft Word documents. Once the data were saved as Microsoft Word files, the discussion size (i.e., the number of words per discussion) was determined with Microsoft Word’s automatic word count function. The word counts include the header information of each post (author, time posted, title of post), which accounts for 20 to 30 words per post. Twenty-one sections of six different courses were analyzed (see Table 2).
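The word-count step can be approximated in code. The sketch below is a stand-in, not Microsoft Word’s algorithm: it assumes any run of non-whitespace characters is one word, which may differ slightly from Word’s handling of punctuation and dashes. It also estimates what share of a discussion’s count comes from post headers, using the 20 to 30 words per post reported above; the post count and discussion size in the usage lines are hypothetical.

```python
def word_count(text: str) -> int:
    """Approximate word count: runs of non-whitespace characters.

    Assumption: this only approximates Microsoft Word's automatic count,
    which applies its own rules to punctuation and dashes.
    """
    return len(text.split())


def header_share(num_posts: int, total_words: int,
                 header_words: tuple[int, int] = (20, 30)) -> tuple[float, float]:
    """Low and high estimates of the fraction of a discussion's word count
    taken up by post headers (author, time posted, title of post)."""
    low, high = header_words
    return num_posts * low / total_words, num_posts * high / total_words


# First sentence of the program B prompt quoted above.
print(word_count("Report to the group the instructional goals and objectives "
                 "you have developed for your instructional Web site project."))  # 18

# Hypothetical discussion: 60 posts totalling 12,000 words.
low, high = header_share(60, 12_000)
print(f"headers account for {low:.0%} to {high:.0%} of the word count")
```

If only message bodies were of interest, a correction of roughly this size could be subtracted from each discussion’s count.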

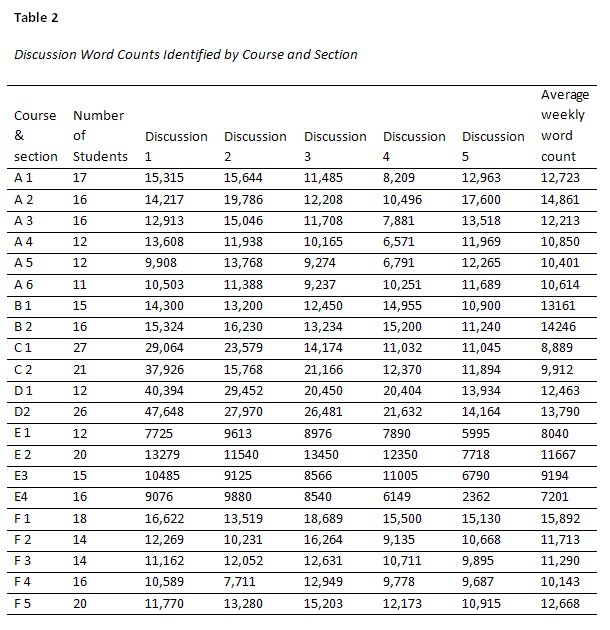

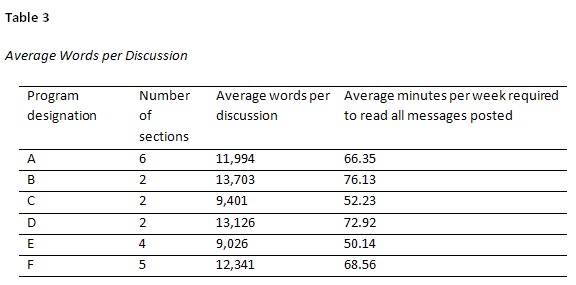

Once the word counts for each course section were determined, the average number of words per discussion was calculated for each course (see Table 3). To determine the average number of minutes per week a student spent reading the discussions, the authors calculated the reading time of an average discussion at 180 words per minute. This rate is based on the work of Ziefle (1998) and Carver (1985, 1990). Ziefle reported that individuals scan text on a monitor at an average of 180 words per minute, compared with 200 words per minute when scanning the same text on paper. Carver indicated that the typical silent reading rate for college students is between 256 and 333 words per minute. The authors used Ziefle’s on-screen scanning rate because all courses were presented online. Furthermore, the authors adopted this lower rate (rather than Carver’s silent reading rate for college students) because the discussion messages required responses, on the assumption that messages requiring a response would elicit more careful reading.
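The conversion from discussion size to reading time is a single division. The sketch below is illustrative, not the authors’ actual procedure: it assumes that each course’s average is a plain mean over all of its examined threads (the study does not say how sections were weighted), and it does not normalize two-week discussions to a weekly figure, a step the text does not describe. The sample size of 11,590 words is not a reported figure; it is simply the discussion size implied by the reported 64.39-minute average at 180 words per minute (64.39 × 180 ≈ 11,590).

```python
from statistics import mean

# Reading rates cited in the study (words per minute).
RATES_WPM = {
    "Ziefle: scanning text on a monitor": 180,
    "Ziefle: scanning text on paper": 200,
    "Carver: college silent reading, low end": 256,
    "Carver: college silent reading, high end": 333,
}


def average_words_per_discussion(thread_word_counts: list[int]) -> float:
    """Mean discussion size, pooling every examined thread of one course.

    Assumption: all threads are weighted equally across sections.
    """
    return mean(thread_word_counts)


def reading_minutes(words: float, wpm: float = 180) -> float:
    """Estimated minutes needed to read `words` words at `wpm` words per minute."""
    return words / wpm


# Discussion size implied by the reported 64.39-minute average at 180 wpm.
words = 11_590
for source, wpm in RATES_WPM.items():
    print(f"{source:45s} {reading_minutes(words, wpm):6.2f} min")
```

At 180, 200, 256, and 333 words per minute, that discussion takes about 64, 58, 45, and 35 minutes to read, which shows how strongly the estimate depends on the chosen rate.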

Across all six courses, the average reading time for a week’s threaded discussion was 64.39 minutes.

In trying to determine the amount of time students spend in participatory activity in an online, asynchronous course, this study limits itself to the quantifiable aspects of completed threaded discussions. Although the authors conclude that, for graduate courses addressing similar content, discussions can be expected to require approximately one hour of reading time each week, no determination is made regarding the time required to compose initial messages or responses within the discussion. The time spent composing initial posts and responses to classmates’ messages cannot be adequately measured with the data collected for this study, which address only the time spent reading the text of the discussion. However, the fact that across 21 sections of six courses the average weekly reading time stays between 50 and 76 minutes suggests a consistency that may be helpful to course developers.
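The reported range can also be read backwards, as discussion sizes. This sketch assumes the same 180-words-per-minute rate the study adopted; `words_for_minutes` is a hypothetical helper, and its inputs are only the reported range endpoints and overall mean, not per-section data.

```python
WPM = 180  # Ziefle's (1998) on-screen scanning rate, as adopted in the study


def words_for_minutes(minutes: float, wpm: float = WPM) -> float:
    """Invert the reading-time estimate: words that can be read in `minutes`."""
    return minutes * wpm


# Reported range and mean of average weekly reading time across the 21 sections.
low_min, high_min, mean_min = 50, 76, 64.39

for minutes in (low_min, high_min):
    print(f"{minutes} min of reading ~ {words_for_minutes(minutes):,.0f} words")

# Width of the range relative to the overall mean.
print(f"range / mean = {(high_min - low_min) / mean_min:.2f}")
```

The endpoints correspond to weekly discussions of roughly 9,000 and 13,680 words, and the range spans about 40% of the mean.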

Assuming it takes less than two hours to compose initial messages and responses to classmates, asynchronous threaded discussion used in this manner accounts for less than the three hours of ‘classroom time’ that are part of a traditional three-student-hour course. Moreover, because no campus-based, face-to-face course remains completely on task for a full three hours each week (one must allow for administrative activity at the beginning and end of a class session, as well as for breaks and divergent discussion during the class), it may be posited that asynchronous threaded discussion of the type studied here provides a reasonably similar experience in terms of time spent participating in classroom activity.
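The time-budget argument above is simple arithmetic on the study’s figures. This sketch assumes, as the text does, that one student hour corresponds to one hour of weekly meeting time, and it computes how many minutes remain for composing messages once the reported reading times are subtracted from a three-student-hour week; `composing_budget` is a hypothetical helper name.

```python
MINUTES_PER_STUDENT_HOUR = 60  # one student hour = one hour of weekly meeting time
STUDENT_HOURS = 3              # a traditional three-student-hour course


def composing_budget(weekly_reading_minutes: float,
                     student_hours: int = STUDENT_HOURS) -> float:
    """Minutes left for composing messages if weekly discussion participation
    (reading plus writing) is to fit within the course's weekly meeting time."""
    return student_hours * MINUTES_PER_STUDENT_HOUR - weekly_reading_minutes


# Reported average (64.39) and range endpoints (50, 76) of weekly reading time.
for reading in (64.39, 50, 76):
    print(f"reading {reading:5.2f} min -> composing budget "
          f"{composing_budget(reading):6.2f} min")
```

With the reported average of 64.39 minutes, about 115.6 minutes remain for composing, just under two hours; the endpoints of the reported range leave 130 and 104 minutes, respectively.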

This study is limited to two instructors’ use of threaded discussion in a variety of online courses that are part of programs of study in instructional technology. It would, therefore, be imprudent to generalize these findings beyond online graduate courses similar to those observed.

The research method employed here could be applied to larger and more diverse samples (e.g., undergraduate courses, a greater range of course content at the graduate and undergraduate levels, and a variety of instructors) to obtain results that could be generalized to the larger population of online postsecondary courses. Furthermore, greater consideration of the role reading level plays in determining reading time may be necessary.

The authors set out to answer the following questions:

It seems reasonable to assume the following given an asynchronous, threaded discussion prompt similar to those used in the online courses examined and with a class size between 11 and 27:

The results of this study suggest that threaded discussion activities used in online learning may be compared to more traditional, synchronous meetings in terms of the time necessary for weekly participation. Furthermore, this comparison is favorable: the two situations are on a par with each other. Although these findings are informative, further research is recommended in this area given that more and more institutions are developing and offering college-credit courses online. Increased attention to the time spent on online course activities relative to the student hours earned would allow a more direct focus on student characteristics, such as English language proficiency (e.g., non-native English speakers) and level of study (undergraduate versus graduate). Examining these characteristics, and how they might influence time spent on asynchronous discussions, could provide additional insights that benefit developers and instructors of online courses.

Anderson, B. (2006). Writing power into online discussion. Computers and Composition, 23, 108-124.

Bender, T. (2003). Discussion-based online teaching to enhance student learning: Theory, practice, and assessment. Sterling, VA: Stylus.

Bourne, J. R. (1998). Net-learning: Strategies for on-campus and off-campus network-enabled learning. Journal of Asynchronous Learning Networks, 2(2).

Brown, A., & Green, T. (2007, October). Three Hours a Week?: Determining the Time Students Spend in Online Participatory Activity. Paper presented at the 13th Annual Sloan-C International Conference on Online Learning, Orlando, FL.

Carver, R. P. (1985). Silent reading rates in grade equivalents. Journal of Reading Behavior, 21, 155-156.

Carver, R. P. (1990). Reading rate: A review of research and theory. San Diego, CA: Academic Press.

Cavanaugh, J. (2006). Comparing online time to offline time: The shocking truth. Distance Education Report, 10(9), 1-2, 6.

Dennen, V. (2007). Presence and positioning as components of online instructor persona. Journal of Research on Technology in Education, 40(1), 95-108.

Gerber, S., Scott, L., Clements, D. H., & Sarama, J. (2005). Instructor influence on reasoned argument in discussion boards. Educational Technology Research and Development, 53(2), 25-39.

Gunawardena, C. N., & Stock McIsaac, M. (2004). Distance education. In D. H. Jonassen (Ed.), Handbook of research on educational communications and technology (2nd ed., pp. 355-395). Mahwah, NJ: Lawrence Erlbaum Associates.

Hara, N., Bonk, C. J., & Angeli, C. (2000). Content analysis of online discussion in an applied educational psychology course. Instructional Science, 28, 115-152.

Harasim, L. (1987). Teaching and learning on-line: Issues in computer-mediated graduate courses. Canadian Journal of Educational Communication, 16(2), 117–135.

Lee, Y., & Nguyen, H. (2007). Get your degree from an educational ATM: An empirical study in online education. International Journal on E-Learning, 6(1), 31-40.

Marra, R. M., Moore, J. L., & Klimczak, A. K. (2004). Content analysis of online discussion forums: A comparative analysis of protocols. Educational Technology Research and Development, 52(2), 23-40.

Rourke, L., & Anderson, T. (2004). Validity in quantitative content analysis. Educational Technology Research and Development, 52(1), 5-18.

Schrire, S. (2006). Knowledge building in asynchronous discussion groups: Going beyond quantitative analysis. Computers & Education, 46, 49-70.

Shedd, J. M. (2003). The history of the student credit hour. New Directions for Higher Education, 122, 5-12.

Thompson, M. M. (1998). Distance learners in higher education. In C. G. Gibson (Ed.), Distance learners in higher education: Institutional responses for quality outcomes (pp. 9-24). Madison, WI: Atwood.

Vonderwell, S., Liang, X., & Alderman, K. (2007). Asynchronous discussion and assessment of online learning. Journal of Research on Technology in Education, 39(3), 309-328.

Vonderwell, S., & Sajit, Z. (2005). Factors that influence participation in online learning. Journal of Research on Technology in Education, 38(2), 213-230.

Wu, D., & Starr, R. H. (2004). Predicting learning from asynchronous online discussions. Journal of Asynchronous Learning Networks, 8(2). Retrieved from http://www.sloan-c.org/publications/jaln/v8n2/v8n2_wu.asp

Ziefle, M. (1998). Effects of display resolution on visual performance. Human Factors, 40(4), 555-568.