Volume 26, Number 1

Hongwei Yang, PhD1, Müslim Alanoğlu, PhD2, Songül Karabatak, PhD2, Jian Su, PhD3, and Kelly Bradley, PhD4

1University of West Florida; 2Fırat University; 3University of Tennessee; 4University of Kentucky

This study developed and validated the Turkish version of the Self-Directed Online Learning Scale (SDOLS-T) for assessing students’ perceptions of their self-directed learning (SDL) ability in an online environment. Specifically, the study conducted multiple categorical confirmatory factor analyses in two stages, accounting for the ordered categorical structure of the SDOLS-T data. The data came from a parent study which utilized the SDOLS-T and other instruments for data collection. Among the three competing models that the literature recommends examining to explain the shared variance of items in a survey, the stage 1 results showed that the correlated two-factor structure originally proposed for the SDOLS was also the best-fit model for the SDOLS-T. At stage 2, using the best-fit model from stage 1, measurement invariance analyses were conducted to examine the extent to which SDL under the SDOLS-T was understood and measured equivalently across the groups specified by four dichotomous demographic variables: gender, network connection, online learning experience, and grade. The stage 2 results indicate the SDOLS-T reached scalar invariance at least for gender and network connection, thus allowing the comparison of latent or manifest means, or any other scores (e.g., total scores, Rasch scores), across the groups defined by these two demographic variables. Overall, the findings support the SDOLS-T for use in facilitating educational practice (e.g., improving instructional design), advancing scholarly literature (e.g., investigating SDL measurement and content area issues), and informing policy/decision-making (e.g., increasing retention rates and reducing dropout) in online education in Turkey.

Keywords: self-directed learning, online teaching and learning, confirmatory factor analysis, ordered categorical data, measurement invariance

The literature of online education has identified fundamental characteristics of a successful online learning environment (Hone & Said, 2016). Among them is students’ self-directed learning (SDL) or self-management of learning, a consistent and essential characteristic recognized in online learning readiness and effectiveness (Prior et al., 2016). The literature indicates SDL supports students’ abilities to manage their overall learning activities, think critically, and cognitively monitor their learning performance when they navigate through the increasingly complex learning process. SDL contributes to the interaction and collaboration of students with their peers and instructors for feedback and support (Garrison, 1997; Kim et al., 2014).

As a core theoretical construct in adult education, SDL has been referred to as both a personal attribute and a process (Song & Hill, 2007). In a book published nearly 50 years ago, Knowles (1975) described SDL as adult students’ ability to self-manage their learning; his guide was a go-to book for adult students developing competencies in self-directed learning (Long, 1977). Caffarella (1993) outlined three principles underlying SDL: (a) self-initiated learning, (b) more learner autonomy, and (c) greater learner control. Hiemstra (1994) interpreted SDL as a process in which adult students could plan, navigate, and evaluate their learning on the path to their personal learning goals. In contrast, Garrison (1997) established a more comprehensive theoretical model of SDL which focused on the learning process containing both motivational and cognitive aspects of learning. This model integrated three overlapping learning dimensions: (a) motivation, (b) internal monitoring, and (c) external management. Regarding lifelong learning, SDL is a preferred learning process to help students stay current (Kidane et al., 2020). Some researchers further concluded that SDL and lifelong learning are related to such an extent that they each serve as the basis of the other (Tekkol & Demirel, 2018). Finally, students’ SDL ability may be influenced by their culture (Ahmad & Majid, 2010; Demircioğlu et al., 2018; Suh et al., 2015). Therefore, the measurement of SDL in a collectivist culture (e.g., Turkey) may be different from that in an individualistic culture (e.g., USA).

Research has been conducted on SDL in online education, a learning delivery modality becoming increasingly popular around the world largely due to the recent COVID-19 pandemic and rapid development of instructional technologies. Noting that SDL may function differently by learning context (e.g., online context), Song and Hill (2007) investigated and compared various contexts where self-direction in learning occurred. They concluded a better understanding of SDL characteristics unique to the online context supports better online education experiences. On the other hand, research supports online education as the right place for students to self-manage their learning, and students’ SDL capability is significantly associated with their online readiness, disposition, engagement, and eventually academic achievement (Balcı et al., 2021; Kara, 2022; Karatas & Arpaci, 2021; Ozer & Yukselir, 2021). It is widely believed that online education will continue to thrive globally (Abuhammad, 2020; Xie et al., 2020). Accordingly, self-directed online learning is anticipated to continue to generate interest among researchers worldwide.

Many instruments measuring SDL are based on Knowles’s andragogic theory (Cadorin et al., 2017) and have evolved into various contexts that include cultures and languages, fields of study, and student populations (Cadorin et al., 2013; Cheng et al., 2010; Jung et al., 2012). Comprehensive reviews of studies validating SDL instruments are available in Cadorin et al. (2017) and Sawatsky (2017).

Focusing on the online environment, the Self-Directed Online Learning Scale (SDOLS) was developed in English in the USA, validated by Su (2016), and subsequently re-validated by Yang et al. (2020). The 17-item instrument consists of two dimensions: autonomous learning (AUL; eight items) and asynchronous online learning (ASL; nine items). Respondents rate the items on a 5-point Likert scale that ranges from 1 = strongly disagree to 5 = strongly agree. All 17 items are positively worded, and a higher score on an item represents a higher level of the aspect of SDL measured by that item.

In Turkey, as in other countries, there has been a transition to online teaching and learning over the past few years due to the COVID-19 pandemic and the advancement of instructional technologies. In turn, it may be reasonable to anticipate more SDL-related research in Turkish because the online environment is an ideal place for students to self-manage their learning. Therefore, survey instruments with solid psychometric properties for measuring students’ SDL ability are expected to be in even greater need.

Presented in Table 1 are a few instruments used to measure SDL. These instruments have been identified from an extensive literature review, and it is noteworthy that many SDL-related studies in Turkish, including several which took place prior to the pandemic, have used these instruments. Table 1 shows: (a) the full name of the instrument, (b) its original developer, (c) the researchers who subsequently adapted the instruments to Turkish, if any, and (d) in which studies in Turkish the instruments were administered. These studies demonstrate the importance of SDL measurement to scholarly literature, including that of online education, in Turkish.

Although properly measuring SDL is critical in online education, there are problems with the existing SDL instruments. First, many such instruments are not designed specifically for the online teaching and learning context. In Table 1, for example, among the six instruments measuring SDL, only two serve the exclusive needs of online education: the Readiness for Online Learning Scale developed in Turkish by Yurdugül and Demir (2017) and the Online Learning Readiness Scale adapted to Turkish by İlhan and Çetin (2013) and Yurdugül and Alsancak Sırakaya (2013). Second, many existing studies validating SDL instruments (e.g., the three studies just cited) have inadequacies in their methodology. They have treated the almost always ordered categorical response data from these surveys as if they were continuous when examining the instruments’ psychometric properties. Such a practice, though still common, is known to result in unsatisfactory consequences, including the underestimation of the standard error and an inflated statistic, among others (Byrne, 2010; Kline, 2016). At the same time, very few of these studies investigated whether their instruments provided equivalent measures, for example, measurement invariance, per Millsap (2011), Putnick and Bornstein (2016), and Svetina et al. (2020), of SDL across groups created by demographic variables such as gender. Meaningful comparisons of statistical measures, such as scale or subscale means, from across different groups should not be made until an appropriate level of measurement invariance is achieved.

Table 1

Self-Directed Learning Instruments Used in Turkish Studies

| Instrument | Developer(s) | Adaptation to Turkish | Administered in Turkish studies |
| --- | --- | --- | --- |
| Readiness for Online Learning Scale | Yurdugül & Demir (2017) | NA | Karatas & Arpaci (2021) |
| Self-Directed Learning Skills Scale | Aşkın Tekkol & Demirel (2018); Aşkın (2015) | NA | Karagülle & Berkant (2022); Peker Ünal (2022); Karatas & Arpaci (2021); Tekkol & Demirel (2018) |
| Self-Directed Learning Inventory | Suh et al. (2015) | Çelik & Arslan (2016) | Demircioğlu et al. (2018) |
| Online Learning Readiness Scale | Hung et al. (2010) | İlhan & Çetin (2013); Yurdugül & Alsancak Sırakaya (2013) | Kara (2022); Ates Cobanoglu & Cobanoglu (2021); Balcı et al. (2021) |
| Self-Directed Learning Scale | Lounsbury et al. (2009); Lounsbury & Gibson (2006) | Demircioğlu et al. (2018) | Ozer & Yukselir (2021); Durnali (2020); Saritepeci & Orak (2019) |
| Self-Directed Learning Readiness Scale | Fisher et al. (2001); Guglielmino (1977) | Kocaman et al. (2006) | Ahmad et al. (2019); Ertuğ & Faydali (2018); Ünsal Avdal (2013) |

Because online education is expected to continue to thrive in Turkey (Daily Sabah, 2021; Polat et al., 2022; Republic of Turkey Ministry of National Education, n.d.), this study is significant in that, by adapting the SDOLS to the Turkish language, it provides another tool for measuring SDL dedicated to online education that may be useful to the work of researchers, instructional designers, and policy- and decision-makers in Turkey. Ideally, the SDOLS-T is properly validated with regard to its psychometric properties based on the appropriate methodology capable of addressing the inadequacies outlined earlier in this section.

The study validated the SDOLS-T for its psychometric properties including measurement invariance describing the extent to which SDL under the SDOLS-T is understood and measured equivalently across the groups specified by demographic variables (Svetina et al., 2020). Specifically, the study addressed two research questions:

RQ1. What is the best-fit factor structure of the SDOLS-T: that of the original SDOLS or common alternative structures from the literature?

RQ2. To what extent does the best-fit factor structure underlying the SDOLS-T measure the same construct across the groups created by several demographic variables measured in the study: (a) gender, (b) network connection, (c) previous online learning experience, and (d) grade level?

When addressing these questions, the study provided insights into Turkish university students’ SDL.

As part of a parent study reviewed and approved by the university research ethics committee, the study investigated the psychometric properties of the SDOLS-T. To adapt the SDOLS to the SDOLS-T, permission was first secured from the developer of the SDOLS for its adaptation using the back-translation method (Brislin, 1970). Next, all 17 items were translated into Turkish to derive the initial draft of the SDOLS-T which was reviewed by the university’s faculty members in education. With the feedback from the education faculty, the initial SDOLS-T instrument was revised and next translated back into English (SDOLS-T-E). The SDOLS-T-E instrument was reviewed by the SDOLS developer for consistency in meaning with the SDOLS instrument. Further revisions were made to the SDOLS-T based on the feedback on the SDOLS-T-E, which led to the final SDOLS-T instrument. Similar to the SDOLS, the SDOLS-T has 17 positively-worded items in two dimensions: autonomous learning (AUL: eight items) and asynchronous online learning (ASL: nine items), and each item has five Likert response options ranging from 1 = strongly disagree to 5 = strongly agree.

The participants were 1,989 undergraduate students enrolled in the Faculty of Education of a Turkish university in the 2020–2021 academic year who were recruited into the larger-scale parent study. The data used here were collected from these undergraduate students between February and April 2021 as part of the parent study. The data contain 332 completed responses to all 17 SDOLS-T items plus four dichotomous demographic items. The data were used to run several confirmatory factor analyses (CFAs) to assess the underlying factor structure of the SDOLS-T and identify psychometric evidence for the instrument.

Table 2 presents descriptive statistics of the student respondents focusing on the demographic variables outlined in RQ2. About two thirds of the student participants were female and an even higher proportion had had no online learning experience. The percentage of students accessing the course using a smart device was slightly higher than that of students using a PC. Finally, the split was about even between higher (third- and fourth-year undergraduates) and lower (first- and second-year undergraduates) grade students.

Table 2

Demographic Characteristics of Participants

| Demographic variable | Category | n | % |
| --- | --- | --- | --- |
| Gender | Female | 224 | 67.5 |
| | Male | 108 | 32.5 |
| Network connection | PC | 150 | 45.2 |
| | Smart device | 182 | 54.8 |
| Online learning experience | No | 243 | 73.2 |
| | Yes | 89 | 26.8 |
| Grade | Higher | 159 | 47.9 |
| | Lower | 173 | 52.1 |

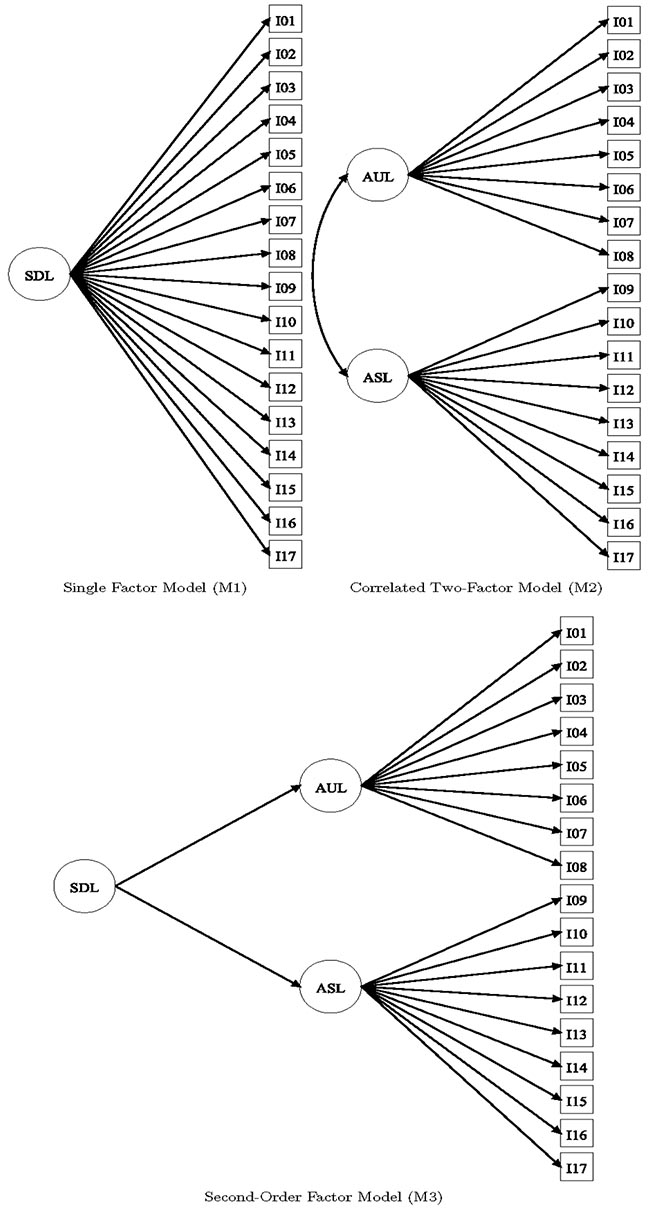

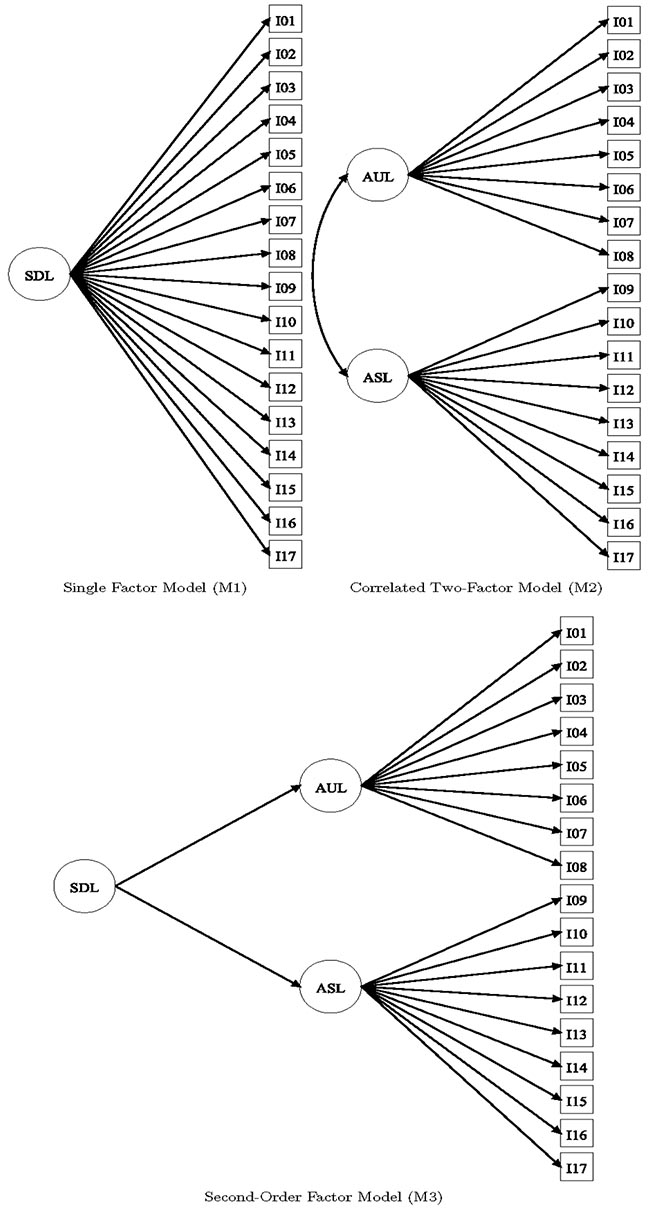

The SDOLS-T was validated in two stages, and CFA served as the analytical framework in both instances. Stage 1 for RQ1 used CFA to assess three competing model structures (Figure 1) which the methodological literature recommends should be examined because all are designed to explain the shared variance between items in a survey instrument (Gignac & Kretzschmar, 2017). These three models were: (a) the single factor model (M1); (b) the correlated two-factor model [M2, original structure from Su (2016)]; and (c) the second-order factor model (M3). With the best-fit model from stage 1, stage 2 for RQ2 further assessed the structure for levels of measurement invariance across the groups specified by each of the four demographic variables: (a) gender, (b) network connection, (c) online learning experience, and (d) grade.

Figure 1

Three Competing Factor Structures for the SDOLS-T in Stage 1

Note. SDL = self-directed learning; AUL = autonomous learning; ASL = asynchronous online learning; I = Item.
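As a rough illustration of how the three structures in Figure 1 differ, the sketch below builds model specifications in lavaan-style model syntax, where `=~` reads "is measured by" and `~~` denotes a covariance. The item labels `I1` to `I17` and the assignment of the first eight items to AUL and the remaining nine to ASL are assumptions made only for illustration; the actual item-to-factor mapping is the one shown in Figure 1. The Python code only assembles the text of each specification and does not fit any model.

```python
# Hypothetical item labels I1..I17. The split below (first eight items on AUL,
# last nine on ASL) is an assumption for illustration, not the published mapping.
ITEMS = [f"I{i}" for i in range(1, 18)]
AUL_ITEMS, ASL_ITEMS = ITEMS[:8], ITEMS[8:]


def measured_by(factor, indicators):
    """Return one lavaan-style measurement line: factor =~ ind1 + ind2 + ..."""
    return f"{factor} =~ " + " + ".join(indicators)


# M1: a single SDL factor measured by all 17 items.
M1 = measured_by("SDL", ITEMS)

# M2: two correlated first-order factors (AUL and ASL).
M2 = "\n".join([
    measured_by("AUL", AUL_ITEMS),
    measured_by("ASL", ASL_ITEMS),
    "AUL ~~ ASL",  # factor covariance
])

# M3: a second-order SDL factor measured by the two first-order factors.
# A second-order factor with only two indicators needs extra identification
# constraints; the text does not state which were used, so none are shown.
M3 = "\n".join([
    measured_by("AUL", AUL_ITEMS),
    measured_by("ASL", ASL_ITEMS),
    measured_by("SDL", ["AUL", "ASL"]),
])

if __name__ == "__main__":
    for name, spec in (("M1", M1), ("M2", M2), ("M3", M3)):
        print(f"# {name}\n{spec}\n")
```

The only difference between M2 and M3 in this sketch is the last line: M2 lets the two factors covary freely, whereas M3 explains their association through a higher-order factor.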

At stage 1 for RQ1, commonly used model fit statistics were examined to assess the three competing structures: χ2 test and alternative fit indices (AFIs) including the comparative fit index (CFI), the root mean square error of approximation (RMSEA), the standardized root mean square residual (SRMR), and the Tucker-Lewis index (TLI). At stage 2 for RQ2, the changes in these fit statistics (i.e., χ2 difference test and changes in the AFIs) were assessed to compare a hierarchy of two models, with one being nested within the other through model structure or parameter (equality) constraints at a certain level of measurement invariance.

In particular, regarding stage 2, given different levels of measurement invariance (Sass, 2011), this study investigated whether the SDOLS-T could reach scalar invariance, that is, strong (factorial) invariance, under each demographic variable. Scalar invariance requires three levels of invariance be retained simultaneously across the groups: (a) identical model structure or configural invariance, though all model parameters are still allowed to differ across groups, (b) equal thresholds for ordered categorical item response data or equal intercepts for continuous item response data, and (c) equal factor loadings. Only when scalar invariance holds can the comparison of groups be conducted regarding the latent factor or manifest means, or any other scores (e.g., total scores, Rasch scores; Putnick & Bornstein, 2016; Sass, 2011; Svetina et al., 2020; Thompson & Green, 2013). As for the ordering of testing invariance hypotheses, Wu and Estabrook (2016) argued that, for ordered categorical data, model identification would be more complex due to the presence of threshold parameters and recommended that, when the data contains more than two categories, threshold invariance be assessed ahead of the invariance of other parameters. This recommendation was implemented in previous studies (e.g., Svetina et al., 2020). When analyzing the SDOLS-T data, the study took the same recommended approach and, after establishing configural invariance, proceeded to test threshold invariance before assessing loading invariance.
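The sequence of nested models can be made concrete by counting degrees of freedom. The sketch below is a bookkeeping exercise, not the estimation itself, and it rests on one reading of the Wu and Estabrook (2016) identification conditions that should be treated as an assumption: in the configural model each group has free thresholds, loadings, and a factor correlation, with factor variances fixed; imposing equal thresholds frees one intercept and one scale parameter per item in the second group; imposing equal loadings frees the factor variances in the second group. Under these assumptions, the counts for 17 five-category items, two factors, and two groups agree with the df values reported for this study in Table 4 (236, 270, 285).

```python
N_ITEMS = 17        # SDOLS-T items
N_CATEGORIES = 5    # Likert response options per item
N_FACTORS = 2       # AUL and ASL
N_GROUPS = 2        # each demographic variable is dichotomous

THRESHOLDS_PER_ITEM = N_CATEGORIES - 1  # four thresholds per item


def configural_df():
    """df of the configural model under the assumed identification."""
    # Sample statistics per group: thresholds plus polychoric correlations.
    n_thresholds = N_ITEMS * THRESHOLDS_PER_ITEM
    n_correlations = N_ITEMS * (N_ITEMS - 1) // 2
    statistics = n_thresholds + n_correlations
    # Free parameters per group: thresholds, loadings, factor correlation(s).
    free = n_thresholds + N_ITEMS + N_FACTORS * (N_FACTORS - 1) // 2
    return N_GROUPS * (statistics - free)


def threshold_delta_df():
    """Net df gained by constraining thresholds to be equal across groups."""
    constraints = (N_GROUPS - 1) * N_ITEMS * THRESHOLDS_PER_ITEM
    # Assumed: one intercept and one scale parameter freed per item.
    freed = (N_GROUPS - 1) * N_ITEMS * 2
    return constraints - freed


def loading_delta_df():
    """Net df gained by additionally constraining loadings to be equal."""
    constraints = (N_GROUPS - 1) * N_ITEMS
    # Assumed: factor variances freed in the non-reference group.
    freed = (N_GROUPS - 1) * N_FACTORS
    return constraints - freed


if __name__ == "__main__":
    df_configural = configural_df()
    df_threshold = df_configural + threshold_delta_df()
    df_loading = df_threshold + loading_delta_df()
    print(df_configural, df_threshold, df_loading)
```

Each step adds equality constraints but also releases parameters that were fixed only for identification, which is why the net change in df is smaller than the raw number of constraints.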

Finally, regarding the software for CFA, the study used the lavaan and the semTools packages in R; the former is capable of handling ordered categorical data through a weighted least squares (WLS) estimator (Jorgensen et al., 2022; Rosseel, 2012). Stage 1 used the lavaan package only. In stage 2, when conducting a sequence of hierarchical tests to impose increasingly more restrictive equality constraints on CFA parameters across each pair of groups, the semTools::measEq.syntax() function served to automatically generate the lavaan model syntax and implement the model identification and invariance constraints by Wu and Estabrook (2016) under the δ-parameterization (Svetina et al., 2020). The models were next estimated by the lavaan package. In the end, to compare two nested models when testing an invariance hypothesis, the lavaan::lavTestLRT() function was used.

The results from the two stages of analyses are outlined next. Notably, the cutoff values used here for assessing model fit at both stages were traditionally designed for the normal-theory maximum likelihood estimation with continuous data. By contrast, this study implemented a WLS estimator with ordered categorical data. Although there exist known methodological issues regarding applying these traditional cutoffs in a research context such as this, the practice has been widely accepted in the literature and will continue until better alternatives are proposed and established (Xia & Yang, 2019).

The model fit statistics of M1 through M3 are presented in Table 3 (AFIs only). All three models demonstrated an adequate fit on CFI and TLI because they were close to the upper limit of 1.00 for a perfect fit. M2 performed the best on both criteria. Regarding SRMR, only M2 and M1 were lower than the cutoff of .080 for a good fit with M2 being the lowest. Finally, regarding RMSEA, M2 had the lowest value of .0533, also lower than the cutoff of .08 for an adequate fit (Byrne, 2010; MacCallum et al., 1996; West et al., 2012). Evidently, out of the three models, the correlated two-factor structure (M2) demonstrated the best fit as assessed by the highest values of CFI and TLI and the lowest values of RMSEA and SRMR.

Table 3

Results of Confirmatory Factor Analyses of Competing SDOLS-T Model Structures

| Model | χ2 | df | RMSEA | CFI | SRMR | TLI |
| --- | --- | --- | --- | --- | --- | --- |
| Single Factor Model (M1) | 488.11** | 187 | .0697 | .9772 | .0743 | .9834 |
| Two-Factor Model (M2) | 360.96** | 186 | .0533 | .9867 | .0647 | .9903 |
| Second-Order Factor Model (M3) | 925.14** | 187 | .1092 | .9441 | .1078 | .9593 |

Note. SDOLS-T = Turkish Version of the Self-Directed Online Learning Scale; RMSEA = root mean square error of approximation; CFI = comparative fit index; SRMR = standardized root mean square residual; TLI = Tucker-Lewis index.

**p < .01.
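To show how RMSEA relates to the other columns of Table 3, the sketch below applies the usual single-group point estimate, the square root of max(χ2 − df, 0) / (df × (N − 1)), to the reported χ2 and df values with N = 332. Using N − 1 rather than N in the denominator is an assumption about how the reported values were computed; with that choice the formula agrees with the tabled RMSEA values to about four decimal places. The function name `rmsea` is illustrative and is not part of any package.

```python
import math

N = 332  # number of completed responses reported in the study


def rmsea(chi_square, df, n):
    """Single-group RMSEA point estimate from a model chi-square and its df."""
    # The N - 1 denominator is an assumption; some software uses N instead.
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


# (chi-square, df) pairs as reported in Table 3.
TABLE_3 = {
    "M1": (488.11, 187),
    "M2": (360.96, 186),
    "M3": (925.14, 187),
}

if __name__ == "__main__":
    for model, (chi_square, df) in TABLE_3.items():
        print(model, round(rmsea(chi_square, df, N), 4))
```

Because the three models have nearly identical df, their ranking on RMSEA follows their ranking on χ2 almost exactly.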

The results of the measurement invariance analyses of the four demographic variables are presented in Table 4. Each measurement invariance analysis for one demographic variable was based on the sequence of three models (configural invariance/identical structure, equal threshold, and equal loading) proposed in Wu and Estabrook (2016) and implemented in, for example, Svetina et al. (2020). Referring to Table 4, at the configural invariance level, the four models from the four demographic variables all demonstrated an adequate fit as measured by RMSEA, CFI, SRMR, and TLI, even though they all had a statistically significant χ2 test. Next, at the equal threshold level, all four χ2 difference tests were statistically nonsignificant (Δp ranged from .0879 to .4858), indicating that the equal threshold constraints did not significantly decrease the fit of each model. The changes in all AFIs were minimal, appearing only at the third decimal place, suggesting no significantly worse fit from the equal threshold constraints (Chen, 2007; Cheung & Rensvold, 2002). Finally, at the equal loading level, two of the four χ2 difference tests were statistically nonsignificant (gender and network connection), while the others were significant (online learning experience and grade), even though none of the changes in the AFIs indicated any significant decrease in model fit. In summary, scalar invariance was achieved for gender and network connection. For online learning experience and grade, scalar invariance was supported by all AFIs, but not by the χ2 difference test.

Table 4

Model Fit Statistics for Measurement Invariance Assessment Under Each of the Four Demographic Variables

| Demographic variable | Equality constraints | χ2 | df | Δχ2 | Δdf | RMSEA | ΔRMSEA | CFI | ΔCFI | SRMR | ΔSRMR | TLI | ΔTLI |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Gender | Configural | 572.68** | 236 | | | .0930 | | .9776 | | .0811 | | .9742 | |
| | Threshold | 595.90** | 270 | 42.629 | 34 | .0855 | -.0075 | .9783 | .0007 | .0811 | .0000 | .9781 | .0039 |
| | Loading | 635.78** | 285 | 22.001 | 15 | .0864 | .0009 | .9766 | -.0017 | .0820 | .0009 | .9777 | -.0004 |
| Network connection | Configural | 565.78** | 236 | | | .0920 | | .9777 | | .0803 | | .9743 | |
| | Threshold | 590.19** | 270 | 45.626 | 34 | .0848 | -.0072 | .9783 | .0006 | .0803 | .0000 | .9782 | .0039 |
| | Loading | 632.15** | 285 | 24.229 | 15 | .0859 | .0011 | .9765 | -.0018 | .0812 | .0009 | .9776 | -.0006 |
| Online learning experience | Configural | 580.54** | 236 | | | .0941 | | .9770 | | .0792 | | .9735 | |
| | Threshold | 604.48** | 270 | 44.321 | 34 | .0866 | -.0075 | .9776 | .0006 | .0792 | .0000 | .9775 | .0040 |
| | Loading | 677.84** | 285 | 36.952** | 15 | .0914 | .0048 | .9737 | -.0039 | .0803 | .0011 | .9749 | -.0026 |
| Grade | Configural | 574.55** | 236 | | | .0932 | | .9781 | | .0796 | | .9747 | |
| | Threshold | 590.91** | 270 | 33.627 | 34 | .0849 | -.0083 | .9792 | .0011 | .0796 | .0000 | .9791 | .0044 |
| | Loading | 663.61** | 285 | 38.965** | 15 | .0897 | .0048 | .9755 | -.0037 | .0819 | .0023 | .9766 | -.0025 |

Note. RMSEA = root mean square error of approximation; CFI = comparative fit index; SRMR = standardized root mean square residual; TLI = Tucker-Lewis index.

**p < .01.
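The Δ columns in Table 4 are simple differences between each constrained model and the model one step less restrictive. The sketch below recomputes them for the gender rows and compares ΔCFI and ΔRMSEA with cutoffs often attributed to Chen (2007) and Cheung and Rensvold (2002): a drop in CFI of .010 or more, or a rise in RMSEA of .015 or more, as a sign of worse fit. These cutoff values are an assumption here, since the text does not state which thresholds were applied, and the function names are illustrative only.

```python
# Fit indices for the gender models, copied from Table 4.
GENDER_FIT = {
    "configural": {"RMSEA": 0.0930, "CFI": 0.9776, "SRMR": 0.0811, "TLI": 0.9742},
    "threshold": {"RMSEA": 0.0855, "CFI": 0.9783, "SRMR": 0.0811, "TLI": 0.9781},
    "loading": {"RMSEA": 0.0864, "CFI": 0.9766, "SRMR": 0.0820, "TLI": 0.9777},
}


def afi_changes(restricted, baseline):
    """Change in each fit index when moving from baseline to restricted."""
    return {name: round(restricted[name] - baseline[name], 4) for name in baseline}


def worse_fit_flags(changes, cfi_drop=0.010, rmsea_rise=0.015):
    """Flag a meaningful loss of fit; the default cutoffs are assumed values."""
    return {
        "CFI": changes["CFI"] <= -cfi_drop,
        "RMSEA": changes["RMSEA"] >= rmsea_rise,
    }


if __name__ == "__main__":
    steps = [("threshold", "configural"), ("loading", "threshold")]
    for restricted, baseline in steps:
        changes = afi_changes(GENDER_FIT[restricted], GENDER_FIT[baseline])
        print(restricted, changes, worse_fit_flags(changes))
```

For the gender models, neither step is flagged under these assumed cutoffs, which is consistent with the interpretation of the AFI changes given in the text.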

The study validated the SDOLS-T instrument, a Turkish version of the original SDOLS instrument developed to measure students’ ability to take charge of their online learning. From WLS-based confirmatory factor analyses using the ordered categorical data from a sample of 332 undergraduate students majoring in education in a Turkish university, the study found psychometric evidence for the new instrument and assessed the extent to which the SDOLS-T functioned equivalently for four demographic groups: gender, network connection, online learning experience, and grade.

Regarding the first research question on the fit of each competing model to the data, the study examined three competing model structures for the SDOLS-T suggested by the methodological literature, using commonly used model fit indices. All three models were statistically significant on the χ2 test. Regarding the four AFI statistics, the correlated two-factor model (M2) was unanimously the best model; the other two were less satisfactory on either one (RMSEA in the case of M1) or two (SRMR and RMSEA in the case of M3) of the four AFIs. In summary, the findings from stage 1 confirm that the same factor structure proposed by Su (2016) for the SDOLS also applies to the SDOLS-T.

Regarding the second research question on the extent to which the SDOLS-T measures the same construct across the groups created by the four demographic variables, a measurement invariance approach was taken, where both the χ2 difference test and the changes in AFIs were used to compare pairs of nested models. The four AFIs supported up to the scalar invariance of the SDOLS-T across the four demographic group pairs. Additionally, for gender and network connection only, scalar invariance was also supported by the χ2 difference test. In summary, the measurement invariance analyses support the comparison of the SDOLS-T latent factor, manifest means, or other scores across groups specified by gender and network connection. As for groups defined by online learning experience and grade, any such comparison should proceed with caution. To compare the latent factor means when an appropriate level of measurement invariance is not satisfied, one recommended approach from the literature is to compare the groups on relevant statistics with and without imposing the equality constraints which have been tested to be invalid. If the discrepancies in model parameter estimates are small, comparing groups may be justified (Chen, 2008; Schmitt & Kuljanin, 2008).

The study examined the psychometric properties of the SDOLS-T for measuring Turkish university students’ ability to take charge of their learning in an online environment. First, through a comparison of three competing model structures which were all designed to explain the shared variance between the items of a survey instrument, the study concluded that the SDOLS-T has the same underlying structure as the original SDOLS instrument in English, with eight items measuring autonomous learning and the other nine measuring asynchronous online learning. Next, through measurement invariance analyses, the study concluded that the SDOLS-T allows the comparison of latent or manifest means, or any other scores (e.g., total scores, Rasch scores), across groups defined by gender and network connection where scalar invariance is unanimously supported by all fit statistics used in the study. The study briefly describes an approach from the literature for comparing those scores of groups defined by online learning experience and grade where scalar invariance is supported by AFIs only.

The study has implications for educational practice, research, and policy and decision-making in higher education in Turkey. First, for educational practice, instructional designers in Turkish colleges and universities may use the SDOLS-T as a diagnostic tool to measure and assess online students’ readiness and identify those whose SDL ability is relatively low before adjusting course designs to improve students’ self-directed skills and subsequently the chance of their success in online learning (Edmondson et al., 2012; Khiat, 2015; Tekkol & Demirel, 2018). Second, for research, the study offers a new instrument in Turkish for assessing students’ SDL ability in online learning. The instrument may be further validated for more research contexts in Turkey (e.g., Turkish students in secondary education), or serve as an outside criterion with which other instruments measuring theoretically-related constructs are correlated for evidence of construct validity (Demircioğlu et al., 2018). The instrument may also be used in SDL-related content area research in online education to characterize environments which are effective in helping students acquire and advance their SDL skills and/or to explore how students’ SDL skills are related to their academic success, desire for further academic pursuits and lifelong learning, and prospects of employability (Ahmad et al., 2019; Tekkol & Demirel, 2018). Third, for policy- and decision-making, the data collected using the SDOLS-T may help higher education administrators in Turkey better understand how their students’ SDL ability is related to their engagement and success in online courses, to the completion of online programs under the university-provided online learning management systems, and to students’ readiness for the job market. 
Accordingly, administrators may become better informed when making policies to more effectively reduce dropout and increase retention rates and designing strategies and tasks to better prepare students to become more SDL-capable for their prospective employers (Ahmad et al., 2019; Schulze, 2014; Sun et al., 2022).

Finally, the successful adaptation of the SDOLS instrument into the SDOLS-T in the Turkish setting may serve as evidence that the SDOLS has a high level of cultural sensitivity, which may readily enable its use in multicultural settings. Thus, it is reasonable to anticipate that the successful adaptation here may inspire similar SDOLS-related scale validation studies in other languages. Further, in large-scale, cross-cultural research, the availability of the instrument in multiple languages would allow the survey to be filled out by participants from around the world in their native languages. With the collected survey data analyzed using the measurement invariance method, it would be possible to investigate the cultural universality of the SDL construct and of its components or aspects as measured by individual survey items (e.g., those of the SDOLS-T).

This study is not without limitations, which could serve as future research directions. First, even though the original SDOLS was validated through Rasch modeling (Bond & Fox, 2015; Yang et al., 2020), the study here took a CFA approach under the structural equation modeling framework instead. Therefore, a possible extension of the study is to apply Rasch modeling to the SDOLS-T for additional psychometric evidence. Under Rasch modeling, differential item functioning may be used to assess the lack of measurement invariance (i.e., noninvariance) of SDOLS-T items, if any (Kim & Yoon, 2011; Meade et al., 2007). Second, the study did not assess the longitudinal invariance of the SDOLS-T. If students’ level of SDL ability evolves substantially over time (e.g., over years), ceiling or floor effects may occur later in life. Accordingly, SDOLS-T items may need to be updated to adjust for students’ behavioral changes. Besides, if the underlying meaning of SDL also evolves (e.g., the way students self-manage their learning changes over time as the field of online education is rapidly developing), the SDOLS-T instrument may need revisions as well to ensure it is still the construct of SDL that is measured (Chen, 2008; Widaman et al., 2010). Therefore, another possible extension of the study is to examine the longitudinal invariance of the SDOLS-T to assess its continued validity over time (Millsap & Cham, 2013). Finally, because any sign of item noninvariance contributing to model misfit is concerning in terms of item quality (Sass, 2011), identifying individual noninvariant items by conducting item level analyses could be a future extension of the existing study. Such analyses are usually guided by the modification indices suggesting which items could be freed to improve model fit, thus leading to partial measurement invariance at a certain level (Schmitt & Kuljanin, 2008).

Abuhammad, S. (2020). Barriers to distance learning during the COVID-19 outbreak: A qualitative review from parents’ perspective. Heliyon, 6(11), Article e05482. https://doi.org/10.1016/j.heliyon.2020.e05482

Ahmad, B. E., & Majid, F. A. (2010). Self-directed learning and culture: A study on Malay adult learners. Procedia - Social and Behavioral Sciences, 7, 254-263. https://doi.org/10.1016/j.sbspro.2010.10.036

Ahmad, B. E., Ozturk, M., Baharum, M. A. A., & Majid, F. A. (2019). A comparative study on the relationship between self-directed learning and academic achievement among Malaysian and Turkish undergraduates. Gading Journal for Social Sciences, 21(1), 1-11. https://ir.uitm.edu.my/id/eprint/29240/1/29240.pdf

Aşkın, İ. (2015). An investigation of self-directed learning skills of undergraduate students [Unpublished doctoral dissertation]. Hacettepe University.

Aşkın Tekkol, İ., & Demirel, M. (2018). Self-Directed Learning Skills Scale: Validity and reliability study. Journal of Measurement and Evaluation in Education and Psychology, 9(2), 85-100. https://doi.org/10.21031/epod.389208

Ates Cobanoglu, A., & Cobanoglu, I. (2021). Do Turkish student teachers feel ready for online learning in post-COVID times? A study of online learning readiness. Turkish Online Journal of Distance Education, 22(3), 270-280. https://doi.org/10.17718/tojde.961847

Balcı, T., Temiz, C. N., & Sivrikaya, A. H. (2021). Transition to distance education in COVID-19 period: Turkish pre-service teachers’ e-learning attitudes and readiness levels. Journal of Educational Issues, 7(1), 296-323. https://doi.org/10.5296/jei.v7i1.18508

Bond, T. G., & Fox, C. M. (2015). Applying the Rasch model (3rd ed.). Routledge.

Brislin, R. W. (1970). Back-translation for cross-cultural research. Journal of Cross-Cultural Psychology, 1(3), 185-216. https://doi.org/10.1177/135910457000100301

Byrne, B. M. (2010). Structural equation modeling with AMOS (2nd ed.). Routledge.

Cadorin, L., Bortoluzzi, G., & Palese, A. (2013). The self-rating scale of self-directed learning (SRSSDL): A factor analysis of the Italian version. Nurse Education Today, 33(12), 1511-1516. https://doi.org/10.1016/j.nedt.2013.04.010

Cadorin, L., Bressan, V., & Palese, A. (2017). Instruments evaluating the self-directed learning abilities among nursing students and nurses: A systematic review of psychometric properties. BMC Medical Education, 17, Article 229. https://doi.org/10.1186/s12909-017-1072-3

Caffarella, R. S. (1993). Self-directed learning. New Directions for Adult and Continuing Education, 57, 25-35. https://doi.org/10.1002/ace.36719935705

Çelik, K., & Arslan, S. (2016). Turkish adaptation and validation of Self-Directed Learning Inventory. International Journal of New Trends in Arts, Sports & Science Education (IJTASE), 5(1), 19-25. http://www.ijtase.net/index.php/ijtase/article/view/207

Chen, F. F. (2007). Sensitivity of goodness of fit indexes to lack of measurement invariance. Structural Equation Modeling: A Multidisciplinary Journal, 14(3), 464-504. https://doi.org/10.1080/10705510701301834

Chen, F. F. (2008). What happens if we compare chopsticks with forks? The impact of making inappropriate comparisons in cross-cultural research. Journal of Personality and Social Psychology, 95(5), 1005-1018. https://doi.org/10.1037/a0013193

Cheng, S.-F., Kuo, C.-L., Lin, K.-C., & Lee-Hsieh, J. (2010). Development and preliminary testing of a self-rating instrument to measure self-directed learning ability of nursing students. International Journal of Nursing Studies, 47(9), 1152-1158. https://doi.org/10.1016/j.ijnurstu.2010.02.002

Cheung, G. W., & Rensvold, R. B. (2002). Evaluating goodness-of-fit indexes for testing measurement invariance. Structural Equation Modeling: A Multidisciplinary Journal, 9(2), 233-255. https://doi.org/10.1207/S15328007SEM0902_5

Daily Sabah. (2021, March 20). Turkey’s remote education is project for future: Minister Selçuk. https://www.dailysabah.com/turkey/education/turkeys-remote-education-is-project-for-future-minister-selcuk

Demircioğlu, Z. I., Öge, B., Fuçular, E. E., Çevik, T., Nazligül, M. D., & Özçelik, E. (2018). Reliability, validity and Turkish adaptation of Self-Directed Learning Scale (SDLS). International Journal of Assessment Tools in Education, 5(2), 235-247. https://doi.org/10.21449/ijate.401069

Durnali, M. (2020). The effect of self-directed learning on the relationship between self-leadership and online learning among university students in Turkey. Tuning Journal for Higher Education, 8(1), 129-165. https://doi.org/10.18543/tjhe-8(1)-2020pp129-165

Edmondson, D. R., Boyer, S. L., & Artis, A. B. (2012). Self-directed learning: A meta-analytic review of adult learning constructs. International Journal of Educational Research, 7(1), 40-48. http://debdavis.pbworks.com/w/file/fetch/96898755/edmondson%20boyer%20artis%20--%20selfdirected%20learning%20a%20meta-analytic%20review.pdf

Ertuğ, N., & Faydali, S. (2018). Investigating the relationship between self-directed learning readiness and time management skills in Turkish undergraduate nursing students. Nursing Education Perspectives, 39(2), E2-E5. https://doi.org/10.1097/01.NEP.0000000000000279

Fisher, M., King, J., & Tague, G. (2001). Development of a self-directed learning readiness scale for nursing education. Nurse Education Today, 21(7), 516-525. https://doi.org/10.1054/nedt.2001.0589

Garrison, D. R. (1997). Self-directed learning: Toward a comprehensive model. Adult Education Quarterly, 48(1), 18-33. https://doi.org/10.1177/074171369704800103

Gignac, G. E., & Kretzschmar, A. (2017). Evaluating dimensional distinctness with correlated-factor models: Limitations and suggestions. Intelligence, 62, 138-147. https://doi.org/10.1016/j.intell.2017.04.001

Guglielmino, L. M. (1977). Development of the Self-Directed Learning Readiness Scale [Unpublished doctoral dissertation]. University of Georgia.

Hiemstra, R. (1994). Self-directed learning. In T. Husen & T. N. Postlethwaite (Eds.), The International Encyclopedia of Education (2nd ed.). Pergamon Press.

Hone, K. S., & El Said, G. R. (2016). Exploring the factors affecting MOOC retention: A survey study. Computers & Education, 98, 157-168. https://doi.org/10.1016/j.compedu.2016.03.016

Hung, M.-L., Chou, C., Chen, C.-H., & Own, Z.-Y. (2010). Learner readiness for online learning: Scale development and student perceptions. Computers & Education, 55(3), 1080-1090. https://doi.org/10.1016/j.compedu.2010.05.004

İlhan, M., & Çetin, B. (2013). Çevrimiçi Öğrenmeye Yönelik Hazır Bulunuşluk Ölçeği’nin (ÇÖHBÖ) Türkçe Formunun Geçerlik ve Güvenirlik Çalışması [The validity and reliability study of the Turkish version of an Online Learning Readiness Scale]. Eğitim Teknolojisi Kuram ve Uygulama, 3(2), 72-101. https://dergipark.org.tr/tr/pub/etku/issue/6269/84216

Jorgensen, T. D., Pornprasertmanit, S., Schoemann, A. M., & Rosseel, Y. (2022). semTools: Useful tools for structural equation modeling (R package version 0.5-6) [Computer software]. https://CRAN.R-project.org/package=semTools

Jung, O. B., Lim, J. H., Jung, S. H., Kim, L. G., & Yoon, J. E. (2012). The development and validation of a self-directed learning inventory for elementary school students. The Korean Journal of Human Development, 19(4), 227-245.

Kara, M. (2022). Revisiting online learner engagement: Exploring the role of learner characteristics in an emergency period. Journal of Research on Technology in Education, 54(sup1), S236-S252. https://doi.org/10.1080/15391523.2021.1891997

Karatas, K., & Arpaci, I. (2021). The role of self-directed learning, metacognition, and 21st century skills predicting the readiness for online learning. Contemporary Educational Technology, 13(3), Article ep300. https://doi.org/10.30935/cedtech/10786

Karagülle, S., & Berkant, H. G. (2022). Examining self-managed learning skills and thinking styles of university students. Gazi University Journal of Gazi Education Faculty, 42(1), 669-710. https://doi.org/10.17152/gefad.1005908

Khiat, H. (2015). Measuring self-directed learning: A diagnostic tool for adult learners. Journal of University Teaching & Learning Practice, 12(2), Article 2. https://doi.org/10.53761/1.12.2.2

Kidane, H. H., Roebertsen, H., & van der Vleuten, C. P. M. (2020). Students’ perceptions towards self-directed learning in Ethiopian medical schools with new innovative curriculum: A mixed-method study. BMC Medical Education, 20, Article 7. https://doi.org/10.1186/s12909-019-1924-0

Kim, E. S., & Yoon, M. (2011). Testing measurement invariance: A comparison of multiple-group categorical CFA and IRT. Structural Equation Modeling: A Multidisciplinary Journal, 18(2), 212-228. https://doi.org/10.1080/10705511.2011.557337

Kim, R., Olfman, L., Ryan, T., & Eryilmaz, E. (2014). Leveraging a personalized system to improve self-directed learning in online educational environments. Computers & Education, 70, 150-160. https://doi.org/10.1016/j.compedu.2013.08.006

Kline, R. B. (2016). Principles and practice of structural equation modeling (4th ed.). Guilford Press.

Knowles, M. S. (1975). Self-directed learning: A guide for learners and teachers. Prentice Hall/Cambridge.

Kocaman, G., Dicle, A., Üstün, B., & Çimen, S. (2006). Kendi kendine öğrenmeye hazıroluş ölçeği: Geçerlilik güvenirlik çalışması [Self-Directed Learning Readiness Scale: Validity and reliability study; Paper presentation]. Dokuz Eylül University III. Active Education Congress, İzmir, Turkey.

Long, H. B. (1977). [Review of the book Self-directed learning: A guide for learners and teachers, by M. S. Knowles]. Group & Organization Studies, 2(2), 256-257. https://doi.org/10.1177/105960117700200220

Lounsbury, J. W., & Gibson, L. W. (2006). Personal style inventory: A personality measurement system for work and school settings. Resource Associates.

Lounsbury, J. W., Levy, J. J., Park, S.-H., Gibson, L. W., & Smith, R. (2009). An investigation of the construct validity of the personality trait of self-directed learning. Learning and Individual Differences, 19(4), 411-418. https://doi.org/10.1016/j.lindif.2009.03.001

MacCallum, R. C., Browne, M. W., & Sugawara, H. M. (1996). Power analysis and determination of sample size for covariance structure modeling. Psychological Methods, 1(2), 130-149. https://doi.org/10.1037/1082-989X.1.2.130

Meade, A. W., Lautenschlager, G. J., & Johnson, E. C. (2007). A Monte Carlo examination of the sensitivity of the differential functioning of items and tests framework for tests of measurement invariance with Likert data. Applied Psychological Measurement, 31(5), 430-455. https://doi.org/10.1177/0146621606297316

Millsap, R. E. (2011). Statistical approaches to measurement invariance. Routledge.

Millsap, R. E., & Cham, H. (2013). Investigating factorial invariance in longitudinal data. In B. Laursen, T. D. Little, & N. A. Card (Eds.), Handbook of developmental research methods (pp. 109-148). Guilford Press.

Ozer, O., & Yukselir, C. (2021). “Am I aware of my roles as a learner?” The relationships of learner autonomy, self-direction and goal commitment to academic achievement among Turkish EFL learners. Language Awareness, 32(1), 19-38. https://doi.org/10.1080/09658416.2021.1936539

Peker Ünal, D. (2022). The predictive power of the problem-solving and emotional intelligence levels of prospective teachers on their self-directed learning skills. Psycho-Educational Research Reviews, 11(1), 46-58. https://doi.org/10.52963/PERR_Biruni_V11.N1.04

Polat, E., Hopcan, S., & Yahşi, Ö. (2022). Are K–12 teachers ready for e-learning? The International Review of Research in Open and Distributed Learning, 23(2), 214-241. https://doi.org/10.19173/irrodl.v23i2.6082

Prior, D. D., Mazanov, J., Meacheam, D., Heaslip, G., & Hanson, J. (2016). Attitude, digital literacy and self-efficacy: Flow-on effects for online learning behavior. The Internet and Higher Education, 29, 91-97. https://doi.org/10.1016/j.iheduc.2016.01.001

Putnick, D. L., & Bornstein, M. H. (2016). Measurement invariance conventions and reporting: The state of the art and future directions for psychological research. Developmental Review, 41, 71-90. https://doi.org/10.1016/j.dr.2016.06.004

Republic of Turkey, Ministry of National Education. (n.d.). Turkey’s education vision 2023. https://planipolis.iiep.unesco.org/sites/default/files/ressources/turkey_education_vision_2023.pdf

Rosseel, Y. (2012). lavaan: An R package for structural equation modeling. Journal of Statistical Software, 48(2), 1-36. https://doi.org/10.18637/jss.v048.i02

Saritepeci, M., & Orak, C. (2019). Lifelong learning tendencies of prospective teachers: Investigation of self-directed learning, thinking styles, ICT usage status and demographic variables as predictors. Bartın University Journal of Faculty of Education, 8(3), 904-927. https://doi.org/10.14686/buefad.555478

Sass, D. A. (2011). Testing measurement invariance and comparing latent factor means within a confirmatory factor analysis framework. Journal of Psychoeducational Assessment, 29(4), 347-363. https://doi.org/10.1177/0734282911406661

Sawatsky, A. (2017, May 22). Instruments for measuring self-directed learning and self-regulated learning in health professions education: A systematic review [Paper presentation]. Society of Directors of Research in Medical Education (SDRME) 2017 Annual Meeting, Minneapolis, MN, United States.

Schmitt, N., & Kuljanin, G. (2008). Measurement invariance: Review of practice and implications. Human Resource Management Review, 18(4), 210-222. https://doi.org/10.1016/j.hrmr.2008.03.003

Schulze, A. S. (2014). Massive open online courses (MOOCs) and completion rates: Are self-directed adult learners the most successful at MOOCs? [Doctoral dissertation, Pepperdine University]. Pepperdine Digital Commons. https://digitalcommons.pepperdine.edu/cgi/viewcontent.cgi?article=1441&context=etd

Song, L., & Hill, J. R. (2007). A conceptual model for understanding self-directed learning in online environments. Journal of Interactive Online Learning, 6(1), 27-42. https://www.ncolr.org/jiol/issues/pdf/6.1.3.pdf

Su, J. (2016). Successful graduate students’ perceptions of characteristics of online learning environments [Unpublished doctoral dissertation]. The University of Tennessee, Knoxville.

Suh, H. N., Wang, K. T., & Arterberry, B. J. (2015). Development and initial validation of the Self-Directed Learning Inventory with Korean college students. Journal of Psychoeducational Assessment, 33(7), 687-697. https://doi.org/10.1177/0734282914557728

Sun, W., Hong, J.-C., Dong, Y., Huang, Y., & Fu, Q. (2022). Self-directed learning predicts online learning engagement in higher education mediated by perceived value of knowing learning goals. The Asia-Pacific Education Researcher, 32, 307-316. https://doi.org/10.1007/s40299-022-00653-6

Svetina, D., Rutkowski, L., & Rutkowski, D. (2020). Multiple-group invariance with categorical outcomes using updated guidelines: An illustration using Mplus and the lavaan/semTools packages. Structural Equation Modeling: A Multidisciplinary Journal, 27(1), 111-130. https://doi.org/10.1080/10705511.2019.1602776

Tekkol, İ. A., & Demirel, M. (2018). An investigation of self-directed learning skills of undergraduate students. Frontiers in Psychology, 9, Article 2324. https://doi.org/10.3389/fpsyg.2018.02324

Thompson, M. S., & Green, S. B. (2013). Evaluating between-group differences in latent variable means. In G. R. Hancock & R. O. Mueller (Eds.), Structural equation modeling: A second course (2nd ed., pp. 163-218). Information Age Publishing.

Ünsal Avdal, E. (2013). The effect of self-directed learning abilities of student nurses on success in Turkey. Nurse Education Today, 33(8), 838-841. https://doi.org/10.1016/j.nedt.2012.02.006

West, S. G., Taylor, A. B., & Wu, W. (2012). Model fit and model selection in structural equation modeling. In R. H. Hoyle (Ed.), Handbook of structural equation modeling (pp. 209-231). Guilford Press.

Widaman, K. F., Ferrer, E., & Conger, R. D. (2010). Factorial invariance within longitudinal structural equation models: Measuring the same construct across time. Child Development Perspectives, 4(1), 10-18. https://doi.org/10.1111/j.1750-8606.2009.00110.x

Wu, H., & Estabrook, R. (2016). Identification of confirmatory factor analysis models of different levels of invariance for ordered categorical outcomes. Psychometrika, 81, 1014-1045. https://doi.org/10.1007/s11336-016-9506-0

Xia, Y., & Yang, Y. (2019). RMSEA, CFI, and TLI in structural equation modeling with ordered categorical data: The story they tell depends on the estimation methods. Behavior Research Methods, 51, 409-428. https://doi.org/10.3758/s13428-018-1055-2

Xie, X., Siau, K., & Nah, F. F.-H. (2020). COVID-19 pandemic—Online education in the new normal and the next normal. Journal of Information Technology Case and Application Research, 22(3), 175-187. https://doi.org/10.1080/15228053.2020.1824884

Yang, H., Su, J., & Bradley, K. D. (2020). Applying the Rasch model to evaluate the Self-Directed Online Learning Scale (SDOLS) for graduate students. The International Review of Research in Open and Distributed Learning, 21(3), 99-120. https://doi.org/10.19173/irrodl.v21i3.4654

Yurdugül, H., & Alsancak Sırakaya, D. A. (2013). The scale of online learning readiness: A study of validity and reliability. Education and Science, 38(169), 391-406. https://www.proquest.com/openview/c6b0a56d9385205d7bf3759a9bedd68d/1

Yurdugül, H., & Demir, Ö. (2017). An investigation of pre-service teachers’ readiness for e-learning at undergraduate level teacher training programs: The case of Hacettepe University. Hacettepe University Journal of Education, 32(4), 896-915. https://doi.org/10.16986/HUJE.2016022763

A Categorical Confirmatory Factor Analysis for Validating the Turkish Version of the Self-Directed Online Learning Scale (SDOLS-T) by Hongwei Yang, PhD, Müslim Alanoğlu, PhD, Songül Karabatak, PhD, Jian Su, PhD, and Kelly Bradley, PhD is licensed under a Creative Commons Attribution 4.0 International License.