Katherine Pang

University of Texas at Tyler, USA

The purpose of this study was to assess the pedagogical equivalence, as determined by knowledge gains, and the pedagogical effectiveness of certain components in a video-driven multimedia, web-based professional development training program as compared to a traditional, face-to-face program under real-world constraints of time and limited economic resources. The study focused on the use of video-driven multimedia, web-based instruction in the corporate environment to determine if the quality of the learning experience and the knowledge gained from the instruction were the same as with traditional methods. This experimental study assigned business professionals quasi-randomly to either a control group or an experimental group, where they attended either a live-instructed professional development program or a video-driven multimedia, web-based professional development program. Overall, results indicated that the video-driven multimedia, web-based instruction was not only pedagogically equivalent in terms of knowledge gains to the live instruction but that the knowledge gains were slightly higher among the web-based participants. Further, certain components in the web-based environment contributed more than components in the live environment to pedagogical effectiveness.

Keywords: Web-based training; video-driven multimedia; pedagogical equivalence; pedagogical effectiveness; corporate training; professional development; e-learning; instructional design

The primary purpose of the current study was to assess the pedagogical equivalence, as determined by knowledge gains, of video-driven multimedia, web-based instruction and traditional live instruction. A secondary purpose was to determine the pedagogical effectiveness of certain components in both the web-based and the live instruction. The study focused on the use of video-driven multimedia, web-based training in the corporate environment to determine not only if the quality of the learning experience was the same as with traditional, face-to-face instruction but also whether the knowledge gains were equivalent to establish pedagogical equivalency. The study was conducted using working professionals under real-world constraints of time and location and was conducted in the same manner as regularly conducted corporate training or professional development programs. The instructional design of the web-based, video-driven multimedia program was based on a modified version of Taylor’s (1994) NOVEX (novice-to-expert) analysis approach. The modified instructional design condensed his nine-step approach to six steps, emphasizing scaffolding and the use of expert declarative knowledge to develop a range of learning activities that measured pedagogical equivalence, such as knowledge gains and content mastery.

Because educational technology is not in itself transformative, it must be paired with meaningful instructional design methodologies. It is important that technology as a delivery medium effectively highlight the content so it can be applied by the learner and aligned with learning goals. The web-based instructional program used in this study was designed according to the work of Taylor (1994) in his extension of Tennyson’s (1992) cognitive and constructivist learning theory known as the NOVEX (novice-to-expert) analysis approach to instructional design. The theory is based on the analysis of the cognitive structures of both novices and experts (NOVEX Analysis) and is derived from a constructivist heuristic. The fundamental structure of the NOVEX analysis approach to instructional design is to start with an identified domain-specific cognitive skill and then analyze the knowledge base of the expert in terms of the structure of the domain-specific objective knowledge. The expert’s framework is then used to measure the knowledge base of the targeted learner, who is the novice. An advance organizer is material that is presented prior to learning, which is used by the learner to organize and interpret new, incoming information (Mayer, 2003). In the NOVEX approach, the advance organizer allows the instructional designer to develop learning strategies within the context of the expert knowledge base and to provide “explicit ideational scaffolding for the generation of the expert knowledge base which is the terminal objective of the instruction” (Taylor, 1994, p. 8).

The importance of the constructivist approach is well accepted, and, as Huitt (2003) notes, the emphasis is that “an individual learner must actively build knowledge and skills (e.g., Bruner, 1990) and that information exists within these built constructs rather than in the external environment” (p. 386). For example, the proliferation of Moodle, an open-source learning management system (LMS) that is built on a foundation of constructivist pedagogy, is evidence of the use of constructivist principles in instructional design (Bellefeuille, Martin, & Buck, 2005). The NOVEX approach to instructional design integrated the constructivist approach, which requires that instructors and educators design instruction based on existing learner knowledge and the experiences that learners bring to the learning environment. According to Taylor (1994), the NOVEX analysis approach to instructional design required a team approach involving not only the instructional designer and content expert but also the novice, who can test the effectiveness of the knowledge transfer from the content expert. As a result, the instructional design for the content in this study was based on the analysis of the cognitive structures of both novices and experts. It emphasized relational and strategic information processing, and it was built in accord with constructivist precepts.

The use of technology in competency-based professional development and corporate training is well established. According to Blocker (2005), web-based or e-learning provides an opportunity to address many known business issues, such as cost reduction, access to information, learning accountability, and increased employee competence. The organizations dedicating the greatest level of resources to training recognize that learning can be a significant contributor to revenue. Rapid advances in Internet-based technologies and the use of the web as an educational resource have driven many organizations to deploy web-based professional development programs. In addition, instructional design theory that correlates to expected learner realities and organizational level e-learning solidifies objectives and outcomes. However, many educators and trainers in the corporate environment have been frustrated by their organization’s “effort to simply get something up and running” (Dick, 1996), rather than the organization focusing on how to best use cognitive and constructivist instructional design models to produce measurable outcomes. For many, frustration levels have centered on the question of the equivalence and effectiveness of learning and the application of skills within the realities of the organizational environment.

The use of technology is no longer a major issue in professional development and corporate training (Ertmer, 1999). Now, the major issues are how to design effective web-based instruction, whether there is a pedagogical difference between live and web-based training, and whether certain components of web-based training are integral to effective instructional design. The literature suggests (Reeves, 2002; Slotte & Herbert, 2006) that there are still doubts about the benefits of e-learning, so it is important to assess pedagogical equivalence and component effectiveness. If there is no trade-off in pedagogical equivalence as determined by knowledge gains, and if certain components of web-based instruction are identified as effective, then the cost benefit of employing video-driven multimedia, web-based training may be used to create new opportunities for competency-based learning and to promote worker success and achievement. The current study is important in the field of cognition and instruction because most research has not emphasized professional development and competency-based learning that is designed in accord with cognitive-based instructional design strategies in a video-driven multimedia, web-based learning context. Therefore, this study has both theoretical and practical significance in advancing video-driven multimedia, web-based training for professional development in the corporate environment. As most research has focused on web-based learning in college coursework, there is an emergent need to consider this issue within the real-world organizational context, where training is juxtaposed with the cost-benefit of the training pedagogy.

Because professional development is vital to the maintenance and effectiveness of today’s workforce, organizations recognize the need to examine frequently the pedagogy, instructional strategies, and delivery mediums of workplace training. According to Staron, Jasinski, and Weatherley (2006), we are now in the “knowledge era,” which is defined by its complexity, multiple priorities, high levels of energy, and opportunities. “It is an ‘intangible era’ where the growing economic commodity is knowledge . . . It is not just about accessing information but about how we learn to continually select, borrow, interpret, share, contextualize, generate, and apply knowledge to our work” (p. 3). Goodyear and Ellis (2008) posit that “our methods of analysis - and design - have to become more organic or ecological” (p. 148), and according to Fok and Ip (2006), there remain issues as to whether there is a tangible and real commitment, given time and money constraints, to examine learning benefits. It would therefore appear that although vendors, instructional designers, and subject matter experts unanimously emphasize the importance of training and instruction that allows for job-specific evaluation, there is a lack of sound research in the area of pedagogical equivalency and effectiveness. This is particularly true of web-based learning and training that is based on a modified NOVEX approach to instructional design and that integrates video-driven multimedia components.

The current study examined the effects of a video-driven multimedia, web-based, competency-based professional development program in contrast to a live, instructor-led program, using the same instructional content on organizational leadership. Specifically, the following hypothesis was tested: A video-driven multimedia, web-based professional development program is pedagogically equivalent to a live, instructor-led program in delivering competency-based professional development training, as measured by knowledge gains. Further, certain components in the web-based environment are more effective for learning. To simplify the process while maintaining the integrity of the NOVEX analysis approach, this study used a modified version of the NOVEX analysis as a methodology for the instructional design of the web-based instructional content with an emphasis on relational and strategic information processing. The instructional content was delivered through a video-driven multimedia, web-based learning environment.

For purposes of this study, pedagogical equivalence was operationalized by the participants’ knowledge gains and mastery of the instructional content. The effectiveness of the instructional design was operationalized in terms of certain components in the learning environment (i.e., videos, whiteboard, PowerPoint). A multiple choice post-test measured the equivalence of the instruction modalities, and a self-reported post-test measured the participants’ responses to the effectiveness of the components. The effectiveness of a component was operationalized as the degree to which it enhanced learning in the learning environment.

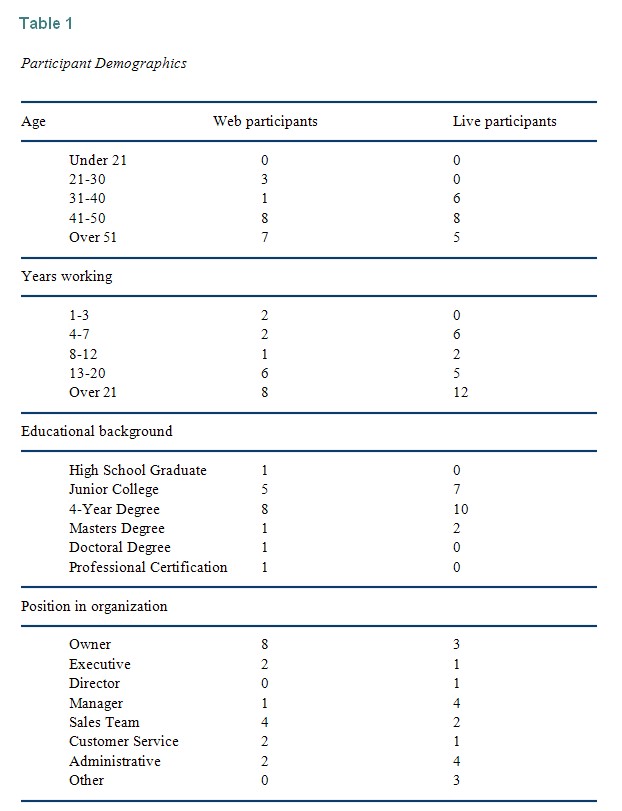

This study included 38 participants. The ages of the participants ranged from 21 to over 51 years; the majority of participants were 31 years of age or older. The participants had between 1 and 21 years of work experience. Educational backgrounds of the participants varied from a high school diploma to a doctoral degree. Participants held a variety of positions within organizations. Many of the participants were business owners; other participants included members of sales teams, managers, or administrators (see Table 1).

Two self-reporting instruments were used to measure the dependent variables: (1) the pedagogical equivalence between the video-driven multimedia, web-based instruction and the live instruction, and (2) the effectiveness of certain components. A computer and Internet experience questionnaire determined whether the two groups were homogeneous. This survey had four questions on a 5-point Likert scale, ranging from 1 (lowest) to 5 (highest). A second questionnaire, which measured exposure to and experience with professional development programs, consisted of ten questions on a 5-point Likert scale, ranging from 1 (lowest) to 5 (highest). A self-reporting post-test measured the participants’ attitudinal responses to the components of the medium of instruction. Content characteristics were measured via a multiple choice post-test on the instructional content. The instructional design method was operationalized by certain components of the learning environment (i.e., videos, whiteboard, PowerPoint). A volunteer participant consent form and demographic survey were also administered.

The research design was formulated based on a classical experimental design. Through self-selection and selection by a supervisor, participants were assigned quasi-randomly to an experimental group (N = 19), the web-instructed program, and to a control group (N = 19), the live-instructed program, to create equivalence between the groups. The primary dependent variable in this study was pedagogical equivalence between the video-driven multimedia, web-based instruction and the live instruction of a competency-based professional development program. A secondary dependent variable was the effectiveness of certain components in both the live and web-based training. Two self-reporting instruments measured these dependent variables. A self-report post-test measured the participants’ attitudinal responses to the components of the medium of instruction. A multiple choice post-test on the content of the instruction measured pedagogical equivalence as determined by knowledge gains.

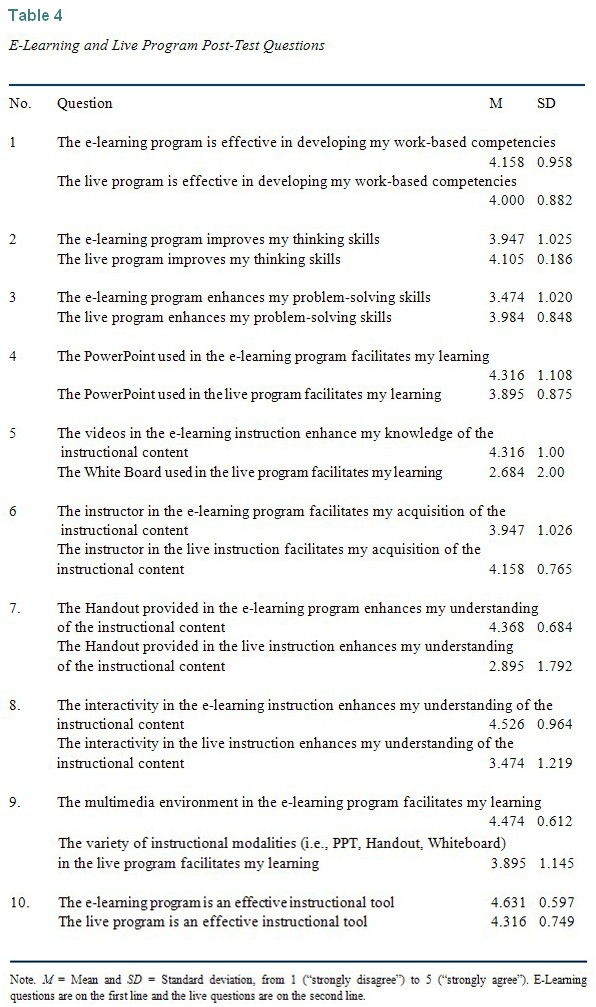

The first post-test measured pedagogical effectiveness in the form of participants’ attitudes to the components of instruction. The first post-test was prepared by the experimenter and reviewed by the subject matter expert (SME) instructor. There were two matched versions of this post-test, including one for the live-instructed participants and one for the web-instructed participants. The questions were designed to mirror one another. Minor changes were made to adjust for the tools used as part of the instruction. For example, in the web-based post-test, the word e-learning was used; for the live post-test, the word live was substituted for e-learning in the same question. To maintain the integrity of equivalence and balance, only two other changes were made to the wording of the questions. These alterations were made to capture the appropriate component for the learning environment. Question 5 was worded differently for the e-learning post-test (i.e., “The videos in the e-learning instruction enhance my knowledge of the instructional content”) in comparison to the live post-test (i.e., “The White Board used in the live program facilitates my learning”). In question 9, a variety of instructional modalities were used in the live post-test.

The second post-test measured pedagogical equivalence, specifically knowledge gains represented by responses on an objective multiple choice post-test for the content of the instruction. The multiple-choice post-test was highly analogous, in form and content, to the type used in any college classroom. This second post-test was developed by the instructor of the live program; the instructor was an SME with a master’s degree in human resources and substantial experience developing and delivering live, competency-based training. The post-test consisted of 15 multiple choice questions that were derived directly from the instructional content. This test was identical for both the web-instructed and live-instructed participants. A percentage score was attributed to each participant. To eliminate bias on the part of the scorer, a set of correct answers was provided and used to score the post-test.

For the experimental group, the study used the modified NOVEX approach to instructional design. The instructional content was delivered through a video-driven multimedia, web-based environment accessible by any web browser. The web-based learning management system included multimedia instructional content composed of video, text, hyperlinks, and self-assessment quizzes. For the control group, the study used a traditional live conference room with an instructor, PowerPoint presentation, and a whiteboard. A different instructor was used for the video content and the live instruction. Both instructors were proficient in instruction of the content. The control group and experimental group were exposed to the same instructional content for the same length of time. It is important to note that comparing the delivery mediums (i.e., web-based multimedia versus live instruction) is akin to comparing “apples to oranges.” In many organizations live instruction is the preferred training method because it is perceived to be pedagogically superior. As a result, the comparison in this study was necessary to assess the pedagogical equivalence and, perhaps, to change the training culture in many organizations.

The learning tasks for the experiment were designed to be suitable for working professionals attending a professional development program. In addition, the length of the tasks was standardized so that the “length of task” would not be a contaminating artifact in the determination of complexity. To improve the instruments’ and post-tests’ content validity, two business professionals were invited to review the questions, to provide assessments, and to evaluate the inclusion of the questions in the instruments. The business professionals delivered their assessments informally by providing feedback to the instructor and experimenter, and they were involved in the development process.

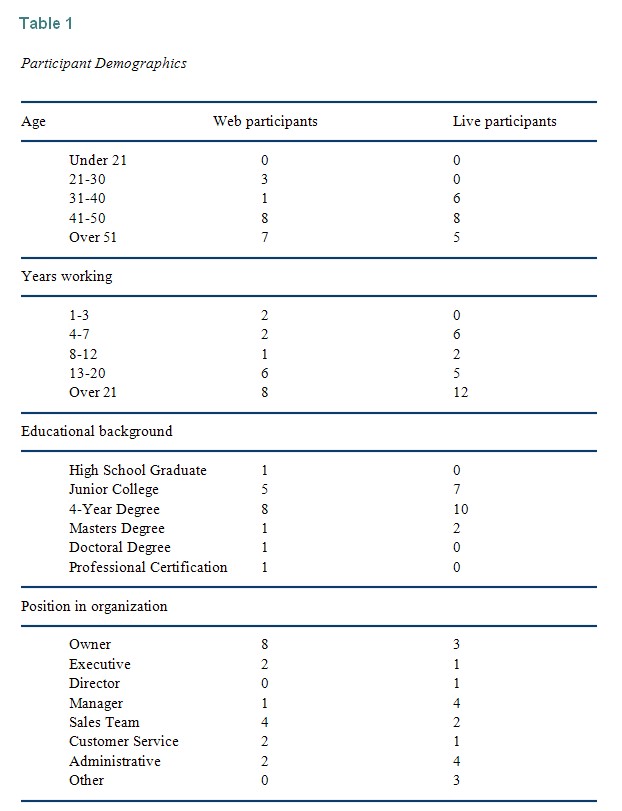

Controls for validity threats were implemented to ensure that internal threats, such as inadequate procedures, problems in applying treatments (e.g., discussions between participants), or participants’ characteristics, were minimized. This was accomplished by stating in the instructions that participants should not discuss their participation in this research with other business associates or colleagues and by drawing from a diverse pool of participants who would not have access to the course materials. Possible external threats, such as generalization and inferential conclusions, were mitigated by using volunteer participants from a random sample. Because of the small sample size, a power analysis was also performed to assess the ability to detect effects reliably. An a priori power analysis was conducted during the design of the study to determine the sample size necessary for 80% power in t-tests with two independent groups at α = .05, as illustrated in Table 2.
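The sample-size calculation behind an a priori power analysis of this kind can be sketched with the standard normal approximation for a two-sided, two-sample t-test. This is a minimal illustration and not necessarily the procedure used in the study (the reference list includes Lenth's, 2006, power applets, which may have been used instead). The function name `n_per_group` and the effect sizes passed to it are assumptions chosen for the example, and the approximation gives values slightly below exact t-based calculations.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate participants needed per group for a two-sided,
    two-sample t-test, using the normal approximation:
        n = 2 * (z_{1 - alpha/2} + z_{power})^2 / d^2
    effect_size is Cohen's d (standardized mean difference)."""
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_power = NormalDist().inv_cdf(power)          # quantile for desired power
    n = 2 * (z_alpha + z_power) ** 2 / effect_size ** 2
    return math.ceil(n)


# Hypothetical effect sizes (not taken from the study).
for d in (0.5, 0.8, 1.0):
    print(f"d = {d}: about {n_per_group(d)} participants per group")
```

Under this approximation, a large standardized effect (d = 0.8) calls for roughly 25 participants per group at 80% power and α = .05, which gives a sense of the effect sizes that groups of 19 can reliably detect.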

Descriptive statistics were assembled for the questionnaire data, the assessment, and the post-tests. No statistically significant difference existed between the two groups in computer and Internet experience (see Table 2). On a scale of 1 to 5, familiarity with computers among the web-based participants (M = 3.79) was comparable to that among the live-instructed participants (M = 4.1). The use of Windows as an operating platform was similar for both the web-based participants (M = 3.9) and the live-instructed participants (M = 4.2). Additionally, the use of Internet Explorer to access the Internet was comparable for the web-based participants (M = 4.1) and the live-instructed participants (M = 4.3). Familiarity with using Flash or QuickTime to watch web-based video and animation on the Internet was also similar for the web-based participants (M = 3.3) and the live-instructed participants (M = 3.1).

An F-test and t-test were used to analyze the data for the assessment and the two post-tests. The assessment listed 10 traits or practices that are characteristic of leadership or management; statistical analysis revealed significant results, p < .001 (see Table 3). The results revealed a difference between the web-instructed participants (M = .831, SD = .088) and the live-instructed participants (M = .610, SD = .253).
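The comparison reported above can be approximated from the published summary statistics alone. The sketch below computes a pooled-variance two-sample t statistic and the ratio of the two sample variances. It assumes 19 participants per group for this assessment (the group sizes given for the study as a whole) and assumes that the F-test mentioned refers to a check of equal variances; neither detail is confirmed by the text, and the function names are illustrative.

```python
import math


def pooled_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Student's two-sample t statistic with pooled variance.
    Returns (t, degrees_of_freedom)."""
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / df
    std_err = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean_a - mean_b) / std_err, df


def variance_ratio(sd_a, sd_b):
    """Ratio of the larger sample variance to the smaller one."""
    larger, smaller = max(sd_a, sd_b), min(sd_a, sd_b)
    return (larger / smaller) ** 2


# Reported assessment statistics; n = 19 per group is an assumption.
t_stat, df = pooled_t(0.831, 0.088, 19, 0.610, 0.253, 19)
f_ratio = variance_ratio(0.088, 0.253)
print(f"t({df}) = {t_stat:.2f}, variance ratio = {f_ratio:.2f}")
```

Because the two standard deviations differ considerably, a variance ratio well above 1 is expected here, in which case an unequal-variance (Welch) t-test is a common alternative to the pooled form shown.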

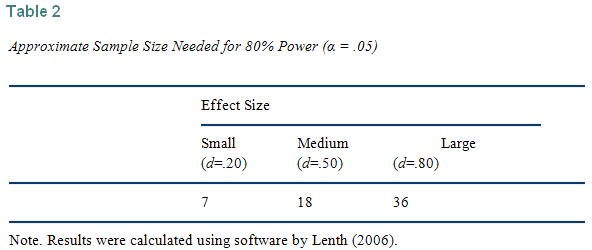

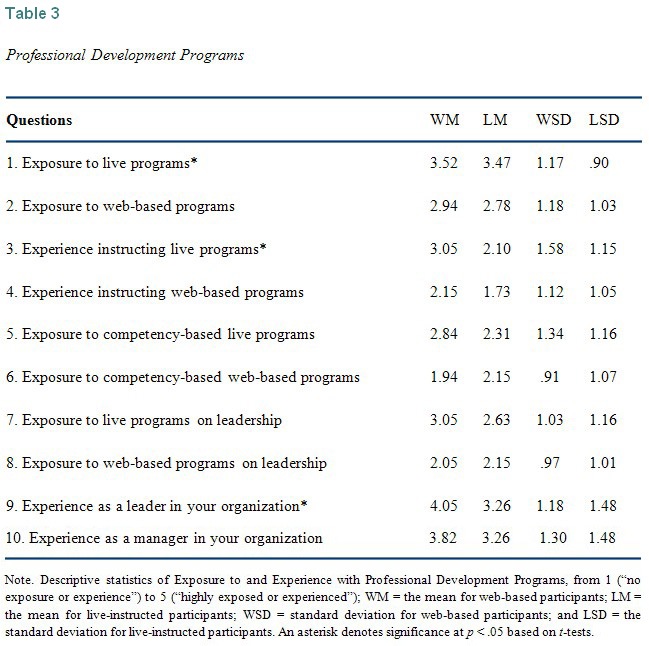

Based on t-tests, differences existed between the web-instructed and live-instructed participants on the questionnaire that measured exposure to and experience with professional development programs. Significant differences appeared in questions 1, 3, and 9: question 1, exposure to live programs, p = .04; question 3, experience instructing live programs, p = .02; and question 9, as a leader within an organization, p = .04. The results revealed that some participants had more experience in and exposure to attending or instructing live professional development programs. However, results did not indicate more experience in the content domain of this study. In addition, the differences pertained to attending and instructing live programs and not web-based programs; therefore, a bias in favor of web-based instruction cannot be inferred from the data. The differences in self-assessed leadership did not relate to the learning environment, its components, or the instructional content; instead, the differences related to a position within an organization. Results indicated that web-based participants were better able to ascribe certain traits and characteristics to a leadership or management function than the participants in the live session. The results showed non-equivalence in the groups only on this particular assessment. The assessment was broad-based in nature and not derived from the instructional content. Thus, it should not affect the validity and reliability of the post-test on the instructional method and the effectiveness of its components. Also, it should not impact the measure of the content characteristics, as the questions were not derived from the specific content of the instruction. In addition, there were no significant results in the subject matter post-test. As a result, it did not appear that the lack of equivalence on the assessment impacted the dependent variables of pedagogical equivalence (knowledge gains) and effectiveness (i.e., measured by components and content).

The t-test results for the e-learning and live program post-test showed that of the 10 items, four were significant: The first significant result was question 5, “The videos in the e-learning instruction enhance my knowledge of the instructional content,” p < .001; the second was question 7, “The Handout provided in the e-learning program enhances my understanding of the instructional content,” p < .001; the third was question 8, “The interactivity in the e-learning instruction enhances my understanding of the instructional content,” p = .03; and the last was question 9, “The multimedia environment in the e-learning program facilitates my learning,” p = .03.

The second post-test consisted of 15 multiple choice questions that were derived directly from the instructional content. The questions were scored and a percentage assigned to each participant, with 100% representing 15 out of 15. The web-instructed participants scored slightly higher (M = .766, SD = .142) than the live-instructed participants (M = .760, SD = .140), p = .38, establishing an equivalency in knowledge gains (see Table 4).
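Scoring each participant against a fixed answer key, as described for this post-test, reduces to counting matches and dividing by the number of items. The sketch below is illustrative only: the answer key and the participant's responses are hypothetical placeholders, not the study's actual items, and the function name is an assumption.

```python
def score_post_test(responses, answer_key):
    """Return the proportion of responses that match the answer key.
    A score of 1.0 corresponds to 100% (e.g., 15 out of 15)."""
    if len(responses) != len(answer_key):
        raise ValueError("responses and answer_key must have the same length")
    correct = sum(given == expected
                  for given, expected in zip(responses, answer_key))
    return correct / len(answer_key)


# Hypothetical 15-item key and one hypothetical participant's answers.
ANSWER_KEY = list("ABCDABCDABCDABC")
participant = list("ABCDABCDABCDBAD")  # last three items answered incorrectly

print(f"Score: {score_post_test(participant, ANSWER_KEY):.0%}")
```

Using a single shared key for every participant is what keeps the scoring independent of the scorer's judgment.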

As the results indicated, the hypothesis was supported by the findings, which highlighted the foundational point of this study: that a video-driven multimedia, interactive learning environment is pedagogically equivalent, as measured by knowledge gains, to traditional, live training in the delivery of a competency-based program. This was supported by 4 out of 10 questions in the first post-test. The significant results were those for question 5 (videos), question 7 (the handout), question 8 (interactivity), and question 9 (the multimedia environment).

These results demonstrated that participants attributed a higher score to the functions that comprised video-driven multimedia, web-based learning. Additionally, the results indicated that those in a web-based program may use a handout more frequently if they can download it. These significant functions illustrated that the video-driven multimedia, web-based environment was not pedagogically inferior to traditional, live learning. In fact, knowledge gains in the web-based learning environment were slightly higher, suggesting not only pedagogical equivalence but also that the web-based environment may be a better alternative because of its cost-effectiveness.

This study provides a foundation for future experimental research in understanding other aspects of pedagogical equivalence and the effectiveness of components in video-driven multimedia, web-based professional development training as contrasted with face-to-face training. The current research indicated that video, a multimedia environment, and interactivity are important functions for delivering effective web-based professional development programs. Therefore, instructional designers, corporate trainers, and organizations can use these indicators to develop instructional strategies for designing and delivering more effective training programs. They can also use this study to diminish the perception that traditional, live training is pedagogically superior to or more effective than web-based training, in that the results from this study establish that knowledge gains were not only evident in both groups but slightly higher among the web-based learners. Many individuals in human resources, professional training societies (e.g., ASTD), e-learning communities (e.g., the E-Learning Guild), and elsewhere will benefit from the expansion of these results. Publications (e.g., Journal of Workplace Learning, Human Resource Development, and Studies in Continuing Education) can use this study to generate additional interest in research in this area. Although the sample size was small, there was ample power to validate the findings. Because a majority of the participants were drawn from those with business ownership or management experience, additional research would be valuable for generalizing the results to other populations. In addition, using participants from a variety of organizational settings where professional development training is an important part of increasing an organization’s profitability would prove interesting. The significant findings of this study can be used in further research on different types of professional development training. The results have wide implications for corporate training, where on-demand learning, cost, and loss of revenue from travel and instruction often determine the mode and method for training. Thus, implications exist for a variety of organizations that are tasked with training workers and delivering cost-effective professional development programs.

Bellefeuille, G., Martin, R. R., & Buck, M. P. (2005). From pedagogy to technology in social work education: A constructivist approach to instructional design in an online, competency-based child welfare course. Child & Youth Care Forum, 34, 371-389.

Blanchette, J., & Kanuka, H. (1999). Applying constructivist learning principles in the virtual classroom. Proceedings of Ed-Media/Ed-Telecom 99 World Conference. Retrieved April 10, 2008, from http://proxy.tamu-commerce.edu:8436/ehost/pdf?vid=17&hid=19&sid=9e630777-2219-4b27-8051-7cee8e49b925%40sessionmgr9

Blocker, M. J. (2005). E-learning: An organizational necessity (White Paper). Retrieved April 6, 2008, from http://www.rxfrohumanperformance.com

Bruner, J. (1990). Acts of meaning. Cambridge, MA: Harvard University Press.

Bryans, P., Gormley, N., Stalker, B., & Williamson, B. (1998). From collusion to dialogue: Universities and continuing professional development. Continuing Professional Development, 1, 136-44.

Dick, W. (1996). The Dick and Carey model: Will it survive the decade? Educational Technology Research and Development, 44(3), 55-63.

Ertmer, P. (1999). Addressing first- and second-order barriers to change: Strategies for technology integration. Educational Technology Research and Development, 47, 47-61.

Fok, A. W. P., & Ip, H. H. S. (2006). An Agent-based framework for personalized learning in continuing professional development. International Journal of Distance Education Technologies, 4(3), 48-61.

Goodyear, P., & Ellis, R. A. (2008). University students’ approaches to learning: Rethinking the place of technology. Distance Education, 29(2), 141-152.

Gunawardena, C. N., Lowe, C. A., & Anderson, T. (1997). Analysis of a global online debate and the development of an interaction analysis model for examining social construction of knowledge in computer conferencing. Journal of Educational Computing Research, 17, 397–431.

Huitt, W. (2003). Constructivism. Educational Psychology Interactive. Retrieved July 16, 2008, from http://chiron.valdosta.edu/whuitt/col/cogsys/construct.html

Johnson, S. D., & Aragon, S. R. (2002). Emerging roles and competencies for training in e-learning environments. Advances in Developing Human Resources, 4(4), 424-439.

Kauffman, D. (2004). Self-regulated learning in Web-based environments: Instructional tools designed to facilitate cognitive strategy use, metacognitive processing, and motivational beliefs. Journal of Educational Computing Research, 30(1), 139-161.

Lenth, R. V. (2006). Java Applets for Power and Sample Size [Computer software]. Retrieved December 1, 2008, from http://www.stat.uiowa.edu/~rlenth/Power.

Martinez, M., & Bunderson, V. (2000). Building interactive World Wide Web (Web) learning environments to match and support individual learning differences. Journal of Interactive Learning Research, 11, 163-195.

Mayer, R. (2003). Learning and instruction. New Jersey: Pearson Education.

Reeves, T. C. (2002). Keys to successful e-learning: Outcomes, assessment and evaluation. Educational Technology, 42, 23-9.

Slotte, V., & Herbert, A. (2006). Putting professional development online: Integrating learning as productive activity. Journal of Workplace Learning, 18, 235-247.

Staron, M., Jasinski, M., & Weatherley, R. (2006). TAFE NSW ICVET 2006, Capability development for the knowledge era: Reculturing and life based learning. Paper presented at AVETRA Conference 2006, ‘Global VET: Challenges at Global, National and Local Levels.’ Retrieved December 1, 2008, from http://www.icvet.tafensw.edu.au/ezine/year_2006/may_jun/research_capability_development.htm

Taylor, J. C. (1994). NOVEX analysis: A cognitive science approach to instructional design. Educational Technology, 34, 5-13.

Tennyson, R. (1992). An educational learning theory for instructional design. Educational Technology, 32, 36-41.

Von Glasersfeld, E. (1995). A constructivist approach to teaching. In L. Steffe & J. Gale (Eds.), Constructivism in education (pp. 3-16). Hillsdale, NJ: Lawrence Erlbaum Associates.