Volume 23, Number 1

Jian-Wei Tzeng1, Chia-An Lee2, Nen-Fu Huang2, Hao-Hsuan Huang2, and Chin-Feng Lai3*

1Center for Teaching and Learning Development, National Tsing Hua University, Taiwan; 2Department of Computer Science, National Tsing Hua University; 3Department of Engineering Science, National Cheng Kung University, Taiwan

*The corresponding author is Chin-Feng Lai

Massive open online courses (MOOCs) are open access, Web-based courses that enroll thousands of students. MOOCs deliver content through recorded video lectures, online readings, assessments, and both student-student and student-instructor interactions. Course designers have attempted to evaluate the experiences of MOOC participants but, because of large class sizes, they have had difficulty tracking and analyzing the online actions and interactions of students. Within the broader context of the discourse surrounding big data, educational providers are increasingly collecting, analyzing, and utilizing student information. Additionally, big data and artificial intelligence (AI) technology have been applied to better understand students’ learning processes. Questionnaire response rates are also too low for MOOCs to be credibly evaluated. This study explored the use of deep learning techniques to assess MOOC student experiences. We analyzed students’ learning behavior and constructed a deep learning model that predicted student course satisfaction scores. The results indicated that this approach yielded reliable predictions. In conclusion, our system can accurately predict student satisfaction even when questionnaire response rates are low. Accordingly, teachers could use this system to better understand student satisfaction both during and after the course.

Keywords: MOOC, deep learning, learner satisfaction, learning analytics

Massive open online courses (MOOCs) are open-access educational resources that offer various academic courses to the general public through the Internet (Kop, 2011). Since 2012, MOOCs have included high-quality video lectures from universities worldwide. The self-directed learning environment provided by MOOCs signifies a modern approach to education. Users of MOOCs can learn not only from instructional videos created by professors but also through other methods suited to their individual learning styles, including live-streaming video lectures, efficient assessments, and discussion forums (McAuley et al., 2010).

A considerable amount of learning data can be collected and analyzed from the increasingly large number of MOOC users. Many studies have been conducted based on MOOC data; for instance, Kop et al. (2011) described the use of Facebook groups by MOOC participants and obtained data from learner experience surveys, participant demographics, and learner progression through courses. Adamopoulos (2013) analyzed a dataset of MOOC user-generated content to identify factors that predicted self-reported course progress.

Within the broader context of the discourse surrounding big data, educational providers are increasingly collecting, analyzing, and using student information (Papamitsiou & Economides, 2014; Su et al., 2021; Su & Lai, 2021; Su & Wu, 2021). Data have been collected to personalize learning experiences and allocate resources to individual students (Gašević et al., 2015; Leitner et al., 2017). Additionally, big data and artificial intelligence (AI) technology have been applied to better understand learning. Researchers initially focused on creating personalized teaching systems for lone learners, but recent studies have emphasized the interactions between students and the learning material (Kay, 2012). Cognitive science can be used to help lecturers understand the nature of learning and teaching. Thus, the findings can be used to build better systems to help learners gain new skills or understand new concepts. AI has now begun to affect the student experience through analyses of learning data (du Boulay, 2016).

Learner satisfaction refers to student perceptions of both the learning experience and the value of the education received (Baxter Magolda, 1993). According to Donohue and Wong (1997), satisfaction can affect student motivation. It is a significant intermediate outcome (Donohue & Wong, 1997) and a predictor of retention (Baxter Magolda, 1993). Bean and Bradley (1986) found that for college students, satisfaction had a greater impact on their performance than performance had on their satisfaction. However, Klobas et al. (2014) stated that researchers know very little about learner motivations, experiences, and satisfaction. Veletsianos (2013) also noted that discussions about new educational innovations, such as MOOCs, lack input from learners. Accordingly, it is reasonable to conclude that student satisfaction, as determined by student feedback, is a critical factor influencing academic success.

Some studies, such as Liu et al. (2014) and Onah et al. (2014), have characterized MOOC student perspectives by investigating what students learned, the aspects of MOOCs they found most useful, and their motivations for enrolling in MOOCs. However, these studies have been limited to surveying enrollees in journalism MOOCs or analyzing blog posts written by MOOC students about their MOOC experiences.

Researchers have tried to understand the high dropout rate of MOOCs (Baxter Magolda, 1993). Onah et al. (2014) postulated several reasons for the high dropout rate, such as low motivation to complete the courses, lack of time, lack of digital and learning skills, the level of the course, and lack of support.

Information collected by researchers and e-learning providers has come primarily in the form of big data or learning analytics gathered from observations of online student interactions with the instructors, the content, and their classmates. However, this approach has proved insufficient for gaining a comprehensive understanding of learner experiences in open online learning.

Studies investigating MOOCs from the perspective of an individual learner have collected data from learner experience surveys and on (a) participant demographics; (b) learner progression throughout various courses (in terms of, for example, the number of videos viewed or tests taken; Kop et al., 2011); (c) class size and completion rate (Adamopoulos, 2013); or (d) students’ behaviors, motivations, and communication patterns (Swinnerton et al., 2016). These metrics mirrored attendance and completion data and have enabled researchers to assess this form of education.

Advancements in technology have enabled the application of data-mining techniques and AI to the analysis of MOOCs. Some studies of MOOC performance have analyzed the language used in discussion forums to make predictions. Other researchers have used natural language processing (McNamara et al., 2015; Wen et al., 2014). More recently, these techniques have been used to identify student sentiment among MOOC enrollees (Moreno-Marcos et al., 2018; Pérez et al., 2019).

Due to its numerous advantages, AI has been increasingly applied in education. First, AI techniques have improved lecturers’ understanding of learning and teaching, and facilitated the design of new systems that help learners gain new skills or grasp new concepts (du Boulay, 2016). Therefore, the application of AI to large MOOC datasets has drawn substantial attention. Second, Fauvel et al. (2018) proposed that AI tools could be used to better understand MOOC participant sentiment, and that MOOC instructors could use these data to deliver better courses and develop more useful educational tools. AI could also be used to analyze student learning effectiveness by using records of learning behaviors. Some AI tools have been applied to make online learning more similar to its offline counterpart in order to help students better achieve their learning goals. Because students vary in learning adaptability, habits, and behavior, personalized service in MOOCs has been seen as especially important (Tekin et al., 2015).

Although there has been increasing interest in artificial intelligence in educational research, fewer than five percent of such studies have addressed deep learning in education. However, given the rapid advance of deep learning, its application in education is seen to have dramatic potential (Chen et al., 2020). Therefore, in terms of future research, the system examined in this study, since it is based on deep learning, could be a useful example of developing such a system for predicting student performance.

One of the challenges of lecturing in a MOOC is accurately understanding the learner experience. It has proved impossible to keep track of all posts and interactions of the numerous enrollees. The analysis of individual learner experience is critical for course evaluation. According to Donath (1996), learner comments and actions indicate their sentiments and concerns toward a course. Without the appropriate analytical tools, it has been difficult to understand differences in learner sentiment and experiences across different learner groups in a large class.

This study proposed a method for evaluating students’ satisfaction by using machine learning. In this method, the learning behavior of participants within the course was used as input for the model, and the model’s predictions were compared with the results of a survey of MOOC students. The method focused specifically on certain MOOC features students considered important. Thus, educators can use the findings of this research to modify their MOOCs to increase student satisfaction and enhance the student learning experience.

Training data for the model came from MOOCs at National Tsing Hua University (NTHU). Logs of learning activities, such as video-watching behavior and exercise completion, were collected and transformed to measures of learning behavior in the model. The proposed model used a deep neural network (DNN) with regression. The result predicted by the DNN was compared with survey responses to evaluate the accuracy of the model. These findings helped us evaluate MOOC learner satisfaction, and aided the design and execution of MOOC lectures.

Student feedback on courses is a significant indicator in both face-to-face and online courses. Drawing on the availability of educational big data, Gameel (2017) analyzed data collected from 1,786 learners enrolled in four MOOCs. Learners perceived that the following aspects influenced learning satisfaction: learner-content interaction, as well as the usefulness, teaching aspects, and learning aspects of the MOOC. From the learners’ perspective, these aspects offer valuable insights into the quality of, and satisfaction with, MOOCs.

To date, MOOCs have not provided participants (i.e., educators or learners) with any form of timely analysis on forum content. Consequently, educators have been unable to reply to questions or comments from hundreds of students in a timely manner (Shatnawi et al., 2014).

Because feedback has been too general, incomplete, or even incorrect, automation may be a solution to this problem. Automatic techniques include (a) functional testing, where feedback is usually insufficient as a guide for novices; (b) software verification for finding bugs in code, which may confuse novices because these tools often ignore true errors or report false errors; and (c) comparisons using reference solutions, in which many reference solutions or pre-existing correct submissions are usually required. One study used a semantic-aware technique to provide personalized feedback that aimed to mimic an instructor looking for code snippets in student submissions for a coding MOOC (Marin et al., 2017).

Moreover, some researchers have used machine learning to analyze feedback from MOOCs (Hew et al., 2020). Several deep learning models have been used to predict student outcomes, such as dropout (Xing & Du, 2019) or grades (Yang et al., 2017). Precise analysis of big data is also a critical direction for improving prediction accuracy in MOOCs; for example, some researchers have analyzed video-watching data in fine detail (Hu et al., 2020).

Higher education institutions and experts have had a strong interest in extracting useful features pertaining to the course and to learner sentiment from such feedback (Dohaiha et al., 2018; Kastrati et al., 2020). It is thus imperative to develop a reliable automated method to extract these sentiments when dealing with large MOOCs (Sindhu et al., 2019). For instance, Lundqvist et al. (2020) evaluated student feedback within a large MOOC. Their dataset contained 25,000 reviews from MOOC users. The participants were divided into three groups (i.e., beginner, experienced, and unknown) based on their level of experience with the topic. The researchers used the Valence Aware Dictionary and sEntiment Reasoner (VADER) as the algorithm for sentiment analysis.

Several studies were instructive sources for the design of the questionnaire used in this research. Durksen et al. (2016) used cutting-edge methods to analyze students’ satisfaction in a learning environment. They examined educational and psychological aspects of traditional and MOOC learning settings to compare outcomes (e.g., students’ characteristics, course design). This psychological perspective postulated that the basic needs for autonomy, competence, relatedness, and belonging characterized learner experiences in MOOCs (Durksen et al., 2016).

Other studies have focused on precisely quantifying students’ workload. In one study, the workload of medical students was quantified using a specifically developed, self-completed questionnaire (Gonçalves, 2014). Additionally, Çakmak (2011) designed a method to quantify instructor style, including factors such as making clear statements, using one’s time effectively, and using technology. Çakmak referred to student positivity towards instructor style as style approval. Marciniak (2018) also described effective methods for assessing course quality, which encompassed dimensions evaluating all aspects of the program.

Below, we first describe the data collection process in terms of the course information and learning behavioral data used in this study. Examples of the video and exercise schemas from the platform are shown to indicate the data structure. Then, we report the design and content of the student questionnaire along with the response rate for each course. Finally, we illustrate how data were extracted from the learning activity logs to formulate the predictive model, together with the performance evaluation measure.

To avoid bias, different types of MOOCs offered by NTHU in February 2020 were selected, together with some advanced placement (AP) courses offered in May 2020. The selected courses are listed in Table 4, where the AP courses carry the prefix AP-.

Students in these MOOCs were expected to spend three hours each week watching online videos and completing practice exercises. They were also expected to discuss the course content with their peers. For Introduction to IoT, students were also required to conduct experiments in some offline laboratory sessions.

Videos are the primary teaching method for most MOOCs. In this study, we collected data on video playback actions, such as playing, pausing, seeking, and adjusting the playback speed (Table 1), as well as data on each user’s answers for each exercise (Table 2). If a student entered the exercise page but did not answer the exercise questions, we coded the student’s response to the exercise as No. The feature timeCost (in seconds) was defined as how long the student took to answer each question. For example, if a student spent 10 seconds answering a question, the timeCost value for that student for that question was 10. The 308,517,712 learning behavior records were transformed into meaningful features that served as input to the DNN model. We divided all course data into training, validation, and testing sets in a ratio of 0.64:0.16:0.20.
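The 0.64/0.16/0.20 partition can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `split_dataset`, the random shuffle, the fixed seed, and the choice of splitting at the level of individual records are all hypothetical, since the text does not say how the partition was drawn.

```python
import random


def split_dataset(records, ratios=(0.64, 0.16, 0.20), seed=0):
    """Shuffle records and cut them into training, validation, and test lists.

    The shuffle and the seed are assumptions made for this sketch; the
    study only reports the three ratios.
    """
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * ratios[0])
    n_val = round(len(shuffled) * ratios[1])
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder becomes the test set
    return train, val, test


# Stand-in data: 1,000 integer IDs in place of real learning records.
train, val, test = split_dataset(range(1000))
print(len(train), len(val), len(test))
```

Note that 0.64 and 0.16 are what results from holding out 20% for testing and then holding out 20% of the remainder for validation (0.8 × 0.8 = 0.64 and 0.8 × 0.2 = 0.16).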

Table 1

Student Video Activity Schema

| Feature | Description | Example |

| userId | Student ID | 2198 |

| courseId | Course ID | 10900CS0003 |

| chapterId | Chapter ID | 10900CS0003ch04 |

| videoId | Video ID | -WSFgrGEs |

| action | Student action when recording | playing |

| currentTime | Video time when recording | 13.3234 |

| playRate | Video play rate when recording | 1.25 |

| volume | Video volume when recording | 100 |

| update_at | Recording time | 2020-05-11T22:40:41 |

Table 2

Student Exercise Activity Schema

| Feature | Description | Example |

| userId | Student ID | 2198 |

| courseId | Course ID | 10900CS0003 |

| chapterId | Chapter ID | 10900CS0003ch04 |

| exerId | Exercise ID | 10900CS0003ch04e1 |

| score | Exercise answer score | 1 |

| timeCost | Time cost on this exercise | 10 |

| userAns | Student answer | [1, 3, 4] |

| correctAns | Correct answer | [1, 2] |

| update_at | Recording time | 2020-05-11T23:51:41 |

Drawing on the literature, we focused on the following five categories in the student sentiment survey: (a) workload, (b) need fulfillment, (c) intelligibility, (d) style approval, and (e) student engagement. The questionnaire had 22 items in total (Table 3). This research used a five-point Likert scale to evaluate the answers provided by students. A rating of 1 indicated their worst experience, while a rating of 5 indicated their best experience.

Of the 6,016 students enrolled in the aforementioned courses, 993 filled out the questionnaire, and 764 of the 993 responses were valid. The questionnaire response rates for each course are reported in Table 4; the Cronbach’s alpha was 0.842. The response rates for the various courses ranged from 5% to 15%, a result strongly correlated with the number of students completing their MOOCs (Jordan, 2015). Introduction to IoT had the highest response rate (45%) due to the requirement for learners to attend in-person experiment sessions.
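For readers unfamiliar with the reliability statistic reported here, the standard formula for Cronbach’s alpha is α = k/(k − 1) · (1 − Σ item variances / variance of total scores), where k is the number of items. The sketch below applies that formula to a small made-up response matrix; the data and the function name `cronbach_alpha` are illustrative assumptions, and the code does not reproduce the study’s value of 0.842. It also assumes any negatively worded items have already been reverse-coded, which the text does not discuss.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents.
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Made-up five-point Likert answers: four respondents, three items.
example = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [3, 3, 3],
]
print(round(cronbach_alpha(example), 3))
```

Values closer to 1 indicate that the items vary together, which is usually read as higher internal consistency.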

Table 3

Questionnaire Design and Content

| Field | Citation | Item |

| Workload | Gonçalves (2014) | It takes a lot of time to watch the videos for this course. |

| | | I think this course is quite difficult. |

| | | I can keep up with the subsequent courses without spending much time reviewing. |

| Need fulfillment | Durksen et al. (2016); Alario-Hoyos et al. (2017) | The course material is consistent with what I expect to learn. |

| | | The course material is not what I currently need to learn. |

| | | This course will be helpful for my future courses and research. |

| | | This course is helpful for my future job search. |

| | | This course is related to my major. |

| Intelligibility | Ochando (2017) | The teacher’s style helps me easily understand the content. |

| | | The teacher is able to explain the key points and clarify confusing points. |

| | | The teacher’s method is too disorganized for me to keep up. |

| | | The teacher is unclear, and I have difficulty understanding. |

| | | The teacher’s methods make me feel that this course is an efficient way to learn. |

| Style approval | Çakmak (2011) | The teacher’s style makes me eager to learn. |

| | | The way the teacher speaks makes me feel a little hesitant. |

| | | The teacher’s tone does not make me feel irritated. |

| | | The teacher’s rhythm puts me at ease. |

| | | The teacher’s methods make me feel pressured. |

| Student engagement | Marciniak (2018) | I watched the course videos at least once before the end of the course. |

| | | I review the exercises by myself offline. |

| | | I will find related videos about unfamiliar concepts. |

| | | I will rewatch videos to review unfamiliar concepts. |

Table 4

Response Rate Information

| Course name | Number of students | Number of responses | Response rate |

| Introduction to IoT | 255 | 115 | 0.45 |

| Introduction to Calculus | 490 | 103 | 0.20 |

| Introduction to Programming in Python | 569 | 95 | 0.16 |

| Financial Decision Analysis | 383 | 56 | 0.14 |

| Systems Neuroscience | 201 | 22 | 0.10 |

| Ecosystem and Global Change | 233 | 43 | 0.18 |

| Common Good in Social Design | 121 | 20 | 0.16 |

| Introduction to Data Structure | 249 | 26 | 0.10 |

| AP-Introduction to Calculus | 980 | 217 | 0.22 |

| AP-General Physics | 275 | 26 | 0.09 |

| AP-General Chemistry | 371 | 52 | 0.14 |

| AP-Introduction to Life Science | 156 | 27 | 0.17 |

| AP-Principles of Economics | 172 | 17 | 0.10 |

| AP-Introduction to Computer Science | 202 | 18 | 0.08 |

| AP-Introduction to Programming in Python | 449 | 58 | 0.12 |

| AP-Introduction to Programming Language | 259 | 22 | 0.08 |

Logs of activity involving videos and exercises were collected. Information regarding video playback fell into one of seven categories: (a) video operations (e.g., play and pause); (b) whether the users actually watched the video being played; (c) start and end times; (d) current time; (e) playback speed; (f) volume; and (g) other information. We analyzed the information and extracted 35 video features, as listed in Table 5. Information regarding exercises was divided into six categories: (a) user answer, (b) correct answer, (c) points, (d) time spent by users, (e) types of questions, and (f) other. Eight exercise features were extracted (Table 5).

Table 5

Video and Exercise Features

| Feature | Description |

| real_watch_time | Time spent watching videos (including time spent with the video on pause or fast-forwarded) |

| video_watch_time | Time spent watching videos |

| play_count | Number of times the video was played in a week |

| pause_count | Number of times the video was paused in a week |

| change_rate_count | Number of times the video play rate was changed in a week |

| seek_forward_count | Number of times the video was fast-forwarded in a week |

| seek_back_count | Number of times the video was skipped backward in a week |

| finish_ratio | Ratio of videos finished in a week |

| review_ratio | Ratio of videos reviewed in a week |

| video_progress_ratio | Proportion of video play time and video watch time |

| video_len_per_week | Total length of videos assigned per week |

| real_watch_time_per_week | Total video watch time per week (included pause, fast-forward, and others) |

| video_watch_time_per_week | Video watch time per week |

| real_watch_time_per_video_len | Proportion of student’s watch time to total watch time of all assigned videos in a week (watch time included time spent with the video on pause or fast-forwarded) |

| video_watch_time_per_video_len | Proportion of all assigned videos that were watched in a week |

| end_to_start_days | Days from learning start date to learning end date |

| real_learning_days | Number of learning days per week |

| times_in_real_learning_days | Time spent learning during a learning day |

| average_learning_time_each_path | Average learning time for a student |

| week_block_num_mean | Mean number of learning blocks in a week |

| week_block_num_std | Standard deviation of the number of learning blocks in a week |

| week_block_time_mean | Mean time of learning blocks in a week |

| week_block_time_std | Standard deviation of the time of learning blocks in a week |

| day_block_num_mean | Mean number of learning blocks in a learning day |

| day_block_num_std | Standard deviation of the number of learning blocks in a learning day |

| day_block_time_mean | Mean time of learning blocks in a learning day |

| day_block_time_std | Standard deviation of the time of learning blocks in a learning day |

| min_15 | Mean number of learning blocks > 15 min. in a week |

| min_30 | Mean number of learning blocks > 30 min. in a week |

| min_45 | Mean number of learning blocks > 45 min. in a week |

| gap_mean | Mean number of days spent not learning |

| gap_std | Standard deviation of the number of days spent not learning |

| course_weeks | Number of times a student took a course in a week |

| video_total_len | Sum of the lengths (in seconds) of all videos in a week |

| video_counts | Number of videos watched in a week |

| exercise_type | Exercise type (single, multiple, or fill-in-the-blanks) |

| correct_rate | Percentage of questions answered correctly |

| answer_count | Number of attempts before the student answered correctly |

| time_cost | Time taken to complete an exercise |

| review_video_before_answer | Whether the student watched a related video before answering correctly for the first time |

| review_video_after_answer | Whether the student watched a related video after answering correctly for the first time |

| answering_progress | Type of question processing style (type 1 to 6) |

| correct_count | Number of questions answered correctly |
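To make the step from raw logs to Table 5 features concrete, the sketch below counts a few of the video features from event records shaped like the Table 1 schema. Several details are assumptions for illustration only: the action labels other than `playing` (`pause`, `seeking`, `ratechange`), the rule that a seek counts as forward or backward by comparing its `currentTime` with the previous event, and the function name `count_video_actions`. The platform’s actual extraction logic is not described in the text.

```python
def count_video_actions(events):
    """Count play, pause, rate-change, and seek events for one student-week.

    Each event is a dict with the Table 1 fields "action", "currentTime",
    and "update_at". Action labels other than "playing" are hypothetical.
    """
    counts = {
        "play_count": 0,
        "pause_count": 0,
        "change_rate_count": 0,
        "seek_forward_count": 0,
        "seek_back_count": 0,
    }
    previous_time = None
    # ISO 8601 timestamps in the same format sort correctly as plain strings.
    for event in sorted(events, key=lambda e: e["update_at"]):
        action = event["action"]
        if action == "playing":
            counts["play_count"] += 1
        elif action == "pause":
            counts["pause_count"] += 1
        elif action == "ratechange":
            counts["change_rate_count"] += 1
        elif action == "seeking" and previous_time is not None:
            # Assumed rule: direction is judged against the previous position.
            if event["currentTime"] > previous_time:
                counts["seek_forward_count"] += 1
            elif event["currentTime"] < previous_time:
                counts["seek_back_count"] += 1
        previous_time = event["currentTime"]
    return counts


# Made-up events for a single student and video.
sample_events = [
    {"action": "playing", "currentTime": 13.3, "update_at": "2020-05-11T22:40:41"},
    {"action": "pause", "currentTime": 42.0, "update_at": "2020-05-11T22:41:10"},
    {"action": "seeking", "currentTime": 10.0, "update_at": "2020-05-11T22:41:30"},
    {"action": "playing", "currentTime": 10.0, "update_at": "2020-05-11T22:41:31"},
    {"action": "ratechange", "currentTime": 39.0, "update_at": "2020-05-11T22:42:00"},
    {"action": "seeking", "currentTime": 120.0, "update_at": "2020-05-11T22:42:30"},
]
features = count_video_actions(sample_events)
print(features)
```

In practice the events would first be grouped by `userId`, `courseId`, and week before counting, so that each student-week yields one row of features.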

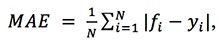

Every student has a unique learning mode and unique learning behavior, and we hypothesized that these would affect their satisfaction. To verify this hypothesis, we inputted the student learning behavior variables into a five-layer DNN model (see Figure 1 for illustration), which used a rectified linear unit activation function to predict the satisfaction score. When creating predictions of student satisfaction for MOOCs, it is crucial to avoid inaccuracies caused by sparse data (Yang et al., 2018). To avoid this problem, input for our system included only the learning data of students who completed the questionnaire.
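The forward pass of such a network can be sketched in plain Python. The text specifies only a five-layer DNN with rectified linear unit (ReLU) activations and a regression output; everything else below is an assumption made for illustration: the layer widths in `LAYER_SIZES`, the reading of "five-layer" as five weight layers, the 43 inputs (35 video plus 8 exercise features), the random initialization, and the function names. The weights here are untrained, so the output is not a meaningful satisfaction score.

```python
import random

# Hypothetical widths: 43 input features, four hidden layers, one output.
LAYER_SIZES = [43, 64, 32, 16, 8, 1]


def init_layers(sizes, seed=0):
    """Create (weights, biases) pairs with random weights for each layer."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        scale = (2.0 / n_in) ** 0.5  # a common scaling choice for ReLU layers
        weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def relu(values):
    """Rectified linear unit: negative values are replaced by zero."""
    return [max(0.0, v) for v in values]


def predict(layers, features):
    """Run one feature vector through the network and return a single score."""
    activations = list(features)
    for index, (weights, biases) in enumerate(layers):
        activations = [
            sum(w * a for w, a in zip(row, activations)) + b
            for row, b in zip(weights, biases)
        ]
        # ReLU on hidden layers; the last layer stays linear for regression.
        if index < len(layers) - 1:
            activations = relu(activations)
    return activations[0]


layers = init_layers(LAYER_SIZES)
score = predict(layers, [0.5] * 43)  # placeholder feature vector
print(score)
```

Leaving the output layer linear is the usual choice for regression, because the target (a Likert score from 1 to 5) is a real number rather than a class label.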

The mean absolute error (MAE) was used to evaluate the performance of the model. In brief, we used holdout cross validation to obtain the test data, and the data were then used to calculate the MAE as follows:

$$\mathrm{MAE} = \frac{1}{N} \sum_{i=1}^{N} \left| f_i - y_i \right|$$

where $f_i$ and $y_i$ are the predicted and actual scores for student $i$, respectively, and $N$ is the number of students. The MAE is the mean absolute difference between the predicted and actual scores, with a lower MAE indicating superior predictive performance.
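The MAE described here can be computed directly from paired lists of predicted and actual scores. The following is a minimal sketch; the function name and the example scores are made up for illustration and are not taken from the study’s data.

```python
def mean_absolute_error(predicted, actual):
    """Average of |f_i - y_i| over all students."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(f - y) for f, y in zip(predicted, actual)) / len(predicted)


# Made-up example: three students' predicted and actual Likert scores.
predicted_scores = [4.0, 3.5, 2.0]
actual_scores = [5, 3, 2]
print(mean_absolute_error(predicted_scores, actual_scores))
```

On a five-point scale, an MAE of 0.5 means the prediction is off by half a rating point on average.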

Figure 1

Architecture of Satisfaction Score Prediction Model Based on Learning Behaviors

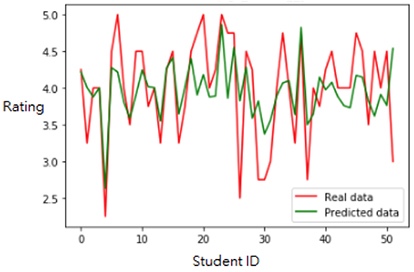

The effectiveness of our prediction model was evaluated in terms of the MAE by using the data from the Table 4 courses. Table 6 shows the MAE output for the answer to each question from the questionnaire. Our model performed best when computing the answers to questions related to course health, and worst when computing the answers to style approval questions. The results indicated that learning behavior is affected to some degree by student satisfaction. The MAEs shown in Table 6 ranged from 0.41 to 0.54. This may result from our use of a five-point rating system. The predicted data successfully captured the trend of the real data, as shown in Figure 2.

Figure 2

Example of Satisfaction Score Prediction Model Based on Learning Behaviors

Note. This figure is based on Question 5-1.

Once students’ answers to the questionnaire survey were collected, the five categories of results were computed by the overall answer score for each part of the questionnaire. Subsequently, we collected data on the learning behavior of participating MOOC students. Thereafter, these data were analyzed and used to predict the student satisfaction scores.

The results demonstrated that this system enabled teachers to understand multiple aspects of learner satisfaction before the end of the course. Additionally, because course evaluation surveys have high nonresponse rates (Table 4), this system was useful as an alternative method of providing lecturers with feedback predictions for students who do not fill out questionnaires. On the basis of the predicted feedback, teachers can adjust the content, workload, and teacher-student or student-student interactions during the course. Compared with the conventional approach, which is disadvantaged by insufficient learner responses and in which feedback is given only after the course, our method was more flexible and accurate.

Before the end of the course, the instructor can also use different approaches to track student performance and thus help students by adjusting the course schedule, offering more office hours, or allocating more time to covering more difficult topics. In addition, this system may provide students a chance to reflect on their own performance based on the predictions.

In the future, this system could be combined with a learning log feature. Teachers would then use the student’s learning history to better understand their status, and so develop more sophisticated and efficient interactive teaching methods, improve course quality, and increase student satisfaction.

Table 6

Predictive Performance

| Field | Question (answer values range from 1 to 5) | MAE |

| Workload | It takes a lot of time to watch the videos for this course. | 0.4679 |

| | I think this course is quite difficult. | 0.5192 |

| | I can keep up with subsequent courses without spending much time reviewing. | 0.4679 |

| Need fulfillment | The course material is consistent with what I expect to learn. | 0.4308 |

| | The course material is not what I currently need to learn. | 0.4692 |

| | This course will be helpful for my future courses and research. | 0.4115 |

| | This course is helpful for my future job search. | 0.4654 |

| | This course is related to my major. | 0.4462 |

| Intelligibility | The teacher’s style helps me easily understand the content. | 0.4654 |

| | The teacher is able to explain the key points and clarify confusing points. | 0.4691 |

| | The teacher’s method is too disorganized for me to keep up. | 0.4769 |

| | The teacher is unclear and I have difficulty understanding. | 0.5231 |

| | The teacher’s methods make me feel that this course is an efficient way to learn. | 0.5077 |

| Style approval | The teacher’s style makes me eager to learn. | 0.4577 |

| | The way the teacher speaks makes me feel a little hesitant. | 0.5423 |

| | The teacher’s tone does not make me feel irritated. | 0.523 |

| | The teacher’s rhythm puts me at ease. | 0.5385 |

| | The teacher’s methods make me feel pressured. | 0.5 |

| Student engagement | I watched the course videos at least once before the end of the course. | 0.4712 |

| | I review the exercises by myself offline. | 0.4135 |

| | I will find related videos about unfamiliar concepts. | 0.4136 |

| | I will re-watch videos to review unfamiliar concepts. | 0.4615 |
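One way to summarize Table 6 is to average the per-item MAEs within each field. The sketch below does this with the values copied from the table; the averaging itself is an illustrative step added here and is not a result reported in the study, and the names `TABLE6_MAE` and `mean_mae_by_field` are hypothetical.

```python
# Per-item MAEs copied from Table 6, grouped by questionnaire field.
TABLE6_MAE = {
    "Workload": [0.4679, 0.5192, 0.4679],
    "Need fulfillment": [0.4308, 0.4692, 0.4115, 0.4654, 0.4462],
    "Intelligibility": [0.4654, 0.4691, 0.4769, 0.5231, 0.5077],
    "Style approval": [0.4577, 0.5423, 0.523, 0.5385, 0.5],
    "Student engagement": [0.4712, 0.4135, 0.4136, 0.4615],
}


def mean_mae_by_field(table):
    """Return the unweighted mean MAE of the items in each field."""
    return {field: sum(values) / len(values) for field, values in table.items()}


field_means = mean_mae_by_field(TABLE6_MAE)
# Print fields from lowest (best) to highest (worst) mean MAE.
for field, value in sorted(field_means.items(), key=lambda item: item[1]):
    print(f"{field}: {value:.4f}")
```

Under this unweighted average, the style approval items have the highest mean MAE, and the student engagement and need fulfillment items have the lowest.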

The data used as input was collected from the courses in Table 4. Differences between these courses may affect the accuracy of our model. Future research might divide courses into categories to investigate subject matter-related effects. For example, the difficulty of a course may influence student concentration. Researchers can also use different methods to analyze the survey responses.

Education is foundational to a well-functioning society. Due to recent technological advancements, techniques from big data are now available for increasing the quality of courses. To properly use big data, researchers have adopted AI to investigate topics related to education. Through data analysis, processing, and prediction, AI can support lecturers in solving problems and making decisions. In combination with MOOCs, AI can help teachers create a better learning environment and enable students to achieve their learning goals—the common aim of all mainstream MOOC platforms.

In this study, we proposed a method to solve the problem of low MOOC student survey response rates, which prevents teachers from evaluating learner satisfaction in their courses. We established a system that predicted student course satisfaction based on their learning behavior. Our system was tested with student data from NTHU’s MOOC platform. These data pertained to student behavior when watching videos and answering exercise questions. Subsequently, a deep learning model was used to process the data and produce a predicted level of course satisfaction for a given student. If the output is made viewable by students, this system may also give them a chance to reflect on their course performance based on the system’s predictions.

Lastly, this system can benefit both lecturers and learners. Teachers can track student course satisfaction and learners can give instant feedback on course modifications. If a lecturer receives prompt feedback that guides course modifications, lecturers can better react to student input. Therefore, our system is an innovative method for improving interaction between teachers and students.

This study was funded by the Ministry of Science and Technology of Taiwan. The project IDs were MOST 110-2511-H-007-007, MOST-109-2511-H-007-010, and MOST-108-2221-E-007-062-MY3. It was also funded by National Tsing Hua University, Taiwan.

Adamopoulos, P. (2013). What makes a great MOOC? An interdisciplinary analysis of student retention in online courses. In R. Baskerville & M. Chau (Eds.), Proceedings of the 34th International Conference on Information Systems (ICIS 2013). http://aisel.aisnet.org/icis2013/proceedings/BreakthroughIdeas/13/

Alario-Hoyos, C., Estévez-Ayres, I., Pérez-Sanagustín, M., Delgado Kloos, C., & Fernández-Panadero, C. (2017). Understanding learners’ motivation and learning strategies in MOOCs. The International Review of Research in Open and Distributed Learning, 18(3), 120-137. https://doi.org/10.19173/irrodl.v18i3.2996

Baxter Magolda, M. B. (1993). What doesn’t matter in college? [Review of the book What matters in college: Four critical years revisited, by A. W. Astin]. Journal of Higher Education, 22(8), 32-34. https://doi.org/10.2307/1176821

Bean, J., & Bradley, R. (1986). Untangling the satisfaction-performance relationship for college students. The Journal of Higher Education, 57(4), 393-412. https://doi.org/10.2307/1980994

Boulay, B. d. (2016). Artificial intelligence as an effective classroom assistant. IEEE Intelligent Systems, 31(6), 76-81. http://users.sussex.ac.uk/~bend/papers/meta-reviewsIEEEV5.pdf

Çakmak, M. (2011). Changing roles of teachers: Prospective teachers’ thoughts. Egitim ve Bilim, 36, 14-24. http://egitimvebilim.ted.org.tr/index.php/EB/article/download/173/235

Chen, X., Xie, H., Zou, D., & Hwang, G. J. (2020). Application and theory gaps during the rise of artificial intelligence in education. Computers and Education: Artificial Intelligence, 1, 100002. https://doi.org/10.1016/j.caeai.2020.100002

Dohaiha, H., Prasad, P. W. C., Maag, A., & Alsadoon, A. (2018). Deep learning for aspect-based sentiment analysis: A comparative review. Expert Systems with Applications, 118, 272-299. https://doi.org/10.1016/j.eswa.2018.10.003

Donath, J. (1996). Identity and deception in the virtual community. In M. H. Smith & P. Kollock (Eds.), Communities in cyberspace (pp. 29-59). Routledge. https://smg.media.mit.edu/people/judith/Identity/IdentityDeception.html

Donohue, T. L., & Wong, E. H. (1997). Achievement motivation and college satisfaction in traditional and nontraditional students. Education, 118(2), 237-243. https://go.gale.com/ps/anonymous?id=GALE%7CA20479498&sid=googleScholar&v=2.1&it=r&linkaccess=abs&issn=00131172&p=AONE&sw=w

Du Boulay, B. (2016). Artificial intelligence as an effective classroom assistant. IEEE Intelligent Systems, 31, 76-81. https://doi.org/10.1109/MIS.2016.93

Durksen, T., Chu, M.-W., Ahmad, Z. F., Radil, A., & Daniels, L. (2016). Motivation in a MOOC: A probabilistic analysis of online learners’ basic psychological needs. Social Psychology of Education, 19, 241-260. https://doi.org/10.1007/s11218-015-9331-9

Fauvel, S., Yu, H., Miao, C., Cui, L., Song, H., Zhang, L., Li, X., & Leung, C. (2018). Artificial intelligence powered MOOCs: A brief survey [Paper presentation]. IEEE International Conference on Agents. Singapore. https://doi.org/10.1109/AGENTS.2018.8460059

Gameel, B. (2017). Learner satisfaction with massive open online courses. American Journal of Distance Education, 31(2), 98-111. https://doi.org/10.1080/08923647.2017.1300462

Gašević, D., Dawson, S., & Siemens, G. (2015). Let’s not forget: Learning analytics are about learning. TechTrends, 59(1), 64-71. https://doi.org/10.1007/s11528-014-0822-x

Gonçalves, G. (2014). Studying public health. Results from a questionnaire to estimate medical students’ workload. Procedia - Social and Behavioral Sciences, 116, 2915-2919. https://doi.org/10.1016/j.sbspro.2014.01.679

Hew, K. F., Hu, X., Qiao, C., & Tang, Y. (2020). What predicts student satisfaction with MOOCs: A gradient boosting trees supervised machine learning and sentiment analysis approach. Computers & Education, 145, 103724. https://doi.org/10.1016/j.compedu.2019.103724

Hu, H., Zhang, G., Gao, W., & Wang, M. (2020). Big data analytics for MOOC video watching behavior based on Spark. Neural Computing and Applications, 32(11), 6481-6489. https://doi.org/10.1007/s00521-018-03983-z

Jordan, K. (2015). Massive open online course completion rates revisited: Assessment, length and attrition. The International Review of Research in Open and Distributed Learning, 16(3). https://doi.org/10/f5vw

Kastrati, Z., Imran, A., & Kurti, A. (2020). Weakly supervised framework for aspect-based sentiment analysis on students’ reviews of MOOCs. IEEE Access, 8, 106799-106810. https://doi.org/10.1109/ACCESS.2020.3000739

Kay, J. (2012). AI and education: Grand challenges. IEEE Intelligent Systems, 27(5), 66-69. https://doi.org/10.1109/MIS.2012.92

Klobas, J. E., Mackintosh, B., & Murphy, J. (2014). The anatomy of MOOCs. In P. Kim (Ed.), Massive open online courses: The MOOC revolution (pp. 1-22). Routledge. https://www.researchgate.net/publication/272427367_The_anatomy_of_MOOCs

Kop, R. (2011). The challenges to connectivist learning on open online networks: Learning experiences during a massive open online course. The International Review of Research in Open and Distributed Learning, 12(3), 19-38. https://doi.org/10.19173/irrodl.v12i3.882

Kop, R., Fournier, H., & Mak, J. S. F. (2011). A pedagogy of abundance or a pedagogy to support human beings? Participant support on massive open online courses. The International Review of Research in Open and Distributed Learning, 12(7), 74-93. https://doi.org/10.19173/irrodl.v12i7.1041

Leitner, P., Khalil, M., & Ebner, M. (2017). Learning analytics in higher education: A literature review. In A. Peña-Ayala (Ed.), Learning analytics: Fundamentals, applications, and trends (pp. 1-23). Springer International Publishing AG. https://link.springer.com/chapter/10.1007/978-3-319-52977-6_1

Liu, M., Kang, J., Cao, M., Lim, M., Ko, Y., Myers, R., & Schmitz Weiss, A. (2014). Understanding MOOCs as an emerging online learning tool: Perspectives from the students. American Journal of Distance Education, 28(3), 147-159. https://doi.org/10.1080/08923647.2014.926145

Lundqvist, K., Liyanagunawardena, T., & Starkey, L. (2020). Evaluation of student feedback within a MOOC using sentiment analysis and target groups. The International Review of Research in Open and Distributed Learning, 21(3), 140-156. https://doi.org/10.19173/irrodl.v21i3.4783

Marciniak, R. (2018). Quality assurance for online higher education programmes: Design and validation of an integrative assessment model applicable to Spanish universities. The International Review of Research in Open and Distributed Learning, 19(2), 127-154. https://doi.org/10.19173/irrodl.v19i2.3443

Marin, V., Pereira, T., Sridharan, S., & Rivero, C. (2017). Automated personalized feedback in introductory Java programming MOOCs. 2017 IEEE 33rd International Conference on Data Engineering (ICDE; pp. 1259-1270). https://doi.org/10.1109/ICDE.2017.169

McAuley, A., Stewart, B., Siemens, G., & Cormier, D. (2010). In the open: The MOOC model for digital practice (Knowledge Synthesis Grants on the Digital Economy) [Grant Report]. Social Sciences & Humanities Research Council of Canada. https://www.oerknowledgecloud.org/archive/MOOC_Final.pdf

McNamara, D., Baker, R., Wang, Y., Paquette, L., Barnes, T., & Bergner, Y. (2015). Language to completion: Success in an educational data mining massive open online class. Proceedings of the 8th International Educational Data Mining Society (pp. 388-391). https://www.researchgate.net/publication/319764516_Language_to_Completion_Success_in_an_Educational_Data_Mining_Massive_Open_Online_Class

Moreno-Marcos, P., Alario-Hoyos, C., Munoz-Merino, P., Estévez-Ayres, I., & Delgado-Kloos, C. (2018). Sentiment analysis in MOOCs: A case study. IEEE Global Engineering Education Conference (EDUCON; pp. 1489-1496). https://doi.org/10.1109/EDUCON.2018.8363409

Ochando, H. M. P. (2017). Transcultural validation within the English scope of the questionnaire evaluation of variables moderating style of teaching in higher education. CEMEDEPU. Procedia-Social and Behavioral Sciences, 237, 1208-1215. https://doi.org/10.1016/j.sbspro.2017.02.191

Onah, D., Sinclair, J., & Boyatt, R. (2014). Dropout rates of massive open online courses: Behavioural patterns. Proceedings of the 6th International Conference on Education and New Learning Technologies (EDULEARN14). https://doi.org/10.13140/RG.2.1.2402.0009

Papamitsiou, Z., & Economides, A. (2014). Learning analytics and educational data mining in practice: A systematic literature review of empirical evidence. Educational Technology & Society, 17(4), 49-64. https://www.j-ets.net/collection/published-issues/17_4 https://www.researchgate.net/publication/267510046_Learning_Analytics_and_Educational_Data_Mining_in_Practice_A_Systematic_Literature_Review_of_Empirical_Evidence

Pérez, R. C., Jurado, F., & Villen, A. (2019). Moods in MOOCs: Analyzing emotions in the content of online courses with edX-CAS. IEEE Global Engineering Education Conference (EDUCON; pp. 1467-1474). https://doi.org/10.1109/EDUCON.2019.8725107

Shatnawi, S., Gaber, M. M., & Cocea, M. (2014). Automatic content related feedback for MOOCs based on course domain ontology. In E. Corchado, J. A. Lozano, H. Quintián, & H. Yin (Eds.), Intelligent data engineering and automated learning, IDEAL 2014 (Lecture Notes in Computer Science, Vol. 8669). Springer. https://doi.org/10.1007/978-3-319-10840-7_4

Shatnawi, S., Gaber, M., & Haig, E. (2014). Automatic content related feedback for MOOCs based on course domain ontology (Vol. 8669). International Conference on Intelligent Data Engineering and Automated Learning. https://www.researchgate.net/publication/265132990_Automatic_Content_Related_Feedback_for_MOOCs_Based_on_Course_Domain_Ontology

Sindhu, I., Daudpota, S., Badar, K., Bakhtyar, M., Baber, J., & Nurunnabi, M. (2019). Aspect based opinion mining on student’s feedback for faculty teaching performance evaluation. IEEE Access, 7, 108729-108741. https://doi.org/10.1109/ACCESS.2019.2928872

Su, Y. S., & Lai, C. F. (2021). Applying educational data mining to explore viewing behaviors and performance with flipped classrooms on the social media platform Facebook. Frontiers in Psychology, 12, 653018. https://doi.org/10.3389/fpsyg.2021.653018

Su, Y. S., & Wu, S. Y. (2021). Applying data mining techniques to explore users behaviors and viewing video patterns in converged IT environments. Journal of Ambient Intelligence and Humanized Computing. https://doi.org/10.1007/s12652-020-02712-6

Su, Y. S., Ding, T. J., & Chen, M. Y. (2021). Deep learning methods in internet of medical things for valvular heart disease screening system. IEEE Internet of Things Journal. https://ieeexplore.ieee.org/document/9330761

Swinnerton, B., Morris, N., Hotchkiss, S., & Pickering, J. (2016). The integration of an anatomy massive open online course (MOOC) into a medical anatomy curriculum: Anatomy MOOC. Anatomical Sciences Education, 10(1), 53-67. https://doi.org/10.1002/ase.1625

Tekin, C., Braun, J., & van der Schaar, M. (2015). eTutor: Online learning for personalized education [Paper presentation]. 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Brisbane, Australia. https://arxiv.org/abs/1410.3617

Veletsianos, G. (2013). Learner experiences with MOOCs and open online learning. Hybrid Pedagogy Publica. http://learnerexperiences.hybridpedagogy.com

Wen, M., Yang, D., & Rose, C. (2014). Sentiment analysis in MOOC discussion forums: What does it tell us? In J. Stamper, Z. Pardos, M. Mavrikis, & B. M. McLaren (Eds.), Proceedings of the 7th International Conference on Educational Data Mining (EDM 2014; pp. 130-137). https://www.dropbox.com/s/crr6y6fx31f36e0/EDM%202014%20Full%20Proceedings.pdf

Xing, W., & Du, D. (2019). Dropout prediction in MOOCs: Using deep learning for personalized intervention. Journal of Educational Computing Research, 57(3), 547-570. https://doi.org/10.1177/0735633118757015

Yang, S. J. H., Lu, O. H. T., Huang, A. Y. Q., Huang, J. C. H., Ogata, H., & Lin, A. J. Q. (2018). Predicting students’ academic performance using multiple linear regression and principal component analysis. Journal of Information Processing, 26(2), 170-176. https://doi.org/10.2197/ipsjjip.26.170

Yang, T. Y., Brinton, C. G., Joe-Wong, C., & Chiang, M. (2017). Behavior-based grade prediction for MOOCs via time series neural networks. IEEE Journal of Selected Topics in Signal Processing, 11(5), 716-728. https://doi.org/10.1109/JSTSP.2017.2700227

MOOC Evaluation System Based on Deep Learning by Jian-Wei Tzeng, Chia-An Lee, Nen-Fu Huang, Hao-Hsuan Huang, and Chin-Feng Lai is licensed under a Creative Commons Attribution 4.0 International License.