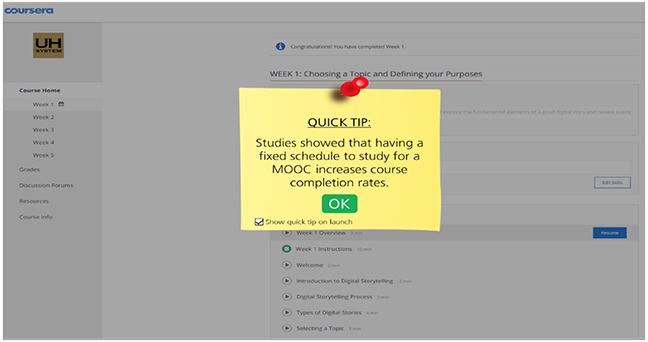

Figure 1. An illustration of a quick tip pop-up providing a suggestion related to study goals.

Volume 20, Number 3

Erwin Handoko¹, Susie L. Gronseth², Sara G. McNeil³, Curtis J. Bonk⁴, and Bernard R. Robin⁵

¹,²,³,⁵University of Houston, ⁴Indiana University Bloomington

Despite providing advanced coursework online to learners around the world, massive open online courses (MOOCs) have had notoriously low completion rates. Self-regulated learning (SRL) frames strategies that students can use to enhance motivation and promote their engagement, persistence, and performance self-monitoring. Understanding which SRL subprocesses are most relevant to the MOOC learning context can guide course designers and instructors on how to incorporate key SRL aspects into the design and delivery of MOOCs. Through surveying 643 MOOC students using the Online Self-Regulated Learning Questionnaire (OSLQ), the present study sought to understand the differences in the use of SRL between those who completed their course and those who did not. MOOC completers were found to have significantly higher application of one specific SRL subprocess, namely goal setting. Additional SRL subprocesses of task interest/value, causal attribution, time management, self-efficacy, and goal orientation also emerged from an analysis of open-ended responses as key contributors to course completion. The findings from this study provide further support regarding the role of SRL in MOOC student performance and offer insight into learners’ perceptions of the importance of SRL subprocesses in reaching course completion.

Keywords: Self-regulated learning, SRL, massive open online course, MOOC completion, online self-regulated learning questionnaire, OSLQ, goal setting

The proliferation of massive open online courses (MOOCs) in the past decade has been a whirlwind. Beginning with George Siemens and Stephen Downes’s Connectivism and Connective Knowledge (referred to as CCK08) course in 2008, MOOCs have expanded access to course content for learners around the globe. In 2017, there were about 9,400 MOOCs offered by more than 800 universities worldwide, with over 81 million students signing up for at least one course (Shah, 2018). Another indicator of the rapid growth of MOOCs can be found in the percentage of higher education institutions in the United States offering MOOCs. According to Allen, Seaman, Poulin, and Strout (2016), there has been a substantial increase of institutions offering MOOCs in the United States from 2.6% in 2012 to 13.6% in 2015. Furthermore, several prominent MOOC platforms, including Coursera and EdX, have partnered with universities to deliver credit-bearing courses leading to degrees (Agarwal, 2015; Straumsheim, 2016). With such growth and global reach, MOOCs offer great potential for expanding worldwide access to online continuing education and professional learning opportunities.

Despite such promise and popularity, the typically low completion rates of MOOCs have been concerning to MOOC providers (Yuan & Powell, 2013). For example, a study of 39 MOOCs offered through Coursera and EdX reported MOOC completion rates ranging from 0.9% to 36.1%, with a median of 6.5% (Jordan, 2014). It is worthwhile to note that students may have reasons for enrolling in MOOCs beyond intending to complete a course, such as shopping for potential courses to eventually complete, dabbling in specific course topics that are of interest, and auditing to increase knowledge about the course material but without a desire to complete any assignments (DeBoer, Ho, Stump, & Breslow, 2014). However, higher completion rates have been observed among MOOC students who paid for certificates; even so, the completion rate median for fee-based certificates tops out around 60% (Chuang & Ho, 2016). Therefore, MOOC completion rates are still a pressing issue regardless of students’ payment status.

The factors affecting student performance in MOOCs are complex and varied, including learner engagement (Jung & Lee, 2018; Nawrot & Doucet, 2014), declaration of intention to complete (Reich, 2014; Wang & Baker, 2018), and motivation for career advancement (Watted & Barak, 2018). Learner persistence in MOOCs has also been linked to learners’ perceptions of teaching presence (Gregori, Zhang, Galvan-Fernández, de Asís, & Fernández-Navarro, 2018; Hone & El-Said, 2016) and the ease of use of the course platform (Jung & Lee, 2018). Finally, subprocesses of self-regulated learning (SRL), the focus of the present study, have also been found to correlate with student performance (Kizilcec, Pérez-Sanagustín, & Maldonado, 2017). To illustrate, one study involving interviews with learners of a health profession MOOC found that self-efficacy, task strategies, goal setting, and help-seeking of professionals were key ways that successful learners self-regulated in the course (Milligan & Littlejohn, 2016). Prior work in this area has yet to address why students who intend to complete their courses and who even pay for verified certificates still sometimes fail to complete them. Perhaps specific components of SRL are more powerful contributors to a student’s likelihood to complete a MOOC than are some other components.

The present study investigated this issue and sought to understand the differences in SRL subprocesses between those who completed their course (i.e., MOOC completers) and those who did not (i.e., MOOC non-completers). Understanding key SRL differences can enable course designers and instructors to develop course structures that better support MOOC learners. In this study, students were surveyed in two MOOCs offered by a public university located in the southwest region of the United States. The following two research questions guided this study:

Research question 1: In what ways do MOOC completers differ from MOOC non-completers in regard to SRL?

Research question 2: What SRL strategies contribute to student success in completing MOOCs?

The “massiveness” and “openness” of MOOCs are key characteristics that distinguish these courses from other online courses. Such openness fueled large student enrollments in the early MOOCs, with an initial average of more than 2,000 students per course (McAuley, Stewart, Siemens, & Cormier, 2010). However, median enrollments in MOOCs rapidly ballooned to over 40,000 participants from around the globe just a few years later (Jordan, 2014). More recent studies have set the median around 8,000 participants (Chuang & Ho, 2016). There have since been updates to enrollment policies on different MOOC platforms, such as requiring students to pay a fee to earn verified certificates of completion. Nonetheless, MOOC enrollments with the intent for such certificates still tend to be larger than traditional online courses, with estimates of at least 500 paying students in a typical MOOC course (Chuang & Ho, 2016).

MOOCs based on traditional university courses are often referred to as xMOOCs (eXtended massive open online courses). Such courses are often versions of traditional courses that have been adapted to accommodate large enrollments, as well as the great diversity of students’ educational and cultural backgrounds. Along with grades and course withdrawals, completion of courses is commonly used as a proxy in online education for measuring student performance (Picciano, 2002). This has been the case in MOOC research as well, though some experts caution that the characteristic openness of MOOCs adds some complexity to this issue (DeBoer et al., 2014).

Reich (2014) suggested that MOOC completion should be viewed from the context of student intent. He found that students who registered with the intention to complete their MOOCs had higher completion rates than their peers who registered with the intention to just browse or audit the MOOC in which they were enrolled. Accordingly, MOOC completion rates seem to be more fitting when used to assess the performance of verified certificate students (or Signature Track students on the Coursera platform), as enrollment in such programs has been found to be a dominant factor in motivating students to complete their courses (Watted & Barak, 2018).

Limitations in being able to provide personalized course delivery and individual feedback have led many MOOC designers to opt for more behaviorist pedagogical approaches in which video lectures and computer-graded assignments are primarily used (Knox, 2013). Learners are expected to self-manage much of their study skills, such as planning their learning goals, adjusting their study environments, and identifying sources that could help with assignments (Littlejohn, Hood, Milligan, & Mustain, 2016). Such study skills are often touted as essential SRL subprocesses and are typically the hallmarks of successful learners (Zimmerman, 2013).

SRL is a construct that consists of multiple elements involved with planning, organizing, self-monitoring, and self-evaluating so that students are “metacognitively, motivationally, and behaviorally active participants in their own learning process” (Zimmerman, 1989, p. 329). Zimmerman (2013) identified 18 subprocesses involved in SRL: (a) goal setting, (b) time management, (c) self-efficacy, (d) outcome expectation, (e) task interest/value, (f) goal orientation, (g) self-instruction, (h) imagery, (i) attention focusing, (j) task strategies, (k) environmental structuring, (l) help-seeking, (m) metacognitive monitoring, (n) self-recording, (o) self-evaluation, (p) causal attribution, (q) self-satisfaction/affect, and (r) adaptive/defensive. Research findings consistently demonstrate that students with higher SRL levels achieve better academic results than those with lower SRL levels, both in face-to-face (e.g., Pintrich, 2004) and online (e.g., Broadbent & Poon, 2015) learning environments.

Since SRL behaviors tend to be context-dependent (Schunk, 2001), investigating SRL in MOOCs could shed light on how such strategies might impact student performance in the massive, open, online context. Initial studies thus far have found marked differences between MOOC students with high and low SRL scores, particularly in areas of motivation and goals for participation (Littlejohn et al., 2016). More recently, a study by Tsai, Lin, Hong, and Tai (2018) identified learner metacognition as a significant contributor to learner continuance in a MOOC. Similarly, learners’ volitional control, exercised when they act purposefully to manage their time, has been found to support successful MOOC completion (Kizilcec et al., 2017).

Successful MOOC students are often skilled at connecting with others when they need help, for instance, by asking questions of classmates via course discussion forums (Gillani & Eynon, 2014) and of others outside of the course who may have relevant skills or experiences (Breslow et al., 2013). Setting goals and other strategic planning activities have also been found to support higher student performance (Kizilcec et al., 2017). Such studies demonstrate the connection between SRL and student performance in general; however, further investigation into the impact of specific SRL subprocesses on MOOC completion is needed.

Study participants were drawn from registered students of two MOOCs developed by a public university in the Southwestern United States and offered on the Coursera platform. The MOOCs were part of the Powerful Tools for Teaching and Learning teacher professional development series that addressed educational technology topics. Digital Storytelling (DS-MOOC) focused on the principles and educational uses of digital storytelling, the practice of telling stories using computer-based tools (Robin, 2008). In contrast, Web 2.0 Tools (Web 2.0-MOOC) addressed a variety of Web-based tools that support classroom communication, collaboration, and creativity. Both courses were five weeks in length and were offered in English. Students were expected to commit about three to four hours each week for each course to work through the materials and activities. Regarding quality, the courses had received high student ratings, averaging 4.5 (DS-MOOC) and 4.6 (Web 2.0-MOOC) out of 5 stars prior to this study. In both courses, students earned certificates of completion if they achieved at least a 70% average for the course activities and assignments.

Out of the 65,227 registrations in the two courses, potential participants for this study were selected as those that completed at least one graded assignment in either course and were at least 18 years old at the time of the post-course survey. After removing duplications of students who participated in both courses, 5,935 students met the inclusion criteria. Of these, 643 completed the survey (10.8%).

Participants self-identified as either MOOC completer or MOOC non-completer through a specific survey item. There were 315 (49.0%) MOOC completers and 328 (51.0%) MOOC non-completers (see Table 1). Most (87.3%) participants in these two MOOCs reported that they did not enroll in the Signature Track program for these courses. Participant ages at the time of the survey ranged from 19 to 84 years old, with an average of 45.75 years (SD = 12.23). There were more females (68.4%) than males (29.4%), with 2.2% of the respondents not indicating gender. Interestingly, most participants were highly educated, with 92.8% having college degrees.

Table 1

Respondents’ Demographic Information

| Variable | Number | Percent (%) |
| --- | --- | --- |
| MOOC completion status | | |
| Did not complete | 328 | 51.0 |
| Completed | 315 | 49.0 |
| Signature Track enrollment | | |
| Not enrolled | 561 | 87.3 |
| Enrolled | 82 | 12.7 |
| Gender | | |
| Male | 189 | 29.4 |
| Female | 440 | 68.4 |
| Prefer not to say | 14 | 2.2 |
| Age | | |
| 20 or younger | 3 | 0.5 |
| 21-25 | 16 | 2.5 |
| 26-30 | 61 | 9.5 |
| 31-35 | 80 | 12.4 |
| 36-40 | 74 | 11.5 |
| 41-45 | 88 | 13.7 |
| 46-50 | 83 | 12.9 |
| 51-55 | 81 | 12.6 |
| 56-60 | 77 | 12.0 |
| 61-65 | 47 | 7.3 |
| 66-70 | 21 | 3.3 |
| 71-75 | 9 | 1.4 |
| 80 or older | 3 | 0.5 |
| Highest degree or level of education completed | | |
| Some high school, no diploma | 2 | 0.3 |
| High school graduate, diploma or equivalent (e.g., GED) | 12 | 1.9 |
| Some college credit, no degree | 10 | 1.6 |
| Trade/technical/vocational training | 8 | 1.2 |
| Associate degree | 14 | 2.2 |
| Bachelor’s degree | 147 | 22.9 |
| Master’s degree | 318 | 49.5 |
| Professional degree | 54 | 8.4 |
| Doctorate degree | 78 | 12.1 |

The data for this study were collected using a survey composed of the Online Self-Regulated Learning Questionnaire (OSLQ) and an additional open-response item. Six OSLQ subscales, each associated with an SRL subprocess (Barnard, Lan, To, Paton, & Lai, 2009), were used: goal setting, environmental structuring, task strategies, time management, help-seeking, and self-evaluation. The OSLQ is well-validated and has been found to be a reliable instrument for measuring student SRL levels in online learning environments (Barnard et al., 2009; Chang et al., 2015). The reliability of the OSLQ was calculated using Cronbach’s alpha coefficient. The composite coefficient (α = .88) demonstrated that the internal consistency of the scale was acceptable. The Cronbach’s alphas by subscale ranged from .65 to .84, revealing satisfactory discriminating power (see Table 2).

Table 2

Cronbach’s Alpha for Each Subscale

| Dependent variable | α |
| --- | --- |
| Goal setting | .75 |
| Environmental structuring | .84 |
| Task strategies | .65 |
| Time management | .67 |
| Help-seeking | .78 |
| Self-evaluation | .75 |
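For readers less familiar with the statistic, Cronbach’s alpha for a subscale with k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the total scores. The following is a minimal sketch of that calculation using only the Python standard library. The function name and the small response matrix are hypothetical illustrations and are not data or code from this study.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])                      # number of items in the subscale
    items = list(zip(*responses))              # regroup scores item by item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical 5-point Likert responses (4 respondents x 3 items).
demo = [
    [1, 2, 1],
    [2, 3, 3],
    [4, 4, 5],
    [5, 5, 4],
]
alpha = cronbach_alpha(demo)
print(round(alpha, 2))
```

Items that rise and fall together across respondents yield a value close to 1, whereas unrelated items yield a value close to 0.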

In the OSLQ portion of the survey, there were 24 closed-response items, with Likert responses ranging from 1 (strongly disagree) to 5 (strongly agree). The complete instrument is available online (http://digitalstorytelling.coe.uh.edu/MOOCSurvey/OSLQ.pdf). One item, TM3, was slightly modified for this study, from “I prepare my questions before joining in [the] chat room and discussion” to “I prepare my questions before joining in discussion forums.” Scoring the OSLQ involved totaling responses across the items, with higher totals indicating higher levels of learner self-regulation. An open-ended item was added at the end of the survey, asking respondents to describe factors that they believed contributed to their MOOC completion (for MOOC completers) or to their not completing their MOOC (for MOOC non-completers).
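The scoring rule described above can be sketched as follows. This is an illustrative sketch only: the function name is hypothetical, and it assumes that each item ID consists of a subscale prefix followed by an item number (as in GS1 or TM3), so that responses can also be totaled by subscale.

```python
from collections import defaultdict


def score_oslq(responses):
    """Return (overall total, per-subscale totals) for one respondent.

    `responses` maps item IDs such as "GS1" or "TM3" to Likert values 1-5.
    The subscale is assumed to be the alphabetic prefix of the item ID.
    """
    subscale_totals = defaultdict(int)
    for item_id, value in responses.items():
        if not 1 <= value <= 5:
            raise ValueError(f"{item_id}: response {value} is outside 1-5")
        prefix = item_id.rstrip("0123456789")
        subscale_totals[prefix] += value
    return sum(subscale_totals.values()), dict(subscale_totals)


# Hypothetical partial response set for one respondent.
demo = {"GS1": 4, "GS2": 5, "GS3": 3, "TM1": 2, "TM3": 4}
total, by_subscale = score_oslq(demo)
print(total, by_subscale)
```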

A study invitation e-mail was sent to each potential participant that introduced the researchers, described the study purpose and requirements to participate, offered an incentive for study participation, and provided the online survey link. The survey link was unique for each e-mail so that the survey responses could be connected to students’ grade reports. The data collection process took place over a two-week period in February 2017. An e-mail reminder was sent one week after the initial invitation e-mail to those who did not respond to the first invitation.

Data from responses to the OSLQ items were analyzed using a one-way multivariate analysis of variance (MANOVA) to explore possible differences in SRL strategies between MOOC completers and MOOC non-completers. Responses to the open-ended item were analyzed in three phases using a directed qualitative content analysis approach (Elo et al., 2014; Hsieh & Shannon, 2005). In the first phase, an initial codebook was created, based on the 18 SRL subprocesses (Zimmerman, 2013). In the codebook, codes were defined, corresponding SRL phases and areas were identified, and examples from study data were noted. Code definitions were further expanded and refined as data were coded and recoded. The complete definitions are available online (http://digitalstorytelling.coe.uh.edu/MOOCSurvey/definitions.pdf).

In the second phase, the 603 submitted responses were coded by the first and second authors, with one or more codes applied to each response. For example, the response “I enrolled in the courses because I was interested in the topic. Generally, once I start something, I complete it” (Respondent 466) was coded as task interest/value and goal setting. The authors jointly coded 40 responses initially to align their interpretation of the codes and code definitions. They then individually coded the remaining 563 responses. Individual coding was compared, and agreement was observed in 516 of the individually-coded responses (91.65% inter-rater agreement). The researchers then met, discussed the codes, and resolved all differences until 100% agreement was reached. In the third phase, descriptive statistics were calculated for MOOC completers and MOOC non-completers from the coding, and leading areas were identified.
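The inter-rater agreement figure is a simple percentage: 516 agreements out of 563 individually coded responses. The sketch below reproduces that arithmetic and shows one way such agreement could be counted. It assumes that two coders agree on a response only when they applied exactly the same set of codes; the study does not state its matching rule, and the function name and sample codings are hypothetical.

```python
def percent_agreement(codes_a, codes_b):
    """Percentage of responses on which two coders applied identical code sets."""
    if len(codes_a) != len(codes_b):
        raise ValueError("Both coders must code the same number of responses")
    matches = sum(1 for a, b in zip(codes_a, codes_b) if set(a) == set(b))
    return 100 * matches / len(codes_a)


# Agreement reported in the study: 516 of 563 responses.
reported = round(100 * 516 / 563, 2)

# Hypothetical codings of four responses by two coders.
coder_a = [{"task interest/value", "goal setting"}, {"time management"},
           {"self-efficacy"}, {"goal orientation"}]
coder_b = [{"task interest/value", "goal setting"}, {"time management"},
           {"self-efficacy"}, {"outcome expectation"}]
demo = percent_agreement(coder_a, coder_b)
print(reported, demo)
```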

The preliminary investigation detected a few univariate and multivariate outliers on the goal setting and the environmental structuring subscales, as assessed by boxplot and Mahalanobis distance (p < .001), respectively. A comparison of the results of a one-way MANOVA with and without the outliers showed that the goal setting subscale had significant results in both situations, while the environmental structuring subscale had a significant result when the outliers were included. To reduce bias in data analysis, a 5% trimming was applied, which removed the top and bottom 5% of the data in the two variables (Field, 2018).
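As an illustration of the trimming step, the sketch below removes the lowest and highest 5% of the values of a single variable. It is a univariate simplification under stated assumptions: the function name and the scores are hypothetical, and the exact procedure used to apply trimming across the two subscales in this study is not reproduced here.

```python
def trim_extremes(values, percent=5):
    """Drop the lowest and highest `percent` percent of the values."""
    ordered = sorted(values)
    k = len(ordered) * percent // 100   # number of cases cut from each tail
    return ordered[k:len(ordered) - k]


# Hypothetical subscale means for 20 respondents, with one extreme value per tail.
scores = [1.0, 2.8, 3.0, 3.0, 3.2, 3.2, 3.4, 3.4, 3.6, 3.6,
          3.8, 3.8, 4.0, 4.0, 4.2, 4.2, 4.4, 4.4, 4.6, 5.0]
trimmed = trim_extremes(scores)
print(len(trimmed), min(trimmed), max(trimmed))
```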

Subscale distribution curves revealed slight skewness and kurtosis for some of the dependent variables. However, since MANOVA is considered to be fairly robust to deviations from normality, it was decided to proceed with the data analysis. The scatterplot matrices provided evidence for meeting the assumption of linear relationships among the dependent variables. There was no multicollinearity, as assessed by Spearman’s rho; the weakest correlation was between environmental structuring and self-evaluation (rs = .166, p < .001), while the strongest correlation was between help-seeking and self-evaluation (rs = .689, p < .001).

The assumption of homogeneity of variances was met for each of the dependent variables, as assessed by Levene’s test of equality of variances (p > .05). Pillai’s Trace showed that there was a statistically significant difference between the MOOC completer and MOOC non-completer groups in regard to SRL, F(6, 570) = 4.875, p < .001; partial η² = .049; observed power = .992. Further tests of between-subjects effects (see Table 3) found a significant difference between MOOC completers and MOOC non-completers on the goal setting subscale, F(1, 575) = 22.844, p < .001; partial η² = .038; observed power = .998. The other five subscales did not show a significant difference between the two groups (p > .05).

Table 3

Results of Tests of Between-Subjects Effects

| Dependent variable | Mean square | F | Sig. | Partial eta squared | Observed power |
| --- | --- | --- | --- | --- | --- |
| Goal setting | 11.856 | 22.844 | 0.000 | 0.038 | 0.998 |
| Environmental structuring | 0.450 | 1.207 | 0.272 | 0.002 | 0.195 |
| Task strategies | 0.124 | 0.185 | 0.667 | 0.000 | 0.071 |
| Time management | 0.030 | 0.033 | 0.855 | 0.000 | 0.054 |
| Help-seeking | 0.690 | 0.796 | 0.373 | 0.001 | 0.145 |
| Self-evaluation | 0.734 | 1.033 | 0.310 | 0.002 | 0.174 |

Note. Hypothesis df = 1, and error df = 575.
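The effect sizes reported for these tests can be checked against the F statistics and degrees of freedom. For a univariate F test, partial eta squared equals (F × df effect) / (F × df effect + df error); the same identity applies to the multivariate test when only two groups are compared. The sketch below applies that identity to the reported values. The function name is an illustrative choice, and the inputs are taken directly from the results above.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return f_value * df_effect / (f_value * df_effect + df_error)


# Goal setting subscale: F(1, 575) = 22.844
goal_setting = round(partial_eta_squared(22.844, 1, 575), 3)

# Multivariate test (Pillai's Trace): F(6, 570) = 4.875
multivariate = round(partial_eta_squared(4.875, 6, 570), 3)

print(goal_setting, multivariate)
```

Both results round to the reported values of .038 and .049, respectively.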

Following the findings from the MANOVA, independent-samples t-tests were run on each of the five items in the goal setting subscale (GS1-GS5) to identify those that generated different responses between MOOC completers and MOOC non-completers. The significance level was adjusted using the Bonferroni correction to reduce the risk of a type I error (Field, 2018), and the corrected significance criterion was .01. The items that showed a significant difference between the two groups were GS1, GS2, GS3, and GS4 (p < .01). See Table 4 for the complete results.

Table 4

Results of Multiple T-Tests for the Items in Goal Setting and Environment Structuring

| Item | Variances | Levene’s test: F | Levene’s test: Sig. | t | df | Sig. (2-tailed) | Mean difference | Std. error difference | 95% CI of the difference: Lower | 95% CI of the difference: Upper |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| GS1 | Equal variances assumed | 1.583 | .209 | -3.060 | 576 | .002 | -.228 | .074 | -.374 | -.081 |
| | Equal variances not assumed | | | -3.061 | 575.768 | .002 | -.228 | .074 | -.374 | -.082 |
| GS2 | Equal variances assumed | 9.783 | .002 | -3.046 | 577 | .002 | -.251 | .082 | -.413 | -.089 |
| | Equal variances not assumed | | | -3.050 | 565.440 | .002 | -.251 | .082 | -.413 | -.089 |
| GS3 | Equal variances assumed | 1.540 | .215 | -5.331 | 577 | .000 | -.410 | .077 | -.561 | -.259 |
| | Equal variances not assumed | | | -5.338 | 566.789 | .000 | -.410 | .077 | -.560 | -.259 |
| GS4 | Equal variances assumed | 8.013 | .005 | -4.202 | 577 | .000 | -.341 | .081 | -.500 | -.181 |
| | Equal variances not assumed | | | -4.206 | 570.411 | .000 | -.341 | .081 | -.500 | -.182 |
| GS5 | Equal variances assumed | .017 | .897 | -2.004 | 576 | .046 | -.217 | .108 | -.429 | -.004 |
| | Equal variances not assumed | | | -2.003 | 575.260 | .046 | -.217 | .108 | -.429 | -.004 |

Note. 0.01 is the criterion for significance (as calculated by dividing 0.05 by 5 using the Bonferroni correction method).
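The Bonferroni adjustment used for the item-level tests can be sketched as follows. The function name is an illustrative choice; the p-values are the two-tailed values reported for GS1 through GS5, with .000 standing for p < .001.

```python
def bonferroni_alpha(family_alpha, n_tests):
    """Per-test significance criterion under the Bonferroni correction."""
    return family_alpha / n_tests


# Two-tailed p-values as reported for the goal setting items (.000 means p < .001).
p_values = {"GS1": 0.002, "GS2": 0.002, "GS3": 0.000, "GS4": 0.000, "GS5": 0.046}

criterion = bonferroni_alpha(0.05, len(p_values))
significant = [item for item, p in p_values.items() if p < criterion]
print(round(criterion, 2), significant)
```

GS5 falls below the conventional .05 level but not below the corrected criterion, so it is not counted as significant.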

Because research question 2 relates to the SRL strategies that students identified as contributing to their successful MOOC completion, the reported results and associated discussion section of this article will focus mainly on the principal themes from the analysis of MOOC completer responses. In the coding of the open-ended item, there were 464 codes applied to the 306 MOOC completer responses. Comparisons of codes are provided in Table 5.

With 175 (37.72%) entries, task interest/value was most frequently mentioned as a critical contributor to course completion. The causal attribution subprocess was the second leading theme from the responses (14.66% of entries). Another theme was time management, accounting for 12.72% of the entries, which included strategies related to allocating study time, creating a study schedule, and ensuring time availability. Self-efficacy was coded in 11.42% of the entries, including any mentions of motivation, self-motivation, self-discipline, commitment, and will. The final leading theme that emerged was goal orientation (5.82% of entries). Most of these statements were related to learners’ desire to earn certificates of completion and commendations from their peers or organizations.

Table 5

The Frequency of SRL Codes for MOOC Completers

| Subprocess | Frequency | % |
| --- | --- | --- |
| Task interest/value | 175 | 37.72 |
| Causal attribution | 68 | 14.66 |
| Time management | 59 | 12.72 |
| Self-efficacy | 53 | 11.42 |
| Goal orientation | 27 | 5.82 |
| Goal setting | 20 | 4.31 |
| Help-seeking | 18 | 3.88 |
| Outcome expectation | 14 | 3.02 |
| Self-instruction | 12 | 2.59 |
| Self-satisfaction/affect | 9 | 1.94 |
| Environmental structuring | 3 | 0.65 |
| Task strategies | 3 | 0.65 |
| Self-evaluation | 2 | 0.43 |
| Attention focusing | 1 | 0.22 |
| Adaptive/defensive | 0 | 0.00 |
| Imagery | 0 | 0.00 |
| Metacognitive monitoring | 0 | 0.00 |
| Self-recording | 0 | 0.00 |
| Total | 464 | 100 |
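The percentages in Table 5 are each code’s share of the 464 applied codes. A minimal sketch of that calculation, using the frequencies from the table (variable names are illustrative), is:

```python
# Code frequencies for MOOC completers, as listed in Table 5.
code_counts = {
    "Task interest/value": 175, "Causal attribution": 68, "Time management": 59,
    "Self-efficacy": 53, "Goal orientation": 27, "Goal setting": 20,
    "Help-seeking": 18, "Outcome expectation": 14, "Self-instruction": 12,
    "Self-satisfaction/affect": 9, "Environmental structuring": 3,
    "Task strategies": 3, "Self-evaluation": 2, "Attention focusing": 1,
    "Adaptive/defensive": 0, "Imagery": 0, "Metacognitive monitoring": 0,
    "Self-recording": 0,
}

total_codes = sum(code_counts.values())

# Share of all applied codes, rounded to two decimals as in the table.
shares = {code: round(100 * n / total_codes, 2) for code, n in code_counts.items()}

# The five leading themes discussed in the text.
leading = sorted(code_counts, key=code_counts.get, reverse=True)[:5]
print(total_codes, leading)
```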

Certainly, SRL strategies are used by every learner to some extent whether consciously or unconsciously. However, what can set high performing students apart from low performers is their awareness of SRL and the use of these strategies in their learning process (Zimmerman, 2013). Analysis from the OSLQ data showed that MOOC completers reported significantly higher use of the goal setting SRL subprocess than did MOOC non-completers. MOOC completers scored higher in particular aspects of goal setting, such as establishing standards for the assignments, setting short-term goals (i.e., daily or weekly) and long-term goals (i.e., monthly or for the semester), and self-monitoring to maintain what they perceived as a high standard for learning in their MOOCs. This finding is consistent with prior studies that found goal setting to be a critical SRL subprocess and a significant predictor of learning success in MOOCs (Kizilcec et al., 2017; Littlejohn et al., 2016).

The statistical analyses also showed that MOOC completers did not differ statistically from MOOC non-completers on the other five subprocesses. A possible explanation for this could relate to how the MOOCs were structured. In both MOOCs, the tasks were very procedural, and participants were given step-by-step instructions to complete the assignments. Also, the assignments required students to self-reflect, provide reviews to other students’ works, and actively participate in the discussion forums. Having such aspects of the courses structured for learners could have minimized the need for them to self-initiate the strategies.

It should be noted, though, that this finding does not imply that goal setting in isolation will directly result in learner performance gains. Qualitative content analysis of the open-ended responses submitted by MOOC completers also identified five key SRL subprocesses: task interest/value, causal attribution, time management, self-efficacy, and goal orientation. It was found that MOOC completers often applied multiple SRL subprocesses to improve their learning experience in the MOOC environment. These five SRL subprocesses will each be further discussed in turn.

The task interest/value subprocess relates to why the respondents registered for the MOOCs in the first place. This subprocess, which is composed of several main factors including importance, interest, and relevance or usefulness of the skills (Eccles et al., 1983), is closely related to goal setting. Learners in this study who considered that the topics were important or relevant to their careers were more likely to put forth their best efforts to achieve their learning goals, as illustrated by Respondent 231’s response, “[The] MOOC was important to me because of the content, which is related to my occupation.” Learners who consider the topics relevant to their daily lives or feel that the learning tasks are interesting will typically display higher learning performance (Pintrich, 2004).

The causal attribution subprocess refers to students’ perceptions of the causes of their performance during a learning process (Zimmerman, 2013). Learners may perceive better learning performance when the course design is congruent with their learning preferences. For instance, Respondent 502 stated that “the interface, content design and the flow of content made it interesting and relevant to what we needed to know.” Similar findings in relation to course design and course completion are reported in Hone and El-Said (2016).

Though no significant difference was evident between MOOC completers and MOOC non-completers on the OSLQ for the time management subprocess, it emerged as a theme from the open-ended responses. For example, Respondent 209 noted that “having a clear idea of what the required tasks were, and setting aside time to get them done” were the key contributors to the learner’s MOOC completion. Broadbent and Poon (2015) similarly had mixed findings in their systematic review, wherein five of the seven studies they analyzed found a significant positive correlation between time management and student performance in online learning and two found no relationship.

Self-efficacy, the subprocess that refers to learners’ belief in their ability to complete a learning task, is also related to goal setting (Bandura, 1997). Respondent 595 illustrated the relationship well:

The fact that I had over one year of experience in online learning probably helped tremendously. Aside from earning my Master’s degree online, I am also a doctoral candidate for EdD, Curriculum and Instruction, and have specialized in distance education, therefore giving me a huge advantage over other learners enrolled in either of these MOOCs.

Learners with positive prior experiences may tend to have higher self-appraisals of their abilities and higher levels of self-efficacy. These learners are generally committed and motivated to complete the goals that they have set. The theme of higher self-efficacy among MOOC completers in this study is congruent with other MOOC studies (e.g., Barak, Watted, & Haick, 2016; Wang & Baker, 2018).

The goal orientation subprocess refers to learner orientation preferences in achieving the goals that they have set. Dweck (1986) suggested that this subprocess has two dimensions: (a) a learning dimension that desires to improve one’s competence by mastering new skills, and (b) a performance dimension that seeks to demonstrate one’s competence to others to gain favorable judgments or avoid negative judgments from others. In MOOCs, orienting to goals could involve activities such as working toward obtaining course certificates of completion in order to earn recognition or career advancement, such as described by Respondent 198 who said that “our workplace was encouraging us to do a MOOC related to education and gave a monetary incentive for it.” The emergence of goal orientation as a key theme in the qualitative data concurs with a recent study by Wang and Baker (2018).

The findings from this study present several implications for MOOC instructors who create and develop MOOCs, and for MOOC platform providers that partner with universities and other organizations. The results from this study showed that the goal setting subprocess was significant to MOOC learner success. Nevertheless, the sequence of actions involved from setting a learning goal to achieving it is complex, and involves other SRL subprocesses as well.

Instructors and instructional designers may want to consider elements of course design that could strategically help students in achieving their learning goals. Setting learning goals could be supported, for example, by providing a course outline detailing the course description, assessment overview, and the expected time commitments for course activities prior to the beginning of the course. The course outline could help learners determine the importance of the course and develop better strategies for achieving their learning goals if they decide to enroll in the MOOC. The time commitment details could be reinforced at course launch by providing time estimates for the activities scheduled for each week of the course. These time estimates can be generated from user data gathered during the first course offering and refined in subsequent implementations of the course, based on calculated averages of the actual time prior students spent on the course activities (Nawrot & Doucet, 2014).
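One way such time estimates might be derived is by averaging the time that prior students spent on each activity. The sketch below is illustrative only: the function name, the log format, and the activity names are hypothetical and do not reflect any actual MOOC platform’s data export.

```python
from collections import defaultdict
from statistics import mean


def estimate_minutes(time_logs):
    """Average minutes spent per activity, from (activity, minutes) records."""
    by_activity = defaultdict(list)
    for activity, minutes in time_logs:
        by_activity[activity].append(minutes)
    return {activity: round(mean(values)) for activity, values in by_activity.items()}


# Hypothetical records from a prior course offering.
logs = [
    ("Week 1 video", 40), ("Week 1 video", 50),
    ("Week 1 quiz", 20), ("Week 1 quiz", 30), ("Week 1 quiz", 25),
]
estimates = estimate_minutes(logs)
print(estimates)
```

Records from later offerings could be appended to the same log so that the averages are updated over time.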

Self-guided pre-assessment prompts regarding student readiness to learn in the MOOC environment could also be provided in the form of a course readiness checklist before students begin a course. Such a checklist would provide potential online learners with pre-course prompting and feedback intended to help them identify areas in their study habits and learning spaces that they may need to modify or intentionally address in order to be successful in the MOOC format. This type of pre-assessment has been used in online courses for over a decade (e.g., the Online Readiness Assessment; Williams, n.d.), and it could be a worthwhile strategy to apply to the MOOC learning environment as well. Pre-assessment items related to learner readiness for MOOC-formatted instruction would prompt students to consider areas such as learning preferences, study skills, and technology skills and access. By working through the pre-assessment prior to taking a MOOC, students would consider these and other areas when planning their course-related learning goals. Further, pre-assessment responses could generate automated feedback such that students scoring lower in familiarity with course topics could be directed to background material that would address their prior knowledge gaps. Building in such support for students can be worthwhile, as students who have sufficient pre-course topic familiarity tend to have higher levels of confidence and MOOC engagement (Littlejohn et al., 2016).
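The automated feedback described above could, for instance, be sketched as a simple rule that maps low familiarity ratings to background material. The rating scale, the cut-off value, the topic names, and the resource titles below are all assumptions made for illustration, not features of the courses in this study.

```python
def readiness_feedback(topic_scores, resources, threshold=3):
    """Return suggested background material for topics rated below threshold.

    topic_scores: dict mapping topic -> self-rated familiarity (assumed 1-5).
    resources: dict mapping topic -> suggested background material.
    threshold: hypothetical cut-off; ratings below it trigger a suggestion.
    """
    return {
        topic: resources[topic]
        for topic, score in topic_scores.items()
        if score < threshold and topic in resources
    }


# Made-up responses and resources, for illustration only
responses = {"digital storytelling": 2, "video editing": 4, "copyright basics": 1}
background = {
    "digital storytelling": "Introductory reading on digital storytelling",
    "video editing": "Video editing tutorial",
    "copyright basics": "Overview of copyright and fair use",
}
suggestions = readiness_feedback(responses, background)
```

In this example, the learner would be pointed to background material on digital storytelling and copyright basics, but not on video editing, for which the self-rating met the cut-off.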

Another course design recommendation stemming from this study is to inform learners about how assignments will be evaluated. For open-ended projects, such as creating digital storytelling videos, examples of completed assignments could also be provided to illustrate expectations regarding aspects of breadth, depth, and quality. Having this information may help students better understand what is expected from them for these assignments and enable them to set short-term goals and plan their next steps to achieve those goals.

In addition to providing information about course details and expectations, and guiding students toward setting goals, incorporating reminders into the MOOC learning platform can spur students to stay engaged in their learning (Cleary, 2018). Reminders could take the form of quick tips that pop up each time a student logs into the course to provide suggestions and advice related to study goals (see Figure 1). Reminders could also be sent through notifications, messages, and e-mail.

Figure 1. An illustration of a quick tip pop-up providing a suggestion related to study goals.

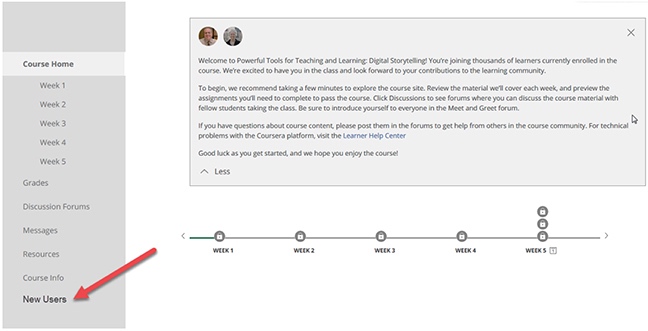

Consideration can also be given to how new MOOC learners may experience challenges due to unfamiliarity with nuances of the MOOC learning platform. While platforms typically offer help centers that provide answers to technical platform questions, they sometimes require learners to navigate away from the course sites. Furthermore, help center information can be overwhelming, as it aims to provide a knowledge base for all users on the platform. Adding a New Users tab next to the course content could support new learners within the course site (see Figure 2). In addition to information on the technical aspects of the MOOC platform, the tab could also provide answers to frequently asked questions, as well as recommendations regarding study strategies from instructors and previously successful learners.

Figure 2. Adding a new users tab to help new students get adjusted to the MOOC.

While this study offers insights into SRL subprocesses that can contribute to MOOC completion, there are some limitations in the generalizability of the findings. Participants from this study self-identified as MOOC completers. Thus, their perceptions concerning course completion may not reflect whether they actually received certificates of completion. Future studies could involve participant recruitment based on course activity data to identify MOOC completers. Participants in this study were from two MOOCs on educational technology topics. Hence, further research is needed to determine if the themes that emerged in this study are characteristic of MOOC completion in other subject areas. Replicating this study with varied student populations could contribute to a greater understanding of the observed themes.

Course characteristics, such as length and assignment difficulty, may also have affected student performance in this study. Future research could investigate how students engage in SRL in courses of different lengths and difficulty levels, as well as courses offered through various course platforms. The participants in the study tended to be highly educated, which is typical in MOOCs (Hansen & Reich, 2015). However, this characteristic may limit the generalization of the findings for MOOCs that target learners with limited educational backgrounds.

The two MOOCs in this study were offered through the Coursera platform. As with any learning management system or learning delivery mechanism, platform characteristics could have impacted learner perceptions regarding course design. The study also utilized learner self-report data that relies on the participants’ views and recollections of their applications of SRL subprocesses. Future research could involve observations and gathering evidence of learner applications of SRL subprocesses by MOOC completers.

Since the OSLQ was not initially developed for the MOOC setting, further studies are needed to explore the validity and reliability of the OSLQ for assessing SRL in a MOOC. There is also an opportunity for the development of MOOC-oriented SRL instruments that could identify additional SRL subprocesses specific to learning in MOOCs. MOOC-specific instruments could enrich understanding of how SRL contributes to MOOC completion and provide insights into how SRL is applicable in MOOC learning environments.

Prior research has identified the importance of SRL in student learning. The present study extends this research to highlight the role of goal setting, specifically within the context of MOOCs. It further illuminates particular aspects of this SRL subprocess that course instructors and designers could target to support learners. Goal setting is complex and can involve other SRL subprocesses, such as task interest/value, causal attribution, time management, self-efficacy, and goal orientation. By having early access to information about course content and expectations, learners can proactively decide whether the course topics and activities align with their interests and priorities. Time commitment details and pre-assessment feedback can be used to inform and prompt students to set specific, personalized short-term and long-term course goals. Setting such goals can position MOOC students for better performance and help them to identify, work toward, and ultimately achieve their learning goals.

Agarwal, A. (2015, April 22). Reimagine freshman year with the global freshman academy [Web log post]. Retrieved from http://blog.edx.org/reimagine-freshman-year-global-freshman/

Allen, I., Seaman, J., Poulin, R., & Straut, T. T. (2016). Online report card: Tracking online learning in the United States. Retrieved from http://onlinelearningsurvey.com/reports/onlinereportcard.pdf

Bandura, A. (1997). Self-efficacy: The exercise of control. New York, NY: W. H. Freeman/Times Books/Henry Holt & Co.

Barak, M., Watted, A., & Haick, H. (2016). Motivation to learn in massive open online courses: Examining aspects of language and social engagement. Computers & Education, 94, 49-60. doi: 10.1016/j.compedu.2015.11.010

Barnard, L., Lan, W. Y., To, Y. M., Paton, V. O., & Lai, S.-L. (2009). Measuring self-regulation in online and blended learning environments. Internet and Higher Education, 12(1), 1-6. doi: 10.1016/j.iheduc.2008.10.005

Breslow, L., Pritchard, D. E., DeBoer, J., Stump, G. S., Ho, A. D., & Seaton, D. T. (2013). Studying learning in the worldwide classroom: Research into edX’s first MOOC. Research & Practice in Assessment, 8(1), 13-25. Retrieved from http://www.rpajournal.com/dev/wp-content/uploads/2013/05/SF2.pdf

Broadbent, J., & Poon, W. L. (2015). Self-regulated learning strategies and academic achievement in online higher education learning environments: A systematic review. Internet and Higher Education, 27, 1-13. doi: 10.1016/j.iheduc.2015.04.007

Chang, H.-Y., Wang, C.-Y., Lee, M.-H., Wu, H.-K., Liang, J.-C., Lee, S. W.-Y., ... Tsai, C.-C. (2015). A review of features of technology-supported learning environments based on participants’ perceptions. Computers in Human Behavior, 53, 223-237. doi: 10.1016/j.chb.2015.06.042

Chuang, I., & Ho, A. (2016, December 23). HarvardX and MITx: Four years of open online courses - Fall 2012-Summer 2016. doi: 10.2139/ssrn.2889436

Cleary, T. J. (2018). The self-regulated learning guide: Teaching students to think in the language of strategies. New York, NY: Routledge.

DeBoer, J., Ho, A. D., Stump, G. S., & Breslow, L. (2014). Changing “course”: Reconceptualizing educational variables for massive open online courses. Educational Researcher, 43(2), 74-84. doi: 10.3102/0013189X14523038

Dweck, C. S. (1986). Motivational processes affecting learning. American Psychologist, 41(10), 1040-1048. doi: 10.1037/0003-066X.41.10.1040

Eccles, J., Adler, T. F., Futterman, R., Goff, S. B., Kaczala, C. M., Meece, J. L., & Midgley, C. (1983). Expectancies, values, and academic behaviors. In J. T. Spence (Ed.), Achievement and achievement motivation (pp. 75-146). San Francisco, CA: Freeman.

Elo, S., Kääriäinen, M., Kanste, O., Pölkki, T., Utriainen, K., & Kyngäs, H. (2014). Qualitative content analysis: A focus on trustworthiness. SAGE Open, 4, 1-10. doi: 10.1177/2158244014522633

Field, A. (2018). Discovering statistics using IBM SPSS statistics (5th ed.). Thousand Oaks, CA: SAGE.

Gillani, N., & Eynon, R. (2014). Communication patterns in massively open online courses. Internet and Higher Education, 23, 18-26. doi: 10.1016/j.iheduc.2014.05.004

Gregori, E. B., Zhang, J., Galván-Fernández, C., & Fernández-Navarro, F. d. A. (2018). Learner support in MOOCs: Identifying variables linked to completion. Computers & Education, 122, 153-168. doi: 10.1016/j.compedu.2018.03.014

Hansen, J. D., & Reich, J. (2015). Democratizing education? Examining access and usage patterns in massive open online courses. Science, 350(6265), 1245-1248.

Hone, K. S., & El Said, G. R. (2016). Exploring the factors affecting MOOC retention: A survey study. Computers & Education, 98, 157-168. doi: 10.1016/j.compedu.2016.03.016

Hsieh, H.-F., & Shannon, S. E. (2005). Three approaches to qualitative content analysis. Qualitative Health Research, 15(9), 1277-1288. doi: 10.1177/1049732305276687

Jordan, K. (2014). Initial trends in enrolment and completion of massive open online courses. International Review of Research in Open and Distributed Learning, 15(1), 133-160. doi: 10.19173/irrodl.v15i1.1651

Jung, Y., & Lee, J. (2018). Learning engagement and persistence in massive open online courses (MOOCs). Computers & Education, 122, 9-22. doi: 10.1016/j.compedu.2018.02.013

Kizilcec, R. F., Pérez-Sanagustín, M., & Maldonado, J. J. (2017). Self-regulated learning strategies predict learner behavior and goal attainment in massive open online courses. Computers & Education, 104, 18-33. doi: 10.1016/j.compedu.2016.10.001

Knox, J. (2013). Digital culture clash: “Massive” education in the e-learning and digital cultures MOOC. Distance Education, 35(2), 164-177. doi: 10.1080/01587919.2014.917704

Littlejohn, A., Hood, N., Milligan, C., & Mustain, P. (2016). Learning in MOOCs: Motivations and self-regulated learning in MOOCs. Internet and Higher Education, 29, 40-48. doi: 10.1016/j.iheduc.2015.12.003

McAuley, A., Stewart, B., Siemens, G., & Cormier, D. (2010). The MOOC model for digital practice. Retrieved from http://www.elearnspace.org/Articles/MOOC_Final.pdf

Milligan, C., & Littlejohn, A. (2016). How health professionals regulate their learning in massive open online courses. Internet and Higher Education, 31, 113-121. doi: 10.1016/j.iheduc.2016.07.005

Nawrot, I., & Doucet, A. (2014). Building engagement for MOOC students: Introducing support for time management on online learning platforms. In C.-W. Chung, A. Broder, K. Shim, & T. Suel (Eds.), Proceedings of the companion publication of the 23rd international conference on world wide web (pp. 1077-1082). doi: 10.1145/2567948.2580054

Pintrich, P. R. (2004). A conceptual framework for assessing motivation and self-regulated learning in college students. Educational Psychology Review, 16(4), 385-407. doi: 10.1007/s10648-004-0006-x

Picciano, A. G. (2002). Beyond student perceptions: Issues of interaction, presence, and performance in an online course. Journal of Asynchronous Learning Networks, 6(1), 21-40. Retrieved from http://www.anitacrawley.net/Resources/Articles/Picciano2002.pdf

Reich, J. (2014, December). MOOC completion and retention in the context of student intent. EDUCAUSE Review Online. Retrieved from http://er.educause.edu/articles/2014/12/mooc-completion-and-retention-in-the-context-of-student-intent

Robin, B. (2008). Digital storytelling: A powerful technology tool for the 21st century classroom. Theory into Practice, 47(3), 220-228. doi: 10.1080/00405840802153916

Schunk, D. H. (2001). Social cognitive theory and self-regulated learning. In B. J. Zimmerman & D. H. Schunk (Eds.), Self-regulated learning and academic achievement (2nd ed., pp. 125-152). Mahwah, NJ: Lawrence Erlbaum.

Shah, D. (2018, January 18). By the numbers: MOOCs in 2017 [Web log post]. Retrieved from https://www.class-central.com/report/mooc-stats-2017/

Straumsheim, C. (2016, April 27). Georgia Tech’s next steps [Web log post]. Retrieved from https://www.insidehighered.com/news/2016/04/27/georgia-tech-plans-next-steps-online-masters-degree-computer-science

Tsai, Y.-H., Lin, C.-H., Hong, J.-C., & Tai, K.-H. (2018). The effects of metacognition on online learning interest and continuance to learn with MOOCs. Computers & Education, 121, 18-29. doi: 10.1016/j.compedu.2018.02.011

Wang, Y., & Baker, R. (2018). Grit and intention: Why do learners complete MOOCs? International Review of Research in Open and Distributed Learning, 19(3), 21-42. doi: 10.19173/irrodl.v19i3.3393

Watted, A., & Barak, M. (2018). Motivating factors of MOOC completers: Comparing between university-affiliated students and general participants. Internet and Higher Education, 37, 11-20. doi: 10.1016/j.iheduc.2017.12.001

Williams, V. (n.d.). Online readiness assessment [Web survey]. Retrieved from https://pennstate.qualtrics.com/jfe/form/SV_7QCNUPsyH9f012B?s=246aa3a5c4b64bb386543eab834f8e75&Q_JFE=qdg

Yuan, L., & Powell, S. (2013). MOOCs and open education: Implications for higher education (White Paper Serial No. 2013: WP01). Boston, MA: CETIS. doi: 10.13140/2.1.5072.8320

Zimmerman, B. J. (1989). A social cognitive view of self-regulated academic learning. Journal of Educational Psychology, 81(3), 329-339. doi: 10.1037/0022-0663.81.3.329

Zimmerman, B. J. (2013). From cognitive modeling to self-regulation: A social cognitive career path. Educational Psychologist, 48(3), 135-147. doi: 10.1080/00461520.2013.794676

Goal Setting and MOOC Completion: A Study on the Role of Self-Regulated Learning in Student Performance in Massive Open Online Courses by Erwin Handoko, Susie L. Gronseth, Sara G. McNeil, Curtis J. Bonk, and Bernard R. Robin is licensed under a Creative Commons Attribution 4.0 International License.