Volume 20, Number 1

Donggil Song¹, Marilyn Rice¹, and Eun Young Oh²

¹Sam Houston State University, Huntsville, TX, USA; ²Seoul National University, Seoul, South Korea

Online learning environments can be understood as a multifaceted phenomenon shaped by different aspects of learner participation, including synchronous and asynchronous interactions. The aim of this study was to investigate learners' participation in online courses and their synchronous interaction with a conversational virtual agent, to examine how these relate to learner performance, and to identify the factors underlying participation and interaction. To examine learner participation, we collected learning management system (LMS) log data that included the frequency and length of course access, discussion board postings, and final grades. To examine synchronous learner interaction, we collected learners' conversation logs from the conversational agent. We calculated the quantity and quality of both discussion postings and conversations with the agent. The results showed that the frequency and length of course access, the quantity and quality of discussion postings, and the quality of conversations with the agent were significantly associated with learner achievement. This study also identified two factors that comprise online learning participation and interaction: interaction quality and LMS-oriented interaction.

Keywords: online learning, learner participation, online interaction, conversational agent

Enrollment in online courses has increased sharply, particularly in higher education (Seaman, Allen, & Seaman, 2018), boosting educational researchers' interest in online learning. Researchers in this field are redefining our understanding of presence in light of individuals' ability to interact extensively online with learning content, instructors, peers, and the learning environment. At the same time, they acknowledge major challenges, such as low levels of learner performance, passive participation, and higher attrition rates (Levy, 2007; Stoessel, Ihme, Barbarino, Fisseler, & Stürmer, 2015). In a study comparing face-to-face and online courses in higher education, on average 10% of online learners failed their courses, whereas only 4% of face-to-face learners did (Ni, 2013). Thus, the expansion of distance education brings both benefits and drawbacks.

Low learner participation is one of the most significant issues in online education. One possible cause is poorly designed interaction opportunities for learners. Research has shown that online learning can be as effective as face-to-face instruction, but only if learners are provided with well-designed interaction activities (Hawkins, Graham, Sudweeks, & Barbour, 2013; Joksimović, Gašević, Kovanović, Riecke, & Hatala, 2015; Picciano, 2002). Croxton (2014) found that purposefully designed, engaging interaction tasks played a significant role in learner persistence in online courses. Therefore, it is imperative to design online learning environments that foster meaningful interactions for learners (Bettinger, Liu, & Loeb, 2016; Goggins & Xing, 2016; Hrastinski, 2008).

One challenge in encouraging learner participation through purposeful and engaging interactions is that current online learning activities are mostly designed to be asynchronous. In a typical online course, it is difficult for an instructor to create positive interaction experiences for learners, because such experiences require immediate, high-quality feedback from the instructor. In asynchronous environments, it is also difficult to implement the kinds of seamless, continuous learning activities that would help learners carry out real-world projects (Boling, Hough, Krinsky, Saleem, & Stevens, 2012). Although online courses can use synchronous activities such as online conferencing, there are concerns that synchronous meetings could diminish one of the major benefits of open and distributed learning: that learners can learn at any time (Song & Lee, 2014). Accordingly, much of the research in online learning has focused on learners' participation in asynchronous interaction activities, while studies of learners' synchronous interaction behaviors in distributed learning environments are lacking.

A number of researchers have reported that learners' active participation in online courses is associated with higher levels of learner performance and higher retention rates (Bettinger et al., 2016; Goggins & Xing, 2016; Hrastinski, 2008; Stoessel et al., 2015). Michinov, Brunot, Le Bohec, Juhel, and Delaval (2011) found that learner participation levels, measured by the number of messages learners posted to discussion forums, mediated the relationship between learners' procrastination and academic achievement. The researchers suggested that encouraging learner participation leads to increased performance among online learners, especially those who tend to procrastinate. Bettinger et al. (2016) also examined the effects of learners' participation on their online course performance and persistence; more active participation in discussion forums was associated with higher course performance and lower dropout rates in the following academic term. Learners' active participation can therefore be considered a key factor in learning success in online courses.

With respect to learner participation, note that participation is intertwined with interaction. Generally, learner participation refers to "a process of taking part and also to the relations with others that reflect this process" (Wenger, 1998, p. 55). This broad definition encompasses two types of learner activity: behavior (i.e., "a process of taking part," such as submitting an assignment or reading an assigned article) and interaction (i.e., "the relations with others," such as chatting or having a discussion with peers). In this research area, the concept of learner behavior as a "process of taking part" has been used as a narrower definition of learner participation, rather than a broader definition that includes interaction. At the same time, several studies have shown that encouraging learners' active participation by providing more interaction opportunities is an effective approach to promoting success in online courses (Croxton, 2014; Hawkins et al., 2013; Joksimović et al., 2015; Picciano, 2002; Wu, Yen, & Marek, 2011). It seems that interaction is a factor that contributes to participation.

It should be noted that not all interaction activities promote participation and performance. Sabry and Baldwin (2003) explored the relationships between learners' preferences and their online interactions with information, with the instructor, and with other learners. One hundred eighty-nine undergraduate and graduate students completed a questionnaire about their online learning interaction experiences. Learners were found to have different perspectives on, and preferences for, online interactions. Specifically, in terms of frequency of use and perceived usefulness, learners in their study interacted with information more often than with their instructor or other learners. Similarly, the effects of interaction on online learners' performance might depend on the content of the interactions. Kang and Im (2013) investigated learner interactions and perceived learning in an online environment. Six hundred fifty-four undergraduate students responded to a survey about their interactions with their instructor and their perceived performance. Kang and Im's exploratory factor analysis showed that instruction-related interaction factors had more predictive power for perceived performance than non-instructional interaction factors (e.g., social intimacy, social exchange of personal information). Thus, it seems that instructional content-related interaction has a stronger effect on learner performance than other types of interaction. Still, further research is required to identify different types of interaction and their roles in online learning.

In previous studies (Goggins & Xing, 2016; Swan, 2001), the quantity of interaction has been considered a significant predictor of learning outcomes. Goggins and Xing (2016) examined learners' asynchronous interaction activities in a discussion forum in an online graduate course. The quantity of learner participation in the discussion (measured by the number of posts both written and read) was significantly correlated with achievement. In an investigation of 1,406 undergraduate students' perceptions of their online learning experience, Swan (2001) also found that students who were more active in online courses, reporting higher levels of personal activity including higher perceived levels of interaction with the instructor and peers, also reported higher levels of satisfaction and perceived learning and earned higher course grades. Swan found that frequent interaction with course materials was one of the most important aspects of online learning. Therefore, the amount of interaction might promote the achievement of learning outcomes.

Asynchronous and synchronous interaction. Along with the quantity and content of interaction, the synchronicity of interaction has been discussed in previous studies (Baker, 2010; Johnson, 2006; Swan, 2001). Asynchronous interactions benefit learners because they offer time to find more learning resources, speculate about the topic, reflect on their learning, and elaborate their own knowledge (Johnson, 2006). Online discussion forums give learners opportunities to reflect on peers' and instructors' contributions and to consider their own arguments before sharing them. On the other hand, asynchronous interactions often lack timeliness or immediacy. In learners' interactions with instructors, timely and immediate responses can reduce the psychological distance between them and promote learner achievement in online learning environments (Swan, 2001). Baker (2010) investigated undergraduate and graduate students' perceptions of affect, cognition, motivation, and their instructors' immediacy and presence in online courses. Immediacy of interaction was strongly associated with students' positive affective and cognitive states. Although asynchronous interaction activities such as discussion forums may partially support timely interaction, this requires an instructor's prompt facilitation and learners' immediate responses, which are not common in online courses.

If immediacy is key to positive interaction effects, synchronous interactions could be more effective than asynchronous interactions in certain contexts. However, synchronous interaction activities have not frequently been implemented in online courses because of the challenges they present: the instructor needs to ask all students to be online at a certain time and to moderate large-scale online conversations (Yamagata-Lynch, 2014). Such practical difficulties are one reason that few studies have examined learners' synchronous interaction activities in online courses (Giesbers, Rienties, Tempelaar, & Gijselaers, 2014). Accordingly, the roles, characteristics, and effectiveness of synchronous interactions in online courses have not been fully explored. Given these challenges, creative approaches to implementing synchronous interaction are needed in both research and practice.

Conversational virtual agents, or chatbots, are computer programs that communicate with users in natural language; they have therefore been used for user-system interaction in many online spaces (Shawar & Atwell, 2007). By simulating human dialog patterns, they can carry out an interaction task through conversation with a user. One of the first conversational agent systems, ELIZA, was developed in 1966 by Joseph Weizenbaum. Users interacted with ELIZA synchronously. ELIZA simulated a therapist in a clinical treatment setting: it analyzed what users typed and generated its responses based on predefined decision rules.
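The rule-based approach attributed to ELIZA above (analyze the typed input, then respond according to predefined decision rules) can be illustrated with a few lines of keyword matching. The sketch below is a hypothetical illustration only: the rules, the pronoun-reflection table, and the function names are our own assumptions and are far simpler than Weizenbaum's original script. It shows just the match-then-transform pattern.

```python
import re

# Hypothetical decision rules in the spirit of ELIZA (not Weizenbaum's script).
# Each rule pairs a regular expression with a response template.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bbecause\b", re.IGNORECASE), "Is that the real reason?"),
]
DEFAULT_RESPONSE = "Please tell me more."

# Pronoun "reflection" so an echoed fragment reads from the program's side.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my"}


def reflect(fragment: str) -> str:
    """Swap first- and second-person words in a captured fragment."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(utterance: str) -> str:
    """Return the response of the first matching rule, or a default prompt."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    return DEFAULT_RESPONSE


print(respond("I am worried about my project."))
```

Rule order matters in this design: the first matching pattern wins, and anything unmatched falls through to a generic prompt that keeps the user talking.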

There are cases in which conversational agent systems have been used for educational purposes (Fryer, Ainley, Thompson, Gibson, & Sherlock, 2017; Heller, Proctor, Mah, Jewell, & Cheung, 2005; Jia, 2009). Abbasi and Kazi (2014) investigated the use of a conversational agent as an answer retrieval tool for solving programming questions. Seventy-two undergraduate students were randomly assigned either to a Google group, which used the Google search engine to retrieve information, or to a conversational agent group, which asked the agent questions to retrieve information. Comparing pre- and post-test measures of memory retention, they found that the conversational agent group significantly outperformed the Google group on learning outcomes. This type of technology appears to create interaction opportunities for online learners. Further, using conversational agents synchronously might enable more in-depth investigation of the roles and effectiveness of synchronous interaction in online learning environments. Still, the use of conversational agents as a synchronous interaction tool in online courses, for both research and practice, is in its infancy. Because few studies have examined conversational agents as a synchronous interaction medium in educational settings, little is known about what roles this type of interaction might play in online courses.

The purpose of this study is to examine learners' participation in online courses, their synchronous interaction with a conversational agent, the relationships of both with learner performance, and the factors that comprise participation and interaction. Our research questions are:

For this study, we adopted a quantitative single-case research design and used correlation and factor analyses to examine learners' participation in an LMS, their interaction with a conversational agent, and their performance. The data were collected from online courses at a mid-sized university in the southern United States. These courses had typically been delivered through the LMS using a fully asynchronous model. The courses were organized into 15 weekly theme and topic modules. The LMS content for the courses included a syllabus, announcements, reading assignments, supplementary reading materials, weekly discussion topics and questions, and related links.

Fifty-six participants were recruited from four graduate courses in an instructional technology program. The courses lasted 15 weeks, with an introduction module in the first week and a review and final paper submission in the last week. Each of the 13 regular weekly modules included an asynchronous discussion, conducted on an online forum in the LMS, based on that week's topic reading (e.g., a journal article or book chapter). Students were required to complete all 13 weeks of asynchronous discussion activity during the course term. In addition, students in all courses were assigned to interact with a conversational virtual agent designed to encourage the acquisition of content knowledge and logical argumentation skills. Students were asked to discuss instructional topics with the agent in response to reflection prompts such as "Why do we need to use educational multimedia?", "Are mobile Apps good for teaching?", "Peer review would be helpful?", "In your project, was the ISD (Instructional Systems Design) Step useful or effective?", and so on. Students were required to complete seven interaction sessions over the semester.

The conversational virtual agent system used in this study was designed and developed to demonstrate the feasibility of using an agent to better support synchronous interaction in online courses (Song, Oh, & Rice, 2017). The agent was a stand-alone online application that was not embedded in the LMS; students could access it directly through a web browser without logging into the LMS. Learners interacted with the agent through text-based chat. The agent analyzed learners' input and replied to their questions and responses. As shown in Figure 1, the agent asks a question and the learner answers it. The agent initiates the question-answer interaction, for example, "Can you teach me the course, Educational Multimedia?" The agent also responds to the learner's answer; when the answer is short, the agent may ask, "Would you please explain more about it?"

Figure 1. Screenshot of a learner interacting with the conversational agent system.
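The agent-initiated question-answer loop described above can be sketched as a small state machine. This is a hypothetical illustration, not the implementation of Song, Oh, and Rice (2017): the class name, the 10-word threshold for a "short" answer, and the closing line are assumptions; only the two prompts are quoted from the description of the agent.

```python
FOLLOW_UP_PROMPT = "Would you please explain more about it?"


class TutorSession:
    """Hypothetical sketch of an agent-initiated question-answer loop.

    The agent plays a learner who asks to be taught. If an answer is shorter
    than `min_words` words (an assumed threshold, not reported in the study),
    the agent asks for elaboration instead of moving to its next question.
    """

    def __init__(self, questions, min_words=10):
        self.questions = list(questions)
        self.min_words = min_words
        self.index = 0

    def opening(self):
        """The agent initiates the exchange with its first question."""
        return self.questions[0]

    def reply(self, answer):
        """Return the agent's next utterance given the learner's answer."""
        if len(answer.split()) < self.min_words:
            return FOLLOW_UP_PROMPT          # short answer: ask for more detail
        self.index += 1                      # adequate answer: advance
        if self.index < len(self.questions):
            return self.questions[self.index]
        return "Thank you for teaching me today."  # hypothetical closing line


session = TutorSession([
    "Can you teach me the course, Educational Multimedia?",
    "Why do we need to use educational multimedia?",
])
print(session.opening())
print(session.reply("Yes."))
```

A word-count rule is the simplest possible trigger for elaboration; a real agent would more likely analyze the content of the answer as well.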

To examine learner participation, we collected participants' LMS log data, including the frequency of course access, length of course access, discussion board messages, and final grades. We calculated the length of the discussion board messages and rated their quality using procedures we will describe shortly. To examine synchronous learner interactions, we collected participants' conversation logs from the agent system and likewise calculated their length and rated their quality. In total, we collected data on seven variables: System Access, Time Spent, Discussion Length, Discussion Quality, Conversation Length, Conversation Quality, and Final Grade.
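The quantity variables listed above can be derived from raw logs by simple per-learner aggregation. The sketch below assumes a hypothetical log format (one record per course access with its duration in minutes, plus lists of discussion posts and of the learner's own chat turns) and measures length as a whitespace word count; the study does not describe its exact extraction procedure, and the quality variables are omitted because they came from human raters.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class AccessRecord:
    """Hypothetical LMS log row: one course access by one learner."""
    learner: str
    minutes: float


def word_count(text: str) -> int:
    """Length measure assumed here: whitespace-delimited words."""
    return len(text.split())


def participation_variables(accesses, posts, chat_turns):
    """Aggregate raw logs into per-learner quantity variables.

    accesses:   list of AccessRecord
    posts:      list of (learner, post_text) from the discussion board
    chat_turns: list of (learner, utterance) typed by the learner to the agent
    """
    table = defaultdict(lambda: {"System Access": 0, "Time Spent": 0.0,
                                 "Discussion Length": 0, "Conversation Length": 0})
    for rec in accesses:
        table[rec.learner]["System Access"] += 1
        table[rec.learner]["Time Spent"] += rec.minutes / 60  # hours, as in Table 1
    for learner, text in posts:
        table[learner]["Discussion Length"] += word_count(text)
    for learner, text in chat_turns:
        table[learner]["Conversation Length"] += word_count(text)
    return dict(table)


logs = [AccessRecord("s1", 30), AccessRecord("s1", 90), AccessRecord("s2", 60)]
print(participation_variables(logs, [("s1", "Multimedia supports dual coding")], []))
```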

Discussion and conversation quality measurement. We rated participants' discussion board messages and conversations with the agent using a scoring rubric. We used Bradley, Thom, Hayes, and Hay's (2008) adaptation of a coding scheme developed by Gilbert and Dabbagh (2005) to measure the quality of online discussion, and then modified it for our own context. We scored discussion board messages and conversations with the agent as follows:

An instructor from the participating courses and a research assistant independently coded participants' discussion board postings and conversations with the agent. Interrater reliability for the initial ratings, measured with the intraclass correlation coefficient, was .91. The raters resolved disagreements by consensus over five discussion meetings. When the raters could not agree, the third author of this study considered each rater's justification and made the final decision.
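The intraclass correlation above is computed from a two-way ANOVA of a targets-by-raters score table. The study does not state which ICC model it used, so the sketch below assumes the two-way random-effects, absolute-agreement, single-rater form, ICC(2,1) in Shrout and Fleiss's notation, and also offers the consistency form ICC(3,1). The function name and the example scores are hypothetical.

```python
def icc(ratings, form="2,1"):
    """Intraclass correlation for an n-targets x k-raters table (two-way ANOVA).

    form "2,1": two-way random effects, absolute agreement, single rater.
    form "3,1": two-way mixed effects, consistency, single rater.
    """
    n, k = len(ratings), len(ratings[0])
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between targets
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between raters
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    if form == "2,1":
        return (ms_rows - ms_error) / (
            ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n)
    if form == "3,1":
        return (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)
    raise ValueError(f"unsupported ICC form: {form}")


# Hypothetical rubric scores from two raters (instructor, assistant) for six messages.
scores = [[3, 3], [4, 4], [2, 3], [5, 5], [3, 3], [4, 5]]
print(round(icc(scores, "2,1"), 3))
```

ICC(2,1) penalizes systematic differences between raters (one rater scoring consistently higher), whereas ICC(3,1) ignores them; which is appropriate depends on whether absolute agreement on the rubric scale matters.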

We first calculated the means and standard deviations of the seven variables (see Table 1). System Access and Time Spent had relatively large standard deviations, indicating large individual differences in learners' access to the LMS.

We found no statistically significant differences among the participating online courses on any of the seven variables (Final Grade: F(3, 52) = .79, p = .51; System Access: F(3, 52) = 1.38, p = .23; Time Spent: F(3, 52) = .56, p = .64; Discussion Length: F(3, 52) = 1.00, p = .40; Discussion Quality: F(3, 52) = 1.16, p = .34; Conversation Length: F(3, 52) = 1.97, p = .13; Conversation Quality: F(3, 52) = .37, p = .78), possibly because of our small sample size.

Table 1

Means and Standard Deviations of Variables (N = 56)

| Course | n | Final Grade (up to 500), M (SD) | System Access (frequency), M (SD) | Time Spent (hours), M (SD) | Discussion Length (words), M (SD) | Discussion Quality (up to 5.0), M (SD) | Conversation Length (words), M (SD) | Conversation Quality (up to 5.0), M (SD) |
|---|---|---|---|---|---|---|---|---|
| A | 16 | 464.78 (28.69) | 227.63 (53.96) | 89.42 (46.21) | 3,901.31 (904.42) | 2.93 (.53) | 2,308.56 (906.08) | 3.06 (.62) |
| B | 9 | 472.77 (25.90) | 204.11 (63.31) | 70.32 (42.77) | 3,706.67 (639.10) | 3.31 (.35) | 2,955.00 (656.90) | 3.19 (.24) |
| C | 16 | 467.54 (24.97) | 224.94 (58.30) | 83.60 (48.44) | 4,252.50 (964.52) | 3.25 (.67) | 2,326.50 (699.12) | 3.23 (.73) |
| D | 15 | 477.97 (22.13) | 248.53 (36.76) | 94.24 (36.76) | 4,180.40 (877.46) | 3.15 (.60) | 2,237.53 (696.32) | 3.28 (.59) |
| Total | 56 | 470.38 (25.40) | 228.68 (53.47) | 85.98 (45.15) | 4,045.13 (880.03) | 3.14 (.57) | 2,398.55 (777.82) | 3.19 (.60) |
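Both the Total row of Table 1 and the between-course F tests reported above can be recovered from the per-course n, M, and SD alone, because the total sum of squares splits into within-course and between-course parts. The sketch below, with a hypothetical function name, does this for Final Grade and System Access; it should agree within rounding with the reported totals (470.38 and 25.40; 228.68 and 53.47) and with F(3, 52) = .79 and 1.38.

```python
import math


def pooled_summary(groups):
    """One-way ANOVA decomposition from per-group (n, mean, sd) summaries.

    Returns (N, grand mean, overall SD, F, df_between, df_within).
    """
    N = sum(n for n, _, _ in groups)
    k = len(groups)
    grand = sum(n * m for n, m, _ in groups) / N
    ss_within = sum((n - 1) * s ** 2 for n, _, s in groups)
    ss_between = sum(n * (m - grand) ** 2 for n, m, _ in groups)
    overall_sd = math.sqrt((ss_within + ss_between) / (N - 1))
    df_b, df_w = k - 1, N - k
    f_stat = (ss_between / df_b) / (ss_within / df_w)
    return N, grand, overall_sd, f_stat, df_b, df_w


# (n, M, SD) for courses A-D, copied from Table 1.
FINAL_GRADE = [(16, 464.78, 28.69), (9, 472.77, 25.90), (16, 467.54, 24.97), (15, 477.97, 22.13)]
SYSTEM_ACCESS = [(16, 227.63, 53.96), (9, 204.11, 63.31), (16, 224.94, 58.30), (15, 248.53, 36.76)]

for label, groups in [("Final Grade", FINAL_GRADE), ("System Access", SYSTEM_ACCESS)]:
    N, mean, sd, f_stat, df_b, df_w = pooled_summary(groups)
    print(f"{label}: N = {N}, M = {mean:.2f}, SD = {sd:.2f}, F({df_b}, {df_w}) = {f_stat:.2f}")
```

Small discrepancies in the last digit are expected, since the inputs are themselves rounded to two decimals.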

To examine the relationships among learner participation, interaction, and performance, participation data from the LMS and interaction data from the agent system were analyzed using a parametric correlation analysis. As shown in Table 2, Final Grade was significantly correlated with System Access (r(54) = .357, p = .01), Time Spent (r(54) = .297, p = .03), Discussion Length (r(54) = .423, p = .001), Discussion Quality (r(54) = .514, p < .001), and Conversation Quality (r(54) = .462, p < .001), but not with Conversation Length (r(54) = .133, p = .33). System Access was significantly correlated with Time Spent (r(54) = .286, p = .03) and Discussion Length (r(54) = .295, p = .03). In addition, Discussion Quality and Conversation Quality were strongly correlated (r(54) = .776, p < .001). The implications of the sample size for the correlation and factor analyses are discussed in the limitations section.

Table 2

Correlation of Learner Participation in Online Courses and Conversational Activity (N = 56)

| | Final Grade | System Access | Time Spent | Discussion Length | Discussion Quality | Conversation Length | Conversation Quality |
|---|---|---|---|---|---|---|---|
| Final Grade | 1 | | | | | | |
| System Access | .357* (p = .01) | 1 | | | | | |
| Time Spent | .297* (p = .03) | .286* (p = .03) | 1 | | | | |
| Discussion Length | .423** (p = .001) | .295* (p = .03) | .215 (p = .11) | 1 | | | |
| Discussion Quality | .514** (p < .001) | .211 (p = .12) | .120 (p = .38) | .180 (p = .19) | 1 | | |
| Conversation Length | .133 (p = .33) | -.037 (p = .79) | -.085 (p = .53) | -.018 (p = .90) | .232 (p = .09) | 1 | |
| Conversation Quality | .462** (p < .001) | .178 (p = .19) | .181 (p = .18) | .209 (p = .12) | .776** (p < .001) | .214 (p = .11) | 1 |

* Correlation is significant at the 0.05 level (2-tailed).

** Correlation is significant at the 0.01 level (2-tailed).
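The two-tailed p values in Table 2 follow from each r and its 54 degrees of freedom through the standard transformation t = r·√(df / (1 − r²)). The raw scores are not reproduced here, so the sketch below pairs a plain Pearson r function with a test that starts from the reported r values; the function names are hypothetical, and the t-distribution tail is obtained by Simpson's-rule integration rather than a statistics library. For r = .297 and r = .133 with N = 56, the resulting p values should round to the reported .03 and .33.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))


def two_tailed_p(t, df, steps=2000):
    """P(|T| >= |t|), via Simpson's rule on the t density over [0, |t|]."""
    t = abs(t)
    h = t / steps                        # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central = 2 * total * h / 3          # P(-t < T < t)
    return max(0.0, 1.0 - central)


def r_significance(r, n):
    """Test H0: rho = 0 with t = r * sqrt((n - 2) / (1 - r^2)), df = n - 2."""
    df = n - 2
    if abs(r) >= 1:
        return math.inf, 0.0
    t = r * math.sqrt(df / (1 - r * r))
    return t, two_tailed_p(t, df)


for label, r in [("Time Spent", 0.297), ("Conversation Length", 0.133)]:
    t, p = r_significance(r, 56)
    print(f"Final Grade x {label}: r(54) = {r}, t = {t:.2f}, p = {p:.3f}")
```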

To identify the participation/interaction factors underlying participants' behavior, we used a principal components analysis (PCA). For factor analysis, the sample-to-item ratio is important for determining an appropriate sample size. Because the final grade is an outcome of participation, we did not include it in the factor analysis, so our sample-to-item ratio is 9.3 (56 samples divided by 6 items), well within the recommended range of 5-20 samples per item (Pituch & Stevens, 2016). The correlations among the items were examined under research question 1 (see Table 2). The determinant of the correlation matrix is .275, which meets the requirement (i.e., greater than .000001) for the assumption of a factor analytic solution (Beavers et al., 2013). The Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy is .60, which is considered suitable (i.e., greater than .50) for factor analysis (Tabachnick & Fidell, 2013). Bartlett's test of sphericity is significant (p < .0001), indicating that the variables are sufficiently correlated to provide a reasonable basis for factor analysis (Hair, Black, Babin, Anderson, & Tatham, 2010). All the initial communalities are greater than .40, indicating that the sample size is not likely to distort the results (Tabachnick & Fidell, 2013).
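Bartlett's test of sphericity depends only on the sample size n, the number of variables p, and the determinant |R| of their correlation matrix: χ² = −(n − 1 − (2p + 5)/6)·ln|R|, with p(p − 1)/2 degrees of freedom. The study reports the determinant (.275) and p < .0001 but not the statistic itself, so the sketch below recomputes it. With n = 56, p = 6, and |R| = .275 it gives approximately χ²(15) ≈ 67.3; this value is derived here, not reported by the study. The function names are hypothetical, and the chi-square tail uses the series expansion of the regularized incomplete gamma function.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable, via the series for the
    regularized lower incomplete gamma function P(df/2, x/2)."""
    a, z = df / 2, x / 2
    if z <= 0:
        return 1.0
    term = total = 1.0 / a
    denom = a
    for _ in range(10_000):
        denom += 1
        term *= z / denom
        total += term
        if term < total * 1e-16:
            break
    lower = total * math.exp(-z + a * math.log(z) - math.lgamma(a))
    return max(0.0, 1.0 - lower)


def bartlett_sphericity(det_r, n, p):
    """Bartlett's test that a p x p correlation matrix is an identity matrix."""
    chi2 = -(n - 1 - (2 * p + 5) / 6) * math.log(det_r)
    df = p * (p - 1) // 2
    return chi2, df, chi2_sf(chi2, df)


chi2, df, p_value = bartlett_sphericity(det_r=0.275, n=56, p=6)
print(f"chi2({df}) = {chi2:.2f}, p = {p_value:.2e}")
```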

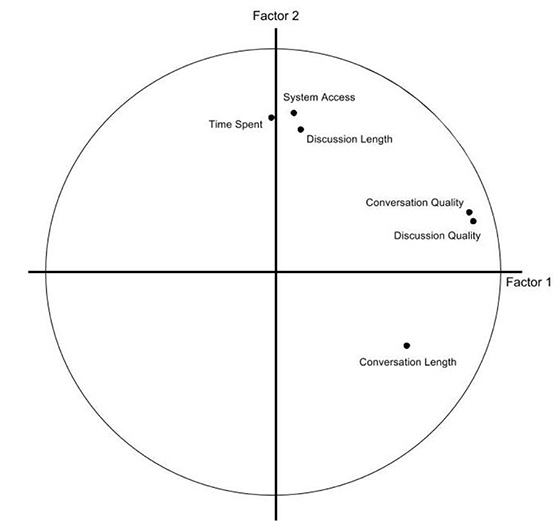

The initial eigenvalues showed that the first factor explained 35.9% of the variance and the second factor 22.6%. The eigenvalues of the third, fourth, fifth, and sixth factors were smaller than 1. No items were eliminated, because no item failed to meet the minimum criteria of a primary factor loading of .4 or above and no cross-loading of .3 or above. Because the first component explained 35.9% of the total variance, less than 50%, we conducted a PCA of all items with Varimax rotation and Kaiser normalization to assess how the six variables clustered. All items had primary loadings over .5. Two factors were retained and rotated, based on the eigenvalue-greater-than-1 criterion and the scree plot. Table 3 displays the items and component loadings for the rotated components. After rotation, the first component accounted for 31.0% of the variance and the second component 27.5%. Together, the two factors explain 58.5% of the variance in the entire set of variables.
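The Varimax step can be made concrete for the two-factor case used here. With Kaiser normalization, each item's loading row is scaled to unit length, rotated, and rescaled; for two factors the angle that maximizes the Varimax criterion has a closed form (Kaiser's pairwise formula), so no iteration is needed. This is a minimal sketch with a hypothetical function name, not necessarily the software routine the study used. The usage example rotates a simple structure by 0.3 radians and checks that Varimax undoes it.

```python
import math


def varimax_two_factors(loadings):
    """Varimax rotation with Kaiser normalization for a p x 2 loading matrix.

    With two factors the Varimax criterion depends on a single rotation angle,
    which has a closed form. Returns (rotated loadings, angle in radians).
    """
    p = len(loadings)
    # Kaiser normalization: scale each row to unit length before rotating.
    norms = [math.hypot(x, y) or 1.0 for x, y in loadings]
    rows = [(x / h, y / h) for (x, y), h in zip(loadings, norms)]

    u = [x * x - y * y for x, y in rows]
    v = [2 * x * y for x, y in rows]
    a, b = sum(u), sum(v)
    c = sum(ui * ui - vi * vi for ui, vi in zip(u, v))
    d = 2 * sum(ui * vi for ui, vi in zip(u, v))
    # Angle that maximizes the Varimax criterion for this pair of columns.
    phi = math.atan2(d - 2 * a * b / p, c - (a * a - b * b) / p) / 4

    cos_phi, sin_phi = math.cos(phi), math.sin(phi)
    rotated = [
        (h * (x * cos_phi + y * sin_phi), h * (-x * sin_phi + y * cos_phi))
        for (x, y), h in zip(rows, norms)  # rescale to undo the normalization
    ]
    return rotated, phi


# Toy check: a simple structure rotated by 0.3 rad; Varimax should undo it.
theta = 0.3
unrotated = [(math.cos(theta), math.sin(theta))] * 2 + [(-math.sin(theta), math.cos(theta))] * 2
rotated, phi = varimax_two_factors(unrotated)
print(round(phi, 6), [tuple(round(val, 6) for val in row) for row in rotated])
```

Applied to the rotated loadings in Table 3, the same function should call for an extra rotation of only a fraction of a degree, which is consistent with those loadings already being a Varimax solution.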

Table 3

Component Loadings for the Rotated Components (N = 56)

| Item | Component loading: Factor 1 | Component loading: Factor 2 | Communality |
|---|---|---|---|
| System Access | .097 | .718 | .523 |
| Time Spent | -.007 | .695 | .483 |
| Discussion Length | .119 | .647 | .433 |
| Discussion Quality | .872 | .235 | .816 |
| Conversation Length | .586 | -.322 | .447 |
| Conversation Quality | .858 | .270 | .809 |
| Initial eigenvalues | 2.151 | 1.359 | |
| % of variance (after rotation) | 31.012 | 27.491 | |
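Table 3's communality column and its percentage-of-variance row follow directly from the loadings: an item's communality is the sum of its squared loadings, and a factor's share of variance is the sum of its squared loadings divided by the number of standardized items (6). The sketch below, with hypothetical function names, reproduces both within rounding of the three-decimal loadings. The rotated sums of squared loadings (about 1.86 and 1.65) differ from the eigenvalue row (2.151 and 1.359) even though both pairs total about 3.51; this is consistent with the eigenvalue row reporting the initial, unrotated eigenvalues (2.151/6 ≈ 35.9% and 1.359/6 ≈ 22.6%, the initial percentages given in the text).

```python
# Rotated loadings (Factor 1, Factor 2) copied from Table 3.
LOADINGS = {
    "System Access": (0.097, 0.718),
    "Time Spent": (-0.007, 0.695),
    "Discussion Length": (0.119, 0.647),
    "Discussion Quality": (0.872, 0.235),
    "Conversation Length": (0.586, -0.322),
    "Conversation Quality": (0.858, 0.270),
}


def communalities(loadings):
    """Communality of each item: sum of its squared loadings across factors."""
    return {item: sum(l * l for l in row) for item, row in loadings.items()}


def percent_variance(loadings):
    """Share of total variance (one unit per standardized item) per factor."""
    rows = list(loadings.values())
    n_factors = len(rows[0])
    ss = [sum(row[j] ** 2 for row in rows) for j in range(n_factors)]
    return [100 * s / len(rows) for s in ss]


for item, h2 in communalities(LOADINGS).items():
    print(f"{item:22s} {h2:.3f}")
print([f"{pct:.1f}%" for pct in percent_variance(LOADINGS)])
```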

Figure 2 shows how closely the items are related to each other and to the two components. Factor 1 was labeled Interaction Quality because of the high loadings of Discussion Quality and Conversation Quality. Factor 2 was labeled LMS-oriented Interaction because of the high loadings of System Access, Time Spent, and Discussion Length. Overall, our analyses indicated that two distinct factors underlie learners' participation behaviors in online courses that include both synchronous and asynchronous interaction activities.

Figure 2. The component plot in rotated space.

In this study, the frequency and length of course access, the quantity and quality of asynchronous discussion, and the quality of synchronous conversation with a virtual agent were significantly associated with learner achievement. Overall, these results support previous research findings that established a relationship between learners' participation/interaction in online courses and their learning performance (Croxton, 2014; Stoessel et al., 2015; Wu et al., 2011). In our study, however, the quantity of conversation with the virtual agent was not significantly related to achievement. One possible explanation, drawing on Garrison and Cleveland-Innes's (2005) findings, is that learners' performance depended more on the quality of interaction than on its quantity.

It was not surprising that the frequency of course access was significantly correlated with the length of course access and the quantity of asynchronous discussion: participants had to access the LMS to participate in the discussion forums, but not to converse with the virtual agent. In addition, the quality of discussion board posts and the quality of conversations with the agent were strongly related. It seems that learners' depth of learning is fairly independent of interaction synchronicity.

Learner participation is a significant factor affecting success in online courses (Bettinger et al., 2016; Goggins & Xing, 2016; Hrastinski, 2008), and learner interaction is seen as central to online learning participation. Interaction is a multifaceted concept, and different types of interaction have different effects on learners' participation, satisfaction, and performance (Jung, Choi, Lim, & Leem, 2002). Therefore, when aiming to promote high-quality interaction for active participation and enhanced learning performance, the different types and characteristics of interaction should be considered together. Our results indicate two factors that comprise learner participation/interaction in online courses. We called the first factor Interaction Quality; it includes the quality of discussion forum posts and of conversations with the agent. We called the second factor LMS-oriented Interaction; it includes the frequency and length of course access and the quantity of discussion forum posts. In our factor analysis, there was no clear separation between synchronous and asynchronous interactions. Rather, interaction quality versus LMS-oriented interaction emerged as the primary distinction among learner participation behaviors in online courses. Note that the quality of discussion board posts belongs to the Interaction Quality factor, whereas their quantity belongs to LMS-oriented Interaction. This suggests that the same mode of interaction, in this case asynchronous communication, can play different roles in online learning.

Our findings also suggest that synchronous, course topic-related communication between learners and a virtual agent is practically applicable in online courses. Our participants successfully conversed with the virtual agent about course topics and materials. Instructional content-related communication with a conversational virtual agent might have a positive effect on learner performance and satisfaction, as previous studies have shown that computer agent-based experiences offer learners meaningful learning experiences (e.g., Delialioglu & Yildirim, 2007; Garrison & Cleveland-Innes, 2005). We speculate that this is because such interaction motivates learners to express their opinions and encourages them to complete tasks.

There are notable limitations in this study. First, our sample size was admittedly small. We considered our sample size appropriate given the context and the sample-to-item ratio; however, some would argue that 50-100 is not a sufficient sample size (Comrey & Lee, 1992). Second, we were unable to perform course-level analysis because of the small sample size. Thus, future research should conduct more in-depth factor analyses and course-level analyses with a larger sample. Third, learners' final grades might not represent learner performance precisely, particularly in graduate courses. Graduate courses encompass diverse aspects, including legitimate argumentation, logical discussion, reasoning, academic writing, and critical thinking, that could not possibly be reflected in a single score. We recommend that future studies include different types of learner assessment methods and data. Fourth, text-based chat might not fully serve as a synchronous interaction method. Just as people use spoken language in human interaction, learners want to use spoken language to communicate even in online learning environments (Shawar & Atwell, 2007). Therefore, text-to-speech and voice recognition technologies might provide additional benefits, and verbal interaction with the agent should be investigated. Last, although our motivation for conducting this study was to find ways to reduce the higher attrition rates of online courses, we did not directly address retention. Outcomes such as course completion and attrition rates should be examined in future studies.

The results of this study suggest that conversational virtual agents have the potential to increase meaningful interactions for learners in online courses. With the rapid expansion of computing power and artificial intelligence techniques, we can expect these technologies to be applied extensively in our daily lives, including in education. The distance education field would benefit from determining how best to incorporate intelligent agent systems into current online courses. Clearly, pedagogy using conversational agents in online learning environments deserves further investigation.

In this study, online learners experienced synchronous interactions with the conversational agent. Nonetheless, it is uncertain whether the conversational agent provides any type of social interaction or social presence for the online learner. Social presence is an important concept in the online learning process that encompasses online communication and interaction (Tu, 2002). In online learning environments, learners' academic performance appears to correlate with their perceived level of social presence (Richardson & Swan, 2003). Because learners can share their opinions and exchange critical ideas through social interaction, social presence might be associated with the level of learner interaction, which would establish a meaningful learning experience (Garrison & Cleveland-Innes, 2005). In addition, learner satisfaction could be tied more closely to learners' perceptions of their social and interpersonal interaction than to knowledge demonstration (Dennen, Darabi, & Smith, 2007). What we have not examined in this study is the virtual agent system's ability to provide social and interpersonal interaction opportunities for learners. The social and interpersonal aspects of conversational agents need further examination.

Learners need immediate feedback from the instructor and timely support from peers and subject matter experts, which could best be provided through synchronous interaction. Most learners expect the instructor to be available at all times and to respond to their questions and requests (Drange, Sutherland, & Irons, 2015). Still, asynchronous discussion has pedagogical benefits, such as supporting learners' writing processes and providing time for reflection (Andresen, 2009). For these reasons, a combination of synchronous and asynchronous communication has been suggested to promote online learners' participation and engagement (Giesbers et al., 2014). As researchers have suggested (Beldarrain, 2006; Ohlund, Yu, Jannasch-Pennell, & DiGangi, 2000), synchronous and asynchronous interaction might be connected in a mutually complementary relationship. The reflective and collaborative properties of asynchronous interaction might be well supplemented by the immediate and timely attributes of synchronous interaction. In addition, active interaction in synchronous tasks leads to positive interaction in asynchronous communication (Giesbers et al., 2014). This mutual relationship requires further investigation. Because this research area requires the analysis of large amounts of data, learning analytics and data mining techniques should be employed (Song, 2018).

We conducted this study to better understand learner participation and interaction in online courses. Learner participation cannot be represented simply by the quantity of interaction or by access to the learning space. Our results indicate how learner behavior indicators in online courses are associated with each other, but further research is needed on different types of synchronous and asynchronous interaction in different types of online learning environments, particularly those using conversational agent systems.

This research was supported by Enhancement Research Grant (ERG) 2016 at Sam Houston State University (#290145).

Abbasi, S., & Kazi, H. (2014). Measuring effectiveness of learning chatbot systems on student's learning outcome and memory retention. Asian Journal of Applied Science and Engineering, 3(2), 251-260. Retrieved from http://journals.abc.us.org/index.php/ajase/article/view/251-260

Andresen, M. A. (2009). Asynchronous discussion forums: Success factors, outcomes, assessments, and limitations. Journal of Educational Technology & Society, 12(1), 249-257. Retrieved from https://www.jstor.org/stable/pdf/jeductechsoci.12.1.249

Baker, C. (2010). The impact of instructor immediacy and presence for online student affective learning, cognition, and motivation. Journal of Educators Online, 7(1), 1-30. Retrieved from https://eric.ed.gov/?id=EJ904072

Beavers, A. S., Lounsbury, J. W., Richards, J. K., Huck, S. W., Skolits, G. J., & Esquivel, S. L. (2013). Practical considerations for using exploratory factor analysis in educational research. Practical Assessment, Research & Evaluation, 18(6), 1-13. Retrieved from http://www.pareonline.net/getvn.asp?v=18&n=6

Beldarrain, Y. (2006). Distance education trends: Integrating new technologies to foster student interaction and collaboration. Distance Education, 27(2), 139-153. doi: 10.1080/01587910600789498

Bettinger, E., Liu, J., & Loeb, S. (2016). Connections matter: How interactive peers affect students in online college courses. Journal of Policy Analysis and Management, 35(4), 932-954. doi: 10.1002/pam.21932

Boling, E. C., Hough, M., Krinsky, H., Saleem, H., & Stevens, M. (2012). Cutting the distance in distance education: Perspectives on what promotes positive, online learning experiences. The Internet and Higher Education, 15(2), 118-126. doi: 10.1016/j.iheduc.2011.11.006

Bradley, M. E., Thom, L. R., Hayes, J., & Hay, C. (2008). Ask and you will receive: How question type influences quantity and quality of online discussions. British Journal of Educational Technology, 39(5), 888-900. doi: 10.1111/j.1467-8535.2007.00804.x

Comrey, A. L., & Lee, H. B. (1992). A first course in factor analysis (2nd ed.). Hillsdale, NJ: Lawrence Erlbaum. Retrieved from http://psycnet.apa.org/record/1992-97707-000

Croxton, R. A. (2014). The role of interactivity in student satisfaction and persistence in online learning. Merlot Journal of Online Learning and Teaching, 10(2), 314-325. Retrieved from http://jolt.merlot.org/vol10no2/croxton_0614.pdf

Delialioglu, O., & Yildirim, Z. (2007). Students' perceptions on effective dimensions of interactive learning in a blended learning environment. Journal of Educational Technology & Society, 10(2), 133-146. Retrieved from https://www.jstor.org/stable/pdf/jeductechsoci.10.2.133

Dennen, V. P., Aubteen Darabi, A., & Smith, L. J. (2007). Instructor-learner interaction in online courses: The relative perceived importance of particular instructor actions on performance and satisfaction. Distance Education, 28(1), 65-79. doi: 10.1080/01587910701305319

Drange, T., Sutherland, I., & Irons, A. (2015, March). Challenges of interaction in online teaching: A case study. Proceedings of the International Conference on E-Technologies and Business on the Web (pp. 35-42). Paris, France: The Society of Digital Information and Wireless Communications. Retrieved from http://ebw2015.sdiwc.us/

Fryer, L. K., Ainley, M., Thompson, A., Gibson, A., & Sherlock, Z. (2017). Stimulating and sustaining interest in a language course: An experimental comparison of Chatbot and Human task partners. Computers in Human Behavior, 75, 461-468. doi: 10.1016/j.chb.2017.05.045

Garrison, D. R., & Cleveland-Innes, M. (2005). Facilitating cognitive presence in online learning: Interaction is not enough. The American Journal of Distance Education, 19(3), 133-148. doi: 10.1207/s15389286ajde1903_2

Giesbers, B., Rienties, B., Tempelaar, D., & Gijselaers, W. (2014). A dynamic analysis of the interplay between asynchronous and synchronous communication in online learning: The impact of motivation. Journal of Computer Assisted Learning, 30(1), 30-50. doi: 10.1111/jcal.12020

Gilbert, P. K., & Dabbagh, N. (2005). How to structure online discussions for meaningful discourse: A case study. British Journal of Educational Technology, 36(1), 5-18. doi: 10.1111/j.1467-8535.2005.00434.x

Goggins, S., & Xing, W. (2016). Building models explaining student participation behavior in asynchronous online discussion. Computers & Education, 94, 241-251. doi: 10.1016/j.compedu.2015.11.002

Hair, J. F., Black, W. C., Babin, B. J., Anderson, R. E., & Tatham, R. L. (2010). Multivariate data analysis (7th ed.). Upper Saddle River, NJ: Prentice Hall. Retrieved from https://www.pearson.com/us/higher-education/program/Hair-Multivariate-Data-Analysis-7th-Edition/PGM263675.html

Hawkins, A., Graham, C. R., Sudweeks, R. R., & Barbour, M. K. (2013). Academic performance, course completion rates, and student perception of the quality and frequency of interaction in a virtual high school. Distance Education, 34(1), 64-83. doi: 10.1080/01587919.2013.770430

Heller, B., Proctor, M., Mah, D., Jewell, L., & Cheung, B. (2005, June). Freudbot: An investigation of chatbot technology in distance education. Proceedings of the 2005 EdMedia: World Conference on Educational Media and Technology (pp. 3913-3918). Montreal, Canada: Association for the Advancement of Computing in Education. Retrieved from https://psych.athabascau.ca/html/chatterbot/ChatAgent-content/EdMediaFreudbotFinal.pdf

Hrastinski, S. (2008). What is online learner participation? A literature review. Computers & Education, 51(4), 1755-1765. doi: 10.1016/j.compedu.2008.05.005

Jia, J. (2009). CSIEC: A computer assisted English learning chatbot based on textual knowledge and reasoning. Knowledge-Based Systems, 22(4), 249-255. doi: 10.1016/j.knosys.2008.09.001

Johnson, G. M. (2006). Synchronous and asynchronous text-based CMC in educational contexts: A review of recent research. TechTrends, 50(4), 46-53. doi: 10.1007/s11528-006-0046-9

Joksimović, S., Gašević, D., Kovanović, V., Riecke, B. E., & Hatala, M. (2015). Social presence in online discussions as a process predictor of academic performance. Journal of Computer Assisted Learning, 31(6), 638-654. doi: 10.1111/jcal.12107

Jung, I., Choi, S., Lim, C., & Leem, J. (2002). Effects of different types of interaction on learning achievement, satisfaction and participation in web-based instruction. Innovations in Education and Teaching International, 39(2), 153-162. doi: 10.1080/14703290252934603

Kang, M., & Im, T. (2013). Factors of learner-instructor interaction which predict perceived learning outcomes in online learning environment. Journal of Computer Assisted Learning, 29(3), 292-301. doi: 10.1111/jcal.12005

Levy, Y. (2007). Comparing dropouts and persistence in e-learning courses. Computers & Education, 48(2), 185-204. doi: 10.1016/j.compedu.2004.12.004

Mayadas, A. F., Bourne, J., & Bacsich, P. (2009). Online education today. Science, 323(5910), 85-89. doi: 10.1126/science.1168874

Michinov, N., Brunot, S., Le Bohec, O., Juhel, J., & Delaval, M. (2011). Procrastination, participation, and performance in online learning environments. Computers & Education, 56(1), 243-252. doi: 10.1016/j.compedu.2010.07.025

Ni, A. Y. (2013). Comparing the effectiveness of classroom and online learning: Teaching research methods. Journal of Public Affairs Education, 19(2), 199-215. Retrieved from https://www.jstor.org/stable/23608947

Ohlund, B., Yu, C. H., Jannasch-Pennell, A., & DiGangi, S. A. (2000). Impact of asynchronous and synchronous Internet-based communication on collaboration and performance among K-12 teachers. Journal of Educational Computing Research, 23, 405-420. doi: 10.2190/U40F-M2LK-VKVW-883L

Picciano, A. G. (2002). Beyond student perceptions: Issues of interaction, presence, and performance in an online course. Journal of Asynchronous Learning Networks, 6(1), 21-40. Retrieved from https://pdfs.semanticscholar.org/bfdd/f2c4078b58aefd05b8ba7000aca1338f16d8.pdf

Pituch, K. A., & Stevens, J. (2016). Applied multivariate statistics for the social sciences (6th ed.). New York, NY: Routledge. Retrieved from https://www.routledge.com/Applied-Multivariate-Statistics-for-the-Social-Sciences-Analyses-with/Pituch-Stevens/p/book/9780415836661

Richardson, J. C., & Swan, K. (2003). Examining social presence in online courses in relation to students' perceived learning and satisfaction. Journal of Asynchronous Learning Networks, 7(1), 68-88. Retrieved from http://hdl.handle.net/2142/18713

Sabry, K., & Baldwin, L. (2003). Web-based learning interaction and learning styles. British Journal of Educational Technology, 34(4), 443-454. doi: 10.1111/1467-8535.00341

Seaman, J. E., Allen, I. E., & Seaman, J. (2018). Grade increase: Tracking distance education in the United States. Retrieved from Babson College, Babson Survey Research Group website: https://eric.ed.gov/?id=ED580852

Shawar, B. A., & Atwell, E. (2007). Chatbots: Are they really useful? LDV Forum, 22(1), 29-49. Retrieved from http://www.jlcl.org/2007_Heft1/Bayan_Abu-Shawar_and_Eric_Atwell.pdf

Song, D. (2018). Learning Analytics as an educational research approach. International Journal of Multiple Research Approaches, 10(1), 102-111. doi: 10.29034/ijmra.v10n1a6

Song, D., & Lee, J. (2014). Has Web 2.0 revitalized informal learning?: The relationship between the levels of Web 2.0 and informal learning websites. Journal of Computer Assisted Learning, 30(6), 511-533. doi: 10.1111/jcal.12056

Song, D., Oh, E., & Rice, M. (2017, July). Interacting with a conversational agent system for educational purposes in online courses. Proceedings of the 10th International Conference on Human System Interaction (pp. 78-82). Ulsan, South Korea: IEEE. doi: 10.1109/HSI.2017.8005002

Stoessel, K., Ihme, T. A., Barbarino, M. L., Fisseler, B., & Stürmer, S. (2015). Sociodemographic diversity and distance education: Who drops out from academic programs and why? Research in Higher Education, 56(3), 228-246. doi: 10.1007/s11162-014-9343-x

Swan, K. (2001). Virtual interaction: Design factors affecting student satisfaction and perceived learning in asynchronous online courses. Distance Education, 22(2), 306-331. doi: 10.1080/0158791010220208

Tabachnick, B. G., & Fidell, L. S. (2013). Using multivariate statistics (6th ed.). Boston, MA: Pearson. Retrieved from https://www.pearson.com/us/higher-education/program/Tabachnick-Using-Multivariate-Statistics-6th-Edition/PGM332849.html

Tu, C. H. (2002). The measurement of social presence in an online learning environment. International Journal on E-learning, 1(2), 34-45. Retrieved from https://www.learntechlib.org/p/10820/

van den Boom, G., Paas, F., & van Merriënboer, J. J. (2007). Effects of elicited reflections combined with tutor or peer feedback on self-regulated learning and learning outcomes. Learning and Instruction, 17(5), 532-548. doi: 10.1016/j.learninstruc.2007.09.003

Wenger, E. (1998). Communities of practice: Learning, meaning, and identity. Cambridge: Cambridge University Press. Retrieved from http://www.cambridge.org/9780521663632

Wu, W. C. V., Yen, L. L., & Marek, M. (2011). Using online EFL interaction to increase confidence, motivation, and ability. Journal of Educational Technology & Society, 14(3), 118-129. Retrieved from https://www.jstor.org/stable/jeductechsoci.14.3.118

Yamagata-Lynch, L. C. (2014). Blending online asynchronous and synchronous learning. The International Review of Research in Open and Distributed Learning, 15(2), 189-212. Retrieved from http://www.irrodl.org/index.php/irrodl/article/view/1778/2837

Participation in Online Courses and Interaction With a Virtual Agent by Donggil Song, Marilyn Rice, and Eun Young Oh is licensed under a Creative Commons Attribution 4.0 International License.