Volume 19, Number 5

Bart Rienties, Christothea Herodotou, Tom Olney, Mat Schencks, and Avi Boroowa

Open University UK, Learning and Teaching Innovation

Teachers are widely acknowledged to play an important role in effectively supporting and stimulating learners in online learning. With the increasing availability of learning analytics data, online teachers might be able to use learning analytics dashboards to support learners with different learning needs. However, deployment of learning analytics visualisations by teachers also requires buy-in from teachers. Using the principles of the technology acceptance model, in this embedded case-study, we explored teachers' readiness for learning analytics visualisations amongst 95 experienced teaching staff at one of the largest distance learning universities by using an innovative training method called the Analytics4Action Workshop. The findings indicated that participants appreciated the interactive and hands-on approach, but at the same time were skeptical about the perceived ease of use of the learning analytics tools they were offered. Most teachers indicated a need for additional training and follow-up support for working with learning analytics tools. Our results highlight a need for institutions to provide effective professional development opportunities for learning analytics.

Keywords: learning analytics, information visualisation, learning dashboards, distance education

Over 20 years of research has consistently found that teachers play an essential role in online, open and distributed learning (Lawless & Pellegrino, 2007; Mishra & Koehler, 2006; Shattuck & Anderson, 2013; van Leeuwen, Janssen, Erkens, & Brekelmans, 2015). Beyond managing the learning process, providing pedagogical support, and evaluating learning progression and outcomes, several authors (Muñoz Carril, González Sanmamed, & Hernández Sellés, 2013; Rienties, Brouwer, & Lygo-Baker, 2013; Shattuck, Dubins, & Zilberman, 2011) have highlighted that online teachers also have a social, personal, and counselling role in online learning. With recent advancements in learning analytics, teachers will increasingly receive an unprecedented amount of information, insight, and knowledge about their learners and their diverging needs. Learning analytics dashboards in particular may provide teachers with opportunities to support learner progression, and perhaps personalised, rich learning on a medium to large scale (Fynn, 2016; Rienties, Cross, & Zdrahal, 2016; Tempelaar, Rienties, & Giesbers, 2015).

With the increasing availability of learner data (i.e., "static data" about the learner, such as demographics or prior educational success) and learning data (i.e., "dynamic data" about the behaviour of a learner, such as engagement in a virtual learning environment, library swipes or number of discussion forum messages) in most institutions (Fynn, 2016; Heath & Fulcher, 2017; Rienties, Giesbers, Lygo-Baker, Ma, & Rees, 2016), powerful analytics engines (Hlosta, Herrmannova, Zdrahal, & Wolff, 2015) that offer visualisations of student learning journeys (Charleer, Klerkx, Duval, De Laet, & Verbert, 2016; Daley, Hillaire, & Sutherland, 2016; Jivet, Scheffel, Specht, & Drachsler, 2018) may enable teachers to provide effective support to diverse groups of learners. Indeed, two recent systematic reviews of learning analytics dashboards (Jivet et al., 2018; Schwendimann et al., 2017), which reviewed 26 and 55 studies respectively, indicated that teachers and students will be able to obtain (almost) real-time information about how, where, and when to study. Several authors have also indicated that learning analytics dashboards may empower teachers to provide just-in-time support (Daley et al., 2016; Herodotou et al., 2017; Mor, Ferguson, & Wasson, 2015; Verbert, Duval, Klerkx, Govaerts, & Santos, 2013) and help them to fine-tune the learning design, especially if large numbers of students are struggling with the same task (Rienties, Boroowa et al., 2016; Rienties & Toetenel, 2016).

While many studies (e.g., Ferguson et al., 2016; Heath & Fulcher, 2017; Papamitsiou & Economides, 2016; Schwendimann et al., 2017) have indicated the potential of learning analytics, the success of learning analytics adoption ultimately relies on the endorsement of the teacher. Teachers are one of the key stakeholders who will access and interpret learning analytics data, draw conclusions about students' performance, take actions to support students, and improve the curricula. Several studies (e.g., Muñoz Carril et al., 2013; Rienties & Toetenel, 2016; Shattuck & Anderson, 2013; Shattuck et al., 2011) have indicated that institutions may need to empower teachers further by introducing appropriate professional development activities to develop teachers' skills in effectively using technology and learning analytics dashboards.

Although several studies have recently indicated a need for a better understanding of how teachers make sense of learning analytics dashboards (Charleer et al., 2016; Ferguson et al., 2016; Schwendimann et al., 2017; van Leeuwen et al., 2015), to the best of our knowledge, no large-scale study is available that has explored and tested how online teachers may make sense of such learning analytics dashboards and interrelated data. In particular, it is important to unpack why some teachers might be more willing and able to adopt these new learning analytics dashboards into practice than others who struggle to make sense of the technology. One common approach to understanding the uptake of new technologies is the Technology Acceptance Model (TAM) by Davis and colleagues (1989), which distinguishes between perceived ease of use and perceived usefulness of technology as key drivers for adoption by teachers. In this study, we therefore aim to unpack how teachers who attended a two-hour Analytics4Action Workshop (A4AW) tried to make sense of learning analytics dashboards in an embedded case-study and whether (or not) teachers' technology acceptance influenced how they engaged in A4AW and their overall satisfaction.

Several recent studies in this journal have highlighted that the role of teachers in providing effective support in online learning is essential (e.g., Shattuck & Anderson, 2013; Stenbom, Jansson, & Hulkko, 2016). For example, in a review of 14 studies of online teaching models, Muñoz Carril et al. (2013) identified 26 different but overlapping roles that teachers perform online; from advisor, to content expert, to trainer. With the increased availability of learning analytics data (Daley et al., 2016; Herodotou et al., 2017; Jivet et al., 2018; Schwendimann et al., 2017; Verbert et al., 2013) and the provision of learning analytics dashboards to provide visual overviews of data, there are also growing expectations on teachers to keep track of their students' learning.

In order to implement learning analytics in education, teachers need to be aware of the complex interplay between technology, pedagogy, and discipline-specific knowledge (Herodotou et al., 2017; Mishra & Koehler, 2006; Rienties & Toetenel, 2016; Verbert et al., 2013). However, research has shown that providing learning analytics dashboards to teachers that lead to actionable insight is not always straightforward (Schwendimann et al., 2017). For example, a recent study by Herodotou et al. (2017) comparing how 240 teachers used learning analytics visualisations at the Open University (OU), indicated that most teachers found it relatively easy to engage with the visualisations. However, many teachers struggled to put learning analytics recommendations into concrete actions for students in need (Herodotou et al., 2017). Follow-up qualitative interviews indicated that some teachers preferred to learn a new learning analytics system using an auto-didactic approach, that is, experimenting and testing the various functionalities of learning analytics dashboards by trial-and-error (Herodotou et al., 2017).

One crucial, potentially distinguishing factor as to whether (or not) teachers start and continue to (actively) use technology and learning analytics dashboards is their acceptance of technology (Rienties, Giesbers et al., 2016; Šumak, Heričko, & Pušnik, 2011; Teo, 2010). Technology acceptance research (Davis, 1989; Davis, Bagozzi, & Warshaw, 1989) originates from the information systems (IS) domain, which has developed models that have successfully been applied to educational settings (Pynoo et al., 2011; Sanchez-Franco, 2010; Šumak et al., 2011). TAM is founded on the well-established Theory of Planned Behaviour (Ajzen, 1991), which states that human behaviour is directly preceded by the intention to perform this behaviour. In turn, three factors influence intentions, namely: personal beliefs about one's own behaviour, one's norms, and the (perceived) amount of behavioural control one has.

Building on this theory, TAM states that the intention to use learning analytics dashboards by teachers is influenced by two main factors: the perceived usefulness (i.e., PU: the extent to which a teacher believes the use of learning analytics dashboards and visualisations will, for example, enhance the quality of his/her teaching or increase academic retention) and the perceived ease of use (i.e., PEU: the perceived effort it would take to use learning analytics). The influence of PU and PEU has been consistently shown in educational research (Pynoo et al., 2011; Sanchez-Franco, 2010). For example, Teo (2010) found that PU and PEU were key determinants for 239 pre-service teachers' attitudes towards computer use. In an experimental study of 36 teachers using a completely new Virtual Learning Environment (VLE), with and without video support materials, Rienties, Giesbers et al. (2016) found that PEU significantly predicted whether teachers successfully completed the various VLE tasks, while PU was not significantly predictive of behaviour and training needs.

In addition, a wide range of literature has found that individual and discipline factors influence the uptake of technology and innovative practice in education. For example, Teo and Zhou (2016) indicate that age, gender, teaching experience, and technology experience might influence teachers' technology acceptance. Similarly, in a study comparing 151 learning designs at the OU, Rienties and Toetenel (2016) found significant differences in the way teachers designed courses and implemented technology across various disciplines.

This study is nested within the context of the OU, which provides open-entry education for 150,000+ "non-traditional" students. In 2014, as part of a large suite of initiatives to provide support to its diverse learners, the OU introduced a significant innovation project called The Analytics Project. The Analytics Project, which had a budget of £2 million, was tasked with attempting to better understand how learning analytics approaches could be developed, tested, and applied on an institutional scale. The Analytics Project established an ethics framework (Slade & Boroowa, 2014), introduced predictive modelling tools (Herodotou et al., 2017; Hlosta et al., 2015; Rienties, Cross et al., 2016), and developed a hands-on support structure called the Analytics4Action (A4A) Framework. The purpose of the A4A was to help teachers make informed design alterations and interventions based upon learning analytics data (Rienties, Boroowa et al., 2016). One element within this A4A Framework is specifically focussed on professional development of OU staff; the context in which this study was conducted.

In line with Muñoz Carril et al. (2013), the OU academic staff and non-academic staff (e.g., instructional designers, curriculum managers) perform a range of interconnected teaching roles; jointly design, implement, and evaluate online modules as part of module teams (Herodotou et al., 2017; Rienties, Boroowa et al., 2016; Rienties, Cross et al., 2016; Rienties & Toetenel, 2016). As a result, the 26 online teaching roles identified by Muñoz Carril et al. (2013) are shared by all OU teaching staff and therefore our professional development focussed on a wide range of academic and non-academic staff.

Working together with the OU A4A team, we trained 95 experienced teaching staff using an innovative training method called Analytics4Action Workshop (A4AW). Within this A4AW, a range of learning analytics tools was provided to teachers in order to learn where the key affordances and limitations of the data visualisation tools were (Rienties, Boroowa et al., 2016). We worked together with teachers to understand how to improve our learning analytics dashboards to enhance the power of learning analytics in daily practice. Therefore, this study will address the following two research questions:

A4AW was developed and implemented by five training experts within the OU with years of practical and evidence-based training experience to accommodate different learning approaches for teachers. The innovative and interactive workshop was designed to test the effectiveness of learning analytics dashboards. Rather than providing an instructor-heavy "click-here-and-now-there" demonstration, we designed an interactive training programme with opportunities for flexibility and adaptivity where participants could "authentically" work on their own contexts. The training was broken down into two phases, whereby during each phase participants had ample time to work and experiment with the various learning analytics dashboards and tools while at the same time bringing lessons learned together at the end of each phase. Within the structure of A4AW, the types of learning activities, patterns of engagement, and the various learning dashboards used are described in Table 1.

Table 1

Design of A4AW Professional Development

| Phase | Duration in minutes | Pedagogy | Data source | Software type | Update frequency | Data type |
|---|---|---|---|---|---|---|
| 0 | 10 | Instructors | General introduction of approach and explanation of case-study | - | - | - |
| 1 | 30 | Pair | Module Profile Tool | SAS | Daily | Demographic/Previous and concurrent study data. |
| | | | Module Activity Chart | Tableau | Fortnightly | VLE usage/Retention/Teacher marked assessments (TMAs). |
| | | | SeAM Data Workbook | Tableau | Bi-annually | End of module student satisfaction survey data. |
| | 20 | Whole class | Discussion and Reflection | - | - | - |
| 2 | 40 | Pair | VLE Module Workbook | Tableau | Fortnightly | VLE/tools/resources usage |
| | | | Learning Design Tools | Web interface | Ad hoc | Workload mapping/activity type spread. |
| | 10 | Whole class | Discussion and Reflection | - | - | - |
| | 10 | Instructors | Lessons Learned | - | - | - |
| | - | Individually | Evaluation | - | - | - |

Note. The duration of each of these activities was dependent on the "flow" of the respective group in order to allow for participants to maximise their professional development opportunities.

In the first 10 minutes, the instructors introduced the purpose of A4AW as well as the authentic case-study, in an open, undirected manner. Within the module, participants were asked to take on the role of a team chair (i.e., teacher) who had unexpectedly taken on responsibility for a large scale introductory module on computer science. Participants were paired with another participant and sat together behind one PC with a large screen. In this way, if one participant did not know how to use a particular learning analytics tool or where to click, it was expected that the paired participant might provide some advice; a less intrusive approach than continuously having an instructor "breathing down their neck". In case participants got stuck, two instructors were available in the room to provide support and help.

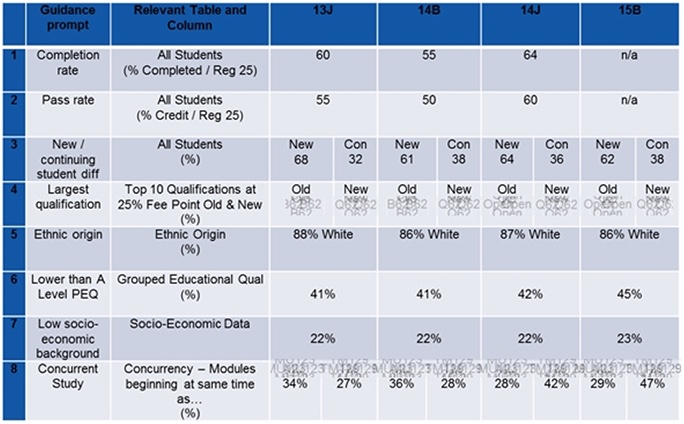

Subsequently, in Phase 1, or "monitoring data," the participants were expected to explore the data from the various learning analytics dashboards in a self-directed way for around 30 minutes, then record their findings on paper or in a digital repository. Participants had access to existing data sources which allowed them to monitor the "health" of the module in the case-study, establish a context, and compare this with their own expertise in their own teaching modules. An example of this is Table 2, which provided a breakdown of students of the case-study module in the last four implementations, whereby both learner characteristics (e.g., previous education, socio-economic, ethnicity) and learning behaviour (e.g., pass rates, concurrent study) were presented. In particular, Table 2 illustrates other modules students were following in parallel, in order to help teachers identify whether there were overlaps in assessment timings.

Table 2

Breakdown of Composition of Students in Case-Study

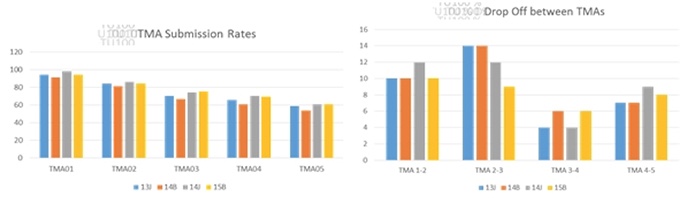

Another example of a data set is in Figure 1, which provided teachers with a visual overview of the percentage of students who completed the various teacher-marked assessments (TMAs). The right column of Figure 1 illustrates the relative drop-off of assessment submissions in comparison to the previous assessment point. Instructors were on hand to guide when required but attempted only to provide assistance in navigation and confirming instructions as far as possible. In order to encourage relevancy and reduce abstraction, participants were also encouraged to spend around 5-10 minutes looking at the data for the module to which they were affiliated. At the end of the 30 minute session, the group was brought back together; a whole class discussion and reflection took place for 20 minutes, facilitated by the instructors, on what learning had been achieved. Participants were encouraged to share their experiences, interpretations, problems, and successes with the group in an inclusive way, as indicated in Table 1.

Figure 1. Assessment submission rates over time.

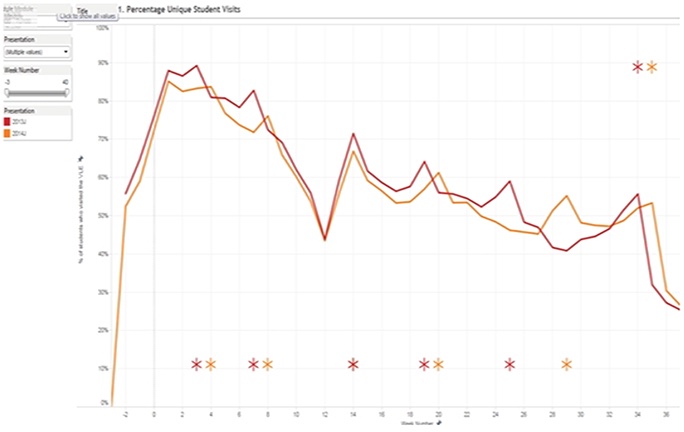

Figure 2. VLE engagement in case-study.

In Phase 2, module teams had access to more fine-grained data to allow them to "drill-down" and investigate further performance concerns or issues flagged in the "monitoring data" phase. Phase 2 was referred to as "investigating issues". Participants were encouraged to interrogate more fine-grained learning design and actual VLE engagement data (see Figure 2), to attempt to identify potential issues, and where feasible, to use the dashboards for their own taught modules in order to explore the affordances and limitations of these dashboards (40 minutes). Afterwards, again in a whole-class format, the participants shared notes and discussed their experiences with using the various learning analytics dashboards (10 minutes). Finally, the instructors presented some of their own findings and reflections of the case-study module in order to confirm, contrast, and explore further the findings with the participants (10 minutes).

Participants within this study were academic staff and instructional designers from the largest university in Europe, the Open University (OU). Participants were recruited in the spring of 2016 in two ways. First of all, as part of a wider strategic Analytics4Action project (Rienties, Boroowa et al., 2016), 50 module teams of academics, who participated in bi-monthly one-to-one sessions with learning analytics specialists to help them to use learning analytics data to intervene in their modules, were invited to join the A4AW sessions. Secondly, instructional designers and curriculum managers affiliated with these modules were invited to join the A4AW session, as well as any other member of staff who indicated an interest to join the learning analytics professional development training.

Participants were enrolled in one of ten sessions of two hours each in a large computer lab according to their time preference. In total 95 members of staff joined the A4AW, of which 63 (66%) completed the survey (see next section). Of the 63 participants, 43 indicated their name (which was optional), of whom 65% were female. Using web-crawling and OU Management Information techniques, 25 participants were identified as academics (2 professors; 9 senior lecturers/associate professors; 12 lecturers/assistant professors; 1 staff tutor; 1 PhD student), 16 were non-academics (1 senior regional manager; 1 senior instructional designer; 4 regional managers; 10 curriculum/qualification managers).

Measurement of technology acceptance model. At the end of the A4AW session, participants were asked to complete a paper-based survey about their PEU of the OU learning analytics data visualisation tools and their PU. Given that many of the learning analytics tools were in beta stages of development, it was important for us to know how easy and useful these tools were perceived to be by teachers. The TAM scales of Davis (1989) typically consist of two times six items on PU and PEU. As most TAM questionnaires have focussed on users and students in particular rather than teachers, in line with Rienties, Giesbers et al. (2016), we rephrased the items to fit our teacher context.

Measurement of perceived training needs and satisfaction with A4AW format. In addition to the six items of TAM, in line with Muñoz Carril et al. (2013), participants were asked to indicate after the A4AW whether other members would need specific professional development training to use the OU learning analytics tools (i.e., Do you expect most staff will need formal training on the data tool?). In addition, two items on the quality of the instructional provision were included (e.g., Did the instructors provide clear instructions on what to do?), and one overall satisfaction item (i.e., Overall, were you satisfied with the training?). All instruments used a Likert response scale of 1 (totally disagree) to 5 (totally agree). Finally, two open questions were included about "What do you like?" and "What could be improved?" in terms of A4AW.
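As an illustration of how multi-item Likert scales of this kind are commonly handled, the following minimal Python sketch averages a respondent's item ratings into a scale score and computes Cronbach's α, the reliability coefficient reported for the scales in Table 3. Scoring by the item mean is an assumption (the scoring rule is not stated in the text), and the function names and toy ratings are hypothetical rather than the study's data.

```python
from statistics import mean, variance


def scale_score(item_ratings):
    """Average one respondent's 1-5 Likert ratings into a single scale score."""
    return mean(item_ratings)


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item ratings.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])                      # number of items in the scale
    items = list(zip(*responses))              # regroup the ratings item by item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical ratings: four respondents answering a three-item scale.
toy = [[4, 4, 5], [3, 3, 3], [2, 3, 2], [5, 4, 5]]
scores = [scale_score(row) for row in toy]
alpha = cronbach_alpha(toy)
```

Higher values of α indicate that the items of a scale vary together, which is the usual justification for collapsing them into one score per respondent.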

Control variables. In line with Teo and Zhou (2016), we controlled for differences in A4AW experiences based upon gender, (non) academic profile, seniority, discipline, and level of teaching (e.g., year 1, 2, 3, post-graduate).

An embedded case-study was undertaken to examine the characteristics of a single individual unit (recognising its individuality and uniqueness); namely, teacher, designer, or an organisation (Jindal-Snape & Topping, 2010). Yin (2009) emphasised that a case-study investigates a phenomenon in-depth and in its natural context. Therefore, the purpose of a case-study is to get in-depth information of what is happening, why it is happening and what are the effects of what is happening. As part of the embedded case-study, the five authors were involved in the design, implementation and evaluation of the A4AW. The first, third, and fifth author originally designed and implemented the first two out of ten A4AW sessions. Afterwards, the third, fourth, and fifth author supported the implementation of the remaining eight A4AW sessions, whereby the second author and first author independently analysed and discussed the data (i.e., surveys, materials, notes, post-briefings) from the participants and the three trainers. By combining both quantitative and qualitative data from participants as well as qualitative data and reflections from the five instructors, rich intertwined narratives emerged during the ten implementations of A4AW.

With a mean score of 4.44 (SD = 0.59; Range: 2.67 - 5) the vast majority of respondents were satisfied with the A4AW provision. In line with Rienties, Giesbers et al. (2016), taking a positive cut-off value of 3.5 and a negative cut-off value of < 3.0, 89% of the participants indicated they were satisfied with the A4AW programme and 96% were satisfied with instructors in particular. In terms of perceived training needs for working with learning analytics tools at the OU, the vast majority of participants (86%) indicated that members of staff would need additional training and follow-up support. Furthermore, no significant differences in satisfaction were found in terms of gender, discipline, or functional role, indicating that participants in general were positive about the A4AW programme. In terms of open comments, several participants indicated that the format of the A4AW was appropriate, in particular the worked-out example, the instructional support, and working in pairs: "Good to have a sample module and data set to identify key issues. Short sharp and focused. Clear instructions. Excellent explanation" (R12, female, senior lecturer, business); "Briefing session good, interesting tools, good to work in pairs. Looking forward to exploring the tools further in my own time and surgeries in the new [academic] year" (R60, female, curriculum manager, health and social care). Several participants responded with positive observations about the hands-on, practical approach that the training adopted: "Preferred the hands on experience to a presentation. Need to play with tools and respond with issues" (R33, female, senior instructional designer, central unit); "Hands on and practical sessions. Good opportunity to ask questions" (R11, female, academic, business).
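The cut-off approach described above can be sketched in a few lines of Python. This is an illustrative sketch only: treating a score of exactly 3.5 as positive is an assumption, as are the function names and the toy scores, which are not the study's data.

```python
from collections import Counter


def classify(score, positive_cutoff=3.5, negative_cutoff=3.0):
    """Label a scale score using the positive and negative cut-off values."""
    if score >= positive_cutoff:      # assumption: the cut-off itself counts as positive
        return "positive"
    if score < negative_cutoff:
        return "negative"
    return "neutral"                  # scores between the two cut-offs


def share_positive(scores):
    """Proportion of respondents whose score falls in the positive band."""
    counts = Counter(classify(s) for s in scores)
    return counts["positive"] / len(scores)


# Hypothetical satisfaction scores for five respondents.
toy_scores = [4.5, 3.5, 3.2, 2.67, 5.0]
positive_share = share_positive(toy_scores)
```

Reporting the share of respondents above the positive cut-off, rather than only the mean, makes it easier to see how many individuals were actually satisfied.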

One of the advantages of using this interactive approach may be that participants felt more in control and were able to interrogate the data in a way that gave them ownership of their learning. Participants were free to experiment and trial ideas with peers rather than being presented with the "right" solution, or "best" approach to click through the learning analytics visualisations. This flexibility supported teacher autonomy, which is found to relate to greater satisfaction and engagement (Lawless & Pellegrino, 2007; Mishra & Koehler, 2006; Rienties et al., 2013). In line with the explicit purpose of the A4AW programme, the instructors specifically encouraged participants to provide constructive feedback on how to improve the current tools. At the time when the A4AW sessions were held, most OU tools visualised real/static data per module, which might have made it more difficult to make meaningful comparisons between modules: "Briefing uncovered much more info available on the module. It would be helpful to have comparative data to add context to module" (R42, Male, Regional manager, business).

Furthermore, several participants indicated that they would need more time and support to unpack the various learning analytics tools and underlying data sources: "More work on how to interpret issues underlying data/results" (R10, Female, Lecturer, law); "To have more time to work on our own modules and have list of tasks, e.g. find x, y, z, in your module. Also, we need help to interpret the data" (R49, Female, Lecturer, education). At the same time, some participants indicated that they were worried how to implement these tools in practice given their busy lives: "Very interesting, learned a lot, but there is so much data and so little time. Not sure how I will find the time to process and then use all of it" (R56, Female, lecturer, social science).

In terms of PEU of the OU learning analytics tools after the end of A4AW, as illustrated in Table 3, only 34% of participants were positive (M = 3.31, SD = 0.75, Range: 2 - 5). In contrast, most of the participants (68%) were positive in terms of PU of OU learning analytics tools (M = 3.76, SD = 0.63, Range: 2-5). In a way, this result was as expected, as participants had to navigate five different visualisation tools during the training. Several of these tools, such as the VLE Module Workbook (i.e., VLE activity per week per resource & activity, searchable) and SeAM Data Workbook (i.e., student satisfaction data sortable based upon student characteristics) were new or in beta format for some participants, while the Module Profile Tool (i.e., detailed data on the students studying a particular module presentation), Module Activity Chart (i.e., data on a week-by-week basis about number of students still registered, VLE site activity, and assessment submission) and Learning Design Tools (i.e., blueprint of learning design activities, and workload per activity per week) were already available to members of staff previously. In other words, the relatively low PEU scores of the OU learning analytics tools are probably due to the beta stage of development. Thus, most participants were optimistic about the potential affordances of learning analytics tools to allow teachers to help to support their learners, while several participants indicated that the actual tools that were available might not be as intuitive and easy to use.

Table 3

Correlation Matrix of TAM, Satisfaction and Training Needs

| Scale | M | SD | α | 1 | 2 | 3 |
|---|---|---|---|---|---|---|
| 1. Perceived ease of use (PEU) | 3.31 | 0.75 | .902 | | | |
| 2. Perceived usefulness (PU) | 3.76 | 0.62 | .831 | .244 | | |
| 3. Perceived need for training | 4.24 | 0.82 | | -.086 | .158 | |
| 4. Satisfaction training | 4.44 | 0.59 | .846 | .435** | .421** | .089 |

Note. **p <.01

In Table 3, both PEU and PU were positively correlated with satisfaction of the training, indicating that teachers and members of staff who had higher technology acceptance were more positive about the merits of the training. Conversely, teachers with a low technology acceptance were less satisfied with the format and approach of the A4AW. Given that most participants indicated that staff members needed professional development to use learning analytics tools, no significant correlations were found in terms of technology acceptance and perceived need of training for staff at the OU. In line with findings from Teo and Zhou (2016), follow-up analyses (not illustrated) indicated no significant effects in terms of gender, academic profile, level of teaching, and discipline, indicating that the identified features were common across all participants. In other words, across the board and irrespective of teachers' technology acceptance, the clear steer from participants was that additional training and support would be needed to understand, unpack, and evaluate the various learning analytics visualisations and data approaches before teachers could actively use them to support students.
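For readers who wish to see how coefficients such as those in Table 3 are obtained, the following Python sketch computes a Pearson correlation and the t statistic used to test it. It rests on two assumptions that the text does not confirm: that the reported coefficients are Pearson correlations, and that each was based on all 63 survey respondents. The function names are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def t_statistic(r, n):
    """t value for testing r against zero, with n - 2 degrees of freedom."""
    return r * sqrt(n - 2) / sqrt(1 - r ** 2)


# Coefficients taken from Table 3; n = 63 is an assumption (see above).
t_peu_satisfaction = t_statistic(0.435, 63)   # PEU with satisfaction
t_peu_pu = t_statistic(0.244, 63)             # PEU with PU
```

Under these assumptions the t value is roughly 3.77 for the PEU and satisfaction correlation and roughly 1.97 for the PEU and PU correlation. Each value would then be compared against a t distribution with n - 2 degrees of freedom to obtain a p value.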

A vast number of institutions are currently exploring whether or not to start to use learning analytics (Ferguson et al., 2016; Tempelaar et al., 2015). While several studies have indicated that professional development of online teachers is essential to effectively use technology (Muñoz Carril et al., 2013; Shattuck & Anderson, 2013) and learning analytics in particular (McKenney & Mor, 2015; Mor et al., 2015), to the best of our knowledge, we were the first to test such a learning analytics training approach on a large sample of 95 teaching staff. Using an embedded case-study approach (Jindal-Snape & Topping, 2010; Yin, 2009), in this study we aimed to unpack the lived experiences of 95 experienced teachers in an interactive learning analytics training methodology called the Analytics4Action Workshop (A4AW), which aimed to support higher education institution staff on how to use and interpret learning analytics tools and data.

In itself, both from the perspectives of the participants as well as the A4AW trainers (who are the authors of this study), the A4AW approach seemed to work well in order to unpack how teachers are using innovative learning analytics tools (see Research Question 1). In particular, pairing up participants allowed them to work in a safe, inclusive environment to discover some of the complexities of the various learning analytics tools. At the same time, in our own hands-on experiences in the ten sessions, we saw considerable anxieties about engaging with technologies and learning analytics dashboards, and about how these new approaches may impact the teachers' identities and roles in an uncertain future.

Data collected from post-training paper-based surveys revealed that almost all of the participants were satisfied with the format and delivery of A4AW and the instructors. Nonetheless, 86% of participants indicated a need for additional training and follow-up support for working with learning analytics tools, which is in line with previous findings in the broader context of online learning (Muñoz Carril et al., 2013; Shattuck et al., 2011; Stenbom et al., 2016). Qualitative data from open-ended questions pointed to satisfaction due to the hands-on and practical nature of the training. Despite satisfaction with the training, the majority of participants found the learning analytics dashboards difficult to use (low PEU); yet this outcome could be explained by the fact that the tools were at a beta stage of development. This was also reflected in the post-briefings with, and reflections of, the A4AW trainers, whereby many participants seemed to struggle with some of the basic functionalities of the various learning analytics dashboards.

In accordance with the main principles of TAM and studies examining teachers' acceptance of technology (Šumak et al., 2011), both PEU and PU were positively correlated with satisfaction with the learning analytics training. This indicated that participants with higher technology acceptance, irrespective of job role and other demographic variables, were more positive about the merits of the training, whereas those with lower technology acceptance were less satisfied with the format and approach of the A4AW (see Research Question 2). In addition, and in contrast to TAM assumptions, there was no relationship between PU and PEU. This could perhaps be explained by the fact that the tools were at a beta testing stage and therefore not yet fully developed or as user-friendly as they could be. We do acknowledge that this could be the case even when teachers interact with a refined final version of the tools.
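As an illustration of the kind of correlational check described above, the following is a minimal sketch of a Pearson correlation between scale scores. It is not the analysis script used in this study: the function name `pearson_r`, the variable names, and all scores are hypothetical and chosen only to show the calculation.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of cross-products of deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Sums of squared deviations for each variable.
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# Hypothetical mean scale scores (1-5) for six participants; NOT data from this study.
peu = [2.0, 3.5, 2.5, 4.0, 3.0, 1.5]           # perceived ease of use
pu = [4.0, 3.0, 4.5, 3.5, 2.5, 4.0]            # perceived usefulness
satisfaction = [3.5, 4.0, 4.5, 4.5, 3.0, 3.5]  # satisfaction with the training

for label, xs, ys in [
    ("PEU vs satisfaction", peu, satisfaction),
    ("PU vs satisfaction", pu, satisfaction),
    ("PU vs PEU", pu, peu),
]:
    print(f"{label}: r = {pearson_r(xs, ys):.2f}")
```

With real survey data, each list would hold one score per participant, and a significance test would normally accompany each coefficient.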

As indicated by this study and others (Herodotou et al., 2017; Schwendimann et al., 2017; van Leeuwen et al., 2015), providing teachers with data visualisations to prompt them to start with a teacher inquiry process and to intervene in an evidence-based manner is notoriously complex. In particular, as most institutions have various learner (e.g., demographics) and learning data (e.g., last access to library, number of lectures attended) of their students stored in various data sets that are not necessarily linked or using the same data definitions, providing a holistic perspective of the learning journey of each student is a challenge (Heath & Fulcher, 2017; Rienties, Boroowa et al., 2016). Especially as learners and teachers are increasingly using technologies outside the formal learning environment (e.g., Facebook, WhatsApp), teachers need to be made aware during their professional development that every data visualisation using learning analytics is by definition an abstraction of reality (Fynn, 2016; Slade & Boroowa, 2014).

A limitation of this study is the self-reported nature of the measurements of teachers' level of technology acceptance, although we contrasted these self-reports with the lived experiences of the five trainers during and after the sessions. Potentially, more fine-grained insights could be gained if interactions with learning analytics tools and peers were also captured. Moreover, as this embedded case-study was nested within one large distance learning organisation, this raises issues of generalisability of the outcomes to other universities and to academic or other staff. Also, institutions that offer time and space to staff to experiment with learning analytics tools and data might present a different picture in terms of usefulness and acceptance. Allowing time for experimentation might lead teachers to engage with tools more effectively due to the absence of time pressure and potential anxiety (Rienties et al., 2013).

It would be fruitful if future research examined staff engagement with learning analytics tools over time to capture how initial perceptions of ease of use and usefulness might change after staff gain the skills to use these tools effectively. To this end, more research is needed to examine training methodologies that could support interaction with learning analytics tools and alleviate any fears and concerns related to the tools' use and acceptance. Despite the above-mentioned limitations, we believe we are one of the first to provide a large number of staff with hands-on professional development opportunities to use learning analytics dashboards. Our findings do suggest that if institutions want to adopt learning analytics approaches, it is essential to provide effective professional development opportunities for learning analytics and in particular provide extra support for teachers and instructional design staff with low technology acceptance.

In general, our study amongst 95 experienced teachers indicated that most teachers found our learning analytics dashboards a potentially useful addition to their teaching and learning practice. Also, the interactive format of the A4AW approach was mostly appreciated, in particular the opportunities to work in pairs and to get "one's hands dirty" with actual data and visualisations. At the same time, our own lived experiences during these 10 A4AW sessions indicated that many teachers found it difficult to interpret the various data sources and learning dashboards, and to make meaningful connections between the various data components. In part this may be due to the lab environment and task design, but in part this also highlighted a need for data literacy and further training to unpack the information from the various learning analytics dashboards.

Some participants felt more comfortable exploring the various dashboards and data in an autodidactic manner, perhaps given their academic role or (quantitative) research background, while others struggled to make sense of the various dashboards. Therefore, we are currently working at the OU to provide more personalised professional development programmes, while at the same time providing simple hands-on sessions for early adopters and "proficient" teachers who already have high technology acceptance and a good understanding of OU data. As highlighted in this and other studies, making sense of data using learning analytics dashboards is not as straightforward as the beautiful visualisations seem to suggest.

Ajzen, I. (1991). The theory of planned behavior. Organizational Behavior and Human Decision Processes, 50(2), 179-211. doi: 10.1016/0749-5978(91)90020-T

Charleer, S., Klerkx, J., Duval, E., De Laet, T., & Verbert, K. (2016, September 13-16). Creating effective learning analytics dashboards: Lessons learnt. In K. Verbert, M. Sharples, & T. Klobučar (Eds.). Adaptive and adaptable learning. Paper presented at the 11th European Conference on Technology Enhanced Learning, EC-TEL 2016 (pp. 42-56). Lyon, France: Springer International Publishing.

Daley, S. G., Hillaire, G., & Sutherland, L. M. (2016). Beyond performance data: Improving student help seeking by collecting and displaying influential data in an online middle-school science curriculum. British Journal of Educational Technology, 47(1), 121-134. doi: 10.1111/bjet.12221

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319-340.

Davis, F. D., Bagozzi, R. P., & Warshaw, P. R. (1989). User acceptance of computer technology: A comparison of two theoretical models. Management Science, 35(8), 982-1002.

Ferguson, R., Brasher, A., Cooper, A., Hillaire, G., Mittelmeier, J., Rienties, B., ... Vuorikari, R. (2016). Research evidence of the use of learning analytics: Implications for education policy. In R. Vuorikari & J. Castano-Munoz (Eds.), A European framework for action on learning analytics (pp. 1-152). Luxembourg: Joint Research Centre Science for Policy Report.

Fynn, A. (2016). Ethical considerations in the practical application of the Unisa socio-critical model of student success. International Review of Research in Open and Distributed Learning, 17(6). doi: 10.19173/irrodl.v17i6.2812

Heath, J., & Fulcher, D. (2017). From the trenches: Factors that affected learning analytics success with an institution-wide implementation. Paper presented at the 7th International learning analytics & knowledge conference (LAK17; pp. 29-35). Vancouver, BC: Practitioner Track.

Herodotou, C., Rienties, B., Boroowa, A., Zdrahal, Z., Hlosta, M., & Naydenova, G. (2017). Implementing predictive learning analytics on a large scale: The teacher's perspective. Paper presented at the Proceedings of the seventh international learning analytics & knowledge conference (pp. 267-271). Vancouver, British Columbia, Canada: ACM.

Jindal-Snape, D., & Topping, K. J. (2010). Observational analysis within case-study design. In S. Rodrigues (Ed.), Using analytical frameworks for classroom research collecting data and analysing narrative (pp. 19-37). Dordrecht: Routledge.

Kuzilek, J., Hlosta, M., Herrmannova, D., Zdrahal, Z., & Wolff, A. (2015). OU Analyse: Analysing at-risk students at The Open University. Learning Analytics Review, LAK15-1, 1-16. Retrieved from http://www.laceproject.eu/publications/analysing-at-risk-students-at-open-university.pdf

Jivet, I., Scheffel, M., Specht, M., & Drachsler, H. (2018). License to evaluate: preparing learning analytics dashboards for educational practice. In Proceedings of the 8th International Conference on Learning Analytics and Knowledge - LAK'18 (pp. 31-40). Sydney, New South Wales, Australia: ACM Press. doi: 10.1145/3170358.3170421

Lawless, K. A., & Pellegrino, J. W. (2007). Professional development in integrating technology into teaching and learning: Knowns, unknowns, and ways to pursue better questions and answers. Review of Educational Research, 77(4), 575-614. doi: 10.3102/0034654307309921

McKenney, S., & Mor, Y. (2015). Supporting teachers in data-informed educational design. British Journal of Educational Technology, 46(2), 265-279. doi: 10.1111/bjet.12262

Mishra, P., & Koehler, M. J. (2006). Technological pedagogical content knowledge: A framework for teacher knowledge. Teachers College Record, 108(6), 1017-1054.

Mor, Y., Ferguson, R., & Wasson, B. (2015). Editorial: Learning design, teacher inquiry into student learning and learning analytics: A call for action. British Journal of Educational Technology, 46(2), 221-229. doi: 10.1111/bjet.12273

Muñoz Carril, P. C., González Sanmamed, M., & Hernández Sellés, N. (2013). Pedagogical roles and competencies of university teachers practicing in the e-learning environment. International Review of Research in Open and Distributed Learning, 14(3). doi: 10.19173/irrodl.v14i3.1477

Papamitsiou, Z., & Economides, A. (2016). Learning analytics for smart learning environments: A meta-analysis of empirical research results from 2009 to 2015. In J. M. Spector, B. B. Lockee, & D. M. Childress (Eds.), Learning, design, and technology: An international compendium of theory, research, practice, and policy (pp. 1-23). Cham: Springer International Publishing.

Pynoo, B., Devolder, P., Tondeur, J., van Braak, J., Duyck, W., & Duyck, P. (2011). Predicting secondary school teachers' acceptance and use of a digital learning environment: A cross-sectional study. Computers in Human Behavior, 27(1), 568-575. doi: 10.1016/j.chb.2010.10.005

Rienties, B., Boroowa, A., Cross, S., Kubiak, C., Mayles, K., & Murphy, S. (2016). Analytics4Action evaluation framework: A review of evidence-based learning analytics interventions at Open University UK. Journal of Interactive Media in Education, 1(2), 1-12. doi: 10.5334/jime.394

Rienties, B., Brouwer, N., & Lygo-Baker, S. (2013). The effects of online professional development on higher education teachers' beliefs and intentions towards learning facilitation and technology. Teaching and Teacher Education, 29, 122-131. doi: 10.1016/j.tate.2012.09.002

Rienties, B., Cross, S., & Zdrahal, Z. (2016). Implementing a learning analytics intervention and evaluation framework: what works? In B. Kei Daniel (Ed.), Big data and learning analytics in Higher Education (pp. 147-166). Cham: Springer International Publishing. doi: 10.1007/978-3-319-06520-5_10

Rienties, B., Giesbers, S., Lygo-Baker, S., Ma, S., & Rees, R. (2016). Why some teachers easily learn to use a new Virtual Learning Environment: a Technology Acceptance perspective. Interactive Learning Environments, 24(3), 539-552. doi: 10.1080/10494820.2014.881394

Rienties, B., & Toetenel, L. (2016). The impact of learning design on student behaviour, satisfaction and performance: a cross-institutional comparison across 151 modules. Computers in Human Behavior, 60, 333-341. doi: 10.1016/j.chb.2016.02.074

Sanchez-Franco, M. J. (2010). WebCT - The quasimoderating effect of perceived affective quality on an extending Technology Acceptance Model. Computers & Education, 54(1), 37-46. doi: 10.1016/j.compedu.2009.07.005

Schwendimann, B. A., Rodríguez-Triana, M. J., Vozniuk, A., Prieto, L. P., Boroujeni, M. S., Holzer, A., ... Dillenbourg, P. (2017). Perceiving learning at a glance: a systematic literature review of learning dashboard research. IEEE Transactions on Learning Technologies, 10(1), 30-41. doi: 10.1109/TLT.2016.2599522

Shattuck, J., & Anderson, T. (2013). Using a design-based research study to identify principles for training instructors to teach online. International Review of Research in Open and Distributed Learning, 14(5). doi: 10.19173/irrodl.v14i5.1626

Shattuck, J., Dubins, B., & Zilberman, D. (2011). Maryland online's inter-institutional project to train higher education adjunct faculty to teach online. International Review of Research in Open and Distributed Learning, 12(2). doi: 10.19173/irrodl.v12i2.933

Slade, S., & Boroowa, A. (2014). Policy on ethical use of student data for learning analytics. Milton Keynes: Open University UK.

Stenbom, S., Jansson, M., & Hulkko, A. (2016). Revising the community of inquiry framework for the analysis of one-to-one online learning relationships. The International Review of Research in Open and Distributed Learning, 17(3). doi: 10.19173/irrodl.v17i3.2068

Šumak, B., Heričko, M., & Pušnik, M. (2011). A meta-analysis of e-learning technology acceptance: The role of user types and e-learning technology types. Computers in Human Behavior, 27(6), 2067-2077. doi: 10.1016/j.chb.2011.08.005

Tempelaar, D. T., Rienties, B., & Giesbers, B. (2015). In search for the most informative data for feedback generation: Learning analytics in a data-rich context. Computers in Human Behavior, 47, 157-167. doi: 10.1016/j.chb.2014.05.038

Teo, T. (2010). A path analysis of pre-service teachers' attitudes to computer use: applying and extending the technology acceptance model in an educational context. Interactive Learning Environments, 18(1), 65-79. doi: 10.1080/10494820802231327

Teo, T., & Zhou, M. (2016). The influence of teachers' conceptions of teaching and learning on their technology acceptance. Interactive Learning Environments, 25(4), 1-15. doi: 10.1080/10494820.2016.1143844

van Leeuwen, A., Janssen, J., Erkens, G., & Brekelmans, M. (2015). Teacher regulation of cognitive activities during student collaboration: Effects of learning analytics. Computers & Education, 90, 80-94. doi: 10.1016/j.compedu.2015.09.006

Verbert, K., Duval, E., Klerkx, J., Govaerts, S., & Santos, J. L. (2013). Learning Analytics Dashboard Applications. American Behavioral Scientist, 57(10), 1500-1509. doi: 10.1177/0002764213479363

Yin, R. K. (2009). Case-study research: Design and methods (5th ed.). Thousand Oaks: Sage.

Making Sense of Learning Analytics Dashboards: A Technology Acceptance Perspective of 95 Teachers by Bart Rienties, Christothea Herodotou, Tom Olney, Mat Schencks, and Avi Boroowa is licensed under a Creative Commons Attribution 4.0 International License.