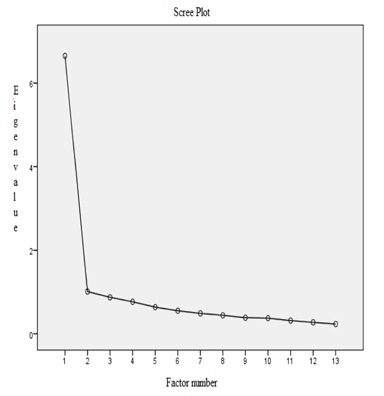

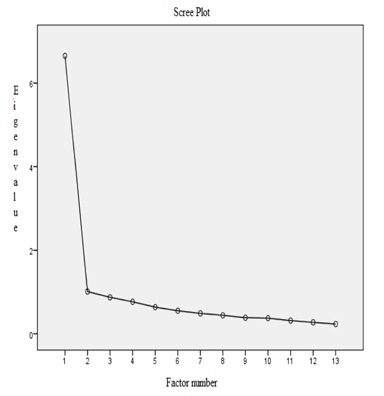

Figure 1. Scree plot for the EFMC-MOOC sample.

Volume 19, Number 4

Juan Antonio Valdivia Vázquez*, Maria Soledad Ramirez-Montoya, and Jaime Ricardo Valenzuela González

Tecnologico de Monterrey; *corresponding author

Understanding factors promoting or preventing participants' completion of a massive open online course (MOOC) is an important research topic, as attrition rates remain high for this environment. Motivation and digital skills have been identified as aspects promoting student engagement in a MOOC, and they are considered necessary for success. However, evaluation of these factors has often relied on tools for which the psychometric properties have not been explored; this suggests that researchers may be working with potentially inaccurate information for judging participants' profiles. Through a set of analyses (t-test, exploratory factor analysis, correlation), this study explores the relationship between information collected by administering valid and reliable pre and post instruments to measure traits of MOOC attendees. The findings from this study support previously reported outcomes concerning the strong relationships among motivation, previous knowledge, and perceived satisfaction factors for MOOC completers. Moreover, this study provides evidence of the feasibility of developing valid assessments for evaluation purposes.

Keywords: MOOC assessment, exploratory factor analysis, assessment validity

Since their emergence in 2008, massive open online courses (MOOCs) have energized the academic community because their potential extends well beyond offering a flexible educational alternative (Gaebel, 2013). With applications ranging from college instruction (e.g., blended learning; Rayyan et al., 2016) to international workforce training (Garrido et al., 2016), educators constantly expand the scope of MOOCs, advancing educational content design and technological platforms (Zhang & Nunamaker, 2003). Because these applications require fewer resources and lower financial costs than traditional options, MOOC projects have become a feasible response to contemporary massive educational challenges (Pegler, 2012).

However, beyond a merely educational response perspective, MOOCs have the potential to become a massive research laboratory (Diver & Martinez, 2015). By individualizing learning, this environment challenges dynamics that are well examined in traditional educational settings (Mazoue, 2013). For instance, unlike traditional courses, MOOCs' characteristics not only determine the ways in which content is delivered (e.g., asynchronously, massively, etc.; Kilgore, Bartoletti, & Freih, 2015), but they also challenge what is known about students' learning characteristics (e.g., learning and habit styles, interest in learning, etc.; Barcena, Martin-Monje, & Read, 2015). Moreover, given that MOOCs are courses designed to meet the needs of huge audiences (Kennedy, 2014), a continuous research approach is required to better understand the teaching and learning characteristics present in this format. Therefore, researchers are constantly contributing to the literature by examining MOOCs' technology, design, delivery conditions, and learning and assessment, among other aspects (Daradoumis, Bassi, Xhafa, & Caballe, 2013).

However, despite an increasing amount of research promoting learning aspects in MOOC participants, MOOC completion rates remain low (0.7%-52.1%, with a median value of 12.6%; Jordan, 2015). This makes it necessary to examine what prevents or promotes an attendee's completion of a MOOC, as completion rates challenge efforts to ensure a MOOC meets quality features for its educational content (Kilgore, Bartoletti, & Freih, 2015) or design (Kerr, Houston, Marks, & Richford, 2015).

In this regard, educational and psychological aspects have been reexamined to compare outcomes between traditional and MOOC learning settings (e.g., students' characteristics, course design, etc.; Durksen, Chu, Ahmad, Radil, & Daniels, 2016). However, given that within a traditional setting learners' expectations are more standardized and course completion rates can be a sign of student success (Littlejohn, Hood, Milligan, & Mustain, 2016), researchers must evaluate outcomes from this environment when working with MOOC attendees.

Examining MOOC completers has become a common strategy to evaluate participants' performance (time spent, execution of tasks, etc.; Stevanovic, 2014), where research shows motivation and digital skills are features strongly supported by MOOC literature to predict learners' performance (Pursel, Zhang, Jablokow, Choi, & Velegol, 2016; Xu & Yang, 2016).

Given that motivation is strongly related to student engagement (Shapiro et al., 2017), MOOC researchers have included this factor in their agendas. Educators now regard motivation as an important ingredient for participants' self-regulated learning (Magen-Nagar & Cohen, 2017) and as a requirement to succeed when acquiring content from a MOOC (Barak, Watted, & Haick, 2016).

Although social motivation is an important aspect for traditional learners, inner factors are required to learn from a MOOC (e.g., intrinsic and extrinsic motivations; Xiong et al., 2015). Because the scope of MOOCs enables the delivery of education asynchronously and massively (Chen, 2013), continuing to examine motivation remains a fruitful direction for research (de Barba, Kennedy, & Ainley, 2016) as MOOCs reach enormous and diverse audiences (Admiraal, Huisman, & Pilli, 2015).

On the other hand, digital skills are essential features to address in MOOC research, as technology is part of the MOOC environment by definition (Rivera & Ramírez, 2015). Moreover, these courses evolve continuously thanks to educational technology (Yuan & Powell, 2013). It has been found that people with high levels of digital skills choose to participate in MOOCs whereas people with lower levels opt for traditional training (Castaño-Muñoz, Kreijns, Kalz, & Punie, 2017). Thus, limited technology skills hamper participants' opportunities to finish a MOOC as this format involves a high level of self-management of educational content (Onah, Sinclair, & Boyatt, 2014).

Among the skills required to attend a MOOC, searching and processing information and digital communication are central (Aesaert, van Nijlen, Vanderlinde, & van Braak, 2014). Thus, it is not surprising that researchers are interested in continuing to evaluate motivation and digital skills given their importance for MOOC education.

Although traditional assessments (e.g., scoring, providing feedback, etc.) are commonly considered for examining a learner's motivation and digital skills, these kinds of assessment cannot be used in a MOOC design because a course offered under this format regularly reaches a massive audience (Admiraal, Huisman, & Pilli, 2015). Even though the validity of traditional tools used to assess readiness for e-learning remains uncertain (Farid, 2014), criterion-referenced approaches (Dray, Lowenthal, Miszkiewicz, Ruiz-Primo, & Marczynski, 2011) and theoretical or empirical data can be used to develop valid and reliable tools to explore factors contributing to or impeding students' participation in MOOCs (Xiong et al., 2015).

Given the existing need to reinforce tools used to evaluate motivation and digital skills traits, along with the data-enriched environment of a MOOC (Thille, Scheneider, Piech, Halawa, & Greene, 2014), information collected from MOOC participants is a suitable opportunity to research motivation and digital skill assessments.

Because it is imperative to understand learning specific to the MOOC context (Littlejohn et al., 2016), and to continuously gather information about factors encouraging MOOC completion (Blackmore, 2014), this study examines participants' motivation and digital knowledge characteristics via data collection using a new set of pre assessments and post assessments.

The objective of this study is to examine relationships between motivation and digital aspects influencing participants to attend a MOOC. In addition, using information obtained from MOOC completers, this examination is extended to evaluate pre-reports and post-reports. To accomplish this objective, procedures were executed to (a) compare initial information between MOOC completers and non-completers, (b) evaluate psychometric properties of the post-measurement tool, and (c) correlate initial and ending information from MOOC completers.

Participants (n = 1,315; males = 746, females = 589) from a MOOC titled "La reforma energética y sus oportunidades" (Energetic reform and its opportunities; Tecnológico de Monterrey, 2017) comprised the data set for this study. Their ages ranged from 15 to 77 years (mean = 30.88, standard deviation [SD] = 10.55), and they reported the following educational levels: high school, 23%; associate's degree, 9%; bachelor's degree, 50%; graduate degree, 14%; and not reported, 4%. In terms of discipline, this pool reported having the following backgrounds: health, 1.75%; art and humanities, 3.35%; business, 12.77%; social sciences, 23.65%; science and engineering, 29.81%; and not defined, 28.66%. Most participants attended this MOOC from a Mexican location (97.5%); the remaining locations included Argentina, Colombia, and Ecuador. For a second set of analyses, available information from participants who finished the mentioned MOOC was included (n = 313).

For the first set of analyses, information collected using the second section of the "Encuesta inicial sobre intereses, motivaciones y conocimientos previos en MOOC" ("Initial assessment to evaluate interests, motivation, and previous knowledge"; EIIMC-MOOC; Valenzuela, Mena, & Ramírez-Montoya, 2017a) was evaluated. This section collects information regarding participants' reported motivation and previous knowledge related to attending this MOOC. The EIIMC-MOOC presents reliability coefficients of α = .898 for the overall structure and α1 = .872, α2 = .879, and α3 = .728 for motivation, previous general knowledge (measuring digital skills), and previous specific knowledge factors, respectively (Valdivia Vazquez, Valenzuela, & Ramírez-Montoya, 2017).

For the second set of analyses, we used the "Encuesta final sobre intereses, motivaciones y conocimientos previos en MOOC" ("Ending assessment for interests, motivations, and previous knowledge"; EFMC-MOOC; Valenzuela, Mena, & Ramírez-Montoya, 2017b). The EFMC-MOOC is a mixed-format, 17-item tool designed to evaluate the changes in motivation and knowledge that participants experience after attending a MOOC related to the topic of energy. Given that the EFMC-MOOC was conceived to post-evaluate participants' motivation and knowledge, its second section emulates the EIIMC-MOOC tool in content and format. Examples of the items include "Este curso satisfizo las necesidades de formación que me llevaron a inscribirme en él" ("This course satisfied the training needs that motivated me to enroll in it"; motivation and interests) and "Creo que este curso me permitió adquirir los conocimientos básicos de los contenidos estudiados" ("I believe this course allowed me to acquire basic knowledge from the content explored"; acquired knowledge). Experts in education and methodology have evaluated the EFMC-MOOC for content validity, and its format and content have been piloted to evaluate examinees' comprehension (Valdivia, Valenzuela, & Ramirez-Montoya, 2017). For this study, the second section of the EFMC-MOOC was examined for its psychometric properties (the first section collects demographics).

The EIIMC-MOOC and EFMC-MOOC were administered at the beginning and end of the "La reforma energética y sus oportunidades" (Energetic reform and its opportunities) MOOC using links embedded in the course. These links took participants to an online survey service where, for each tool, directions for answering were presented along with statements about confidentiality and authorization to use the information collected. Participation was voluntary, without incentive, and the time needed to complete the survey was approximately 30 minutes.

Participants from the "La reforma energética y sus oportunidades" MOOC were divided into two groups: those who completed only the initial tool (non-completers, group 1) and those who completed both tools (completers, group 2). These groups served as a proxy for participants who did not finish and who finished the course, respectively. Thus, to identify profile differences and similarities, as a first set of analyses, a series of independent-samples t-tests compared the two groups on the scores for each factor (motivation, previous general knowledge, and previous specific knowledge) measured by the EIIMC-MOOC tool.

Next, the structure of the EFMC-MOOC tool was examined via exploratory factor analysis using the principal axis factoring method with oblique rotation (direct oblimin); reliability was estimated via Cronbach's alpha. Examining the EFMC-MOOC structure allowed the instruments' scopes to be contrasted, as psychometric properties for the EIIMC-MOOC have already been reported (Valdivia Vazquez, Valenzuela, & Ramírez-Montoya, 2017).

Finally, because content validity for both instruments had already been established by a panel of experts before the psychometric properties of the EFMC-MOOC tool were examined, a correlation analysis was conducted to evaluate associations between the pre-course and post-course information collected from participants in group 2; to this end, scores from the initial and ending tools were used as variables. All analyses were executed using SPSS 24.0 software.

Table 1 shows that on average, participants who finished the MOOC scored higher across variables (motivation, previous general knowledge, and specific knowledge) measured by the initial survey. However, although all mean scores presented significant differences when compared to scores from participants who did not finish the course, the results represented a low effect size (r range of .097 to .223; see Table 2).

Table 1

Means by Group

| Variable | MOOC finished? | N | Mean | Std. dev. | Std. error |
|---|---|---|---|---|---|
| Motivation | N | 1004 | 19.55 | 6.086 | .192 |
| | Y | 313 | 20.88 | 4.712 | .266 |
| General knowledge | N | 1004 | 16.48 | 5.430 | .171 |
| | Y | 313 | 17.68 | 3.837 | .217 |
| Specific knowledge | N | 1004 | 5.3 | 2.139 | .068 |
| | Y | 313 | 5.99 | 1.702 | .096 |

Table 2

Independent Samples t-Test

| Variable | Equal variances | F | t | df | Mean diff. | Std. error diff. | 95% CI lower | 95% CI upper | Effect size |
|---|---|---|---|---|---|---|---|---|---|
| Motivation | assumed | 6.47* | -3.56* | 1315 | -1.34 | 0.38 | -2.07 | -0.60 | 0.10 |
| | not assumed | | -4.07* | 664.95 | -1.34 | 0.33 | -1.99 | -0.69 | 0.15 |
| General knowledge | assumed | 20.04* | -3.64* | 1315 | -1.20 | 0.33 | -1.85 | -0.55 | 0.10 |
| | not assumed | | -4.35* | 734.27 | -1.20 | 0.28 | -1.74 | -0.66 | 0.16 |
| Specific knowledge | assumed | 18.35* | -5.17* | 1315 | -0.68 | 0.13 | -0.94 | -0.42 | 0.14 |
| | not assumed | | -5.81* | 646.06 | -0.68 | 0.12 | -0.91 | -0.45 | 0.22 |

Notes. a) *Significant at the p < .01 level. b) Lower and upper bounds of the 95% confidence interval of the difference.
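The group comparison can be approximated from the summary statistics in Table 1 alone. The sketch below is illustrative rather than the authors' SPSS procedure: it computes Welch's t (equal variances not assumed) with Welch-Satterthwaite degrees of freedom, and converts t to an effect size with r = sqrt(t² / (t² + df)). The paper does not state which effect-size formula was used; this one is an assumption that happens to be consistent with the reported range. The function names `welch_t` and `effect_size_r` are hypothetical.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t and Welch-Satterthwaite df from group summary statistics."""
    v1 = sd1 ** 2 / n1  # squared standard error of group 1 mean
    v2 = sd2 ** 2 / n2  # squared standard error of group 2 mean
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


def effect_size_r(t, df):
    """Effect size r derived from a t statistic (assumed formula)."""
    return math.sqrt(t ** 2 / (t ** 2 + df))


# Motivation row of Table 1: non-completers first, completers second.
t, df = welch_t(19.55, 6.086, 1004, 20.88, 4.712, 313)
r = effect_size_r(t, df)
print(f"t = {t:.2f}, df = {df:.2f}, r = {r:.2f}")
```

Because the inputs are the rounded values printed in Table 1, the result lands close to, but not exactly on, the "not assumed" motivation row of Table 2.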

Descriptive statistics indicated that the normality assumptions were met. Across the 13 items, skewness had a mean of -1.39 (range from -2.34 to -0.76), and kurtosis had a mean of 3.29 (range from -0.009 to 9.13). For large samples, absolute values greater than 3.0 for skewness and 10.0 for kurtosis indicate problematic indices (Kline, 2005); all items fell below these limits.

The Kaiser-Meyer-Olkin (KMO) measure verified the sampling adequacy for the analysis; the result was .97, which is well above the acceptable limit of .5 (Kaiser, 1974). Bartlett's test of sphericity, χ²(78) = 2123.559, p < .001, indicated that correlations between items were sufficiently large to run an exploratory factor analysis.
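As a brief illustration of where the 78 degrees of freedom come from, Bartlett's statistic can be written as χ² = -[(n - 1) - (2p + 5)/6] · ln|R| with df = p(p - 1)/2, where R is the item correlation matrix, n the number of respondents, and p the number of items. The sketch below applies that formula; the log-determinant is a hypothetical input (the paper does not report it), and n = 313 assumes every completer answered all 13 items.

```python
def bartlett_sphericity(log_det_r, n, p):
    """Return (chi_square, df) for Bartlett's test of sphericity.

    log_det_r: natural log of the determinant of the p x p correlation matrix
    n: number of respondents
    p: number of items
    """
    chi_square = -((n - 1) - (2 * p + 5) / 6) * log_det_r
    df = p * (p - 1) // 2
    return chi_square, df


# Hypothetical log-determinant, chosen only for illustration.
chi_square, df = bartlett_sphericity(log_det_r=-6.92, n=313, p=13)
print(f"chi-square({df}) = {chi_square:.2f}")
```

With 13 items, df = 13 × 12 / 2 = 78, which matches the value reported for this sample.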

An initial analysis was run to obtain eigenvalues for each factor in the data. Two factors had eigenvalues over Kaiser's criterion of 1. In combination, they explained 52.18% of the variance (see Table 3).

Table 3

Total Variance Explained for the EFMC-MOOC Sample

| Factor | Initial eigenvalues: total | Initial eigenvalues: % of variance | Initial eigenvalues: cumulative % | Extraction sums of squared loadings: total | Extraction sums of squared loadings: % of variance | Extraction sums of squared loadings: cumulative % |
|---|---|---|---|---|---|---|
| 1 | 6.65 | 51.15 | 51.15 | 6.19 | 47.64 | 47.64 |
| 2 | 1.01 | 7.77 | 58.93 | 0.59 | 4.54 | 52.18 |
| 3 | 0.87 | 6.71 | 65.64 | | | |
| 4 | 0.76 | 5.89 | 71.53 | | | |
| 5 | 0.63 | 4.90 | 76.43 | | | |
| 6 | 0.55 | 4.24 | 80.67 | | | |
| 7 | 0.48 | 3.75 | 84.43 | | | |
| 8 | 0.44 | 3.40 | 87.84 | | | |
| 9 | 0.38 | 2.96 | 90.80 | | | |
| 10 | 0.37 | 2.88 | 93.68 | | | |
| 11 | 0.34 | 2.43 | 96.11 | | | |
| 12 | 0.27 | 2.10 | 98.20 | | | |
| 13 | 0.23 | 1.80 | 100 | | | |

Note. Extraction method: principal axis factoring.
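The initial-eigenvalue columns of Table 3 follow from simple arithmetic: for a correlation matrix of 13 items, each eigenvalue divided by 13 gives its share of variance, and Kaiser's criterion retains factors with eigenvalues above 1. The following minimal sketch reproduces that calculation from the rounded eigenvalues printed in the table; `kaiser_summary` is a hypothetical helper, not part of any statistics package.

```python
# Initial eigenvalues as printed in Table 3 (rounded to two decimals).
eigenvalues = [6.65, 1.01, 0.87, 0.76, 0.63, 0.55, 0.48,
               0.44, 0.38, 0.37, 0.34, 0.27, 0.23]


def kaiser_summary(values):
    """Return (eigenvalue, % variance, cumulative %, retained?) per factor."""
    n_items = len(values)  # total variance of a correlation matrix
    rows, cumulative = [], 0.0
    for value in values:
        pct = value / n_items * 100
        cumulative += pct
        rows.append((value, pct, cumulative, value > 1.0))
    return rows


rows = kaiser_summary(eigenvalues)
retained = sum(1 for row in rows if row[3])
for value, pct, cumulative, keep in rows[:3]:
    print(f"{value:.2f}  {pct:6.2f}%  {cumulative:6.2f}%  retained={keep}")
print("factors retained:", retained)
```

Since the inputs are rounded, later rows can differ from the table in the second decimal place.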

Kaiser's criterion is a good indicator of the number of factors to retain when the sample size exceeds 250 and the average retained communality is .51 or higher (Field, 2009). Table 4 shows the item communalities extracted for this solution.

Table 4

Communalities for the EFMC-MOOC Sample

| Item | Initial | Extraction |
|---|---|---|
| 1 | 0.43 | 0.45 |
| 2 | 0.52 | 0.58 |
| 3 | 0.64 | 0.62 |
| 4 | 0.45 | 0.42 |
| 5 | 0.48 | 0.45 |
| 6 | 0.35 | 0.27 |
| 7 | 0.50 | 0.45 |
| 8 | 0.51 | 0.66 |
| 9 | 0.50 | 0.54 |
| 10 | 0.42 | 0.40 |
| 11 | 0.60 | 0.66 |
| 12 | 0.57 | 0.58 |
| 13 | 0.67 | 0.71 |

Note. Extraction method: principal axis factoring.
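The average-communality condition can be checked directly from the extraction column of Table 4. The short sketch below does so, and also shows that the summed communalities divided by the number of items roughly recover the share of variance explained by the two extracted factors; the small gap from the reported 52.18% comes from rounding in the table.

```python
from statistics import mean

# Extraction communalities as printed in Table 4.
extraction = [0.45, 0.58, 0.62, 0.42, 0.45, 0.27, 0.45,
              0.66, 0.54, 0.40, 0.66, 0.58, 0.71]

average_communality = mean(extraction)
# Summed communalities over the number of items approximate the
# proportion of total variance explained by the extracted factors.
explained_pct = sum(extraction) / len(extraction) * 100

print(f"average communality = {average_communality:.3f}")
print(f"variance explained  = {explained_pct:.2f}%")
print("meets .51 threshold:", average_communality >= 0.51)
```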

The scree plot showed a clear inflexion that would justify retaining two factors (Figure 1). Thus, given the large sample size, convergence of the scree plot, and Kaiser criterion found on this solution, two factors were retained in the final analysis.

Figure 1. Scree plot for the EFMC-MOOC sample.

A clear pattern matrix was obtained for this two-factor solution (see Table 5). The items that loaded above 0.40 on the same factor suggested that Factor 1 represents a motivation and interest dimension (6 items), whereas Factor 2 represents gained knowledge (4 items).

Table 5

Pattern Matrix for the EFMC-MOOC Sample

| Item | Factor 1 | Factor 2 |
|---|---|---|
| 1 | 0.91 | |
| 2 | 0.63 | |
| 3 | 0.44 | |
| 4 | 0.72 | |
| 5 | 0.71 | |
| 6 | 0.80 | |
| 7 | 0.66 | |
| 8 | 0.86 | |
| 9 | 0.72 | |
| 10 | | |
| 11 | 0.53 | |
| 12 | | |
| 13 | | |

Notes. Extraction method: principal axis factoring. Rotation method: oblimin with Kaiser normalization. Rotation converged in 10 iterations.

Reliability, estimated via Cronbach's alpha, was α = .898 for the overall structure. The values were α1 = .829 and α2 = .882 for Factors 1 and 2, respectively.
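For readers unfamiliar with the coefficient, Cronbach's alpha is k/(k - 1) × (1 - Σ item variances / variance of total scores), where k is the number of items. The sketch below implements that definition on a small set of hypothetical responses; the data are invented for illustration and are not drawn from the EFMC-MOOC sample.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for complete data.

    scores: list of respondents, each a list of item scores of equal length.
    """
    k = len(scores[0])
    item_variances = [variance([row[i] for row in scores]) for i in range(k)]
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical responses: rows are respondents, columns are three items.
responses = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 3],
    [4, 4, 5],
]
alpha = cronbach_alpha(responses)
print(f"alpha = {alpha:.3f}")
```

The same function could be applied separately to the items of each factor to obtain subscale coefficients.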

Correlations between the pre-course and post-course information from participants who finished the MOOC were significant (p < .01) across all factors examined. Of the initial factors, motivation showed the highest correlations with the factors from the final tool (r = .606 and r = .506 for Factors 1 and 2, respectively). Previous general knowledge and previous specific knowledge also correlated moderately and significantly with final Factors 1 and 2, although previous specific knowledge presented the weaker relationship (see Table 6).

Table 6

Correlations Between Initial and Final Information

| Variable | n | Factor 1 | Factor 2 |
|---|---|---|---|
| Factor 1 | 313 | 1 | 0.70** |
| Factor 2 | 313 | 0.70** | 1 |
| Motivation | 294 | 0.61** | 0.51** |
| Previous general knowledge | 296 | 0.50** | 0.47** |
| Previous specific knowledge | 301 | 0.36** | 0.40** |

Note. **Correlation significant at the 0.01 level (two-tailed). Factor 1 = professional development; Factor 2 = technology skills.
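The differing n values in Table 6 (294 to 313) are consistent with each correlation being computed only on participants who had scores on both variables, although the paper does not state how missing data were handled. Under that assumption, a Pearson correlation with pairwise exclusion can be sketched as follows; the scores and the function name `pearson_pairwise` are hypothetical.

```python
import math


def pearson_pairwise(x, y):
    """Pearson r using only the pairs where both values are present.

    Returns (r, n), where n is the number of complete pairs used.
    """
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    n = len(pairs)
    mean_x = sum(a for a, _ in pairs) / n
    mean_y = sum(b for _, b in pairs) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in pairs)
    ss_x = sum((a - mean_x) ** 2 for a, _ in pairs)
    ss_y = sum((b - mean_y) ** 2 for _, b in pairs)
    return cov / math.sqrt(ss_x * ss_y), n


# Hypothetical scores; None marks a missing response.
initial_motivation = [20, 18, None, 24, 15, 22]
final_factor_1 = [27, 25, 30, 29, None, 28]
r, n = pearson_pairwise(initial_motivation, final_factor_1)
print(f"r = {r:.3f} (n = {n})")
```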

MOOC environments are becoming an important setting for exploring learners' characteristics. Accordingly, the results from this study support efforts to continue investigating such characteristics, especially to understand the participants' motivation, knowledge (previous and acquired), and levels of satisfaction. This research line is important because after a completer's profile is identified, MOOCs can be personalized to engage attendees more effectively as a strategy to reduce dropout rates (Alario-Hoyos, Pérez-Sanagustín, Delgado-Kloos, Parada, & Muñoz-Organero, 2014).

When examining the initial information, the scores for motivation and previous general and specific content knowledge factors were higher for the completers group compared with the non-completers group. These outcomes also showed low effect sizes, suggesting that the results need to be interpreted cautiously; however, they are in agreement with the literature reporting that completers obtain significantly higher ratings because they have confidence in their ability to complete MOOCs successfully (Barak et al., 2016). Moreover, it is notable that the scores were consistently significant across all factors, although the categories for grouping attendees (completers vs. non-completers) did not account for heterogeneous background profiles. Thus, future analysis to differentiate attendees' profiles should also consider reviewing other types of information (e.g., educational levels, work training, etc.) about participants to evaluate differences by subcategories as well.

As for the structure of the EFMC-MOOC, the results support the claim that this tool meets the initial validity and reliability standards. Item loadings for each factor suggest this tool measures participants' levels of satisfaction about the gains obtained after attending the MOOC. This satisfaction level can be evaluated by a two-factor structure involving (a) professional development gains and (b) technology skills growth. These factors correlate highly, but they are well differentiated (r =.737), and, together, they explain 52% of the variance, which is consistent with the findings reported in the literature when exploratory analyses are executed. In terms of reliability, the EFMC-MOOC shows internal consistency for the overall structure and across factors. An advantage of examining the psychometric structure of an instrument relates to the viability of interpreting students' scores properly, for instance, to identify students at risk (Farid, 2014). In traditional education, administering pre-assessment and post-assessment tools with similar content is a regular activity to evaluate learning; however, for MOOC environments, this activity is still developing (Chudzicki, Chen, Zhou, Alexandron, & Pritchard, 2015). Accordingly, the present results align with such efforts. Moreover, developing reliable measures provides opportunities for current efforts to track and understand participants' changes in behavior and performance occurring across MOOC attendance (Aiken et al., 2014; Perna et al., 2014). Future research projects could include using valid tools as formative assessments to track such changes, as it is desirable to have immediate measures rather than a delayed measure of situational interest (de Barba et al., 2016).

In terms of the pre-information and post-information derived from attendees finishing the MOOC, all scores from factors measured initially correlated significantly with the final scores. The results showed a consistent moderate association across variables. As in a previous report about the role motivation plays in perceived learning (Horzum, Kaymak, & Gungoren, 2015), the motivation factor measured in this study appeared to be the variable most strongly associated with perceived satisfaction levels for attending a course. In contrast, the previous specific knowledge variable correlated less with the final information, and although prudence is recommended when interpreting previous knowledge self-evaluation scores (Lui & Li, 2017), this finding agrees with reports asserting that this factor not only relates to engagement, but is also a strong predictor for success in a MOOC (Kennedy, Coffrin, & de Barba, 2015).

Overall, the findings from this study are consistent with the previous literature focussing on the need to understand attrition factors and motivational transition across MOOCs (Xu & Yang, 2016). Accordingly, it has also been suggested that pedagogical models should consider the technology practices involved (e.g., digital skills) to engage participants continuously to increase retention (Petronzi & Hadi, 2016). The combination of factors evaluated in this study (motivation, knowledge, satisfaction level) follows suggestions about not relying on behavioral aspects exclusively, but instead, including cognitive elements, as both aspects are related to MOOC engagement, and both increase the probability of completing a course (Li & Baker, 2016).

Finally, examining potential relationships among information collected before and after attending a course and comparing initial profiles of completers and non-completers may have benefits in terms of orienting MOOCs to the work market because a solely academic-oriented objective can detract from participants' learning, as transfer of knowledge is not guaranteed (Sanchez-Acosta, Escribano-Otero, & Valderrama, 2014). Thus, future research should consider how motivation and previous knowledge result when MOOCs target different objectives, as in the applied project supporting participants from this MOOC. Moreover, data emerging for such research should also consider an open-access perspective because by nature, MOOCs comprise open-access learning materials. Thus, the results and tools derived from MOOCs should align to this perspective to ensure that they are innovative (McGreal, Mackintosh, & Taylor, 2013).

This research is a product of Project 266632 of the "Laboratorio Binacional para la Gestión Inteligente de la Sustentabilidad Energética y la Formación Tecnológica" ["Bi-national Laboratory on Smart Sustainable Energy Management and Technology Training"], funded by the CONACYT SENER Fund for Energy Sustainability (Agreement: S0019-2014-01).

Admiraal, W., Huisman, B., & Pilli, O. (2015). Assessment in massive open online courses. The Electronic Journal of e-Learning, 13(4), 207-216.

Aesaert, K., van Nijlen, D., Vanderlinde, R., & van Braak, J. (2014). Direct measures of digital information processing and communication skills in primary education: Using item response theory for the development and validation of an ICT competence scale. Computers & Education, 76, 168-181. doi: 10.1016/j.compedu.2014.03.013

Aiken, J. M., Lin, S., Douglas, S. S., Greco, E. F., Thoms, B. D., Schatz, M. F., & Caballero, M. D. (2013). The initial state of students taking an introductory physics MOOC. Proceedings of the 2013 Physics Education Research Conference, Portland, OR. doi: 10.1119/perc.2013.pr.001

Alario-Hoyos, C., Pérez-Sanagustín, M., Delgado-Kloos, C., Parada, H. A., & Muñoz-Organero, M. (2014). Delving into participants' profiles and use of social tools in MOOCs. IEEE Transactions on Learning Technologies, 7(3), 260-266. doi: 10.1109/TLT.2014.2311807

Barak, M., Watted, A., & Haick, H. (2016). Motivation to learn in massive open online courses: Examining aspects of language and social engagement. Computers & Education, 94, 49-60. doi: 10.1016/j.compedu.2015.11.010

Barcena, E., Martin-Monje, E., & Read, T. (2015). Potentiating the human dimension in Language MOOCs. Proceedings of the European MOOC Stakeholder Summit, Mons, Belgium, 46-54.

Blackmore, K. (2014). Measures of success: Varying intention and participation in MOOCs. Paper presented at the ACS Annual Canberra Conference, Canberra, Australia.

Castaño-Muñoz, J., Kreijns, K., Kalz, M., & Punie, Y. (2017). Does digital competence and occupational setting influence MOOC participation? Evidence from a cross-course survey. Journal of Computing in Higher Education, 29, 28-46. doi: 10.1007/s12528-016-9123-z

Chen, J. C. (2013). Opportunities and challenges of MOOCS: Perspectives from Asia. Proceedings of the IFLA World Library and Information Congress 79th IFLA General Conference and Assembly (pp. 1-16). Singapore: The International Federation of Library Associations and Institutions.

Chudzicki, C., Chen, Z., Zhou, Q., Alexandron, G., & Pritchard, D. E. (2015). Validating the pre/post-test in a MOOC environment. In A. D. Churukian, D. L. Jones, & L. Ding (Eds.), Proceedings of the 2015 Physics Education Research Conference (pp. 83-86). Maryland: American Association of Physics Teachers. doi: 10.1119/perc.2015.pr.016

Daradoumis, T., Bassi, R., Xhafa, F., & Caballe, S. (2013). A review of massive e-learning (MOOC) design, delivery and assessment. Paper presented at the Eighth International Conference on P2P, Parallel, Grid, Cloud and Internet Computing, Compiegne, France. doi: 10.1109/3PGCIC.2013.37

de Barba, P. G., Kennedy, G. E., & Ainley, M. D. (2016). The role of students' motivation and participation in predicting performance in a MOOC. Journal of Computer Assisted Learning, 32, 218-231. doi: 10.1111/jcal.12130

Diver, P., & Martinez, I. (2015). MOOCs as a massive research laboratory: Opportunities and challenges. Distance Education, 36(1), 5-25. doi: 10.1080/01587919.2015.1019968

Dray, B. J., Lowenthal, P. R., Miszkiewicz, M. J., Ruiz-Primo, M. A., & Marczynski, K. (2011). Developing an instrument to assess student readiness for online learning: A validation study. Distance Education, 32(1), 29-47. doi: 10.1080/01587919.2011.565496

Durksen, T. L., Chu, M., Ahmad, Z. F., Radil, A. I., & Daniels, L. M. (2016). Motivation in a MOOC: A probabilistic analysis of online learners' basic psychological needs. Social Psychology of Education, 19, 241-260. doi: 10.1007/s11218-015-9331-9

Farid, A. (2014). Student online readiness assessment tools: A systematic review approach. Electronic Journal of e-Learning, 12(4), 375-382.

Field, A. (2009). Discovering statistics using SPSS (3rd ed.). London, UK: SAGE Publications.

Gaebel, M. (2013). MOOCs - Massive open online courses. Report of the European University Association (EUA). Retrieved from http://www.eua.be/Libraries/publication/EUA_Occasional_papers_MOOCs.pdf?sfvrsn=2

Garrido, M., Koepke, L., Andersen, S., Mena, A., Macapagal, M., & Dalvit, L. (2016). An examination of MOOC usage for professional workforce development outcomes in Colombia, the Philippines, & South Africa. Seattle, WA: Technology & Social Change Group, University of Washington Information School.

Horzum, M. B., Kaymak, Z. D., & Gungoren, O. C. (2015). Structural equation modeling towards online learning readiness, academic motivations, and perceived learning. Educational Sciences: Theory & Practice, 15(3), 759-770. doi: 10.12738/estp.2015.3.2410

Jordan, K. (2015). Massive open online course completion rates revisited: Assessment, length and attrition. International Review of Research in Open and Distributed Learning, 16(3), 341-358.

Kaiser, H. F. (1974). An index of factorial simplicity. Psychometrika, 39, 31-36.

Kennedy, G., Coffrin, C., & de Barba, P. (2015). Predicting success: How learners' prior knowledge, skills and activities predict MOOC performance. Proceedings of the Fifth International Conference on Learning Analytics and Knowledge (pp. 136-140). Poughkeepsie, NY: ACM Publications. doi: 10.1145/2723576.2723593

Kennedy, J. (2014). Characteristics of massive open online courses (MOOCs): A research review, 2009-2012. Journal of Interactive Online Learning, 13(1), 1-16.

Kerr, J., Houston, S., Marks, L., & Richford, A. (2015). Building and executing MOOCs: A practical review of Glasgow's first two MOOCs (Massive Open Online Courses). Glasgow, Scotland: University of Glasgow. Retrieved from http://www.gla.ac.uk/media/media_395337_en.pdf

Kilgore, W., Bartoletti, R., & Freih, M. A. (2015). Design intent and iteration: The #HumanMOOC. Proceedings of the European MOOC Stakeholder Summit (pp. 7-12). Mons, Belgium: 2015 eMOOCS conference committee.

Kline, R. B. (2005). Principles and practice of structural equation modeling. New York, NY: Guilford Press.

Li, Q., & Baker, R. (2016). Understanding engagement in MOOCs. In T. Barnes, M. Chi, & M. Feng (Eds.), Proceedings of the 9th International Conference on Educational Data Mining (pp. 605-606). Raleigh, NC: International Conference on Educational Data Mining (EDM) 2016.

Littlejohn, A., Hood, N., Milligan, C., & Mustain, P. (2016). Learning in MOOCs: Motivations and self-regulated learning in MOOCs. Internet and Higher Education, 29, 40-48. doi: 10.1016/j.iheduc.2015.12.003

Lui, J., & Li, H. (2017). Exploring the relationship between student pre-knowledge and engagement in MOOCs using polytomous IRT. In X. Hu, T. Barnes, A. Hershkovitz, & L. Paquette (Eds.), Proceedings of the 10th International Conference on Educational Data Mining (pp. 410-411). Wuhan, China: International Conference on Educational Data Mining (EDM) 2017.

Magen-Nagar, N., & Cohen, L. (2017). Learning strategies as a mediator for motivation and a sense of achievement among students who study in MOOCs. Education and Information Technologies, 22, 1271-1290. doi: 10.1007/s10639-016-9492-y

Mazoue, J. G. (2013). The MOOC model: Challenging traditional education. Formamente, 8(1-2), 161-174.

McGreal, R., Mackintosh, W., & Taylor, J. (2013). Open educational resources university: An assessment and credit for students initiative. In R. McGreal, W. Kinuthia, & S. Marshall (Eds.), Open educational resources: Innovation, research and practice (pp. 47-59). Vancouver, BC: Commonwealth of Learning.

Onah, D. F., Sinclair, J., & Boyatt, R. (2014). Dropout rates of massive open online courses: Behavioural patterns. Proceedings of the 6th International Conference on Education and New Learning Technologies (EDULEARN14), Barcelona, Spain. doi: 10.13140/RG.2.1.2402.0009

Pegler, C. (2012). Reuse and repurposing of online digital learning resources within UK higher education: 2003-2010 (Unpublished doctoral dissertation). The Open University, United Kingdom. Retrieved from http://oro.open.ac.uk/32317/1/Pegler_PhD_final_print_copy.pdf

Perna, L. W., Ruby, A., Boruch, R. F., Wang, N., Scull, J., Ahmad, S., & Evans, C. (2014). Moving through MOOCs: Understanding the progression of users in massive open online courses. Educational Researcher, 43(9), 421-432. doi: 10.3102/0013189X14562423

Petronzi, D., & Hadi, M. (2016). Exploring the factors associated with MOOC engagement, retention and the wider benefits for learners. European Journal of Open, Distance and e-Learning, 19(2), 129-146.

Pursel, B. K., Zhang, L., Jablokow, K. W., Choi, G. W., & Velegol, D. (2016). Understanding MOOC students: Motivations and behaviours indicative of MOOC completion. Journal of Computer Assisted Learning, 32, 202-217. doi: 10.1111/jcal.12131

Rayyan, A., Fredericks, C., Colvin, K. F., Liu, A., Teodorescu, R., Barrantes, A., ... Pritchard, D. E. (2016). A MOOC based on blended pedagogy. Journal of Computer Assisted Learning, 32, 190-201. doi: 10.1111/jcal.12126

Rivera, N., & Ramirez, M. S. (2015). Digital skills development: MOOC as a tool for teacher training. Proceedings from International Conference of Education, Research, and Innovation (ICERI2015), Seville, Spain.

Sanchez-Acosta, E., Escribano-Otero, J. J., & Valderrama, F. (2014). Motivación en la educación masiva online. Desarrollo y experimentación de un sistema de acreditaciones para los MOOC. [Motivation in massive education online: Development and testing of a system of accreditation badges for MOOC]. Digital Education Review, 25, 18-35.

Shapiro, H. B., Lee, C. H., Wyman Roth, N. E., Li, K., Cetinkaya-Rundel, M., & Canelas, D. A. (2017). Understanding the massive open online course (MOOC) student experience: An examination of attitudes, motivations, and barriers. Computers & Education, 110, 35-50. doi: 10.1016/j.compedu.2017.03.003

Stevanovic, N. (2014). Effects of motivation on performance of students in MOOC. Paper presented at Sinteza 2014 - Impact of the Internet on Business Activities in Serbia and Worldwide, Belgrade, Serbia. doi: 10.15308/sinteza-2014-418-422

Tecnológico de Monterrey (Producer). (2017). La reforma energetica y sus oportunidades: MOOC. [The energy reform and its opportunities: MOOC]. Retrieved from https://energialab.tec.mx/es/la-reforma-energetica-y-sus-oportunidades

Thille, C., Scheneider, R. F., Piech, C., Halawa, S. A., & Greene, D. K. (2014). The future of data-enriched assessment. Research and Practice in Assessment, 9, 5-16.

Valdivia, J. A., Valenzuela, J. R., & Ramírez-Montoya, M. S. (2017). Pilotaje de contenido de instrumentos MOOC [Content pilot for MOOC instruments]. Retrieved from https://repositorio.itesm.mx/bitstream/handle/11285/622345/170210-Reporte%20Pilotaje%20Instrumentos.pdf?sequence=1&isAllowed=y

Valdivia Vázquez, J. A., Valenzuela, J. R., & Ramirez-Montoya, M. S. (2017). Encuesta inicial sobre intereses, motivaciones y conocimientos previos en MOOC: Reporte de validación y confiabilidad [Initial assessment to evaluate interests, motivation and previous knowledge: Validity and reliability report]. Retrieved from https://repositorio.itesm.mx/bitstream/handle/11285/622442/Encuesta_inicial_motivacioonesMOOC.pdf?sequence=1&isAllowed=y

Valenzuela, J. R., Mena, J. J., & Ramírez-Montoya, M. S. (2017a). Encuesta inicial sobre intereses, motivaciones y conocimientos previos en MOOC [Initial assessment to evaluate interests, motivation and previous knowledge]. Retrieved from https://repositorio.itesm.mx/bitstream/handle/11285/622349/170210-EncuestaInicial.pdf?sequence=1

Valenzuela, J. R., Mena, J. J., & Ramírez-Montoya, M. S. (2017b). Encuesta final sobre intereses, motivaciones y conocimientos previos en MOOC [Final assessment to evaluate interests, motivation and previous knowledge]. Retrieved from https://repositorio.itesm.mx/bitstream/handle/11285/622348/170210-EncuestaFinal.pdf?sequence=1&isAllowed=y

Xiong, Y., Li, H., Kornhaber, M. L., Suen, H. K., Pursel, B., & Goins, D. D. (2015). Examining the relations among student motivation, engagement and retention. Global Education Review, 2(3), 23-33.

Xu, B., & Yang, D. (2016). Motivation classification and grade prediction for MOOCs learners. Computational Intelligence and Neuroscience, 2016, 1-7. doi: 10.1155/2016/2174613

Yuan, L., & Powell, S. (2013). MOOCs and open education: Implications for higher education. Retrieved from https://www.researchgate.net/publication/265297666_MOOCs_and_Open_Education_Implications_for_Higher_Education

Zhang, D., & Nunamaker, J. F. (2003). Powering e-learning in the new millennium: An overview of e-learning and enabling technology. Information Systems Frontiers, 5(2), 207-218.

Motivation and Knowledge: Pre-Assessment and Post-Assessment of MOOC Participants From an Energy and Sustainability Project by Juan Antonio Valdivia Vázquez, Maria Soledad Ramirez-Montoya, and Jaime Ricardo Valenzuela Gónzalez is licensed under a Creative Commons Attribution 4.0 International License.