Volume 18, Number 3

Dr. Michelle J. Eady¹, Dr. Stuart Woodcock², and Ashley Sisco³

¹University of Wollongong, ²Macquarie University, ³Western University

As e-learning maintains its popularity worldwide, and university enrolments continue to rise, online tertiary level coursework is increasingly being designed for groups of distributed learners, as opposed to individual students. Many institutions struggle with incorporating all facets of online learning and teaching capabilities with the range and variety of software tools available to them. This study used the EPEC Hierarchy of Conditions (ease of use, psychologically safe environment, e-learning self-efficacy, and competence) for E-Learning/E-Teaching Competence (Version II) to investigate the effectiveness of an online synchronous platform to train pre-service teachers studying in groups at multiple distance locations called satellite campuses. The study included 58 pre-service teachers: 14 who were online using individual computers and 44 joining online, sitting physically together in groups, at various locations. Students completed a survey at the conclusion of the coursework and data were analyzed using a mixed methods approach.

This study's findings support the EPEC model applied in this context, which holds that success with e-learning and e-teaching is dependent on four preconditions: 1) ease of use, 2) psychologically safe environment, 3) e-learning self-efficacy, and 4) competency. However, the results also suggest two other factors that impact the success of the online learning experience when working with various sized groups. The study demonstrates that the effectiveness of a multi-location group model may not be dependent only on the EPEC preconditions but also the effectiveness of the instructor support present and the appropriateness of the tool being implemented. This has led to the revised EPEC Hierarchy of Conditions for E-Learning/E-Teaching Competence (Version III).

Keywords: e-learning, synchronous technology, multi-locations, EPEC model

Twenty-first century Australia is experiencing the ongoing advantages that the expansion of the Internet has to offer. Now with the National Broadband Network (NBN), Internet connectivity is faster, more reliable, and more affordable than ever. E-learning was initially introduced in Australia to provide distance learners with equitable access to educational opportunities (Bell & Federman, 2013; Colbran & Gilding, 2013; Issa et al., 2012). It has offered a solution to some of the distance-related barriers that arise due to Australia's combination of vast land base and low, widely-distributed population (Reiach, Averbeck, & Cassidy, 2012; Stacey, 2005). Subsequently, e-learning gained popularity with advancements in information and communications technology, increased Internet use and home access, and a growing "learner-earner" cohort (Cheok & Wong, 2015; Juan, Huertas, Cuypers, & Loch, 2012; Lin, Feng Liu, Ko, & Cheng, 2008; Wu, Pienaar, O'Brien, & Feng, 2013).

E-learning enables the tertiary student flexibility to learn across different time zones and locations as an alternative or supplement to face-to-face learning (Bell & Federman, 2013; Issa et al., 2012; Tynan, Ryan, & Lamont-Mills, 2015; Watson, 2013; Wu et al., 2013). Some institutions offer distance studies in addition to classroom courses (Charles Sturt University, 2015). Others are dedicated specifically to providing flexible online learning to Australian and international students (Open Universities Australia, 2013).

Largely influenced by constructivist theory, computer-mediated communication tools used in distance studies are increasingly being designed for groups rather than individuals (Benson & Samarawickrema, 2009; Issa et al., 2012). In response to these trends, some educational institutions have offered multi-location online courses, which leverage online tools to deliver a course to groups studying in multiple locations. One popular type of multi-location design is the "hub-and-spoke" approach, where coursework is administered through a main campus (the hub) to smaller facilities (the spokes) (Moore, 2013). The university in question has a main campus with a number of smaller campuses, referred to as satellite campuses, scattered throughout the region. The satellite campuses had a history of self-contained management and teaching methods until the adoption of video conferencing, which connected the main campus with the satellite campuses.

Since the uptake of synchronous technologies in tertiary settings, there have been extensive studies which look at the theory and practice of working within an online environment (Anderson, 2008; McLoughlin, 2001; Reeves, Herrington, & Oliver, 2002), including: the similarities and differences between synchronous and asynchronous platforms, the ways in which they are used at universities, and how students engage with the different types of technology (Chen, Ko, Lin, & Lin, 2005; Hastie, Chen, & Kuo, 2007; Lan, Chang, & Chen, 2012). In our prior research, we developed the EPEC Hierarchy of Conditions (Woodcock, Sisco, & Eady, 2015) and applied it to a 1:1 student-to-computer ratio (Sisco, Woodcock, & Eady, 2015). However, up until the point of this study, no one had attempted to use the EPEC Hierarchy of Conditions (Version II) (Sisco et al., 2015) to look at the effectiveness of synchronous online technology to engage students studying at the main campus with groups of students studying at various satellite campuses. The purpose of this study was to determine if the EPEC Hierarchy of Conditions for E-Learning/E-Teaching Competence (Version II) was applicable in a multi-location setting.

There are many points to consider when moving into the online learning space. Tertiary practitioners would be prudent to consider the challenges and concerns documented in current research before they rush to join the trend of online learning. By reflecting on these details, practitioners can better support their students with well-designed programs and efficient, effective use of technology.

Student interactivity has been shown to be important to learning (Oprea, 2014; Watson, 2013). While scholars disagree about whether technology generally helps or hinders student interactivity (Makri, Papanikolaou, Tsakiri, & Karkanis, 2014; Molinari, 2012; Skramstad, Schlosser, & Orellana, 2012), they concur that it depends on student engagement, as well as the nature of the online tool in question (Bell & Federman, 2013; Giesbers, Rienties, Tempelaar, & Gijselaers, 2014; Molinari, 2012).

Researchers and practitioners recommend using a combination of asynchronous and synchronous tools to support collaborative learning (Giesbers et al., 2014; Hrastinski, Keller, & Carlsson, 2010; Wei, Peng, & Chou, 2015). In particular, interactive synchronous technology, such as live-time platforms and videoconferencing, facilitates dynamic sharing opportunities between tutors and students that asynchronous technologies cannot match (Giesbers et al., 2014; Nortvig, 2013).

Effective communication between all parties is crucial to building learning communities, collaborative opportunities, coursework delivery and the overall success of learning (Beaumont, Stirling, & Percy, 2009; Makri et al., 2014). Studies by Dixon et al. (2008) and Beaumont et al. (2009) suggest the success of collaborative exercises depends largely on the online instructor's effectiveness in communicating expectations. Studies by Beaudoin (2013) and Lee (2012) suggest that online discourse can present barriers to students' participation in discussions, which can inhibit students' interactions even when using synchronous technologies that emulate classroom learning (Lee, 2012; Nortvig, 2013).

Unfortunately, merely providing the tools that allow for group interaction does not ensure that meaningful collaborative learning will occur (Guo, Chen, Lei, & Wen, 2014; Juan et al., 2012; Tynan et al., 2015). Lack of high-speed Internet connectivity, online skills and familiarity with technology, as well as technical difficulties, are common challenges for e-learning students (Beaumont et al., 2009; Cradduck, 2012; Guo et al., 2014; Higgins & Harreveld, 2013; Nortvig, 2013; Reiach et al., 2012). Previous studies have found that online students report feelings of isolation due to distance, the disjointed nature of these programs (Beaumont et al., 2009; Higgins & Harreveld, 2011) and inadequate online communication between instructors and students (Abrami, Bernard, Bures, Borokhovski, & Tamim, 2011; Skramstad et al., 2012). Additionally, the lack of visibility in online learning can decrease social pressure to participate, inhibiting teamwork and degrading the group experience (Beaudoin, 2013; Dixon et al., 2008; Ehlers, 2013; Sun & Rueda, 2012).

Previous research suggests that online instructors play an important role in determining the quality of a student's learning experience by fostering a 'space of equals' based on trust, care and respect as well as by supporting and motivating students, providing timely feedback and facilitating effective, collaborative learning experiences (Ehlers, 2013; Higgins & Harreveld, 2013; Kirschner, Kreijns, Phielix, & Fransen, 2015; Molinari, 2012; Oprea, 2014; Skramstad et al., 2012; Wheeler & Reid, 2013; Wu et al., 2013). However, online instructors face constant, competing demands due to the additional work of online learning, such as the preparation, planning, and delivery of these courses, and the support required when teaching in multi-locations (Lefoe, Gunn, & Hedberg, 2002), each of which universities often fail to recognize (Beaumont et al., 2009).

Despite challenges, e-learning continues to become increasingly popular in tertiary institutions and numerous studies contend that technology improves interactivity and results in effective learning for online students (Cheok & Wong, 2015; Cradduck, 2012; Meyer, 2014; Rivers, Richardson, & Price, 2014; Wei et al., 2015). This division in the literature about the benefits and challenges associated with e-learning led the authors to inquire, "What factors determine whether e-learning will be effective in multi-location group settings?" and "How can e-learning competence in multi-location settings be measured?"

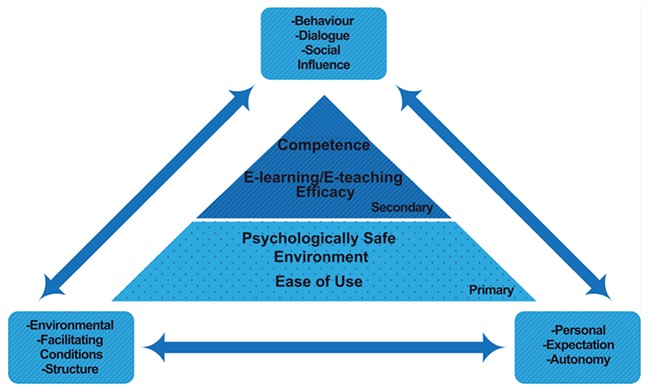

In response, Sisco et al. (2015) developed the EPEC Hierarchy of Conditions for E-Learning/E-Teaching Competence (Version II) (see Figure 1). Drawing on Bandura's (1977) Social Cognitive Theory (SCT), Piccoli, Ahmed, and Ives' (2001) E-Learning Acceptance Model (ELAM) and Moore's (1993) Transactional Distance Theoretical framework (TDT), the EPEC model proposes that e-learning and e-teaching competency are dependent on four hierarchical conditions: ease of use, psychologically safe environment, e-learning self-efficacy, and competency.

Figure 1. EPEC Hierarchy of Conditions for E-Learning/E-Teaching Competence (Version II) (Sisco et al., 2015). This model is based on Bandura's (1977) social cognitive theory (SCT), Piccoli, Ahmed, and Ives' (2001) e-learning acceptance model (ELAM) and Moore's (1993) transactional distance theoretical framework (TDT).

SCT asserts that the interaction among personal (cognitive), behavioral (social), and environmental (physical) factors influences human behavior (Bandura, 1977, 1986, 1989; Fertman & Allensworth, 2010; Zikic & Saks, 2009). Specifically, humans tend to behave based on what they deem to be reasonable from their environments (Bandura, 1989). Both ELAM (Piccoli et al., 2001) and TDT (Moore, 1993) are adaptations of SCT (Bandura, 1977). ELAM adapts SCT to the e-learning context with a focus on acceptance in relation to "'effort expectancy' (or perceived ease of use)... [and]... 'performance expectancy' (or perceived usefulness)" (Sisco et al., 2015, pp. 28-29). TDT focuses on the difference of understanding or potential for misunderstanding between teacher and student from a communications perspective (Umrani-Khan & Iyer, 2009).

Together, these theories provide a comprehensive foundation for the EPEC hierarchy of e-learning needs, from a lens that focuses on human behavior (SCT) in a digital (ELAM) communication (TDT) context. Within the EPEC model, "social influence" (ELAM) and "dialogue" (TDT) align with "behavior" (SCT); "expectation" (ELAM) and "autonomy" (TDT) align with "personal" (SCT); and "facilitating conditions" (ELAM) and "structure" (TDT) align with "environment" (SCT) (Sisco et al., 2015).

Applying this in a multi-location context could involve a tutorial class occurring across a number of locations. Students at one location are paying attention to the tutor and what they are saying via the synchronous platform (environment influences cognition, a personal factor). Students who are unsure of the information that the tutor is communicating may raise their hand to ask questions from their location via the synchronous platform (cognition influences behavior). The tutor may then try to simplify the point or give further examples of the point being made (behavior influences environment). The tutor may also give students a task for discussion within their tutorial group to work on at their location and then come back together and present their work through the synchronous platform (environment influences cognition, which influences behavior). As the students are working on the task, they may believe they are performing well (behavior influences cognition).

While this theoretical framework has been used to analyze e-learning and e-teaching competence, it has yet to be applied to a multi-location, synchronous context involving groups of students learning together at a common physical location (Sisco et al., 2015).

Fifty-eight pre-service teachers who were enrolled in a graduate-level core subject course in elementary education at an Australian university participated in this study. Out of the random sample of 99 participants who were enrolled in the course, 59% chose to participate in this research project. These participants fell into two distinct groups. The first group of 44 students (Group A) comprised students at three of the university's satellite campuses who attended the online sessions together in groups, signing in and participating around one computer at each site. These groups had 13, 15, and 16 participants onsite at the satellite campuses. The second group of 14 students (Group B) was enrolled at the main campus but was given the opportunity to access the tutorial online from individual devices at a location of their choosing.
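The participant figures above can be cross-checked with a few lines of arithmetic. The sketch below uses only the numbers reported in this section; the variable names are illustrative and not part of the study.

```python
# Figures reported in the text.
enrolled = 99                   # students enrolled in the course
group_a_sites = [13, 15, 16]    # participants at each of the three satellite campuses
group_b = 14                    # main-campus students joining from individual devices

group_a = sum(group_a_sites)            # total for Group A
participants = group_a + group_b        # total survey participants
response_rate = round(100 * participants / enrolled)  # whole-number percentage

print(f"Group A: {group_a}, total: {participants}, response rate: {response_rate}%")
```

The totals agree with the reported 44 students in Group A, 58 participants overall, and a participation rate of 59%.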

Historically, these satellite campuses were placed in remote and isolated locations to offer tertiary education opportunities to learners living in those areas. It was not until the adoption of video conferencing that the satellite campus students were able to attend lectures at a distance with the larger cohort on campus. Video conferencing was successful, although the students at a distance did little more than log into the video conference and watch what was happening in the lecture theatre. However, with the capabilities that the Internet can afford, a synchronous online platform (CENTRA©) was available to link together the satellite campuses and the main campus. CENTRA© would allow all participants to work together and have discussions in real-time much like in a face-to-face tutorial setting. The participants had the ability to talk using a microphone and live text to one another, share content virtually and work collaboratively in small groups in the online environment. The researchers were eager to see if an interactive live-time tutorial could be facilitated in an attempt to engage all the students, both individuals and groups at a distance. While one tutor, at the main campus, delivered tutorials, facilitators were located at each satellite campus.

This study employed a survey instrument, which was developed, based on the literature reviewed, to collect data designed to investigate pre-service teachers' experiences when participating in a multi-locations synchronous tutorial class. The survey used included 10 open-ended as well as 10 Likert-scale questions (see Table 1). Open-ended questions were used to yield opinions and beliefs, as well as general individual responses (de Vaus, 2002; Fraenkel & Wallen, 2006). This style of questioning is particularly relevant and powerful when examining research questions which are more exploratory in nature (Creswell, 2002). The survey also included Likert-scale questions, which included responses ranging from 0 (very poor or very inconvenient) to 6 (excellent or extremely convenient) in relation to the multi-location synchronous tutorials. The survey was distributed to students via email, completed, and then returned via email to the researchers.

Table 1

Survey Questions: This Table Shows the Likert-Scale and Open-Ended Questions Asked of Participants

| Question | Likert-scale | Comment |
| --- | --- | --- |
| 1. Overall, how did you rate the tutorial sessions on Centra? | 0 = Very poor; 6 = Excellent | All questions provided space for open-ended comments from participants. |
| 2. As a student, how did you rate your learning and understanding on Centra? | 0 = Very poor; 6 = Excellent | |
| 3. How did you rate the participation opportunities during class? | 0 = Very poor; 6 = Excellent | |
| 4. Was it beneficial to have this tutorial online? | 0 = Not at all; 6 = Extremely | |
| 5. What was the ease of use of Centra? | 6 = Extremely | |
| 6. Did you have any technical issues with the Centra sessions? | 0 = None; 6 = Very many | |
| 7. Did you feel the learning was any different while connecting to different campuses? | 0 = Much worse; 6 = Much better | |
| 8. How confident are you to participate online compared to in the classroom? | 0 = Less confident | |
| 9. How likely are you to participate on Centra than face-to-face? | 0 = Much worse; 6 = Much better | |
| 10. If you had a choice in the future to choose between a Centra tutorial or face-to-face, which would you choose? | 0 = Face-to-face; 6 = Centra | |

A limitation of this data collection method is the dependence upon participants' self-reporting, which raises questions of honesty and bias (Mertens, 2005). Educational research frequently makes use of self-report measures, with strategies in place to mitigate the effects (Darling-Hammond, Chung, & Frelow, 2002; Kleickmann & Anders, 2013). Within this study, it was made clear to the participants that their anonymous responses would not affect their marks, which would be calculated and declared prior to the researchers looking at the data. In addition, the survey responses were evaluated on an objective basis through the lens of EPEC Hierarchy of Conditions for e-Learning/e-Teaching Competence (Version II) (Sisco et al., 2015).

The researchers administered a core subject within the post-graduate elementary education degree over 13 weeks. All of the tutorial groups were covering the same lesson content. The tutorial leaders at the satellite campuses were given a 30-minute introduction to using the online platform so that they knew how to enter the e-learning space and control its features. The online live-time platform used interactive icons (e.g., clapping, laughing, check marks, etc.) so participants could respond without speaking. Participants also had use of microphone and video camera capabilities. Sessions were recorded, giving students the ability to return to the learning experience for review. At the end of the semester, the participants were asked to volunteer to complete a survey about their experiences in the online tutorial.

The data were analyzed using a thematic content analysis of responses to the open-ended questions, which was helpful for "sift[ing] through large volumes of data with relative ease in a systematic fashion" (Stemler, 2001, p. 1) and for creating data categories (Guba & Lincoln, 2000). To begin the analysis, an "accuracy of fit" was employed (Glasser & Strauss, 1967; Ritchie & Lewis, 2003). This required careful reading through the data to discover evolving themes. The data were viewed through the lens of the EPEC Hierarchy of Conditions for E-Learning/E-Teaching Competence (Version II) (Sisco et al., 2015) to determine the relevance of the model in the multi-locations context.

This study also employed a quantitative component to complement and supplement the qualitative data collected and capture more nuanced results (Creswell, 2002).

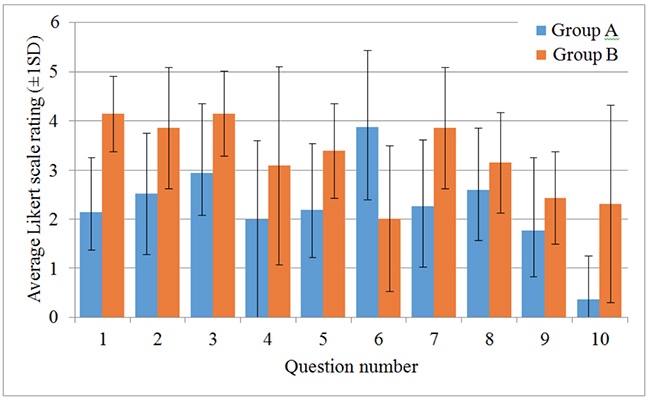

The quantitative findings of this study are outlined below in Figure 2. They have been presented by questions posed, and also by participant group. The results are representative of the employed Likert-scale of 0 (very poor or very inconvenient) to 6 (excellent or extremely convenient), and presented in the order that the questions appeared in the survey.

Figure 2. The average Likert-scale rating of the groups by question (±1SD). This figure represents the average Likert-scale ratings results by the questions asked highlighting Group A's responses in blue and Group B's responses in orange, and the standard deviation (SD).

Participants' impressions of the online sessions varied, based on their location, with Group A rating them as being poor or mediocre (mean score (M) = 2.1, standard deviation (SD) = 1.1), in contrast to Group B who rated them as being good (M=4.1, SD=0.8). However, Group B participants' comments suggest they experienced similar difficulties to Group A, including the platform's lack of engagement and interactivity, time consuming technical difficulties, and slow Internet connection.

Following a similar pattern to their overall impressions of tutorial sessions, participants' learning and understanding with synchronous technology varied based on their group. Group A found their ability to learn and understand somewhat difficult (M=2.5, SD=1.2) and inhibited by technical difficulties. While Group B also experienced these technical difficulties, they reflected positively on the learning and understanding achieved with synchronous technology and rated the synchronous technology as somewhat good for learning and understanding (M=3.9, SD=1.2).

The perceived opportunities for participation in tutorial activities and discussions were greater for Group B than for Group A. These differences appear to be accounted for by the different contexts that students were operating in (i.e., individually or in groups), incorporating both student-student and student-tutor relationships. Group A respondents felt opportunities for participation were mediocre (M=2.9, SD=1.4). Group B reported that there was ample opportunity for them to participate (M=4.1, SD=0.9), but less than what would be possible with videoconferencing.

Group A generally did not find the multi-location tutorials to be beneficial, due to technical difficulties, absence of support at their location, and perceived lack of value added by the technology, among other reasons (M=2.0, SD=1.6). Group B found the tutorials somewhat beneficial (M=3.1, SD=2.0), but some felt there could have been better use of the technology and tools available to them.

Group A found synchronous technology somewhat difficult to use (M=2.2, SD=1.4), while Group B found it somewhat easy to use, with some minor difficulties reported (M=3.4, SD=1.0).

Group A experienced many varied technical issues that interfered with their learning (M=3.9, SD=1.6), although these decreased over time. Group B reported fewer technical difficulties (M=2.0, SD=1.5), which lessened with each session.

Most of Group A reported that connecting with other campuses through synchronous technology had an adverse impact on their learning (M=2.3, SD=1.4), due largely to the implementation of the platform, and the technical difficulties experienced. Group B generally appreciated the diversified learning opportunities provided by connecting with different campuses (M=3.9, SD=1.2), although they raised some concerns about the platform.

Group A were somewhat less confident participating in online tutorials (M=2.6, SD=1.3), with several preferring face-to-face interactions. Group B rated their confidence online as the same as in-person (M=3.1, SD=1.0), with mixed comments relating to the use and nature of the technology.

Group A were much less likely to participate in learning through synchronous technology than face-to-face (M=1.8, SD=1.5) because of technical issues that inhibit engagement, lack of video, difficulty using synchronous technology, waiting time involved while others are typing responses, and a preference for face-to-face interaction.

Group B were slightly less likely to participate via synchronous technology than in-person (M=2.4, SD=0.9). They described synchronous technology as an important tool, and not as scary as they had anticipated.

Group A strongly preferred face-to-face tutorials over online synchronous technology sessions but provided some alternative uses for the synchronous technology (M=0.4, SD=0.9). Group B were fairly balanced in their view, but preferred face-to-face sessions. They were nearly as likely to choose synchronous technology as face-to-face (M=2.3, SD=2.0).
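To compare the two groups at a glance, the reported means can be tabulated side by side. The following is a minimal sketch that uses only the mean ratings stated above. The "favorable gap" measure and the function name are illustrative assumptions, not part of the study's analysis. Question 6 (technical issues) is treated as reverse-scored because its scale runs from 0 = none to 6 = very many (see Table 1).

```python
# Mean Likert ratings (0-6) as reported in the text: question -> (Group A, Group B).
reported_means = {
    1: (2.1, 4.1),   # overall rating of sessions
    2: (2.5, 3.9),   # learning and understanding
    3: (2.9, 4.1),   # participation opportunities
    4: (2.0, 3.1),   # benefit of having the tutorial online
    5: (2.2, 3.4),   # ease of use
    6: (3.9, 2.0),   # technical issues (higher = more issues)
    7: (2.3, 3.9),   # learning while connecting to other campuses
    8: (2.6, 3.1),   # confidence online vs. classroom
    9: (1.8, 2.4),   # likelihood of participating online vs. face-to-face
    10: (0.4, 2.3),  # preference: face-to-face (0) vs. Centra (6)
}

# On question 6 a LOWER score is the more favorable outcome.
REVERSE_SCORED = {6}


def favorable_gap(question, mean_a, mean_b):
    """Points by which Group B's mean is more favorable than Group A's (illustrative measure)."""
    gap = round(mean_b - mean_a, 1)
    return -gap if question in REVERSE_SCORED else gap


gaps = {q: favorable_gap(q, a, b) for q, (a, b) in reported_means.items()}

for q, gap in gaps.items():
    print(f"Q{q}: Group B more favorable by {gap:+.1f} points")
```

Under this reading, Group B's mean rating is more favorable than Group A's on every question, with the narrowest gaps on the confidence and likelihood-of-participation items (questions 8 and 9). The sketch compares means only and says nothing about statistical significance.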

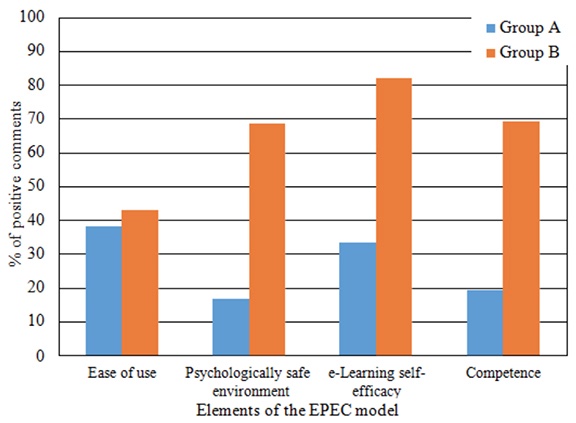

Figure 3. Percentage of positive comments in relation to the elements of the EPEC Hierarchy of Conditions for E-Learning/E-Teaching Competence (Version II) (Sisco et al., 2015). This figure shows the percentage of positive comments in relation to all of the responses when the EPEC model is applied to the participants' responses.

The qualitative data from the survey were also coded using the lens of the EPEC Hierarchy of Conditions for E-Learning/E-Teaching Competence (Version II) (Sisco et al., 2015). The process entailed coding the responses, either negative or positive, that related to the elements of the model and analyzing them respectively. The results of this analysis are detailed below.
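The tallying step behind this coding process can be sketched as follows. This is an illustration only: the coded comments below are hypothetical placeholders, not the study's data. The function name is invented for the example, and it assumes that the percentage for each element is taken over the comments coded to that element.

```python
from collections import Counter

# The four EPEC elements named in the model.
ELEMENTS = (
    "ease of use",
    "psychologically safe environment",
    "e-learning self-efficacy",
    "competence",
)

# HYPOTHETICAL coded comments as (EPEC element, polarity) pairs.
# These are placeholders for illustration, NOT the study's data.
coded_comments = [
    ("ease of use", "negative"),
    ("ease of use", "positive"),
    ("ease of use", "negative"),
    ("psychologically safe environment", "positive"),
    ("e-learning self-efficacy", "negative"),
    ("competence", "positive"),
    ("competence", "negative"),
]


def percent_positive(comments):
    """Return the percentage of positive comments for each element that has any comments."""
    totals = Counter(element for element, _ in comments)
    positives = Counter(
        element for element, polarity in comments if polarity == "positive"
    )
    return {
        element: round(100 * positives[element] / totals[element], 1)
        for element in ELEMENTS
        if totals[element]
    }


result = percent_positive(coded_comments)
for element, pct in result.items():
    print(f"{element}: {pct}% positive")
```

Running the same tally separately for Group A and Group B would give per-group percentages of the kind summarized in Figure 3.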

Group A reported experiencing many technical difficulties, which wasted time and impeded engagement, interaction, and learning. These included: poor sound quality (background noise, interference from other campuses, and microphones not working); unreliable and slow connection; and difficulty starting up, logging on, connecting with campuses, and saving their work. Group B mentioned similar difficulties, although to a lesser extent. For both groups, the technical difficulties reportedly decreased over time.

In addition to technical difficulties, several participants reported a lack of structure and integration with other campuses, insufficient time, and a lack of incentive to participate. Throughout the survey, participants reiterated these technical issues, along with time spent waiting for responses from other campuses, as challenges that inhibited opportunities to participate, share, and debate ideas. Specifically, one participant said the "pressure" of sessions and method of typing on a screen hindered their learning and understanding.

When synchronous technology worked, Group A participants commented that the lectures were "fantastic," the discussions were "relevant" and "useful," and it was a good collaborative tool to share ideas across campuses. One participant said synchronous technology sessions were the "best online lectures we've had so far!" Some participants said they could see the potential benefits of the technology. They liked the concept, learning how to use the technology, and sharing with other campuses. Group A participants reported that they found the platform relatively easy to use once it was set up and/or with practice, although several commented that they did not use it directly, as within the group settings, only one student at a time could directly interact.

Some participants in Group B described the synchronous technology tutorials as "simpler and clearer" and "interesting and interactive." At the same time, Group B provided many criticisms of learning and understanding with the technology, including: a lack of clarity, coherence, and engagement; confusion about the technology's features; time spent waiting for other campuses to respond; and a lack of engaging content. Group B participants described synchronous technology as less personal but more convenient and "an important technological tool."

Some participants in Group A commented that they saw benefit in using synchronous technology for other purposes, including one-on-one learning, connecting with classrooms around the world, and providing distance education to remote schools. Participants in Group B also suggested alternative uses of the technology, such as blended learning experiences where synchronous technology can add to or supplement classroom learning.

Participants in Group A suggested that synchronous technology might be a useful alternative for learners who dislike face-to-face environments. These participants reported there were good opportunities for participation using the technology, including ample time, incentive to interact, and opportunity for all students and campuses to respond. Even so, many participants in Group A expressed their preference for in-person classroom learning.

Group B said engaging with students from other campuses using synchronous technology enriched their learning because it diversified information, ideas, and perspectives, and thus, understanding. One participant said it "allowed students that we haven't interacted with on campus to voice their opinions and be heard by a different audience." However, participants also described connecting with campuses as "slower" and "less fluid."

Several participants in Group A commented that they were confident using technology, but preferred face-to-face learning. They listed the limited training at their campus, feeling intimidated by the technology, and being unsure about how to resolve technical problems among the reasons for their lack of confidence.

Group B provided mixed comments about their own abilities to experience success in online environments. Several participants expressed inhibitions, including lack of experience with technology in general and confusion around its use. One participant suggested more experience with the technology would be beneficial in building confidence. Another participant said "[synchronous technology] is not as scary, having interacted with it."

Group A participants consistently mentioned a number of barriers that hindered their learning and understanding throughout the survey. Alongside the technical difficulties they encountered, these included a lack of support, a high student-tutor ratio, and a lack of training in the use of the technology, all of which inhibited their competence. One participant said they "didn't see that there was any benefit having this tutorial online—there didn't seem to be anything that couldn't be done in individual campus tutorials."

Group B reportedly did not encounter as many barriers as Group A, despite experiencing similar difficulties (although less frequently). Several participants noted the flexibility and convenience of learning at a distance, and the opportunity for collaboration, as benefits of synchronous technology.

Participant responses revealed additional factors that impact upon e-learning/e-teaching competence, beyond those identified in the EPEC Hierarchy of Conditions. Participants emphasized the importance of a good classroom tutor facilitating each session, and the development of a skill set to enable the use of appropriate technology platforms.

Tutor presence in group situations. Many participants in Group A attributed the varied challenges they faced to the high student-tutor ratio. One participant said there were too many people in the class to provide adequate participation opportunities using this platform. Other participants felt intimidated by the technology, without an expert to guide them.

The value added to Group A's experience appeared to be affected by the high student-teacher ratio. One participant said they could see the benefits of connecting with other campuses when the number of participants is small, but that their own campus group, before other campuses were added, was already an ideal size for a tutorial. Another participant suggested connecting with campuses could be more effectively facilitated if there was one tutor per campus, rather than one tutor for all 58 students.

Group B participants did not report difficulties in this area.

Appropriate technology tools. Participants in both groups reported that limited training or experience on the given platform hindered their learning. Several participants reported that it would have been beneficial and desirable to utilize other synchronous technology tools, such as videoconferencing, and alternative groupings. They raised concerns about the chosen platform, and felt that their opportunities to interact with others and engage with the content were limited by it.

The results of this study support the EPEC Hierarchy of Conditions for E-Learning/E-Teaching Competence (Version II) (Sisco et al., 2015) (see Figure 1) in group contexts as well as previously confirmed contexts (Sisco et al., 2015). Importantly, the findings affirm that ease of use provides more opportunity for a psychologically safe environment, which empowers e-learning efficacy, and that in turn promotes competence. The results also suggest that there are two other major influences on online learning for groups gathered together on site, which need to be considered in these contexts.

The EPEC Hierarchy of Conditions for E-Learning/E-Teaching Competence (Version II) suggests that user-friendliness is the main requirement for a psychologically safe environment, e-learning efficacy, and e-learning competence (Sisco et al., 2015). Participants in Group B, who rated the synchronous technology as somewhat easier to use (ease of use), also rated the platform as providing good or very good opportunities for participation (psychologically safe environment). They reported improved or greater confidence using the synchronous technology (efficacy), and that it was somewhat good or good for building learning and understanding (competence).

In contrast, Group A rated the synchronous technology as difficult to use (ease of use). Technical difficulties were cited as inhibiting participation (psychologically safe environment), confidence (efficacy), and learning and understanding (competence). However, when the synchronous technology worked, Group A provided positive comments. These findings confirm the foundational importance of the ease of use element of the EPEC hierarchy (Woodcock et al., 2015).

The results suggest that there are several important factors in providing a psychologically safe environment. These include mediating frustration, encouraging engagement, calming anxieties associated with technology, and promoting discussion and interaction.

Aside from technical difficulties, Group A reported interference due to the large number of students and lack of equitable opportunity to participate. Group B—who were using their own individual devices—provided similar feedback about the large number of students, but highly rated opportunities for participation. These participants discussed how interesting and enjoyable it was to connect with others and share ideas across campuses.

The results also support the EPEC hierarchy in suggesting that efficacy, or participant confidence, was an important factor in participants' synchronous learning experiences. Within the EPEC hierarchy and in other literature, ease of use and the psychological safety of the environment have been shown to influence e-learning efficacy, which in turn influences e-learning competence (de la Torre Cruz & Arias, 2007; Woldab, 2014; Woodcock et al., 2015).

This hierarchy is demonstrated in the results of this study, where Group A cited technical difficulties (ease of use), as well as lack of training and support resolving technical difficulties and feeling intimidated by the technology (psychologically safe environment) as reasons why they felt less confident using it. In contrast, Group B felt as confident online as face-to-face, because of the psychologically safe environment they experienced through the platform.

The results support the EPEC hierarchy in suggesting that ease of use, a psychologically safe environment and e-learning efficacy are all important in achieving e-learning competence, not only when individuals are learning in online environments (Lambe, 2007; Sisco et al., 2015) but also in settings where groups of people are learning together using one computer. Group A rated their experience with the synchronous technology as being difficult to learn and understand with (competence), attributing this to technical difficulties and the lack of support needed to resolve issues. The large number of students within each group and in the cohort as a whole was also referenced as a barrier. In contrast, Group B rated the synchronous technology as somewhat good to learn and understand with (competence), despite a few technical difficulties.

The results also revealed the importance of tutor presence and availability to the group during the synchronous sessions as an additional factor to the EPEC hierarchy. This would preferably be a technically savvy individual who can effectively facilitate the group through their online experience. This finding supports the work of Higgins and Harreveld (2013) and Wheeler and Reid (2013), among others, in recognizing the important role that tutors can play in e-learning environments.

This study supports the work of Molinari (2012) and Wu et al. (2013) in showing that a quality tutor who is present in the room and familiar with the technology is important to effective distributed group e-learning. Different levels of tutor presence can explain why Group B's ratings of their experience with the synchronous technology were much higher than those of their Group A counterparts, despite both groups experiencing similar challenges.

The apparent lack of adequate training provided to tutors at the satellite campuses may have inhibited their effectiveness and ability to support their students as they negotiated the online learning environment (Beaumont et al., 2009; Tynan et al., 2015). This suggests the presence of a tutor who could provide support and facilitate conditions could enhance students' competence with technology, as seen in the work of Dixon et al. (2008) and Lambe (2007).

The second addition to the literature that this study has revealed concerns the appropriateness of the technology tools used with students in tertiary settings. The findings of this study contrast with the work of Beaumont et al. (2009), which suggested that tertiary students might bypass interactive technology, such as videoconferencing, in favor of asynchronous communication, such as email. Instead, this study's participants revealed the varied challenges associated with using synchronous platforms within a multi-location model. This is aligned with the work of Giesbers et al. (2014), Guo et al. (2014), and Makri et al. (2014) regarding the importance of choosing appropriate technological tools to support students' learning, as well as the work of Molinari (2012) and Wu et al. (2013) regarding the importance of a tutor in the room who is familiar with the technology being used.

The nature of the online synchronous platform employed in this course—text-based communication within individual and group satellite settings—presented barriers to students' interactions, engagement, and learning. Participants suggested alternative platforms and tools, including videoconferencing, which they would have preferred to use.

The synchronous platform used in this study did not appear to be the best choice for the given circumstances, although it has been shown to be effective in other e-learning contexts (Beaumont et al., 2009; Tynan et al., 2015). Nonetheless, many participants in this study gained experience, confidence, and e-learning skills that will help them in their future careers (Beaumont et al., 2009; Dixon et al., 2008).

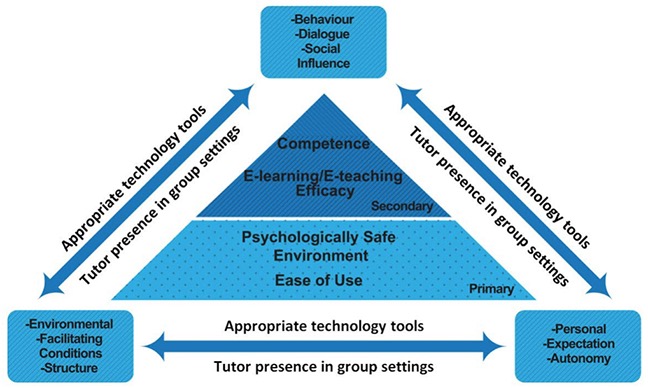

To recognize the additional factors revealed in this study, we propose an amendment to the EPEC Hierarchy of Conditions for E-Learning/E-Teaching Competence (Version II) (Sisco et al., 2015). This amendment recognizes the importance of tutor presence in group settings and appropriate technology tools identified in this study, and forms the EPEC Hierarchy of Conditions for E-Learning/E-Teaching Competence (Version III) (see Figure 4).

Figure 4. EPEC Hierarchy of Conditions for E-Learning/E-Teaching Competence (Version III) (Eady, Woodcock, & Sisco, 2017). This model is a revision of Version II to incorporate the additional factors of appropriate technology tools and tutor presence in group settings.

In an increasingly digital world where online programs are being adopted by a growing number of tertiary institutions, educators will need to acquire the skills to effectively teach, interact with, and support their students through technology (Higgins & Harreveld, 2013; Tynan et al., 2015). This study has shown that a multi-locations approach should be managed using the lens of the EPEC hierarchy to ensure that its elements—ease of use, psychologically safe environment, e-learning efficacy, and competence—and the additional factors of tutor presence and appropriate technology tools are considered in the development of online courses. Using the EPEC hierarchy can inform, improve practice, and greatly enhance educators' understandings regarding the complexities of online learning—an important step in developing quality e-learning programs in any field (Cheok & Wong, 2015; Molinari, 2012; Robertson & Hardman, 2012; Woodcock et al., 2015).

While there are benefits to the multi-location model reflected in this and previous research (Bell & Federman, 2013; Dixon et al., 2008; Lambe, 2007; Sisco et al., 2015; Tynan et al., 2015), the findings of this study demonstrate that the model's effectiveness is highly dependent on the EPEC Hierarchy of Conditions for E-Learning/E-Teaching Competence: ease of use, psychologically safe environment, e-learning efficacy, and e-learning competence; and the additional factors identified in this study: tutor presence in group settings and appropriate technology tools. The challenges encountered through this multi-location e-learning experience, including inconsistent experiences across groups, technical difficulties, and a lack of tutor support, hindered the cohort as a whole from achieving e-learning efficacy and competence.

Given the rise of e-learning within tertiary institutions, with programs increasingly being designed for groups of learners rather than individuals, further research into multi-location e-learning, and the opportunities and challenges it presents, is of high value (Devlin, Feraud, & Anderson, 2008; Issa et al., 2012). In particular, research that investigates the characteristics of effective tutors in multi-location settings would allow the hub-and-spoke model of e-learning to be more effective through the placement of quality tutors at each location. Additionally, an examination of the various forms of synchronous technologies through the lens of the EPEC Hierarchy of Conditions for E-Learning/E-Teaching Competence (Version III) would allow the most appropriate tools and platforms, such as videoconferencing or alternative synchronous platforms (e.g., Adobe Connect© or Elluminate©), to be used in online multi-location learning in the future.

Moving forward in this digital age, tertiary institutions worldwide must commit time and resources to the development of high-quality, relevant, and sustainable e-learning practices that will continue to transform our students' learning.

Abrami, P. C., Bernard, R. M., Bures, E. M., Borokhovski, E., & Tamim, R. M. (2011). Interaction in distance education and online learning: Using evidence and theory to improve practice. Journal of Computing in Higher Education, 23(2), 22.

Anderson, T. (2008). The theory and practice of online learning. Alberta, CA: Athabasca University Press.

Bandura, A. (1977). Social learning theory. Englewood Cliffs, NJ: Prentice Hall.

Bandura, A. (1986). Social foundations of thought and action: A social cognitive theory. Englewood Cliffs, NJ: Prentice-Hall.

Bandura, A. (1989). Human agency in social cognitive theory. American Psychologist, 44, 1175-1184.

Beaudoin, M. F. (2013). Reflections on seeking the "invisible" online learner (and instructor). In U. Bernath, A. Szücs, A. Tait, & M. Vidal (Eds.), Distance and e-learning in transition (pp. 529-542). London, UK: John Wiley & Sons, Inc.

Beaumont, R., Stirling, J., & Percy, A. (2009). Tutor's forum: Engaging distributed communities of practice. Open Learning, 24(2), 141-154.

Bell, B. S., & Federman, J. E. (2013). E-learning in post secondary education. The Future of Children, 23(1), 165-185.

Benson, R., & Samarawickrema, G. (2009). Addressing the context of e-learning: Using transactional distance theory to inform design. Distance Education, 30(1), 5-21.

Charles Sturt University. (2015). Study on campus, study by distance. Retrieved from http://www.csu.edu.au/

Chen, N.-S., Ko, H.-C., Lin, K., & Lin, T. (2005). A model for synchronous learning using the internet. Innovations in Education & Teaching International, 42(2), 181-194. doi: 10.1080/14703290500062599

Cheok, M. L., & Wong, S. L. (2015). Predictors of e-learning satisfaction in teaching and learning for school teachers: A literature review. International Journal of Instruction, 8(1), 75-90.

Colbran, S., & Gilding, A. (2013). E-learning in Australian law schools. Legal Education Review, 23(1 & 2), 201-239.

Cradduck, L. (2012). The future of Australian e-learning: It's all about access. The E-Journal of Business Education & Scholarship of Teaching, 6(2), 1-11.

Creswell, J. W. (2002). Research design: Qualitative, quantitative and mixed methods approaches (2nd ed.). Thousand Oaks, CA: Sage Publications, Inc.

Darling-Hammond, L., Chung, R., & Frelow, F. (2002). Variation in teacher preparation: How well do different pathways prepare teachers to teach? Journal of Teacher Education, 53, 286-302.

de la Torre Cruz, M. J., & Arias, P. F. C. (2007). Comparative analysis of expectancies of efficacy in inservice and prospective teachers. Teaching and Teacher Education, 23, 641-652.

de Vaus, D. A. (2002). Surveys in social research (5th ed.). Crows Nest, Australia: Allen & Unwin.

Devlin, B., Feraud, P., & Anderson, A. (2008). Interactive distance learning technology and connectedness. Education in Rural Australia, 18(2), 53-62.

Dixon, R., Dixon, K., & Axmann, M. (2008, 30 Nov – 3 Dec). Online student centred discussion: Creating a collaborative learning environment. Paper presented at the Hello! Where Are You in the Landscape of Educational Technology? ASCILITE Conference, Melbourne, Australia.

Ehlers, U. D. (2013). Designing collaborative learning for competence development. In U. Bernath, A. Szücs, A. Tait, & M. Vidal (Eds.), Distance and e-learning in transition (pp. 195-216). London, UK: John Wiley & Sons, Inc.

Fertman, C. I., & Allensworth, D. D. (Eds.). (2010). Health promotion programs: From theory to practice. San Francisco, CA: Jossey-Bass.

Fraenkel, J. R., & Wallen, N. E. (2006). How to design and evaluate research in education (6th ed.). Boston, MA: McGraw Hill.

Giesbers, B., Rienties, B., Tempelaar, D., & Gijselaers, W. (2014). A dynamic analysis of the interplay between asynchronous and synchronous communication in online learning: The impact of motivation. Journal of Computer Assisted Learning, 30(1), 30-50. doi: 10.1111/jcal.12020

Glasser, B. G., & Strauss, A. L. (1967). The development of grounded theory. Chicago, IL: Alden.

Guba, E., & Lincoln, Y. (2000). Paradigmatic controversies, contradictions and emerging influences. In N. Denzin & Y. Lincoln (Eds.), The SAGE handbook of qualitative research (pp. 163-188). Thousand Oaks, CA: Sage Publications, Inc.

Guo, W., Chen, Y., Lei, J., & Wen, Y. (2014). The effects of facilitating feedback on online learners' cognitive engagement: Evidence from the asynchronous online discussion. Education Sciences, 2(2), 193-208.

Hastie, M., Chen, N.-S., & Kuo, Y.-H. (2007). Instructional design for best practice in the synchronous cyber classroom. Journal of Educational Technology & Society, 10(4), 281-294.

Higgins, K., & Harreveld, R. E. (2011). The casual academic in university distance education from isolation to integration: A prescription for change. Paper presented at the 2011-2021 Summit: Global challenges and perspectives of blended and distance learning, Sydney, Australia. Retrieved from http://hdl.cqu.edu.au/10018/918753

Higgins, K., & Harreveld, R. E. (2013). Professional development and the university casual academic: Integration and support strategies for distance education. Distance Education, 34(2), 189-200.

Hrastinski, S., Keller, C., & Carlsson, S. A. (2010). Design exemplars for synchronous e-learning: A design theory approach. Computers & Education, 55(2), 652-662.

Issa, T., Issa, T., & Chang, V. (2012). Technology and higher education: An Australian case study. International Journal of Learning, 18(3), 223-236.

Juan, Á. A., Huertas, M. A., Cuypers, H., & Loch, B. (2012). Mathematical e-learning. RUSC, 9(1), 278-283.

Kirschner, P. A., Kreijns, K., Phielix, C., & Fransen, J. (2015). Awareness of cognitive and social behaviour in a CSCL environment. Journal of Computer Assisted Learning, 31(1), 59-77. doi: 10.1111/jcal.12084

Kleickmann, T., & Anders, Y. (2013). Learning at university. In M. Kunter, J. Baumert, W. Blum, U. Klusmann, S. Krauss, & M. Neubrand (Eds.), Cognitive activation in the mathematics classroom and professional competence of teachers (pp. 321-332). New York: Springer.

Lambe, J. (2007). Student teachers, special education need and inclusion education: Reviewing the potential for problem-based, e-learning pedagogy to support practice. Journal of Education for Teaching: International Research and Pedagogy, 33, 359-377.

Lan, Y.-J., Chang, K.-E., & Chen, N.-S. (2012). CoCAR: An online synchronous training model for empowering ICT capacity of teachers of Chinese as a foreign language. Australasian Journal of Educational Technology, 28(6), 1020-1038.

Lee, J. (2012). Patterns of interaction and participation in a large online course: Strategies for fostering sustainable discussion. Educational Technology and Society, 15(1), 260-272.

Lefoe, G., Gunn, C., & Hedberg, J. G. (2002). Recommendations for teaching in a distributed learning environment: The students' perspective. Australian Journal of Educational Technology, 18(1), 40-56.

Lin, C. H., Feng Liu, E. Z., Ko, H. W., & Cheng, S. S. (2008). Combination of service learning and pre-service teacher training via online tutoring. WSEAS Transactions on Communications, 7(4), 258-268.

Makri, K., Papanikolaou, K., Tsakiri, A., & Karkanis, S. (2014). Blending the community of inquiry framework with learning by design: Towards a synthesis for blended learning in teacher training. Electronic Journal of e-Learning, 12(2), 183-194.

McLoughlin, C. (2001). Inclusivity and alignment: Principles of pedagogy, task and assessment design for effective cross-cultural online learning. Distance Education, 22(1), 7-29.

Mertens, D. M. (2005). Research and evaluation in education and psychology: Integrating diversity with quantitative, qualitative, and mixed methods. Thousand Oaks, CA: SAGE Publications.

Meyer, K. A. (2014). Student engagement in online learning: What works and why. ASHE Higher Education Report, 40(6), 1-114.

Molinari, C. A. (2012). Online and blended learning: Are we delivering quality undergraduate programs in health administration? The Journal of Health Administration Education, 29(1).

Moore, M. G. (1993). Theory of transactional distance. In D. Keegan (Ed.), Theoretical principles of distance education. New York: Routledge.

Moore, M. G. (Ed.) (2013). Handbook of distance education (3rd ed.). New York: Routledge.

Nortvig, A. M. (2013). In the presence of technology: Teaching in hybrid synchronous classrooms. Proceedings of the International Conference on e-Learning, 347-353.

Open Universities Australia. (2013). Open Universities Australia: 2013 annual report. Retrieved from http://www.open.edu.au/

Oprea, C. L. (2014). Interactive-creative learning. Paper presented at The International Annual Scientific Session Strategies XXI, Bucharest.

Piccoli, G., Ahmed, R., & Ives, B. (2001). Web-based virtual learning environments: A research framework and a preliminary assessment of the effectiveness in basic IT skills training. MIS Quarterly, 25(4), 401-426.

Reeves, T. C., Herrington, J., & Oliver, R. (2002). Authentic activities and online learning. In A. Herrington (Ed.), Research and development in higher education: Quality conversations (Vol. 25, pp. 562-567). Hammondville, NSW: Higher Education Research and Development Society of Australasia, Inc.

Reiach, S., Averbeck, C., & Cassidy, V. (2012). The evolution of distance education in Australia: Past, present, future. Quarterly Review of Distance Education, 13(4), 247-252.

Ritchie, J., & Lewis, J. (2003). Qualitative research practice: A guide for social science students and researchers. London, UK: Sage Publications.

Rivers, B. A., Richardson, J. T. E., & Price, L. (2014). Promoting reflection in asynchronous virtual learning spaces: Tertiary distance tutors' conceptions. International Review of Research in Open and Distance Learning, 15(3), 215-231.

Robertson, L., & Hardman, W. (2012). Entering new territory: Crossing over to fully synchronous, e-learning courses. College Quarterly, 15(3).

Sisco, A., Woodcock, S., & Eady, M. J. (2015). Pre-service perspectives on e-teaching: Assessing e-teaching using the EPEC hierarchy of conditions for e-learning/teaching competence. Canadian Journal of Learning and Technology, 41(3), 1-32.

Skramstad, E., Schlosser, C., & Orellana, A. (2012). Teaching presence and communication timeliness in asynchronous online courses. Quarterly Review of Distance Education, 13(3), 183-198.

Stacey, E. (2005). The history of distance education in Australia. Review of Distance Education, 6(3), 253-259.

Stemler, S. (2001). An overview of content analysis. Practical Assessment, Research & Evaluation, 7(17).

Sun, J. C. Y., & Rueda, R. (2012). Situational interest, computer self-efficacy and self-regulation: Their impact on student engagement in distance education. British Journal of Educational Technology, 43(2), 191-204. doi: 10.1111/j.1467-8535.2010.01157.x

Tynan, B., Ryan, Y., & Lamont-Mills, A. (2015). Examining workload models in online and blended teaching. British Journal of Educational Technology, 46(1), 5-15. doi: 10.1111/bjet.12111

Umrani-Khan, F., & Iyer, S. (2009). ELAM: A model for acceptance and use of e-learning by teachers and students. Paper presented at the International Conference on e-Learning, Institute of Technology Bombay, Mumbai, India.

Watson, S. (2013). Tentatively exploring the learning potentialities of postgraduate distance learners' interactions with other people in their life contexts. Distance Education, 34(2), 175-188.

Wei, H. C., Peng, H., & Chou, C. (2015). Can more interactivity improve learning achievement in an online course? Effects of college students' perception and actual use of a course-management system on their learning achievement. Computers and Education, 83, 10-21.

Wheeler, S., & Reid, F. (2013). Student perceptions of immediacy and social presence in distance education. In U. Bernath, A. Szücs, A. Tait, & M. Vidal (Eds.), Distance and e-learning in transition (pp. 411-426). London, UK: John Wiley & Sons, Inc.

Woldab, Z. E. (2014). E-learning technology in pre-service teachers training: Lessons for Ethiopia. Journal of Educational and Social Research, 4, 159-166.

Woodcock, S., Sisco, A., & Eady, M. J. (2015). The learning experience: Training teachers using online synchronous environments. Journal of Educational Research and Practice, 5(1), 21-34.

Wu, P., Pienaar, J., O'Brien, D., & Feng, Y. (2013). Delivering construction education programs through the distance mode: Case study in Australia. Journal of Professional Issues in Engineering Education and Practice, 139(4), 325-333. doi: 10.1061/(ASCE)EI.1943-5541.0000162

Zikic, J., & Saks, A. M. (2009). Job search and social cognitive theory: The role of career-relevant activities. Journal of Vocational Behavior, 74, 117-127. doi: 10.1016/j.jvb.2008.11.001

Employing the EPEC Hierarchy of Conditions (Version II) To Evaluate the Effectiveness of Using Synchronous Technologies with Multi-Location Student Cohorts in the Tertiary Education Setting by Dr. Michelle J Eady, Dr Stuart Woodcock, and Ashley Sisco is licensed under a Creative Commons Attribution 4.0 International License.