
Volume 17, Number 5

Tze Wei Liew¹, Nor Azan Mat Zin², Noraidah Sahari², Su-Mae Tan¹

¹Multimedia University, Malaysia; ²Universiti Kebangsaan Malaysia, Malaysia

The present study aimed to test the hypothesis that a smiling expression on the face of a talking pedagogical agent could positively affect a learner's emotions, motivation, and learning outcomes in a virtual learning environment. Contrary to the hypothesis, results from Experiment 1 demonstrated that the pedagogical agent's smile induced negative emotional and motivational responses in learners. Experiment 2 showed that the social meaning of a pedagogical agent's smile might be perceived by learners as polite or fake. In addition, qualitative data provided insights into factors that may cause negative perceptions of a pedagogical agent's smile, which in turn lead to negative affective (emotional and motivational) states in learners. Theoretical and design implications for pedagogical agents in virtual learning environments are discussed in the concluding section of the paper.

Keywords: Pedagogical agent, smiling expression, emotion contagion, emotion, virtual learning environment, motivation

One of the major drawbacks of e-learning relates to the lack of social and personal presence in this environment (Searls, 2012). This issue has prompted the implementation of artificially intelligent characters known as "pedagogical agents" in virtual learning systems (Krämer & Bente, 2010; Veletsianos & Russell, 2014). Pedagogical agents are visual characters that reside within the digital space of virtual learning systems. They are viewed as intrinsically social interfaces, which allows for the inclusion of socio-emotive values in the learning process (Guo & Goh, 2015; Kim & Baylor, 2015). Embedded within virtual learning environments, pedagogical agents take instructional roles such as tutor, learning companion, and coach (Kim & Baylor, 2006; Kim & Baylor, 2015).

Proponents of pedagogical agents have claimed that the visual embodiment of an agent can create a "persona effect" in virtual educational systems. The persona effect refers to a phenomenon in which the mere presence of an agent leads to an enhanced learning experience in a virtual learning environment (see Lester et al., 1997; Moreno, Mayer, Spires, & Lester, 2001). It has also been argued that pedagogical agents can supply social cues in a multimedia message that prime the social conversation schema in learners (Mayer, Sobko, & Mautone, 2003).

Against this background, advocates of pedagogical agents have posited that if visual interfaces of agents are integrated into learning systems, learners will experience higher motivation to learn (Schroeder, Adesope, & Gilbert, 2013). In a similar vein, an agent may function as a "social model" that can influence a learner's motivation and attitude regarding a subject domain (Plant, Baylor, Doerr, & Rosenberg-Kima, 2009; Rosenberg-Kima, Baylor, Plant, & Doerr, 2008; Yilmaz & Kılıç-Çakmak, 2012). Previous studies have shown that pedagogical agents that were young, female and "cool" were instrumental in positively changing young women's attitudes and self-efficacy in engineering-related domains (Plant et al., 2009; Rosenberg-Kima et al., 2008).

In addition, the inclusion of a pedagogical agent may allow relevant information in a virtual environment to be pointed out effectively during the learning process. For instance, prior studies have demonstrated that participants learned more in a system with a pedagogical agent than in a system without an agent (Atkinson, 2002; Yung & Paas, 2015). Atkinson (2002) suggested that the agent enhanced learning by providing gestures that helped learners to pay attention to relevant information. With the presence of an agent, learners' cognitive resources were effectively utilized, as learners did not need to dedicate their mental efforts to searching the virtual learning space for relevant visual information (Atkinson, 2002; Yung & Paas, 2015).

Pedagogical agents can also function as "vessels" that contain distributed information (Veletsianos & Russell, 2014). In other words, pedagogical agents can scaffold learners by asking questions, providing hints, or offering alternative perspectives during learning. Holmes (2007) demonstrated that learners who interacted with pedagogical agents produced deeper explanations in post-tests than learners who did not interact with an agent. In his paper, Holmes pointed out that the agents were designed to stimulate deeper understanding in novice learners by listening and providing feedback regarding the subject domain. Thus, learners who "conversed" with agents had the advantage of exercising good explanatory skills and consequently outperformed learners who did not interact with the agents.

In virtual learning systems, can a learner's emotional and motivational response be affected by the facial expression of a virtual agent? If so, what kind of emotional response is induced by what type of agent's expression? These are interesting questions, as recent advances in multimedia technology allow the development of realistic virtual learning agents with emotional states indicated through speech (synthetic and/or recorded), body language, and facial expressions (Beale & Creed, 2009). As more and more embodied agents are being implemented in educational virtual domains (i.e., virtual learning, e-training, serious gaming, edutainment and blended learning), it is hypothesized that the design of an agent's facial expression has a strong impact on learner-virtual agent interaction.

Nevertheless, it is still unclear how people perceive and respond to synthetic displays of emotions by virtual agents (Beale & Creed, 2009). Against this background, the present study aims to explore the effects on emotional, motivational and learning outcomes in learners as a result of perceiving facial expressions of virtual learning agents. More specifically, we focus our investigation on how a pedagogical agent's smiling expression affects the learner's emotions, motivation and learning outcomes.

The "media equation" makes a compelling case that human-to-human rules of social psychology are also relevant to human-virtual agent interaction (Reeves & Nass, 1996). Studies have shown that the social rules that are established in human-to-human communications are also prevalent in human-to-computer settings. Some of the social interplays that have been observed empirically in human-computer experiments are the stereotype effect (Liew, Tan, & Jayothisa, 2013), inhibition/facilitation effect (Hayes, Ulinski, & Hodges, 2010; Zanbaka, Ulinski, Goolkasian, & Hodges, 2007), politeness effect (Wang et al., 2008), similarity attraction (Nass & Lee, 2001), empathy (Guadagno, Swinth, & Blascovich, 2011) and the venting effect (Klein, Moon, & Picard, 2002).

Emotional contagion refers to the automatic tendency for a person's emotional state to be affected by another person's emotional expression (Hatfield, Cacioppo, & Rapson, 1994). In social interaction, people unconsciously send cues about their emotional state through non-verbal signals such as facial expressions, vocal inflections, body gestures, and posture, and perceive such cues sent by others. The inducement of emotions between people is not caused by an intended action of a person, but only by the perception of the emotional expression of that person (McIntosh, Druckman, & Zajonc, 1994). Literature has shown that emotional contagion can also occur in human-computer interaction (de Melo, Carnevale, & Gratch, 2012; Krämer, Kopp, Becker-Asano, & Sommer, 2013; Neumann & Strack, 2000; Qu, Brinkman, Ling, Wiggers, & Heynderickx, 2014; Wong & McGee, 2012). As discussed below, smiling expressions of agents have been shown to affect users' emotional and motivational experiences in virtual systems.

In one study, Tsai, Bowring, Marsella, Wood, and Tambe (2012) showed that participants experienced increased happiness simply by viewing static images of smiling virtual agents. Tsai and his team conducted another experiment to ascertain if the effect of emotional contagion would positively impact participants' trust and likelihood of cooperation in a virtual simulation of strategic decision making. However, it was found that the effect of emotional contagion disappeared in the virtual scenario. In a subsequent experiment, the emotional contagion effect reappeared (albeit not reaching statistical significance) when the need for decision making was removed from the virtual scenario. Taken together, Tsai and his team proposed that participants' act of deliberate thinking (as required to make a strategic decision) diminished the effect of emotional contagion.

Related research by Ku et al. (2005) revealed that participants felt congruent emotions while observing virtual agents with different facial expressions (i.e., neutral, happy, and angry). Interestingly, they demonstrated that the intensity of congruent emotions felt by viewers increased according to the intensity of the facial expressions displayed by the virtual avatars. This observation is in line with the mechanism of mimicry, in which greater emotional display by the agents results in higher levels of emotional contagion in people. Though the study did not extend its findings to virtual applications and scenarios, it asserted that people's emotional responses are spontaneously affected by facial expressions of virtual agents.

In an experiment by Krämer et al. (2013), participants were paired with either a non-smiling agent, an infrequent-smiles agent, or a frequent-smiles agent. Though the frequency of agent smiles did not differentially affect participants' perceptions of the agent, it was shown that participants tended to mimic the smile of the virtual agent. Furthermore, it was observed that these mimicry responses were elicited even when participants did not consciously notice the facial expression of the virtual agent. Though participants' emotions were not measured in the study, the study suggested the mechanism of facial mimicry in human-agent interaction.

An experiment by Pertaub, Slater, and Barker (2002) showed that speakers in a virtual environment were affected emotionally by a virtual avatar's non-verbal characteristics such as facial expressions, body postures, and gestures. As predicted, speakers felt less anxiety when paired with virtual audiences that displayed positive expressions such as encouraging nods, frequent smiles, and appropriate body orientation. In contrast, virtual audiences that showed negative characteristics such as yawning, slouching, and avoidance of eye contact induced a higher level of anxiety response from the human speaker.

De Melo et al. (2012) demonstrated that the virtual agents' static facial displays of joy, sadness, anger and guilt influenced participants' decision to counter-offer, accept or drop out from virtual negotiations. Furthermore, people's expectations about the agents' decisions were impacted by these facial expressions. Though there were no measures of emotion taken from the participants, the study affirmed that the facial expressions of virtual agents induce subconscious behavioral and cognitive responses from people.

Based on these related works, there is empirical evidence regarding the effects of smiling expressions by agents in human-agent interaction. More specifically, there is a tendency for people to automatically "catch" emotions from smiling expressions of virtual agents (Krämer et al., 2013; Ku et al., 2005; Tsai et al., 2012). These findings seem to support the emotional transference mechanism, in which people are more likely to experience emotional convergence (rather than divergence) by simply observing the facial expressions of virtual agents. Studies regarding agents' smiles have been conducted in virtual scenarios such as decision-making (Tsai et al., 2012), negotiation (de Melo et al., 2012) and games (Krämer et al., 2013).

However, research related to the effects of an agent's smile in a virtual learning environment is still scant. This research gap needs to be filled, as there is a potential for virtual agents to express motivation and excitement in a way that is infectious to human learners (Beale & Creed, 2009; Burleson, Picard, Perlin, & Lippincott, 2004). In other words, it might be interesting to determine if an agent's smile can automatically induce positive emotions in learners. It is further hoped that the induced positive emotion can have a facilitating effect on learners' motivation and perception of learning environments (Liew & Tan, 2016; Isen & Reeve, 2005; Um, Plass, Hayward, & Homer, 2012; Yusof & Zin, 2013). Hence, we hypothesized that an agent's smiling expression will positively enhance a learner's motivation, perceptions and learning outcomes in a virtual learning environment.

In addition, prior studies tested the effects of an agent's smile with static images of virtual agents (see de Melo et al., 2012; Ku et al., 2005; Tsai et al., 2012). As there is an increasing trend and expectation for believable agents to dynamically express emotions in virtual applications, it is imperative to extend this line of investigation with animated virtual agents. Previous work has only explored the effects of an agent's smile with the agent's facial expression as the sole modality and source of emotional cues (see de Melo et al., 2012; Ku et al., 2005; Tsai et al., 2012). The effect of a smiling expression has not yet been tested for scenarios in which facial expressions and vocal expressions are presented by talking virtual agents.

Against this background, this paper aims to extend the field by addressing two key points. Firstly, we aim to investigate the effects of smiling expression with talking pedagogical agents rather than static images of pedagogical agents. That is, we aim to use agents that display dynamic facial expressions (i.e., eye, lips, head, and facial movement), which are synchronized with computer-generated speech. Similar to previous studies (Ku et al., 2005; Tsai et al., 2012), we focus on smiling expressions of talking virtual agents as the potential cause for emotional contagion in learner-agent interaction in virtual learning systems. Secondly, we aim to explore the effects of an agent's smiling expression in the context of a virtual learning environment.

Pursuant to this goal, two experiments were conducted in which participants were exposed to either a neutral or a frequently smiling expression of a virtual agent. Experiment 1 explored the effects of an agent's smiling expression on the learner's emotions, motivation and learning outcomes. Experiment 2 served as a post-hoc study that utilized quantitative and qualitative data to assess how learners might perceive the meaning of smiles by the virtual agent in the virtual learning environment.

In this experiment, we aimed to determine the effects of an agent's smiling expression on a learner's emotions, motivation, and learning outcomes in virtual learning environments. This experiment also served to test the hypothesis that a smiling expression of a virtual agent will positively affect a learner's emotions, motivation and learning outcomes.

One hundred and seven freshmen (40 males, 67 females, mean age = 20) at a university were recruited to participate in exchange for extra course credits. All participants were business majors who had no experience in programming-related subjects (including virtual learning) and did not have any experience interacting with virtual agents.

Two 3D male virtual agents were designed to convey either a neutral facial expression or a constant smiling expression (see Figure 1). The audio speech of both agents was generated using the IVONA Text-To-Speech engine (American English-Joey, default speed). The virtual learning environment served as an interactive platform for the virtual agent to deliver lessons on basic computer programming (see Figure 2).

Figure 1. Neutral-face agent and smiling-face agent.

Figure 2. Virtual learning environment.

To measure learners' emotions, the positive affect scale from PANAS-X was utilized (Watson & Clark, 1999). The survey asked respondents to indicate the degree to which they experienced 10 types of positive feelings using a 5-point Likert-type scale ranging from 1 (very slightly or not at all) to 5 (very much) (coefficient alpha = .84). The total score for General Positive Affect was obtained by summing the responses to the 10 items. In addition to General Positive Affect, the subscales obtained from the survey were joviality, self-assurance, and attentiveness (see Watson & Clark, 1999).
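
The summed scoring described above can be sketched as follows. This is only an illustration of the stated rule (10 items, each rated 1 to 5, added together); the function name and the example ratings are hypothetical and are not taken from the study's materials.

```python
from typing import Sequence

N_ITEMS = 10                   # number of positive-affect items stated in the text
SCALE_MIN, SCALE_MAX = 1, 5    # 5-point Likert-type range stated in the text


def general_positive_affect(responses: Sequence[int]) -> int:
    """Sum the 10 item ratings into a General Positive Affect score (10 to 50)."""
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(responses)}")
    for rating in responses:
        if not SCALE_MIN <= rating <= SCALE_MAX:
            raise ValueError(f"rating {rating} is outside {SCALE_MIN}-{SCALE_MAX}")
    return sum(responses)


# Hypothetical ratings from one respondent (illustration only, not study data).
example = [3, 2, 4, 3, 3, 2, 3, 4, 2, 3]
score = general_positive_affect(example)
print(score)
```

Under this rule the total can range from 10 to 50, which is the scale on which the General Positive Affect means are reported.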

A survey consisting of three items, each scaled from 1 (strongly disagree) to 7 (strongly agree), measured learners' perceptions of the virtual lessons: (1) the lesson is fun; (2) the lesson makes me curious to know more about the subject; and (3) I am willing to come back to this lesson in the future. Learners' perceptions of the virtual agent were measured by five items, each scaled from 1 (strongly disagree) to 7 (strongly agree): (1) I like Michael (the virtual avatar); (2) Michael is knowledgeable; (3) Michael is friendly; (4) I can trust Michael; and (5) I am willing to learn with Michael in the future.

Learning achievements of participants were measured as total scores on comprehension post-tests. The comprehension test asked learners to predict the output of twelve short program statements. The transfer tests required learners to apply algorithmic rules as learned in the virtual lessons to the new situation, in order to achieve the correct output. Each correct output was awarded 1 point; hence, the maximum score obtainable was 12. These measures were consistent with the cognitive skills of knowledge retention and comprehension in computer science.

Participants (n = 107) were randomly paired with the neutral virtual agent (control group, n = 53) or smiling virtual agent (experimental group, n = 54). All participants were seated in front of individual computers with earphones. To deter participants from suspecting the intention of the experiment and thus paying attention to the facial expression of the virtual agent and to their own emotions, two important measures were taken: (1) participants in the control and experimental groups were separated into two different laboratories so that they would be unaware of the different conditions, and (2) participants were told that the study was for the purpose of measuring computer aptitude. After the 30-minute interactive lesson with the virtual agent, participants were instructed to fill out the surveys (in order) for measuring emotions, perceptions of the virtual learning, and perceptions of the virtual agent. Finally, participants were given 30 minutes to complete the post-test.

An independent sample t-test was performed to compare scores for emotion, perception of virtual learning, perception of the virtual agent, and the post-test between participants in smiling- and neutral-agent conditions. Table 1 shows the means, standard deviations and p-values of the t-test analysis.

Table 1

Means, Standard Deviations and p-values of t-test Analysis

| Measure | Control (Neutral), Mean (SD) | Experimental (Smiling), Mean (SD) | p-value (2-tailed) |
|---|---|---|---|
| Emotion | | | |
| General Positive Affect* | 28.36 (7.77) | 25.67 (7.53) | < 0.1 |
| Joviality* | 8.42 (2.58) | 7.43 (2.79) | < 0.1 |
| Self-Assurance** | 5.49 (1.89) | 4.70 (1.84) | < 0.05 |
| Attentiveness | 8.49 (2.58) | 8.14 (2.81) | > 0.1 |
| Perception of learning environment | | | |
| Enjoyment | 3.92 (1.63) | 3.79 (1.34) | > 0.1 |
| Curiosity** | 4.43 (1.51) | 3.88 (1.60) | < 0.05 |
| Willingness to return* | 3.84 (1.71) | 3.33 (1.53) | = 0.05 |
| Perception of virtual agent | | | |
| Likability | 4.15 (1.43) | 4.16 (1.60) | > 0.1 |
| Knowledgeable | 4.77 (1.50) | 4.71 (1.66) | > 0.1 |
| Credibility | 4.77 (1.37) | 4.70 (1.65) | > 0.1 |
| Friendliness | 4.49 (1.59) | 4.73 (1.61) | > 0.1 |
| Willingness to return | 3.81 (1.67) | 4.21 (1.43) | > 0.1 |
| Post-test | 7.13 (3.05) | 7.71 (3.17) | > 0.1 |

As shown in the table, participants experienced marginally less positive emotion when paired with the smiling virtual agent, t(105) = 1.755, p = 0.072. Based on the positive affect sub-scales, it was shown that the smiling virtual agent had a negative impact on participants' feelings of joviality, t(105) = 1.820, p = 0.082, and self-assurance, t(105) = 2.184, p = 0.031. In addition, the smiling virtual agent had a negative impact on learners' perceptions of virtual learning. Participants in the smiling-agent condition reported lower curiosity to explore the virtual lesson further, t(105) = 225, p = 0.028, and less willingness to return to the virtual lesson, t(105) = 1.974, p = 0.05, than did participants in the neutral-agent condition. No significant effects were found for participants' perceptions of the virtual agents or for post-test scores. Finally, results from a 2-way ANOVA determined that these findings were not moderated by participants' gender (all p > 0.05).
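
For readers who want to see how an independent-samples t statistic follows from summary statistics, here is a minimal sketch. It assumes the pooled-variance (Student's) form, which is consistent with the reported 105 degrees of freedom (53 + 54 - 2), but the text does not state which variant was used. The inputs are the rounded means and standard deviations from Table 1, so recomputed values need not match the reported statistics exactly.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's independent-samples t statistic and its degrees of freedom.

    Assumes equal population variances (pooled-variance form).
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error, df


# Rounded summary statistics from Table 1 (control n = 53, experimental n = 54).
t_pa, df = pooled_t(28.36, 7.77, 53, 25.67, 7.53, 54)   # General Positive Affect
t_sa, _ = pooled_t(5.49, 1.89, 53, 4.70, 1.84, 54)      # Self-Assurance

print(f"General Positive Affect: t({df}) = {t_pa:.2f}")
print(f"Self-Assurance: t({df}) = {t_sa:.2f}")
```

A positive t here means the control (neutral-agent) group scored higher than the experimental (smiling-agent) group.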

The purpose of this study was to determine the effects of an agent's smiling expression on a learner's emotion, motivation and learning outcome in virtual learning systems. To achieve this, participants were paired with either the neutral-face agent or the smiling-face agent in a virtual learning environment. Based on the data, there were no significant differences in post-test scores between learners in the smiling- and neutral-agent conditions. Hence, learning outcome was not affected by the presence of a smiling agent.

However, it was shown that participants who interacted with the smiling-face virtual agent experienced lower positive affect, joviality and self-assurance than did participants who interacted with the neutral-face virtual agent. Furthermore, the smiling expression of the virtual agent eroded participants' perceptions of virtual learning environments. This discovery is at odds with earlier findings (Ku et al., 2005; Tsai et al., 2012), which highlighted that the smiling expression of virtual agents causes congruent (i.e., joyful) emotions in participants.

Why did the smiling expression of the agent negatively impact learners' emotions and motivation in virtual learning systems? It is plausible that participants' emotional responses to a smiling expression of the virtual agent differ according to their appraisals of the perceived meaning of the smile. Indeed, recent research posits that people distinguish between genuine and fake smiles given by virtual agents (Ochs, Niewiadomski, & Pelachaud, 2012; Ochs & Pelachaud, 2013). In particular, genuine smiles (also called felt or Duchenne smiles) express an agent's emotional state of happiness, while fake smiles communicate politeness, embarrassment, or the hiding of true feelings (Ochs & Pelachaud, 2013; Rehm & André, 2005). In fact, an investigation of human-virtual agent interaction showed that people's feelings of enjoyment are affected by the perceived meaning of virtual agents' smiles (Ochs & Pelachaud, 2013).

To distinguish the dissimilar meanings of smiles, we follow an emergent theory proposed by Niedenthal, Mermillod, Maringer, and Hess (2010) called the Model of Simulation of Smiles (SIMS). In this model, three types of smiles are proposed: ones to indicate felt positive emotion (enjoyment smiles); ones to express social motives, such as politeness and greetings (affiliative smiles); and smiles to communicate dominance, pride, and masking of negative intentions (dominant smiles). Placing the SIMS in human-virtual agent interaction, it seems likely that the emotion-congruence effect (i.e., positive emotion) occurs only if virtual agents are perceived to be expressing enjoyment smiles. Conversely, emotion-divergence (i.e., negative emotion) is induced when smiles of virtual agents are perceived as fake, such as a dominant smile that masks negative emotions.

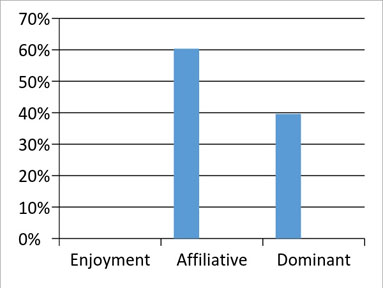

Following this view, we hypothesize that the negative emotion and reduced motivation occurred because participants might have perceived the virtual agent's smile as a fake smile (i.e., dominant) rather than as a genuine smile (i.e., affiliative or enjoyment). To seek a better understanding regarding this issue, a follow-up study (Experiment 2) was conducted. Experiment 2 served to determine how the smiling expression of the virtual agent might be perceived by learners. Based on the SIMS, the prototypical smiles under investigation are enjoyment, affiliative, and dominant. A qualitative survey was also utilized to shed light on learners' perceptions of a virtual agent's smile.

The outcomes of Experiment 1 showed that the smiling expression of the virtual agent had negative impacts on participants' emotions and motivations. Based on the factors discussed above, we hypothesized that the emotion-divergence occurred because participants were more likely to perceive the agent's smile as dominant rather than enjoyment/affiliative. Hence, this study examined how the smiling expressions of pedagogical agents are interpreted by learners in a virtual learning system.

From the same university as in Experiment 1, 56 freshmen (23 males, 33 females, mean age = 19) were recruited to participate in exchange for partial course credits. No participant had had any previous experience with virtual agents.

For the purpose of this study, we used the virtual learning environment paired only with the smiling-face agent (see Figures 1 and 2).

Based on the SIMS, a multiple choice survey asked participants to choose one of the following: the virtual agent is smiling because he is happy (enjoyment); the virtual agent is smiling because he is being polite (affiliative); and the virtual agent is smiling because he is hiding his true emotions (dominant).

Participants (n = 56) were told that the study required them to evaluate the meaning of the smile displayed by the virtual agent in the virtual learning environment. Within a closed classroom, participants viewed and listened to the lesson presented by the virtual agent, which was projected on a 4000 mm × 4000 mm screen. The agent's speech was channelled through four mounted PA speakers. After observing the virtual agent, the participants were asked to select one answer that described their perception of the agent's smile: enjoyment, affiliative, or dominant. Next, they were instructed to give their subjective evaluation of the smile displayed by the virtual agent.

In our earlier discussion, we noted that the social meaning of a pedagogical agent's smile might be interpreted differently by learners. Arguably, negative emotion and motivation may arise because learners perceive the smile of a virtual agent as non-enjoyment (dominant or affiliative) rather than enjoyment. To explore these issues, a descriptive analysis was performed to compare the frequencies of participants' perceptions of the three smiles: enjoyment, affiliative, and dominant. As shown in Figure 3, the perception of an enjoyment smile was 0%. This means that none of the participants thought that the virtual agent was genuinely expressing a state of happiness. Instead, the virtual agent's smile was perceived to be either affiliative/being polite (60.38%) or dominant/hiding emotions (39.62%). To explore whether gender moderated the perceptions of the agent's smile (i.e., dominant versus affiliative), a Chi-square analysis was conducted. Results indicated that males and females did not significantly differ in their perceptions of the agent's smile, χ²(1) = 0.04, p > 0.05.
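
As an illustration of the kind of test reported above, the sketch below computes a Pearson chi-square statistic for a 2 × 2 table (gender by perceived smile type), which has one degree of freedom. The gender-by-perception counts are not given in the text, so the counts used here are purely hypothetical, and whether the authors applied a continuity correction is not stated; none is applied here.

```python
def chi_square_2x2(table):
    """Pearson chi-square statistic (no continuity correction) for a 2x2 count table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed - expected) ** 2 / expected
    return chi2


# HYPOTHETICAL counts for illustration only (not the study's data).
# Rows: male, female. Columns: affiliative, dominant.
hypothetical_counts = [[10, 20], [20, 10]]
print(round(chi_square_2x2(hypothetical_counts), 2))
```

A statistic near zero, as in the reported result, indicates that the observed counts are close to what independence between gender and smile perception would predict.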

Figure 3. Perception of agent's smiles.

We conducted an exploratory analysis to tease apart the remarks made by participants on the agent's smile (Table 2). Interestingly, there were consistent and recurring themes in the remarks, based on how smiles were perceived (affiliative or dominant). Specifically, participants who perceived the smile as affiliative commented, "…somehow his smile makes me less stressed," "less scared because of his smile," "he is teaching patiently and politely," "not scared because…smile is so friendly and has true feelings," and "audience will feel comfortable when seeing his smile."

Taken together, this implies that an affiliative smile by virtual agents seems to induce positive feelings of social attachment and intimacy in participants (as suggested in the SIMS). As for when the agent's smile was perceived as dominant, participants remarked, "creepy and fake," "forced smile," "he tries to be polite, feel scared because he has no feeling," "scared...unnatural smile," "…is hiding his true feelings," "feels like he is going to kill me, his smile is tricky," "looks happy but fake, feeling scared as he is hiding something from audience," and "he is showing off." Overall, these comments reflect a sense of distrust, withdrawal, vigilance, and general negative emotion in participants who perceived the dominant smile.

Table 2

Learners' Comments Regarding Pedagogical Agent's Smile

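| Interpretation of agent's smiling expression | Comments regarding agent's smile |
|---|---|
| Affiliative (polite) | "…somehow his smile makes me less stressed"; "less scared because of his smile"; "he is teaching patiently and politely"; "not scared because…smile is so friendly and has true feelings"; "audience will feel comfortable when seeing his smile" |
| Dominant (fake) | "creepy and fake"; "forced smile"; "he tries to be polite, feel scared because he has no feeling"; "scared...unnatural smile"; "…is hiding his true feelings"; "feels like he is going to kill me, his smile is tricky"; "looks happy but fake, feeling scared as he is hiding something from audience"; "he is showing off" |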

This study offers two key insights that contribute to the research in learner-pedagogical agent interaction in virtual learning environments. Firstly, this study shows that facial expression in talking virtual agents can automatically and subconsciously induce emotional and motivational responses in learners. The emotion contagion effects were observed even when the smiling expression of the virtual agent was not consciously attended to (see procedure of Experiment 1). Moreover, our study shows that facial expressions in talking agents affect how people perceive virtual environments. Plausibly, emotional responses induced by facial expressions of agents changed the way participants perceived the virtual environment. Notably, exploratory analysis yields a strong correlation (r > .5, p < .001) in all relationships between emotion scores and perceptions of the learning environment (i.e., curiosity to explore the virtual lesson, willingness to come back to the virtual lesson, and enjoyment of the virtual lesson).

Secondly, our study reveals that the virtual agent's smile induced a divergent (negative) effect on participants' emotional and motivational states. The effect of emotional divergence was initially surprising, as one might expect that the agent's smile would produce congruent (i.e., feeling of happiness) emotions in participants (see Ku et al., 2005; Tsai et al., 2012). However, a deeper perspective regarding this issue was found in the literature describing the role of social comparison mechanisms in divergent emotional contagion (Epstude & Mussweiler, 2009). In the light of social comparison, people do not always perceive an agent's smile as enjoyment, which expresses a genuine state of happiness (Ochs & Pelachaud, 2013). Rather, as shown in Experiment 2, the agent's smile could be interpreted as non-enjoyment, such as affiliative (being polite) or dominant (masking negative emotions). It can be further argued that the social interpretation of an agent's smile as "dominant" and "fake" produces negative impacts on emotion and motivation in virtual learning systems.

Our results from Experiment 2 showed that participants' interpretations of the agent's smile were divided between affiliative (60.38%) and dominant (39.62%). Curiously, none of the participants perceived the agent's smile as enjoyment. Hence, one might ask: Why was the agent's smile perceived as non-enjoyment (dominant or affiliative) rather than enjoyment? Insights regarding this question can be found in participants' comments about the virtual agent and its smile. Some of the remarks regarding the agent's smile and speech (from Experiment 2) indicated that the agent's speech was emotionless and monotonous, albeit clear and articulate. Hence, the lack of emotion in the agent's voice diminished the perceptions of smiling agents as being truly happy. Instead, the incongruent pairing of an emotionless voice and a smiling facial expression might have induced a disconcerting emotional effect in participants, resulting in various interpretations, such as "fake," "scary," "hiding its true emotion," "unnatural," and "tricky" (Experiment 2).

This observation mirrors earlier findings by Creed and Beale (2008), which indicated that mismatched facial and voice expressions elicit negative responses from people. Furthermore, a few comments, such as "fake" and "creepy," described the lack of synchronization between speech and lip movement, suggesting an uncanny valley effect that might have contributed to the negative perceptions of the agent's smile (see Tinwell, Grimshaw, Nabi, & Williams, 2011). Taken together, this study suggests that voice expression and lip synchronization influence the perception of the smile of a talking animated virtual agent. It is worth noting that learners who perceived the agent's smile as affiliative (being polite) mentioned positively that they were more "comfortable" and "less scared and stressed" when engaging with the virtual agent (Experiment 2). Similarly, findings in a recent study (Guadagno et al., 2011) suggest that an agent's smile positively impacts participants' social evaluation of the virtual agent (i.e., perceived empathy).

Based on the preceding discussion, it is imperative for virtual agent designers to be aware that a mismatch between the facial expression and voice expression of a virtual agent may elicit unfavourable emotional responses from learners. In the case of this study, the neutral (emotionless) voice of the virtual agent distorted the perception of the virtual agent's smile, which led to negative emotional and motivational responses from learners. As voice quality is shown to impact perception, our future work will include an agent that displays congruent emotional cues through both facial and voice expression. These future studies are motivated by the quest to determine whether a congruent positive display of an agent's facial and vocal expressions (i.e., happiness, enthusiasm and excitement) can positively influence learners' affective factors such as emotion, motivation and learning perception in a virtual learning environment.

This study is not without limitations. In the context of our experiment, the duration of the virtual lesson was restricted to 30 minutes. This duration might not represent the length of a typical lesson in a virtual learning environment. Hence, the effects of an agent's smile have yet to be ascertained for a prolonged virtual lesson. Future research should use longitudinal designs to enhance understanding of how a smiling agent affects cognitive and socio-emotive behaviours in respondents.

One of the reviewers of this paper pointed out that a participant's demographic (e.g., ethnicity, gender) and personality traits might have impacted his or her perception of an agent's smile. Unfortunately, our study design and data did not allow for additional insights into why some learners perceived the agent's smile as affiliative, while others perceived the agent's smile as fake/dominant. Hence, future research should explore how a learner's demographic, cultural and personality markers may influence his or her perception of an agent's smile. Moreover, as suggested by the same reviewer, the findings of our study should be replicated with different agent designs (i.e., gender, role and ethnicity), in order to associate the effects of an agent's smile with agent properties such as gender (Ozogul, Johnson, Atkinson, & Reisslein, 2013; Wang & Yeh, 2013), stereotype (Liew, Tan, & Jayothisa, 2013; Veletsianos, 2010) and ethnicity (Kim & Wei, 2011; Moreno & Flowerday, 2006). Last but not least, a control group using the voice paired with static images of a smiling agent can be included in future experiments, in order to reinforce the validity of the current findings of this paper.

This research has been supported by the Fundamental Research Grant Scheme by Malaysia Ministry of Higher Education under grant agreement ID: MMU/RMC-PL/SL/FRGS/2014/1.

Atkinson, R. K. (2002). Optimizing learning from examples using animated pedagogical agents. Journal of Educational Psychology, 94 (2), 416-427.

Beale, R., & Creed, C. (2009). Affective interaction: How emotional agents affect users. International Journal of Human-Computer Studies, 67 (9), 755-776.

Burleson, W., Picard, R. W., Perlin, K., & Lippincott, J. (2004, July). A platform for affective agent research. In Workshop on Empathetic Agents, International Conference on Autonomous Agents and Multiagent Systems, Columbia University, New York, NY.

Creed, C., & Beale, R. (2008). Psychological responses to simulated displays of mismatched emotional expressions. Interacting with Computers, 20 (2), 225-239.

de Melo, C. M., Carnevale, P., & Gratch, J. (2012, September). The effect of virtual agents' emotion displays and appraisals on people's decision making in negotiation. In Y. Nakano, M. Neff, A. Paiva, & M. Walker (Eds.), Intelligent Virtual Agents, 12th International Conference, IVA 2012, Santa Cruz, CA, USA (pp. 53-66). New York: Springer.

Epstude, K., & Mussweiler, T. (2009). What you feel is how you compare: How comparisons influence the social induction of affect. Emotion, 9 (1), 1-14.

Guadagno, R. E., Swinth, K. R., & Blascovich, J. (2011). Social evaluations of embodied agents and avatars. Computers in Human Behavior, 27 (6), 2380-2385.

Guo, Y. R., & Goh, D. H. L. (2015). Affect in embodied pedagogical agents: Meta-analytic review. Journal of Educational Computing Research, 53 (1), 124-149.

Hatfield, E., Cacioppo, J. T., & Rapson, R. L. (1994). Emotional contagion. Cambridge, England: Cambridge University Press.

Hayes, A. L., Ulinski, A. C., & Hodges, L. F. (2010, January). That avatar is looking at me! Social inhibition in virtual worlds. In Y. Nakano, M. Neff, A. Paiva, & M. Walker (Eds.), Intelligent Virtual Agents (pp. 454-467). Berlin Heidelberg: Springer.

Holmes, J. (2007). Designing agents to support learning by explaining. Computers & Education, 48 (4), 523-547.

Isen, A. M., & Reeve, J. (2005). The influence of positive affect on intrinsic and extrinsic motivation: Facilitating enjoyment of play, responsible work behavior, and self-control. Motivation and Emotion, 29 (4), 295-323.

Kim, Y., & Baylor, A. L. (2006). A social-cognitive framework for pedagogical agents as learning companions. Educational Technology Research and Development, 54 (6), 569-596.

Kim, Y., & Baylor, A. L. (2015). Research-based design of pedagogical agent roles: A review, progress, and recommendations. International Journal of Artificial Intelligence in Education, 26 (1), 160-169.

Kim, Y., & Wei, Q. (2011). The impact of learner attributes and learner choice in an agent-based environment. Computers & Education, 56 (2), 505-514.

Klein, J., Moon, Y., & Picard, R. W. (2002). This computer responds to user frustration: Theory, design, and results. Interacting with Computers, 14 (2), 119-140.

Krämer, N. C., & Bente, G. (2010). Personalizing e-learning. The social effects of pedagogical agents. Educational Psychology Review, 22 (1), 71-87.

Krämer, N., Kopp, S., Becker-Asano, C., & Sommer, N. (2013). Smile and the world will smile with you—The effects of a virtual agent's smile on users' evaluation and behavior. International Journal of Human-Computer Studies, 71 (3), 335-349.

Ku, J., Jang, H. J., Kim, K. U., Kim, J. H., Park, S. H., Lee, J. H., ... & Kim, S. I. (2005). Experimental results of affective valence and arousal to avatar's facial expressions. CyberPsychology & Behavior, 8 (5), 493-503.

Lester, J. C., Converse, S. A., Kahler, S. E., Barlow, S. T., Stone, B. A., & Bhogal, R. S. (1997, March). The persona effect: Affective impact of animated pedagogical agents. In Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems (pp. 359-366). ACM.

Liew, T. W., & Tan, S. M. (2016). The effects of positive and negative mood on cognition and motivation in multimedia learning environment. Journal of Educational Technology & Society, 19 (2), 104-115.

Liew, T. W., Tan, S. M., & Jayothisa, C. (2013). The effects of peer-like and expert-like pedagogical agents on learners' agent perceptions, task-related attitudes, and learning achievement. Educational Technology & Society, 16 (4), 275-286.

Mayer, R. E., Sobko, K., & Mautone, P. D. (2003). Social cues in multimedia learning: Role of speaker's voice. Journal of Educational Psychology, 95 (2), 419.

McIntosh, D. N., Druckman, D., & Zajonc, R. B. (1994). Socially induced affect. In D. Druckman & R. A. Bjork (Eds.), Learning, remembering, believing: Enhancing human performance (pp. 251-276). Washington, DC: National Academy Press.

Moreno, R., & Flowerday, T. (2006). Students' choice of animated pedagogical agents in science learning: A test of the similarity-attraction hypothesis on gender and ethnicity. Contemporary Educational Psychology, 31 (2), 186-207.

Moreno, R., Mayer, R. E., Spires, H. A., & Lester, J. C. (2001). The case for social agency in computer-based teaching: Do students learn more deeply when they interact with animated pedagogical agents? Cognition and Instruction, 19 (2), 177-213.

Nass, C., & Lee, K. M. (2001). Does computer-synthesized speech manifest personality? Experimental tests of recognition, similarity-attraction, and consistency-attraction. Journal of Experimental Psychology: Applied, 7 (3), 171-181.

Neumann, R., & Strack, F. (2000). "Mood contagion": The automatic transfer of mood between persons. Journal of Personality and Social Psychology, 79 (2), 211.

Niedenthal, P. M., Mermillod, M., Maringer, M., & Hess, U. (2010). The simulation of smiles (SIMS) model: Embodied simulation and the meaning of facial expression. Behavioral and Brain Sciences, 33 (06), 417-433.

Ochs, M., Niewiadomski, R., Brunet, P., & Pelachaud, C. (2012). Smiling virtual agent in social context. Cognitive Processing, 13 (2), 519-532.

Ochs, M., & Pelachaud, C. (2013). Socially aware virtual characters: The social signal of smiles [Social Sciences]. IEEE Signal Processing Magazine, 30 (2), 128-132.

Ozogul, G., Johnson, A. M., Atkinson, R. K., & Reisslein, M. (2013). Investigating the impact of pedagogical agent gender matching and learner choice on learning outcomes and perceptions. Computers & Education, 67, 36-50.

Pertaub, D. P., Slater, M., & Barker, C. (2002). An experiment on public speaking anxiety in response to three different types of virtual audience. Presence: Teleoperators and Virtual Environments, 11 (1), 68-78.

Plant, E. A., Baylor, A. L., Doerr, C. E., & Rosenberg-Kima, R. B. (2009). Changing middle-school students' attitudes and performance regarding engineering with computer-based social models. Computers & Education, 53 (2), 209-215.

Qu, C., Brinkman, W. P., Ling, Y., Wiggers, P., & Heynderickx, I. (2014). Conversations with a virtual human: Synthetic emotions and human responses. Computers in Human Behavior, 34, 58-68.

Reeves, B., & Nass, C. (1996). The media equation: How people treat computers, television, and new media like real people and places (pp. 19-36). Cambridge, UK: CSLI Publications and Cambridge University Press.

Rehm, M., & André, E. (2005, July). Catch me if you can: Exploring lying agents in social settings. In Proceedings of the Fourth International Joint Conference on Autonomous Agents and Multiagent Systems (pp. 937-944). ACM.

Rosenberg-Kima, R. B., Baylor, A. L., Plant, E. A., & Doerr, C. E. (2008). Interface agents as social models for female students: The effects of agent visual presence and appearance on female students' attitudes and beliefs. Computers in Human Behavior, 24 (6), 2741-2756.

Schroeder, N. L., Adesope, O. O., & Gilbert, R. B. (2013). How effective are pedagogical agents for learning? A meta-analytic review. Journal of Educational Computing Research, 49 (1), 1-39.

Searls, D. B. (2012). Ten simple rules for online learning. PLoS Computational Biology, 8 (9), e1002631.

Tinwell, A., Grimshaw, M., Nabi, D. A., & Williams, A. (2011). Facial expression of emotion and perception of the Uncanny Valley in virtual characters. Computers in Human Behavior, 27 (2), 741-749.

Tsai, J., Bowring, E., Marsella, S., Wood, W., & Tambe, M. (2012, January). A study of emotional contagion with virtual characters. In Y. Nakano, M. Neff, A. Paiva, & M. Walker (Eds.), Intelligent Virtual Agents (pp. 81-88). Berlin Heidelberg: Springer.

Um, E., Plass, J. L., Hayward, E. O., & Homer, B. D. (2012). Emotional design in multimedia learning. Journal of Educational Psychology, 104 (2), 485-498.

Veletsianos, G. (2010). Contextually relevant pedagogical agents: Visual appearance, stereotypes, and first impressions and their impact on learning. Computers & Education, 55 (2), 576-585.

Veletsianos, G., & Russell, G. S. (2014). Pedagogical agents. In M. Spector, D. Merrill, J. Elen, & M. J. Bishop (Eds.), Handbook of Research on Educational Communications and Technology, 4th Edition (pp. 759-769). New York: Springer.

Wang, C. C., & Yeh, W. J. (2013). Avatars with sex appeal as pedagogical agents: Attractiveness, trustworthiness, expertise, and gender differences. Journal of Educational Computing Research, 48 (4), 403-429.

Wang, N., Johnson, W. L., Mayer, R. E., Rizzo, P., Shaw, E., & Collins, H. (2008). The politeness effect: Pedagogical agents and learning outcomes. International Journal of Human-Computer Studies, 66 (2), 98-112.

Watson, D., & Clark, L. A. (1999). The PANAS-X: Manual for the positive and negative affect schedule-expanded form. Iowa City: University of Iowa.

Wong, J. W. E., & McGee, K. (2012, January). Frown more, talk more: Effects of facial expressions in establishing conversational rapport with virtual agents. In Y. Nakano, M. Neff, A. Paiva, & M. Walker (Eds.), Intelligent Virtual Agents (pp. 419-425). Berlin Heidelberg: Springer.

Yılmaz, R., & Kılıç-Çakmak, E. (2012). Educational interface agents as social models to influence learner achievement, attitude and retention of learning. Computers & Education, 59 (2), 828-838.

Yung, H. I., & Paas, F. (2015). Effects of cueing by a pedagogical agent in an instructional animation: A cognitive load approach. Journal of Educational Technology & Society, 18 (3), 153-160.

Yusoff, M., & Zin, N. (2013). Exploring suitable emotion-focused strategies in helping students to regulate their emotional state in a tutoring system: Malaysian case study. Electronic Journal of Research in Educational Psychology, 11 (3), 717-742.

Zanbaka, C. A., Ulinski, A. C., Goolkasian, P., & Hodges, L. F. (2007, April). Social responses to virtual humans: Implications for future interface design. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 1561-1570). ACM.

The Effects of a Pedagogical Agent's Smiling Expression on the Learner's Emotions and Motivation in a Virtual Learning Environment by Tze Wei Liew, Nor Azan Mat Zin, Noraidah Sahari, and Su-Mae Tan is licensed under a Creative Commons Attribution 4.0 International License.