Brenda Cecilia Padilla Rodriguez¹ and Alejandro Armellini²

¹Universidad Autonoma de Nuevo Leon, Mexico; ²University of Northampton, UK

Although interaction is recognised as a key element for learning, incorporating it in online courses can be challenging. The interaction equivalency theorem provides guidelines: Meaningful learning can be supported as long as one of three types of interaction (learner-content, learner-teacher and learner-learner) is present at a high level. This study sought to apply this theorem to the corporate sector and to extend it to include other indicators of course effectiveness: satisfaction, knowledge transfer, business results and return on expectations. A large Mexican organisation participated in this research, with 146 learners, 30 teachers and 3 academic assistants. Three versions of an online course were designed, each emphasising a different type of interaction. Data were collected through surveys, exams, observations, activity logs, think aloud protocols and sales records. All course versions yielded high levels of effectiveness in terms of satisfaction, learning and return on expectations. However, course design did not dictate the types of interaction in which students engaged within the courses. Findings suggest that the interaction equivalency theorem can be reformulated as follows: In corporate settings, an online course can be effective in terms of satisfaction, learning, knowledge transfer, business results and return on expectations, as long as (a) at least one of three types of interaction (learner-content, learner-teacher or learner-learner) features prominently in the design of the course, and (b) course delivery is consistent with the chosen type of interaction. Focusing on only one type of interaction carries a high risk of confusion, disengagement or missed learning opportunities, which can be managed by incorporating other forms of interaction.

Keywords: Interaction; course effectiveness; online courses; interaction equivalency theorem

Interactions with the content, peers or the teacher have long been recognised as an essential component of any course. Their value in web-based courses has been reported by learners (Rhode, 2009) and teachers (Su, Bonk, Magjuka, Liu & Lee, 2005). Online interactions have been associated with higher satisfaction rates (Chang & Smith, 2008), higher course grades (Zimmerman, 2012) and fewer course dropouts (Lee & Choi, 2011). However, commercial organisations can be a challenging setting in which to create interactive online courses. In these settings, courses with limited opportunities for communication with others, such as ‘page turners’ and courses consisting mostly of static text, are common (e.g., Padilla Rodriguez & Armellini, 2013a; Padilla Rodriguez & Fernandez Cardenas, 2012; Welsh, Wanberg, Brown & Simmering, 2003).

Evaluating course effectiveness poses further challenges. While learning is usually considered the ultimate goal in academic settings, business executives in corporate environments tend to prioritise other indicators, such as knowledge transfer (Kirkpatrick & Kirkpatrick, 2010). Even so, comprehensive evaluations are uncommon in companies and tend to be limited to a satisfaction survey and a final exam (Kim, Bonk & Teng, 2009; Macpherson, Elliot, Harris & Homan, 2004; Vaughan & MacVicar, 2004). Sometimes the value of training is calculated using consumption metrics, such as the number of programmes offered and the duration of completed courses (Kirkpatrick & Kirkpatrick, 2010; Macpherson et al., 2004). Some organisations lack proper monitoring of training (Kim et al., 2009) and do not even track which employees have completed which courses (Vaughan & MacVicar, 2004).

This paper aims to provide an understanding of how people interact with content, teachers and peers in online courses, to evaluate course effectiveness comprehensively, and to identify successful course designs in a corporate setting. It addresses the above challenges by reporting on a research study of a large commercial food organisation (more than 6,000 employees) with 30 distribution centres and offices in Mexico. In 2012, this company wanted to improve the educational opportunities it offered its staff by using its technological resources to deliver effective, interactive online courses.

The interaction equivalency theorem (Anderson, 2003) provides guidelines for effective online course design through two theses: (1) Meaningful learning can be supported as long as one of three types of interaction (learner-content, learner-teacher or learner-learner) is present at a high level; the other two can be offered to a minimal degree, or not at all, without reducing the quality of learning. (2) High levels of more than one type of interaction are likely to provide more satisfying educational experiences, although the cost and design time these experiences require may make them less efficient. This theorem is attractive to organisations wishing to expand and improve their online programmes, as it addresses limitations in social interactions (i.e., between people) by suggesting that meaningful learning will occur if one of the other types of interaction can be maximised (Rhode, 2009).

Online learners tend to reject the idea of different types of interaction being equivalent or interchangeable (Padilla Rodriguez & Armellini, 2013a; Rhode, 2009). Nonetheless, perceptions may be different from actual behaviours and outcomes (e.g., Caliskan, 2009; Picciano, 2002). In a meta-analysis encompassing 74 studies, Bernard et al. (2009) reported that all three types of interactions are important for students’ academic achievement. The presence of interactions at high and moderate levels was preferable. This finding is consistent with the notion that a high level of at least one type of interaction supports meaningful learning.

Research comparing online course designs that emphasise different types of interaction provides further insights. Four groups participated in one such study (Russell, Kleiman, Carey & Douglas, 2009). Group 1 had a high level of social interaction (i.e., learner-teacher and learner-learner). Group 2 focused on learner-learner interactions. Group 3 had a teacher but no embedded means of communication between students; learner-teacher interactions happened via email. Group 4 had a high level of learner-content interaction, no discussion forums and minimal human support. As Anderson (2003) predicted, outcomes were comparable across all four course variations: participants rated the quality of all courses highly and achieved the expected objectives. Another study reported a similar finding (Tomkin & Charlevoix, 2014). Participants in a massive open online course were divided into two groups, one with a high level of learner-teacher interaction and one without it (but with high levels of learner-learner interaction). Completion rates, participation rates and students' perceptions of the course were similar across both groups.

A similar study was conducted in a large organisation (Padilla Rodriguez & Armellini, 2014). A group of employees took three different online courses, each emphasising a different type of interaction (learner-content, learner-teacher or learner-learner). Participants in all courses achieved the intended learning outcomes. They also reported being satisfied, feeling ready to apply the acquired knowledge in their workplace and having their expectations met. Nonetheless, these findings were based mostly on learners' perceptions, and they suggested that the relationship between designed interactions and course effectiveness is not as straightforward as the interaction equivalency theorem (Anderson, 2003) implies. This article presents the results of a subsequent study, which aimed to explore learners' interactions and to incorporate other indicators of course effectiveness, following Kirkpatrick's (1979) guidelines.

The four-level model of training effectiveness (Kirkpatrick, 1979) is the most widely used model in corporate online learning (DeRouin, Fritzsche & Salas, 2005; Peak & Berge, 2006). It evaluates course effectiveness in terms of (1) reactions (satisfaction), (2) learning (acquisition of knowledge and skills), (3) behaviours (performance in the workplace, or knowledge transfer) and (4) business results (organisational level outcomes, such as increased sales). A fifth level (return on investment) was subsequently added, and it later evolved into return on expectations, or the extent to which clients' expectations are met (Kirkpatrick & Kirkpatrick, 2010). To obtain a benchmark for comparison, Kirkpatrick (1979) suggests using either a control group or a pre-post approach when conducting evaluations.

Reports of online courses at organisations tend to be positive. Employees usually express positive reactions towards online courses (e.g., Gunawardena, Linder-VanBerschot, LaPointe & Rao, 2010; Joo, Kim & Park, 2011) and believe that online learning contributes to their personal development (Skillsoft, 2004; Vaughan & MacVicar, 2004). Learning outcomes are usually met (see review by DeRouin et al., 2005), although sometimes students just want to find specific information in the course and leave without completing it once they have learned what they need (Skillsoft, 2004; Welsh et al., 2003). While there is limited information about the application of levels three and four of Kirkpatrick’s (1979) model, some studies hint that online courses are useful to help improve job performance (e.g., Korhonen & Lammintakanen, 2005) and achieve business results (DeRouin et al., 2005).

Could the interaction equivalency theorem be supported when incorporating all of these indicators of course effectiveness? This paper addresses this issue. Specifically, data collection and analysis were guided by the following research questions:

Since 2004, course designers at the participating organisation had developed a face-to-face Leadership Programme, composed of eight five-hour courses, or modules. Four of these courses were selected for redesign and online delivery via the Moodle learning platform. The outcomes of the first three are reported in Padilla Rodriguez & Armellini (2014). The fourth one forms the basis of this study.

A pre-existing face-to-face course on Feedback on Performance was redesigned into three versions, each emphasising a different type of interaction (learner-content, learner-teacher or learner-learner). Planned outcomes matched Kirkpatrick’s (1979) levels of evaluation. Participants were expected to experience an enjoyable, interactive, practical online course (reactions), acquire theoretical knowledge on how to provide feedback on performance (learning), and apply this knowledge in their job with the members of their teams (behaviours). Students who completed the four online courses of the Leadership Programme were expected to increase the coverage of their monthly sales quota (business results).

All versions of the course required approximately five study hours. Their design incorporated ten reading texts, five non-assessed activities, a non-assessed final project focused on the application of knowledge in the workplace and a final exam. It also included a general discussion forum available as an open space for questions and comments, an ethics statement about the study, the objectives and structure of the course, and diagnostic and evaluation surveys. To test the first thesis of the interaction equivalency theorem (Anderson, 2003), the researchers attempted to design each version of the online course with a high level of only one type of interaction and low levels of the others. Course designers at the organisation validated all materials and activities. A brief description of each course version follows.

Designing and developing the version emphasising learner-content interactions required a major time investment, as content needed to be self-explanatory to compensate for the lack of social interaction. The researchers tried to anticipate every question students could ask when navigating through the course, and to provide answers. Reading texts fostered learner-content interactions by including hyperlinks, images and embedded podcasts. Activities required explicit, observable responses from the learners. Resources included multiple-choice questions with automated feedback; a poll that allowed students to see the group's responses; three podcasts with their transcripts; and a personal wiki, which served only as a space for students to write their reflections (blogs were blocked).

Besides the general discussion forum, there were no other embedded communication tools. The role of teachers was to monitor student progress without directly intervening. If required, teachers could use the general discussion forum to answer questions and clarify tasks.

Online activities in the version emphasising learner-teacher interactions followed Salmon's (2002) e-tivity framework, which promotes conversation between participants, and included examples of expected responses. To maintain the focus on learner-teacher interactions and to avoid inadvertently fostering peer exchanges, activity instructions referred specifically to teacher feedback. Sometimes teachers were asked to reply to each student; at other times they addressed the whole group by summarising contributions. Teachers were expected to be an active part of the course: maintaining contact, having a regular presence in online discussions (Dennen, Darabi & Smith, 2007), moderating online learning and providing guidance. Online tools included five discussion forums and a wiki per student, available only to the teacher and the learner. Reading materials did not include multimedia, as this could have increased the number of learner-content interactions.

Activities in the version emphasising learner-learner interactions also followed Salmon's (2002) e-tivity framework, which requires learners to comment on the work of others (thus generating interactive loops). All five activities included a discussion forum and examples. Participants were expected to post at least two messages under each activity: one with their solution to the task and another replying to others' contributions. No multimedia content was available in this version of the course. Teachers were advised to moderate ‘by exception’, that is, to stand back and let students interact among themselves.

Sales supervisors (n=146, 28 women and 118 men) participated as students of the Feedback on Performance course. Their ages ranged from 25 to 57, with a mean of 38 years. On average, they had worked at the organisation for five years. Most of them (≈62%) had undertaken some university study. Others (≈31%) had completed only high school (9-12 years of formal education), and a few (≈7%) had only secondary education (6-9 years).

In their daily jobs, sales supervisors were usually out in the field, visiting supermarkets and convenience stores, negotiating sales and talking to retailers in their work team. Most of them were not used to office work. Nine months before the study, sales supervisors had received a netbook computer with internet access. They had had weekly compulsory training to learn the basics of using this technology and how to learn online effectively. They were also enrolled in three previous online courses, all part of the Leadership Programme, each emphasising a different type of interaction (see Padilla Rodriguez & Armellini, 2014).

Learners were divided into 18 groups of between 5 and 16 participants (median = 8). The distribution was decided by the organisation, on the basis that these groups had worked together effectively in the past and should remain unchanged. The average student:teacher ratio was 5:1. Sales managers and directors (n=30, 2 women and 28 men) participated as teachers. The participating organisation selected them for this role mainly due to their experience in and knowledge of the topic.

Teachers’ age ranged from 27 to 55 with a mean of 41 years. Their average tenure with the organisation was six years. All but two of them had at least some university studies. They had taken part in training on how to perform as face-to-face and online teachers, and had experience in this role. They received a manual with guidance on how to act according to the course version they were teaching and specific examples of expected behaviours.

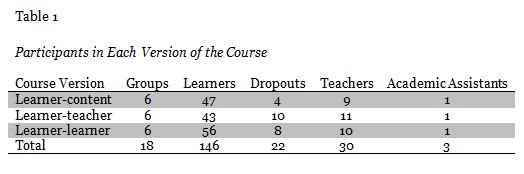

Three education staff members served as academic assistants to the courses, monitoring activities and providing general support for participants. Table 1 summarises the participants in each version of the Feedback on Performance course.

Human resources (HR) personnel from offices around Mexico participated by conducting observations of students. Administrative staff from the sales department provided access to sales records. Staff of the systems department (IT services) offered technical support.

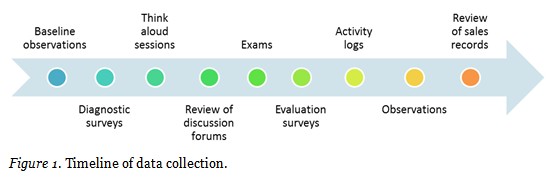

Data collection included several steps that incorporated Kirkpatrick’s (1979) suggestions of a pre-post approach. Figure 1 summarises this process.

Before the start of the Leadership Programme, HR staff located in the different offices around Mexico attended regular monthly sales meetings and observed the performance of sales supervisors running the meetings (49 students and 14 members of a control group). They used a guide created by the researchers to evaluate feedback-related behaviours using a 10-point scale.

At the beginning of each course version, a diagnostic survey was available online. It consisted of closed questions that gauged students' initial course expectations, previous knowledge of the topic and their perceived competence when providing feedback to their team members. Students' self-assessment of their own knowledge and performance served as a reference point for comparison with learning and behaviours after the course.

Students had one week to review the resources and complete all the activities. A convenience sample of eight students enrolled in the course version emphasising learner-content interactions participated in individual think aloud sessions. This method consisted of observing participants while they verbally articulated their behaviours, feelings and thoughts as they engaged with their online course. Throughout each session, the researcher's input was minimal, generally limited to prompts to keep talking when participants fell quiet (Young, 2005). Data were audio recorded and transcribed. Transcripts were coded and analysed using NVivo, a qualitative data analysis software package. Coding themes were based on the learning strategies students used when interacting with the content.

To gain insight into social interactions (learner-teacher and learner-learner), the researchers navigated through the discussion forums after the course had ended, noting trends, salient features and unusual behaviours.

A final exam with ten multiple-choice, matching and true-false questions evaluated knowledge acquisition at the end of the course. Five items related to a case that students had to analyse. After answering, participants could check their grades and feedback. A slightly different version of this exam, which included a brief information consent form and could be answered anonymously, was available for ten members of a control group, who were not enrolled in the course. Average grades for participants in each course version were calculated.

An evaluation survey included closed questions (5-point Likert scales) about perceived engagement with the activities, interactions with content, teacher and peers, and students' evaluation of the course in terms of satisfaction, learning, workplace behaviours and fulfilment of expectations. An additional question asked whether they had used the private messaging system of the online learning platform. Frequencies and percentages were calculated. Open questions in the same survey explored learners' favourite aspect of the course and their suggestions for improving it. Coding focused on references to the different types of interaction.

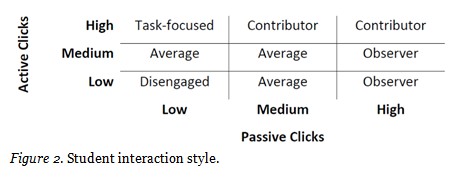

Moodle log entries provided additional information about all types of interaction within the course. Each log entry contained an action field and an information field, which together recorded that a click had occurred and what the user did. Entries were categorised as passive or active. Viewing a resource (e.g., a discussion forum, a wiki or a reading text) was considered passive; views of the front (landing) page of the course were excluded. Active contributions were clicks that resulted in an observable response (e.g., adding a message, editing a wiki or selecting a poll answer). Medians were obtained, and low, medium and high ranges were determined, with the medium range defined as the one that included 50% of participants.
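The categorisation of log entries can be sketched in code. This is a minimal illustration under stated assumptions, not the procedure the study used: the action labels in `PASSIVE_ACTIONS` and `ACTIVE_ACTIONS` are hypothetical placeholders (real Moodle log strings vary between versions), and the medium range is assumed here to be the interquartile range, since the text states only that it contained 50% of participants.

```python
from statistics import median, quantiles

# Hypothetical action labels: real Moodle log "action" strings differ between
# Moodle versions, so these sets are placeholders to adapt to an actual export.
PASSIVE_ACTIONS = {"forum view discussion", "wiki view", "resource view"}
ACTIVE_ACTIONS = {"forum add post", "wiki edit", "choice choose"}
FRONT_PAGE_ACTION = "course view"  # landing-page views are excluded


def count_clicks(log, roster):
    """Return {user: (passive, active)} from (user, action) log entries.

    Every enrolled user starts at zero, so learners who never clicked
    still appear in the result.
    """
    counts = {user: [0, 0] for user in roster}
    for user, action in log:
        if user not in counts or action == FRONT_PAGE_ACTION:
            continue  # ignore non-enrolled users and landing-page views
        if action in PASSIVE_ACTIONS:
            counts[user][0] += 1
        elif action in ACTIVE_ACTIONS:
            counts[user][1] += 1
    return {user: tuple(c) for user, c in counts.items()}


def summarise(values):
    """Return the median and a low/medium/high label for each value.

    Assumption: 'medium' is read as the middle 50% of participants
    (the interquartile range); the paper does not state the cut-offs.
    """
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    labels = ["low" if v < q1 else "high" if v > q3 else "medium" for v in values]
    return median(values), labels
```

In use, `summarise` would be applied separately to the passive counts and to the active counts, giving each learner one band for viewing and one for contributing. `statistics.quantiles` requires Python 3.8 or later and at least two values.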

Learners were characterised in terms of their online behaviours as measured by the number and type of clicks (Figure 2). Task-focused students are those for whom finishing tasks takes priority over reading support materials. Contributors are those typically considered ‘good students’ who review resources, complete activities and share outputs. Average learners are those who fulfil requirements to a minimum acceptable level. Observers spend more time looking at resources and activities than responding to them. Sometimes they are referred to as lurkers (Salmon, 2011). Finally, disengaged students are those who participate little or not at all. The percentage of each type of student was calculated.
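The mapping from click ranges to learner types is defined in Figure 2, which is not reproduced here. The sketch below is therefore a hypothetical approximation inferred only from the prose descriptions above: it takes each learner's passive and active bands (`'low'`, `'medium'` or `'high'`) and returns a profile, then calculates the percentage of learners of each type. The function names and decision rules are illustrative assumptions.

```python
from collections import Counter


def profile(passive, active):
    """Map passive/active click bands ('low', 'medium', 'high') to a learner type.

    Hypothetical rules inferred from the prose descriptions, not from Figure 2.
    """
    if passive == "low" and active == "low":
        return "disengaged"    # participates little or not at all
    if passive == "high" and active == "high":
        return "contributor"   # reviews resources, completes and shares activities
    if active == "high":
        return "task-focused"  # finishing tasks outweighs reading materials
    if passive == "high":
        return "observer"      # looks at more than it responds to
    return "average"           # meets requirements at a minimum acceptable level


def profile_shares(bands):
    """Percentage of learners in each profile, from (passive, active) band pairs."""
    counts = Counter(profile(p, a) for p, a in bands)
    return {name: round(100 * n / len(bands), 1) for name, n in counts.items()}
```

The rules are checked in order, so a learner with high values on both dimensions is classed as a contributor rather than as task-focused or an observer.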

After the end of the Leadership Programme, new observations were conducted at the monthly sales meetings. Average pre- and post-programme observation scores were compared, and these data were triangulated with students' perceptions of their own improvement.

The organisation provided access to sales records from 2012, before and after the Leadership Programme. These documents provided information about business results (level four of the effectiveness evaluation model; Kirkpatrick, 1979). Twenty-two students dropped out of the Leadership Programme at different stages or failed to complete it within the allocated time; where available, their sales records were included in the analysis. Average sales quota fulfilment was calculated for the three months before and the three months after the programme. Results for sales supervisors who successfully completed the Leadership Programme (n=101) and those who did not (n=38) were compared, and a Kolmogorov-Smirnov test was used to check whether differences between these two groups were statistically significant.
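A two-sample Kolmogorov-Smirnov test compares the full distributions of two groups rather than only their means. The sketch below shows the idea under stated assumptions: the input lists would hold one quota-fulfilment value per supervisor (the text does not say whether the test used post-programme figures or before/after changes), and the p-value uses a commonly applied asymptotic approximation with a small-sample correction rather than an exact distribution. Library implementations such as `scipy.stats.ks_2samp` are an alternative.

```python
from bisect import bisect_right
from math import exp, sqrt


def ks_two_sample(x, y):
    """Two-sample Kolmogorov-Smirnov statistic D and an approximate p-value."""
    xs, ys = sorted(x), sorted(y)
    n, m = len(xs), len(ys)
    # D: largest vertical gap between the two empirical CDFs,
    # checked at every observed value.
    d = max(abs(bisect_right(xs, v) / n - bisect_right(ys, v) / m) for v in xs + ys)
    # Asymptotic Kolmogorov distribution with a small-sample correction.
    en = sqrt(n * m / (n + m))
    lam = (en + 0.12 + 0.11 / en) * d
    if lam < 0.27:
        return d, 1.0  # the series value is indistinguishable from 1 here
    p = 2 * sum((-1) ** (j - 1) * exp(-2 * (j * lam) ** 2) for j in range(1, 101))
    return d, min(max(p, 0.0), 1.0)
```

For example, `ks_two_sample(completers, non_completers)` would return D and p for two hypothetical lists of per-supervisor values; a small p would indicate that the two distributions differ.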

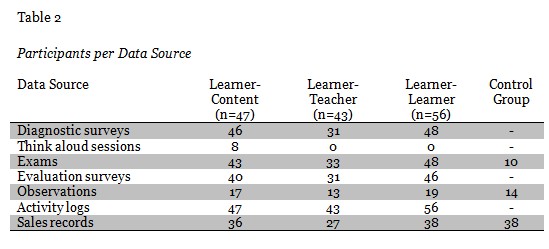

Table 2 matches each data source to the sample used in each group.

Results are grouped in terms of the topics of the research questions: participants’ interactions within the course, course effectiveness and the comparison between course designs.

Students were asked whether they had used Moodle's private messaging system. Most of them had sent at least one private message to another participant (learner-content [LC]: 14/18; learner-teacher [LT]: 8/14; learner-learner [LL]: 10/12), even though they had not been taught how to access it. Learners in all groups tended to rate peer and teacher participation favourably, expressing agreement with the following statements:

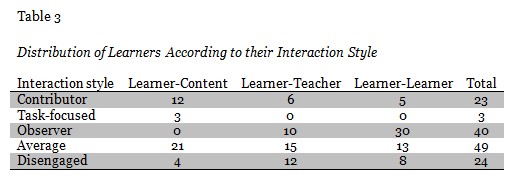

Engagement with the courses varied. In the versions with predominantly social interactions, online communications faced several problems, such as untimely answers. Students tended to become observers, checking discussion forums regularly. In the course emphasising learner-content interactions, more participants were contributors and task-focused (Table 3). This finding highlights the importance of delivery, which can influence the quality of interactions. The following sections detail the interactions in each course version.

In the version emphasising learner-content interactions, students could work at their own pace throughout the course, as they did not depend on others' input to move forward. Activity logs provided evidence of engagement with the content: most participants had an average interaction style (21/47) or were contributors (12/47; Table 3). Fewer than half of the students (19/47) checked optional resources such as glossary entries. Most students (35/40; 88%) reported being engaged or very engaged with the activities.

Think aloud data revealed that when interacting with the content, students used different strategies to make information relevant or personalised. For example:

Some students read superficially, skimming through the text. However, activities (i.e., devices designed to promote learner-content interactions) seemed to encourage them to go back and spend time on deeper readings.

During the think aloud sessions, six out of the eight participants had questions that were not answered by the content. At times, they would ask the researcher what to do. On other occasions they would read their own notes for answers. According to the activity logs, all but one student (46/47) checked the automated feedback received in at least one activity. Incorrect answers encouraged students to read again (e.g., “I think I have an incorrect answer… I am going to read again and then I will answer [again]”).

In the evaluation surveys, 29/40 respondents had no suggestions for improving the course, and three mentioned the importance of having embedded social interactions. Yet students rarely used the general discussion forum available for questions and comments: only six participants accessed it during the course.

Although this version of the course was not designed to foster social interaction, the think aloud sessions provided evidence of peer exchanges happening outside the online learning environment. In every session, work colleagues interrupted students, either by phone or face to face, to discuss job matters that were directly or indirectly related to the content of the course.

An early analysis of this course version is available in Padilla Rodriguez & Armellini (2013b).

In the version emphasising learner-teacher interactions, most students (28/31; 90%) reported being engaged or very engaged with the activities. While 23/31 participants had no suggestions for improving the course, three mentioned the importance of social interactions.

Messages reviewed in the discussion forums revealed that no real communication with the teachers happened online. Most teachers (8/11) simply did not participate in the course; they appeared to be under the impression that the course would teach itself. Three teachers provided feedback, but in an untimely manner (after the course was over). The quality of these belated comments, however, was generally high: Teachers referred to participants by their names, expressed their views concisely, provided examples, questioned, summarised and answered. Yet learners who received a response from the teacher did not engage in further dialogue, which raises doubts about whether they benefited from those comments.

Course activities required students to obtain teachers’ feedback to move forward. Learners checked the discussion forums regularly, and some of them (10/43) took the role of observers (Table 3). On average, each student viewed the five activity-related discussion forums 53 times and sent eight messages in total (ten was the minimum required). Many messages were left unanswered.

Although task instructions did not require it, some students responded to the lack of support by taking on the teacher's role themselves and replying to their peers. Other students responded to their peers superficially, often just to agree. Either way, learners moved forward regardless of the lack of online teacher participation.

Almost a third of students (12/43) were disengaged and reduced their efforts to the bare minimum (e.g., posting shallow messages and failing to complete activities). In the evaluation survey at the end of the course, one of the participants requested: “Let there be support from the facilitator.”

In the version emphasising learner-learner interactions, most students (44/46; 96%) reported being engaged or very engaged with the online activities, which required them to respond to others and to check others' comments on their contributions. Participants checked the discussion forums regularly, and most students (30/56) took the role of observers (Table 3). On average, each student viewed the six activity-related discussion forums 83 times and sent 12 messages, which is consistent with the minimum number of posts expected.

Some messages were left unanswered, and other answers were posted too late to benefit others. Teachers in this version of the course provided no clarification, although they were expected to moderate in cases of confusion. Messages reviewed in the discussion forums revealed that meaningful communication between learners was rare. Many replies consisted of brief comments agreeing with other participants' contributions, or of telegraphic, hard-to-interpret messages, as if students were posting only to fulfil the activities' requirements and tick the box. Learners who received a response from others rarely, if ever, replied.

Some participants did use the discussion forums to elaborate on the topic (e.g., “It may be very motivating to acknowledge the efforts of our collaborators, but it is more important the way in which we provide the feedback”), provide suggestions (e.g., “Your feedback is motivating, but you need to be more specific. If you generalise, you might neglect important details”), and show support (e.g., “You have the experience and skill to achieve [your goal]. Remember that each retailer has a different level of motivation, and you have the way of making them be motivated”).

Some learners found contributions from others beneficial. In the evaluation surveys, when asked about the aspect of the course they had enjoyed most, nine answers (out of 46) referred to learner-learner interactions. For example: “The participation of my course mates, which at the same time served me as feedback.”

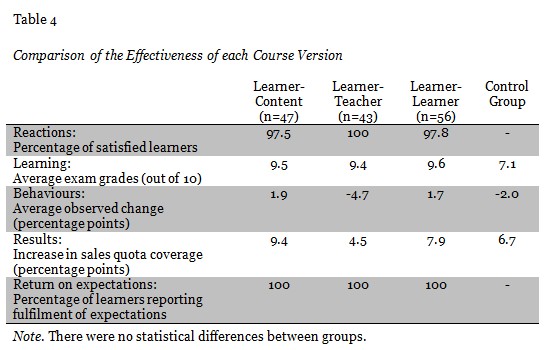

Course effectiveness was generally high, in line with studies reporting positive reactions towards online courses (Gunawardena et al., 2010; Joo et al., 2011), learning (DeRouin et al., 2005), improvement of job performance (Korhonen & Lammintakanen, 2005) and achievement of business results (DeRouin et al., 2005). Despite the issues identified above (i.e., unanswered questions, lack of feedback and shallow interactions), students managed to meet the intended course outcomes. Table 4 summarises the findings for each level of effectiveness and each course version.

Twenty-two employees dropped out of the courses, 18 of whom were enrolled in the versions emphasising social interactions. They may have felt disengaged by the mostly shallow and untimely online exchanges, or decided that they had learned what they needed before completion (Skillsoft, 2004; Welsh et al., 2003).

Participants’ initial course expectations encompassed all the effectiveness levels defined by Kirkpatrick (1979). Most learners (81-88%) expected to acquire and apply knowledge and to translate this into increased sales. At the end of the course, regardless of the type of interactions emphasised, all participants reported in the evaluation surveys that their expectations had been met. These data are in line with reports of other employees who perceive that online learning contributes to personal development (Skillsoft, 2004; Vaughan & MacVicar, 2004), and with students’ perceptions of being satisfied with their course. Participants in this research claimed they had learned and felt prepared to provide effective feedback to their collaborators.

Considering student satisfaction, learning outcomes and return on expectations, all course versions were equally effective, regardless of the type of interaction emphasised (Table 4). A Kruskal-Wallis test comparing the exam grades of learners across the three course versions found no statistically significant differences, which is consistent with previous studies (Bernard et al., 2009; Padilla Rodriguez & Armellini, 2014; Russell et al., 2009; Tomkin & Charlevoix, 2014). These results suggest that the interaction equivalency theorem (Anderson, 2003) is applicable in a corporate setting.
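The Kruskal-Wallis test compares groups through the ranks of their scores, so it does not assume that exam grades are normally distributed. A minimal sketch follows, assuming three groups of hypothetical grade lists (the individual grades are not reported here). It computes the tie-corrected H statistic and uses the fact that, with three groups, H is approximately chi-square distributed with two degrees of freedom, whose survival function is exactly exp(-H/2). Library implementations such as `scipy.stats.kruskal` handle any number of groups.

```python
from collections import Counter
from math import exp


def kruskal_wallis(*groups):
    """Kruskal-Wallis H (tie-corrected) and p-value for exactly three groups."""
    if len(groups) != 3:
        raise ValueError("the closed-form p-value below assumes 3 groups (df = 2)")
    pooled = sorted(v for g in groups for v in g)
    n = len(pooled)
    # Mid-ranks: tied values share the average of the ranks they occupy.
    first_pos, counts = {}, Counter(pooled)
    for pos, v in enumerate(pooled, start=1):
        first_pos.setdefault(v, pos)
    rank = {v: first_pos[v] + (counts[v] - 1) / 2 for v in counts}
    rank_sums = sum(sum(rank[v] for v in g) ** 2 / len(g) for g in groups)
    h = 12 * rank_sums / (n * (n + 1)) - 3 * (n + 1)
    # Correct H for ties; if every value is tied there is nothing to compare.
    c = 1 - sum(t**3 - t for t in counts.values()) / (n**3 - n)
    if c == 0:
        return 0.0, 1.0
    h /= c
    # Chi-square survival function with 2 degrees of freedom is exp(-x / 2).
    return h, exp(-h / 2)
```

A call such as `kruskal_wallis(lc_grades, lt_grades, ll_grades)`, with one hypothetical list per course version, returns H and p; a p-value above the chosen significance level (e.g., 0.05) would correspond to the non-significant result reported above.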

There were differences when considering behaviours and business results. Students in the course emphasising learner-teacher interactions performed worse than those in the other groups (Table 4). Since the disengaged teachers were also the students’ line managers, participants probably received no encouragement to apply what they had learned in their online courses in the workplace and to translate it into business results. Organisational support has been linked to knowledge transfer (Gunawardena et al., 2010; Joo et al., 2011).

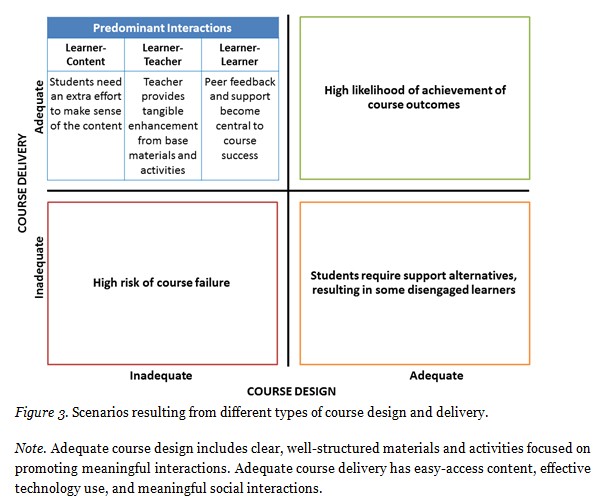

Delivery and online moderation posed new challenges for course effectiveness that had not been fully anticipated in the design phase. As described, these problems included learners having questions that the course materials did not answer, teachers being disengaged and students posting shallow contributions. Both design and delivery are important for effectiveness. Figure 3 illustrates possible scenarios for online courses emphasising a single type of interaction.

Note. Adequate course design includes clear, well-structured materials and activities focused on promoting meaningful interactions. Adequate course delivery involves easily accessible content, effective use of technology and meaningful social interactions.

When both design and delivery are adequate, course outcomes are highly likely to be achieved. When both design and delivery are inadequate, the course will probably fail. When design is adequate but delivery is not (as happened in this study in the course versions with predominantly social interactions), students will need additional sources of support, which they may find within or outside the course boundaries. Some learners will succeed in finding them; others will become disengaged. Conversely, good delivery can tangibly enhance an online course, even when its design is inadequate.

The interaction equivalency theorem (Anderson, 2003) applied in the corporate setting of this study: Deep, meaningful learning can be supported as long as one of three types of interaction (learner-content, learner-teacher or learner-learner) is present at a high level. Results from course versions emphasising different types of interactions showed that participants obtained similar exam grades and reported similar perceptions about their learning. Thus, this study tested and provided empirical support for the theorem in a commercial organisation.

This study considered indicators of course effectiveness relevant in organisational contexts: learner satisfaction, behaviours in the workplace, business results (Kirkpatrick, 1979) and return on expectations (Kirkpatrick & Kirkpatrick, 2010). All participants reported feeling satisfied with their courses and having their expectations fulfilled. Students in courses with predominantly learner-content or learner-learner interactions also showed improvement in their communication skills and increased sales. In courses emphasising learner-teacher interactions, most teachers were disengaged. While these students achieved the learning outcomes, their behaviours in the workplace and business results were weaker than those of participants in the other groups. The quality of course delivery is therefore crucial for success.

Findings suggest that the interaction equivalency theorem can be reformulated as follows: In corporate settings, an online course can be effective in terms of satisfaction, learning, knowledge transfer, business results and return on expectations, as long as it meets the following criteria: (a) at least one of three types of interaction (learner-content, learner-teacher or learner-learner) features prominently in the design of the course, and (b) course delivery is consistent with the chosen type of interaction.

Criterion (a) refers to what Anderson (2003) called a ‘high level’ of at least one of the three types of interaction. It implies designing multiple online activities that require observable responses from participants and generate interactions with the content, the teacher or other learners. Criterion (b) requires designers and other practitioners to consider course delivery, which should be planned and managed to maximise the benefit and impact of the predominant type of interaction designed into the course.

While designers and educators cannot control participants’ online behaviours or guarantee that interactions will be meaningful, they should provide sufficient opportunities for exchanges and ensure that support channels are available. In this study, learners were resourceful when faced with the disadvantages of the interactions designed into their courses. They engaged in informal, unplanned learning activities beyond course requirements, both online and offline. When the interactions embedded in their courses did not answer their questions, they looked for alternatives, such as reviewing their own notes, messaging others privately via Moodle or talking face to face with colleagues. These activities relate to all three types of interaction and can potentially affect course effectiveness.

Incorporating more than one type of interaction may compensate for the disadvantages of the chosen form of embedded interaction. This idea may appear similar to the second thesis of Anderson’s (2003) interaction equivalency theorem: High levels of more than one of the three types of interaction (learner-content, learner-teacher and learner-learner) are likely to provide a more satisfying educational experience, but at a higher monetary and time cost than less interactive courses. However, the recommendation here is to ensure that the course does not rely on a single type of interaction, rather than to include high levels of several types.

This reformulation of the interaction equivalency theorem provides guidelines for the design and delivery of effective online courses in organisations. It may be valuable for academics and practitioners interested in corporate online learning.

Special thanks to the following organisations in Mexico: National Council of Science and Technology (CONACYT), the government of Nuevo Leon, and the Institute of Innovation and Technology Transfer (I2T2).

Anderson, T. (2003). Getting the mix right again: An updated and theoretical rationale for interaction. The International Review of Research in Open and Distance Learning, 4(2). Retrieved from http://auspace.athabascau.ca:8080/dspace/bitstream/2149/360/1/GettingtheMixRightAgain.pdf

Bernard, R. M., Abrami, P. C., Borokhovski, E., Wade, C. A., Tamim, R. M., Surkes, M. A. & Bethel, E. C. (2009). A meta-analysis of three types of interaction treatments in distance education. Review of Educational Research, 79(3), 1243-1289.

Caliskan, H. (2009). Facilitators’ perception of interactions in an online learning program. Turkish Online Journal of Distance Education, 10(3), 193-203.

Chang, S.-H. H. & Smith, R. A. (2008). Effectiveness of personal interaction in a learner-centered paradigm distance education class based on student satisfaction. Journal of Research on Technology in Education, 40(4), 407-426.

Dennen, V. P., Darabi, A. A. & Smith, L. J. (2007). Instructor-learner interaction in online courses: The relative perceived importance of particular instructor actions on performance and satisfaction. Distance Education, 28(1), 65-79.

DeRouin, R. E., Fritzsche, B. A. & Salas, E. (2005). E-learning in organizations. Journal of Management, 31(6), 920-940.

Gunawardena, C. N., Linder-VanBerschot, J. A., LaPointe, D. K. & Rao, L. (2010). Predictors of learner satisfaction and transfer of learning in a corporate online education program. American Journal of Distance Education, 24(4), 207-226.

Joo, Y. J., Lim, K. Y. & Park, S. Y. (2011). Investigating the structural relationships among organisational support, learning flow, learners’ satisfaction and learning transfer in corporate e-learning. British Journal of Educational Technology, 42(6), 973-984.

Kim, K.-J., Bonk, C. J. & Teng, Y.-T. (2009). The present state and future trends of blended learning in workplace learning settings across five countries. Asia Pacific Education Review, 10, 299-308.

Kirkpatrick, D. (1979). Techniques for evaluating training programs. Training and Development Journal, 33(6), 78-92.

Kirkpatrick, J. D. & Kirkpatrick, W. K. (2010). Training on trial: How workplace learning must reinvent itself to remain relevant. New York, USA: AMACOM.

Korhonen, T. & Lammintakanen, J. (2005). Web-based learning in professional development: Experiences of Finnish nurse managers. Journal of Nursing Management, 13, 500-507.

Lee, Y. & Choi, J. (2011). A review of online course dropout research: Implications for practice and future research. Educational Technology Research and Development, 59(5), 593-618.

Macpherson, A., Elliot, M., Harris, I. & Homan, G. (2004). E-learning: reflections and evaluation of corporate programmes. Human Resource Development International, 7(3), 295-313.

Padilla Rodriguez, B. C. & Armellini, A. (2013a). Interaction and effectiveness of corporate e-learning programmes. Human Resource Development International, 16(4), 1-10.

Padilla Rodriguez, B. C. & Armellini, A. (2013b). Student engagement with a content-based learning design. Research in Learning Technology, 21(2013). http://dx.doi.org/10.3402/rlt.v21i0.22106

Padilla Rodriguez, B. C. & Armellini, A. (2014). Applying the interaction equivalency theorem to online courses in a large organisation. Journal of Interactive Online Learning, 13(2), 51-66.

Padilla Rodriguez, B. C. & Fernandez Cardenas, J. M. (2012). Developing professional competence at a Mexican organization: Legitimate peripheral participation and the role of technology. Procedia - Social and Behavioral Sciences, 69(2012), 8-13.

Peak, D. & Berge, Z. L. (2006). Evaluation and eLearning. Turkish Online Journal of Distance Education, 7(1), 124-131.

Picciano, A. G. (2002). Beyond student perceptions: Issues of interaction, presence, and performance in an online course. Journal of Asynchronous Learning Networks, 6(1), 21-40.

Rhode, J. F. (2009). Interaction equivalency in self-paced online learning environments: An exploration of learner preferences. International Review of Research in Open and Distance Learning, 10(1). Retrieved from http://www.irrodl.org/index.php/irrodl/article/viewArticle/603/1178

Russell, M., Kleiman, G., Carey, R. & Douglas, J. (2009). Comparing self-paced and cohort-based online courses for teachers. Journal of Research on Technology in Education, 41(4), 443-466.

Salmon, G. (2002). E-tivities: the key to active online learning. Sterling, VA: Stylus Publishing Inc.

Salmon, G. (2011). E-moderating: the key to online teaching and learning. New York, USA: Routledge.

Skillsoft. (2004). EMEA e-learning benchmark survey: The users’ perspective. Retrieved from http://www.oktopusz.hu/domain9/files/modules/module15/365BAEFCF22C27D.pdf

Su, B., Bonk, C. J., Magjuka, R. J., Liu, X. & Lee, S. (2005). The importance of interaction in web-based education: A program-level case study of online MBA courses. Journal of Interactive Online Learning, 4(1), 1-19.

Tomkin, J. H. & Charlevoix, D. (2014). Do professors matter?: Using an a/b test to evaluate the impact of instructor involvement on MOOC student outcomes. Proceedings of the First ACM Conference on Learning@Scale. Retrieved from http://dl.acm.org/citation.cfm?id=2566245

Vaughan, K. & MacVicar, A. (2004). Employees’ pre-implementation attitudes and perceptions to e-learning: A banking case study analysis. Journal of European Industrial Training, 28(5), 400-413.

Welsh, E. T., Wanberg, C. R., Brown, K. G. & Simmering, M. J. (2003). E-learning: Emerging uses, empirical results and future directions. International Journal of Training and Development, 7(4), 245-258.

Young, K. A. (2005). Direct from the source: The value of ‘think-aloud’ data in understanding learning. Journal of Educational Enquiry, 6(1), 19-33.

Zimmerman, T. D. (2012). Exploring learner to content interaction as a success factor in online courses. The International Review of Research in Open and Distance Learning, 13(4), 152-165.

© Padilla Rodriguez and Armellini