Royce M Kimmons

University of Idaho, United States

This study seeks to understand how to use formal learning activities to effectively support the development of open education literacies among K-12 teachers. Considering pre- and post-surveys from K-12 teachers (n = 80) who participated in a three-day institute, this study considers whether participants entered institutes with false confidence or misconceptions related to open education, whether participant knowledge grew as a result of participation, whether takeaways matched expectations, whether time teaching (i.e., teacher veterancy) impacted participant data, and what specific evaluation items influenced participants’ overall evaluations of the institutes. Results indicated that 1) participants entered the institutes with misconceptions or false confidence in several areas (e.g., copyright, fair use), 2) the institute was effective for helping to improve participant knowledge in open education areas, 3) takeaways did not match expectations, 4) time teaching did not influence participant evaluations, expectations, or knowledge, and 5) three specific evaluation items significantly influenced overall evaluations of the institute: learning activities, instructor, and website / online resources. Researchers conclude that this type of approach is valuable for improving K-12 teacher open education literacies, that various misconceptions must be overcome to support large-scale development of open education literacies in K-12, and that open education advocates should recognize that all teachers, irrespective of time teaching, want to innovate, utilize open resources, and share in an open manner.

Keywords: Open education; K-12; literacies; professional development

Despite decades of work in the area and hundreds of initiatives and research studies focused on utilizing technology to improve classroom teaching and learning, effective technology integration remains a “wicked problem,” complicated by diverse learning contexts, emerging technologies, and social trends that make formalized approaches to technology integration and theory development difficult (Kimmons, in press; Mishra & Koehler, 2007). Within this space, those intent upon improving K-12 teaching and learning with technology have had difficulty agreeing upon what constitutes effective integration, what the purposes of integration might be, and how such integration might help to solve some of the persistent problems plaguing educational institutions without falling prey to technocentric approaches to change (Papert, 1987).

In response to technocentrism, open education has arisen as an approach for integrating technology into the learning process with a vision for “building a future in which research and education in every part of the world are … more free to flourish” (Budapest Open Access Initiative, 2002, para. 8) and increasing “our capacity to be generous with one another” (Wiley, 2010, para. 39). That is, technology in open education is seen merely as a tool for encouraging and empowering openness. As such, open education encompasses a variety of movements and initiatives, including open textbooks (Baker, Thierstein, & Fletcher, 2009; Hilton & Laman, 2012; Petrides, Jimes, Middleton-Detzner, Walling, & Weiss, 2011) and other open educational resources (Atkins, Brown, & Hammond, 2007; OECD, 2007; Wiley, 2003), open scholarship (Garnett & Ecclesfield, 2012; Getz, 2005; Veletsianos & Kimmons, 2012), open access publishing (Furlough, 2010; Houghton & Sheehan, 2006; Laakso, 2011; Wiley & Green, 2012), and open courses (Fini, 2009; Kop & Fournier, 2010; UNESCO, 2002).

Most proponents of open education focus exclusively upon higher education, despite much excitement among teachers for expanding open practices to K-12 and preliminary evidence that open education can help to address persistent K-12 problems. Reasons for lack of spill-over into K-12 vary, but it is likely that this difference stems in part from the fact that change in K-12 must either occur at the highly bureaucratic state level or at the hidden local level, whereas higher education institutions and their professors have more flexibility to try innovative approaches and also enjoy greater visibility for sharing results. Nonetheless, advances are being made in bringing open practices to K-12 through both practice and research.

Perhaps the most well-known study in this regard was completed by Wiley, Hilton, Ellington, and Hall (2012), wherein they conducted a preliminary cost impact analysis on K-12 school use of open science textbooks and found that these resources may be a cost-effective alternative for schools if certain conditions are met (e.g., high volume). Beyond driving down costs, however, others have suggested that open education can help support the emergence of “open participatory learning ecosystems” (Brown & Adler, 2008, p. 31), can counterbalance the deskilling of teachers that occurs through the purchasing of commercial curricula (Gur & Wiley, 2007), and can provide a good basis for creating system-wide collaborations in teaching and learning (Carey & Haney, 2007). These potentials represent promising aims for K-12 and have even led to the development of open high schools intent upon democratizing education and treating access to educational materials as a fundamental human right (Tonks, Weston, Wiley, & Barbour, 2013).

However, it is also recognized that the shift to open is problematic for a number of reasons (Baraniuk, 2007; Walker, 2007), not least of which is the fact that K-12 teachers must develop new information literacies to become effective open educators (Tonks, Weston, Wiley, & Barbour, 2013), and little work has been done to study how to best support these professionals in developing literacies and practices necessary to embrace openness or to utilize and create their own open educational resources (cf. Jenkins, Clinton, Purushotma, Robinson, & Weigel, 2006; Rheingold, 2010; Veletsianos & Kimmons, 2012). If advocates of open education seek to diffuse open educational practices, then a lack of understanding in how to support literacy development among K-12 teachers is a clear problem. To combat this, this study seeks to move forward the state of the literature and practice on how to effectively train teachers in developing open education literacies.

As personnel in a center for innovation and learning at a public university in the United States, the researchers have taken on the challenge of improving K-12 teaching and learning in their state through effective technology integration and believe that open education may be a way forward for enacting real, scalable change in public K-12 schools. They also believe that open education can serve as an empowering vision that schools may use to move ahead with meaningful technology integration initiatives. However, open education is a new concept to most K-12 teachers and administrators, and knowledge and skills necessary for effectively utilizing and creating open educational resources are not standard topics of teacher education courses or professional development trainings.

As a result, the researchers have sought to push forward a new, grassroots initiative in their state focused upon helping K-12 personnel to develop the knowledge, skills, and attitudes necessary for becoming effective open educators. The first wave of this initiative consisted of conducting a series of Technology and Open Education Summer Institutes for K-12 teachers in the target state, wherein over one hundred teachers participated in a 3-day collaborative learning experience focused on learning about issues related to open education (e.g., copyright, copyleft, Creative Commons) and creating and remixing their own open educational resources.

As we conducted these institutes, we faced a number of challenges and uncertainties due to lack of previous work in this area. Some of these included wondering 1) whether participants entered the institutes with an accurate understanding of open education concepts, 2) whether such an institute setting could be effective for increasing teacher knowledge in this area, 3) whether participant takeaways would match their expectations, 4) whether time teaching or teacher veterancy had any impact on participant perceptions of the learning experience, and 5) what factors might influence an overall evaluation of the institute as a valuable learning experience.

In this study, we explored five research questions emerging from these concerns which will help to inform on-going efforts to promote open education practices in K-12. These questions included the following:

RQ1. Did participants enter the institute with false confidence or misconceptions related to open education concepts (e.g., copyright)?

RQ2. Did participant self-assessments of open education knowledge grow as a result of the institute?

RQ3. Did participant takeaways match initial expectations or change as a result of the institute?

RQ4. Did time teaching (i.e., teacher veterancy) have an effect on participants’ expectations, knowledge, or evaluation metrics?

RQ5. What specific evaluation items influenced participants’ overall evaluations of the institute?

As part of our mission to improve K-12 teaching and learning with technology, our research team conducted a series of three-day Technology and Open Education Summer Institutes with K-12 teachers in our state. Each institute involved up to 30 participants and was organized according to grade level, with two institutes focusing on elementary and two focusing on secondary education.

In total, over one hundred K-12 teachers from all over the target state participated in the summer institutes, representing all grade levels, a variety of subject areas, and all of the state’s educational regions (cf. Idaho State Department of Education, 2007). To our knowledge, there has never been any professional development experience quite like this attempted anywhere, in terms of subject, scale, scope, and diversity of participants, and this study extends prior work in this area by introducing and evaluating an approach to supporting K-12 teacher open education literacy development that is not bounded by a single school or subject area. The overarching institute vision was to help educators across the state to develop open education literacies that they could then take back to their schools for enacting change and supporting innovation in open educational practices. Such a grassroots, broad-spectrum approach to open education is unique and untested, and our goal was to yield research outcomes that could help us move forward with ongoing innovation in this area.

When applying to attend the institutes, potential participants identified subject areas and grade levels that were of most interest to them. If accepted, participants were then assigned to a professional learning community or PLC (DuFour, 2004) within their institutes that was focused on their subject area and/or specific grade level. This meant that though each institute was either focused on elementary or secondary education, each participant had a focused experience in one of five PLCs. PLC focus areas varied by institute but typically included subject area specialization (e.g., science, mathematics).

The actual structure of learning activities at each institute was also atypical as compared to most K-12 professional development experiences. Each institute consisted of roughly 3 phases or days. Day One was more traditional in the sense that it was largely instructor-centered and focused on presentations, provocative videos, and class-wide discussions. During Day One, a small portion of the time was also devoted to helping participants to get to know their PLCs and to begin making plans for how they would work together through the institute. Day Two was completely different. At the start, participants immediately took a few minutes for a planning session with their PLCs to set goals and to gather thoughts from the day before and then began a series of development sprints where each PLC worked together to create open educational resources that would be valuable to their members’ schools and classrooms. During Day Two, the instructor interjected occasionally to provide guidance and support, but all learning and activities were driven by the goals established by each PLC autonomously. During Day Three, the PLCs were given time to wrap up their projects, the instructor provided final guidance on sharing, and each PLC presented their products to the larger group and also made their resources available to the public on the web.

Throughout this process, technology was heavily used to support collaboration and communication. The open course website was made available to participants and the public before the institute began and remains open and available indefinitely (Kimmons, 2014). This decision was surprising to participants, who were accustomed to professional development experiences where information was initially provided but severed upon completion. Making information and resources perpetually available to participants gave them more freedom to focus on working on their own products and critically evaluating learning experiences as opposed to spending time laboriously taking notes in preparation for the time when access to information resources would cease.

Within the lab space utilized for the institutes, each PLC was assigned to a horseshoe-shaped table with a display switching matrix and large-screen interactive display along with personal computing devices to connect into their tables. This allowed each participant to wirelessly access information resources and work on institute materials individually but also to work within the context of a group setting where they could autonomously and effectively collaborate, share, and present their information to other group participants. Throughout this process, collaborative document creation software (i.e., Google Drive) was used so that participants could work on the same documents simultaneously and share resources in a common, cloud-based folder.

Before these institutes, many participants had never used these types of software and hardware tools, and most had never used them in a synchronous, collaborative setting. Furthermore, the lab also provided access to a variety of other cutting-edge technologies like an interactive table, wearable devices, a telepresence videoconferencing robot, and kinesthetic learning games, which participants were given opportunities to try out during Technology Exploration sessions while considering their applications for local school use.

Though a variety of technologies were provided, technology was not the focus of the institutes but was rather a tool that was used to inspire participants to think creatively and to collaborate in open ways. Technology was also used as a marketing tool for the institute: it was anticipated that most teachers would have had little exposure to, and thus little initial interest in, the unknown topic of open education, but it was expected that access to new technologies would motivate interest in the institutes.

This study employed a longitudinal survey design methodology (Creswell, 2008) to collect and analyze data from institute participants before and after the institute. This method was deemed to be appropriate, because research questions lent themselves to quantitative analysis of trends among institute participants over the course of the three-day experience.

Survey respondents included eighty (n = 80) participants in the targeted Technology and Open Education summer institutes. In total, over one hundred K-12 educators participated in the institutes, but not all elected to participate in the study. Participants were predominantly female, reflecting an uneven gender distribution of the K-12 labor force in the target state, came from all geographic regions of the target state, and were generally veteran teachers (72% having taught for five or more years). More detailed participant demographic information was not collected, because it was deemed unnecessary to answer the research questions.

Throughout the institutes, both quantitative and qualitative feedback was elicited from participants, but this report deals primarily with quantitative results. Data sets for this study included two online surveys: one conducted immediately before the institute and one conducted immediately after the institute.

Both surveys were delivered online, and participants completed them by following a link on their personal or provided laptops or mobile devices while at the institute. Surveys consisted of a number of questions that may be categorized as eliciting one of the following:

The pre-survey consisted of two factual questions, one knowledge question, and one expectation question.

The knowledge question consisted of six separate items and yielded a reliable Cronbach’s alpha of .74.

The post-survey consisted of two knowledge questions, five evaluation questions, one expectation question, and three open response questions:

Knowledge questions each consisted of six separate evaluations and yielded a reliable Cronbach’s alpha of .86. Evaluation questions consisted of fifteen total items and yielded a reliable Cronbach’s alpha of .85.
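As an illustration of the reliability statistic reported here, the following minimal sketch computes Cronbach's alpha from the standard formula (the number of items, the item variances, and the variance of participants' summed scores). The function name and the small response matrix are hypothetical and made up for illustration; they are not the study's data or code.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of per-participant item scores.

    responses: list of lists, one inner list per participant,
    one Likert score per item (hypothetical layout).
    """
    k = len(responses[0])  # number of items in the scale
    # Sample variance of each item across participants
    item_vars = [variance([r[i] for r in responses]) for i in range(k)]
    # Sample variance of each participant's summed score
    total_var = variance([sum(r) for r in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Made-up scores for five participants on a three-item scale
example = [[1, 2, 1], [2, 2, 3], [3, 4, 3], [4, 4, 5], [5, 5, 5]]
alpha = cronbach_alpha(example)
print(round(alpha, 2))
```

Values closer to 1 indicate that the items vary together; the thresholds used to call a scale reliable are a matter of convention.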

A complete response was determined by the presence of both a pre-survey and post-survey for each participant. Since all study participants were encouraged to complete surveys on-site, the response rate was high (80%), and missing surveys likely reflected improper entry of unique identification numbers or accidental failure to complete one survey.

Data from the pre-survey and post-survey were merged using a unique identifier provided by participants in each survey. Participant data that did not include both surveys were considered incomplete and were excluded from analysis. If multiple responses existed for participants, timestamps were used to select the earliest submission for the pre-survey (to avoid post-surveys mistakenly taken as pre-surveys) and the latest submission for the post-survey (to avoid pre-surveys mistakenly taken as post-surveys). All other submissions were discarded. Several tests were run on the data to answer the research questions; the hypotheses and accompanying test(s) for each research question are described below.
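The merging rule described above can be sketched as follows. This is only an illustration under assumed names: the record fields (`id`, `submitted`, `copyright`), the function name, and the timestamps are hypothetical and do not come from the study's actual data files.

```python
from datetime import datetime


def merge_surveys(pre_rows, post_rows):
    """Pair each participant's earliest pre-survey with their latest post-survey."""

    def pick(rows, chooser):
        # Group submissions by participant identifier
        by_id = {}
        for row in rows:
            by_id.setdefault(row["id"], []).append(row)
        # chooser is min (earliest) or max (latest) by timestamp
        return {
            pid: chooser(group, key=lambda r: r["submitted"])
            for pid, group in by_id.items()
        }

    pre = pick(pre_rows, min)
    post = pick(post_rows, max)
    # Keep only participants who have both surveys (complete responses)
    return {pid: (pre[pid], post[pid]) for pid in pre.keys() & post.keys()}


# Made-up submissions: A1 submitted the pre-survey twice, B2 never took the post-survey
pre_rows = [
    {"id": "A1", "submitted": datetime(2000, 1, 1, 8, 0), "copyright": 4},
    {"id": "A1", "submitted": datetime(2000, 1, 3, 16, 0), "copyright": 2},
    {"id": "B2", "submitted": datetime(2000, 1, 1, 8, 5), "copyright": 3},
]
post_rows = [
    {"id": "A1", "submitted": datetime(2000, 1, 3, 16, 30), "copyright": 2},
    {"id": "C3", "submitted": datetime(2000, 1, 3, 16, 35), "copyright": 1},
]
merged = merge_surveys(pre_rows, post_rows)
print(sorted(merged))
```

In this toy example only participant A1 survives the merge, and the earlier of A1's two pre-survey submissions is the one retained.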

H0: There was no difference between self-evaluations of prior knowledge collected before the institute and after the institute.

H1: Self-assessments of prior knowledge collected before the institute differed from self-assessments of prior knowledge collected after the institute.

In the pre-survey, participants were asked “How well do you understand each of the following concepts or movements?” and then were expected to self-evaluate their understanding of six open or general education knowledge domains (“Common Core”, “open education,” “copyright,” “fair use,” “copyleft,” and “public domain”) according to a 5-point Likert scale. It was believed that participants might initially rate themselves one way on these knowledge areas but that upon completion of the institute, they might come to realize that their initial self-assessments were incorrect. For this reason, the post-survey included the same question, which was reworded as follows: “How well did you understand each of the following concepts or movements before the institute?” These data were analyzed using paired samples T-tests on each knowledge domain to determine if there was a significant difference between pre-survey assessments of prior knowledge and post-survey assessments of prior knowledge with the expectation that a negative change would reflect a realization on the part of participants that their initial self-assessments had been overstated or based upon a misconception of what the knowledge domain entailed. When completing the post-survey, participants were not given access to their pre-survey assessments, which required them to self-evaluate without reference to their former assessments. In this analysis, the phrase “false confidence and misconceptions” is used to inclusively address all possibilities wherein a participant’s pre-survey assessment of prior knowledge does not match her post-survey assessment of prior knowledge and would include instances where participants might have forgotten the complexity of a topic.
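To make the comparison concrete, a paired-samples t statistic for one knowledge domain can be sketched as below. The ratings are invented for illustration, the function name is hypothetical, and the sketch stops at the t statistic and degrees of freedom; obtaining a p-value would require a t-distribution from a statistics package, which is assumed rather than shown.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(first, second):
    """Return (t, degrees of freedom) for paired ratings from the same participants."""
    diffs = [s - f for f, s in zip(first, second)]
    n = len(diffs)
    # Mean difference divided by its standard error
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


# Made-up 5-point ratings of prior knowledge for six participants
pre_survey_prior = [4, 3, 4, 5, 3, 4]    # rated before the institute
post_survey_prior = [2, 3, 3, 3, 2, 3]   # retrospective rating after the institute
t, df = paired_t(pre_survey_prior, post_survey_prior)
print(round(t, 2), df)
```

A negative t here corresponds to the pattern described above: participants rating their prior knowledge lower in retrospect than they did at the outset.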

H0: Participants reported no knowledge growth as a result of the institute.

H1: Participants reported knowledge growth as a result of the institute.

In the post-survey, participants were also asked to self-assess their final knowledge with the question “How well do you understand each of the following concepts or movements now?” in connection with the six open education knowledge domains mentioned above and were provided with the same 5-point Likert scale. Two sets of paired samples T-tests were run: one comparing pre-survey prior knowledge with post-survey final knowledge and the other comparing post-survey prior knowledge with post-survey final knowledge. It was anticipated that if knowledge growth occurred, both of these sets of tests would reveal significant differences.

H0: Valued takeaways from the institute matched initial expectations.

H1: Valued takeaways from the institute did not match initial expectations.

In the pre-survey, participants were asked “What do you hope to gain from this institute (please rank with the most valuable at the top)?” and were provided with the following four items:

All of these were topics addressed in the institute. In the post-survey, participants were again asked to rank these same four items in accordance with this question: “What was the most valuable knowledge or skills that you gained from this institute (please rank from most valuable to least)?” Paired samples T-tests were then run on each item with the expectation that a change in average ranking of an item would reflect a difference between participants’ initial expectations of the institute and actual takeaways.

H0: Time teaching has no effect on expectation, knowledge, or evaluation metrics.

H1: Time teaching has an effect on expectation, knowledge, or evaluation metrics.

In the pre-survey, participants were asked “How long have you been teaching?” and were provided with the following three options: “1 year or less,” “2-5 years,” or “more than 5 years.” A one-way ANOVA with Bonferroni post hoc test was then run with time teaching as the factor and each expectation, knowledge, and evaluation item from the pre-survey and post-survey as a dependent variable. It was expected that this test would reveal any cases where time teaching had an effect on survey outcomes.
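The following sketch shows how a one-way ANOVA F statistic could be computed for a single survey item grouped by time teaching, along with the Bonferroni-adjusted significance level for the pairwise follow-up comparisons. The scores are made up, the function names are hypothetical, and the sketch omits the p-value lookup that a statistics package would provide.

```python
from itertools import combinations
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a dict of group name -> scores."""
    all_values = [v for scores in groups.values() for v in scores]
    grand_mean = mean(all_values)
    # Variation of group means around the grand mean
    ss_between = sum(
        len(scores) * (mean(scores) - grand_mean) ** 2 for scores in groups.values()
    )
    # Variation of individual scores around their own group mean
    ss_within = sum(
        (v - mean(scores)) ** 2 for scores in groups.values() for v in scores
    )
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


def bonferroni_alpha(groups, alpha=0.05):
    """Per-comparison alpha when every pair of groups is compared post hoc."""
    pairs = list(combinations(groups, 2))
    return alpha / len(pairs), pairs


# Made-up self-assessments on one item, grouped by the three survey options
scores = {
    "1 year or less": [2, 3, 2],
    "2-5 years": [3, 4, 4],
    "more than 5 years": [4, 5, 4],
}
f, df_b, df_w = one_way_anova(scores)
adjusted_alpha, pairs = bonferroni_alpha(scores)
print(round(f, 2), df_b, df_w, round(adjusted_alpha, 4))
```

With three groups there are three pairwise comparisons, so the Bonferroni correction divides the nominal alpha by three.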

H0: There is no linear correlation between participants’ overall evaluations and specific evaluation items.

H1: There is a linear correlation between participants’ overall evaluations and specific evaluation items.

In the post-survey, participants were asked “How would you rate this institute?” and were then expected to evaluate the institute overall and in ten specific evaluation items according to a 5-point Likert scale. Categories included: instructor, support staff, schedule / organization, learning activities, your PLC, tech explorations, website / online resources, lab / venue, food / refreshments, and lodging. A stepwise linear regression model was then used with overall evaluation as the dependent variable and all ten specific evaluation items as the independent variables to determine whether linear correlations existed between specific evaluation items and the overall score, thereby revealing which specific evaluation items informed the overall rating.
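The idea behind stepwise selection can be sketched with a simplified forward-selection loop. Note the assumptions: this sketch adds predictors by gain in R² against an arbitrary threshold (`min_gain`), whereas statistical packages typically use significance-based entry and removal criteria; it is not the procedure or software used in the study. All function names and ratings are hypothetical.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def r_squared(columns, y):
    """R^2 of an ordinary least squares fit of y on the columns plus an intercept."""
    n = len(y)
    design = [[1.0] + [col[i] for col in columns] for i in range(n)]
    k = len(design[0])
    xtx = [[sum(row[i] * row[j] for row in design) for j in range(k)] for i in range(k)]
    xty = [sum(design[r][i] * y[r] for r in range(n)) for i in range(k)]
    beta = solve(xtx, xty)
    fitted = [sum(b * v for b, v in zip(beta, row)) for row in design]
    mean_y = sum(y) / n
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


def forward_select(predictors, y, min_gain=0.02):
    """Add predictors one at a time while R^2 improves by at least min_gain."""
    chosen, best = [], 0.0
    remaining = list(predictors)
    while remaining:
        trial = {
            name: r_squared([predictors[c] for c in chosen] + [predictors[name]], y)
            for name in remaining
        }
        name = max(trial, key=trial.get)
        if trial[name] - best < min_gain:
            break
        chosen.append(name)
        best = trial[name]
        remaining.remove(name)
    return chosen, best


# Made-up ratings: overall is built from activities and instructor only
ratings = {
    "activities": [5, 4, 5, 3, 4, 5, 2, 4],
    "instructor": [5, 5, 4, 4, 3, 5, 3, 4],
    "lodging": [3, 4, 3, 4, 3, 4, 3, 4],
}
overall = [0.5 * (a + i) for a, i in zip(ratings["activities"], ratings["instructor"])]
chosen, r2 = forward_select(ratings, overall)
print(chosen, round(r2, 3))
```

In this toy data the lodging item adds essentially nothing once the two informative items are in the model, so it is left out, mirroring how non-contributing evaluation items are excluded from a stepwise model.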

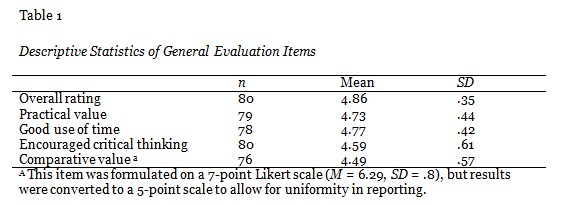

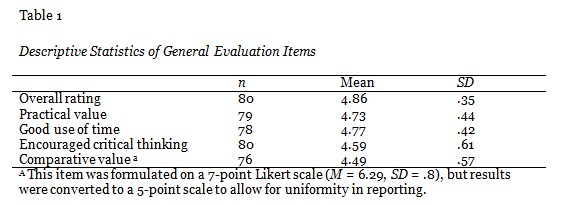

Descriptive statistics revealed that participants believed their institutes to be highly valuable and effective. The average participant overall rating for the institute was 4.86 on a 5-point Likert scale; 44% of participants believed their institute was the best professional development experience they had ever had, and another 44% believed that it was much better than most other professional development experiences in which they had participated. In their evaluations, participants rated all aspects of the institute highly, and participants strongly agreed that the institutes were a good use of their time, that they were of practical value to their classroom practice, and that the institutes encouraged them to think critically about technology integration (cf. Table 1). Findings emerging from statistical analysis related to each research question now follow.

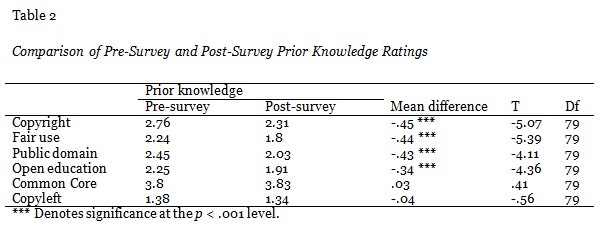

The comparison of pre-survey prior knowledge with post-survey prior knowledge yielded a number of significant differences between how participants initially evaluated their knowledge on topics related to open education and how they later came to assess their prior knowledge. In the cases of open education, copyright, fair use, and public domain, participants’ self-assessments went down in the post-survey, so we must reject the null hypothesis and conclude that self-assessments differed significantly before and after the institute for these cases (cf. Table 2). This finding suggests that initial participant self-assessments might have been based on false confidence or misconceptions about what the terms meant, but that as participants became more familiar with terms through the institutes, they came to recognize how little they actually knew before entering the institute. Differences on Common Core and copyleft were not significant, suggesting that the institute did not change participant understanding of what these terms meant (as is likely the case with Common Core) or that participants had no prior knowledge of the term (as is likely the case with copyleft).

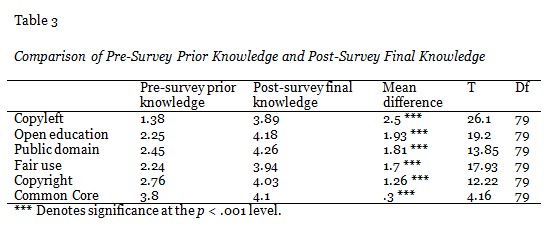

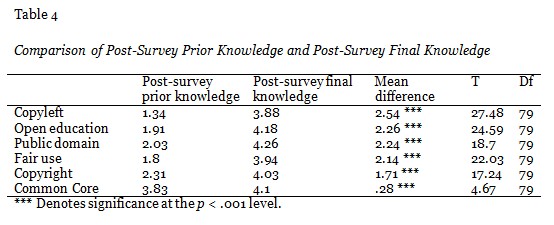

The comparison of pre-survey prior knowledge with post-survey final knowledge and also the comparison of post-survey prior knowledge with post-survey final knowledge yielded significance in every case (cf. Table 3 and Table 4). Thus, we must reject the null hypothesis and conclude that participants reported knowledge growth as a result of the institute in every domain.

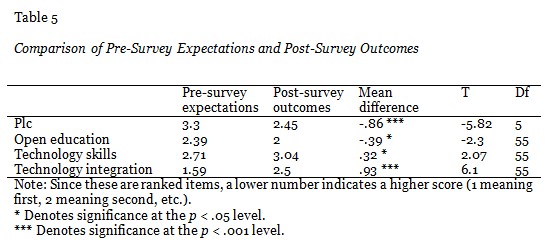

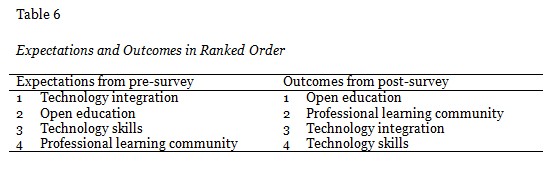

The comparison of pre-survey expectations with post-survey outcomes yielded significant results in every case (cf. Table 5). Thus, we must reject the null hypothesis and conclude that valued takeaways did not match initial participant expectations.

To clarify this finding further, if we were to list expectations and outcomes in accordance with their rankings, we would see that the largest changes occurred in the cases of technology integration, wherein participants expected to learn about technology integration but did not count it as a valuable outcome, and PLCs, wherein participants did not expect their PLCs to be valuable but then evaluated them highly as an outcome (cf. Table 6).

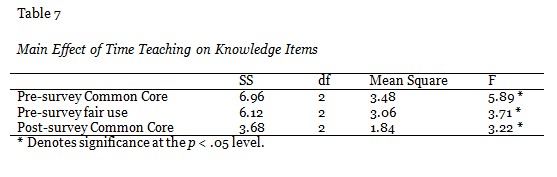

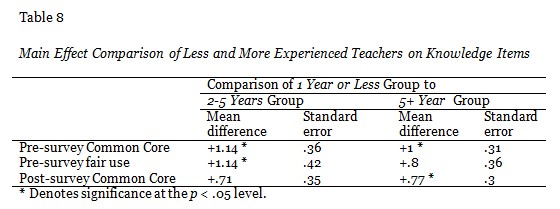

ANOVA tests on knowledge items generally did not reveal differences between participants when grouped according to time teaching or teacher veterancy. The only significant main effects between groups were found on the Common Core and fair use items in the pre-survey and on the Common Core item in the post-survey (cf. Table 7). Bonferroni post hoc tests revealed that this difference can be attributed to the least experienced teaching group, which self-assessed lower than more experienced groups in all three metrics, with an average difference ranging between .71 and 1.14 points on the 5-point scale (cf. Table 8).

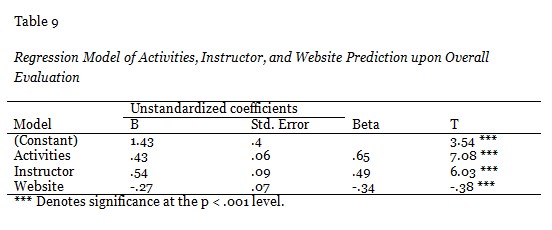

Participants rated sessions highly across all ten specific evaluation items, but the stepwise linear regression revealed that three specific evaluation items (activities, instructor, and website) significantly predicted overall ratings (cf. Table 9). The regression model for all three of these predictors also explained a significant proportion of variance in overall ratings, R² = .649, F(3, 68) = 41.94, p < .001. Of these factors, activities and instructor had a positive linear correlation with overall ratings, while website had a negative linear correlation. All other factors were excluded from the regression model due to lack of significance.
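As a rough consistency check on the reported model statistics, the overall F statistic of a regression can be recovered from its R² and degrees of freedom using the standard identity F = (R² / k) / ((1 - R²) / df_residual). The sketch below applies that identity to the rounded values reported above; the function name is hypothetical.

```python
def f_from_r_squared(r2, n_predictors, df_residual):
    """Overall regression F statistic implied by R^2 and the degrees of freedom."""
    explained_per_df = r2 / n_predictors
    unexplained_per_df = (1 - r2) / df_residual
    return explained_per_df / unexplained_per_df


# Reported values: R^2 = .649 with 3 predictors and 68 residual degrees of freedom
f_implied = f_from_r_squared(0.649, 3, 68)
print(round(f_implied, 2))
```

The implied value is about 41.9, close to the reported 41.94; the small gap is plausibly due to R² having been rounded to three decimal places.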

A variety of implications arise from these findings. First, it may be concluded that the institutes were considered to be a valuable learning experience for participants and that utilizing this type of approach for developing open education literacies in practicing teachers can yield positive results and help to address this need (cf. Tonks, Weston, Wiley, & Barbour, 2013). This finding was corroborated in the knowledge growth analysis, which found that participants’ self-evaluations on specific knowledge items increased significantly both when comparing pre-survey prior knowledge with post-survey final knowledge and when comparing post-survey prior knowledge with post-survey final knowledge. Making both of these comparisons allowed us to determine more surely that participants’ knowledge grew than would have been possible by simply asking participants to reflect on their learning.

Second, it seems that part of the challenge with open education revolves around misconceptions and false confidence related to key components. It is telling that participants changed their initial ratings of themselves on knowledge of copyright, fair use, public domain, and open education between the pre-survey and the post-survey and rated themselves lower on prior knowledge after having experienced the institute. This corroborates our anecdotal findings that teachers tend to believe that they understand what these concepts mean and what they entail, but that upon examination and the completion of focused learning activities, participants come to recognize that they did not understand the concepts very well to begin with. This is problematic for open education, because it is difficult to appeal to a need when teachers do not recognize that a need exists. If teachers already believe that they understand copyright and fair use, for instance, then they have no impetus to learn about these concepts and may consider themselves to be open educators when in fact they have very little understanding of what this entails and what it means to share in open ways utilizing copyleft or Creative Commons licensing. Further research in this area would be valuable for gaining a more nuanced understanding of misconceptions and false confidence via qualitative analysis, but such analyses were beyond the scope of the current study and were not essential for answering the research questions.

Third, we found it noteworthy that perceived importance of both open education and professional learning communities increased through the course of the institutes, while importance of technology integration and skills decreased. This suggests that if we truly seek to create open participatory learning ecosystems (Brown & Adler, 2008, p. 31), teachers need experiences like these institutes that allow them to experience collaborations with other teachers in an open manner. It seems dubious that system-wide collaborations (Carey & Haney, 2007) can occur otherwise, because open education requires teachers to rethink fundamental aspects of how they operate as educators, to reevaluate basic collaborative practices, and to share in ways that may be new and uncomfortable. In addition, as teachers recognize collaborative potentials with one another across traditional school and district boundaries and recognize that they have value to contribute to the profession through sharing, this may help to counteract deskilling influences upon teachers, wherein they are relegated to serving as technicians rather than professionals (Gur & Wiley, 2007).

Fourth, though there is no theoretical basis for assuming that innovation adoption is correlated with age factors (cf. Rogers, 2003), it has been our experience that many advocates for innovation and technology integration resort to a narrative of innovation which considers younger teachers to be more willing to innovate than their more experienced peers. Our findings, however, reveal that time teaching had no impact on participants’ expectations of the institutes or their evaluations of the experience, which means that veteran teachers responded just as positively to the learning activities as did their less experienced counterparts. The only significant differences we found related to two knowledge items: Common Core (pre-survey and post-survey) and fair use (pre-survey only). In the case of Common Core, it makes sense that more veteran teachers would self-assess higher than less experienced teachers, because they have had more experience teaching and adapting to new standards or ways of teaching and also work in districts that have devoted a sizable amount of training to Common Core, while the less experienced teachers would have just recently completed their teacher education programs and likely would not have completed many district or school level trainings.

The difference with fair use, on the other hand, reveals that veteran teachers entered the institute with greater perceived knowledge of fair use than did their novice counterparts but that this difference disappeared by the end of the institute. This means that either veteran teachers truly began the institute with a greater knowledge of fair use than their novice counterparts or they had more false confidence in this regard. Given that training on copyright and fair use is uncommon for teachers, we believe that the latter interpretation is likely more accurate and that as teachers spend time in the classroom and use copyrighted works, they develop a false sense of confidence related to fair use. This interpretation is corroborated by the fact that when novice teachers and veteran teachers self-assessed their prior knowledge on the post-survey, differences between groups disappeared, meaning that after participants had focused training related to fair use, they rated themselves equally low on initial knowledge. This is problematic, because it suggests that as teachers gain experience in the classroom, they also develop a false sense of confidence related to fair use and therefore likely begin utilizing copyrighted materials in ways that may not be permissible. This also means that although the development of open education literacies is essential for ongoing diffusion (Tonks, Weston, Wiley, & Barbour, 2013), teachers may not recognize the need to learn more about open education, because they assume that they already sufficiently understand these topics.

And fifth, if open education leaders seek to help K-12 teachers develop literacies necessary to utilize open educational resources in their classrooms and to share their own creations through open practices, then we need to understand what factors influence these teachers’ ratings of learning experiences toward this end. From our results, we find that the learning activities themselves, which involved collaborative group work with other professional educators, and the instructor, who modeled open educational practices and facilitated collaborative learning, were the most important factors for creating a positive open education experience.

Interestingly, though participants provided anecdotal feedback that the website and online resources were valuable, their ratings in this regard are negatively correlated with overall satisfaction with the institutes. The reason for this is unknown, but it may be that those teachers who valued the ability to peruse resources on their own and to learn at their own pace via provided online resources found the face-to-face institute to be less valuable, whereas those who found the online resources to be less useful needed to rely more heavily on the institute and valued the experience more as a result. This may mean that some educators might be more effectively introduced to open education via online, asynchronous learning experiences, while others may be more effectively reached through face-to-face, synchronous experiences.

Given these findings and implications, we conclude that this type of open education institute can be valuable for practicing teachers if coupled with effective and collaborative learning activities and a strong instructor to model open education practices and collaborative learning for participants. We also conclude that there may be a number of misconceptions related to open education that make it difficult for practicing teachers to recognize the need for this type of work but that as they participate in learning activities increasing their knowledge of concepts like copyright, fair use, public domain, and copyleft, teachers come to recognize the value of these subjects and to value learning experiences devoted to them. And finally, advocates of open educational practices should eschew narratives of innovative change that categorize educators based upon veterancy factors and recognize that all teachers want to innovate and share; teachers merely need learning experiences that empower them to overcome false confidence and misconceptions in a manner that is positive and that treats them as competent professionals.

Atkins, D., Brown, J. S., & Hammond, A. (2007). A review of the open educational resources (OER) movement: Achievements, challenges, and new opportunities (Report to the William and Flora Hewlett Foundation). Retrieved from http://www.hewlett.org/uploads/files/ReviewoftheOERMovement.pdf

Baker, J., Thierstein, J., Fletcher, K., Kaur, M., & Emmons, J. (2009). Open textbook proof-of-concept via connexions. The International Review of Research in Open and Distance Learning, 10(5). Retrieved from http://www.irrodl.org/index.php/irrodl/article/view/633/1387

Baraniuk, R. G. (2007). Challenges and opportunities for the open education movement: A Connexions case study. In T. Iiyoshi & M. S. V. Kumar (Eds.) Opening up education: The collective advancement of education through open technology, open content, and open knowledge (pp. 229-246).

Brown, J. S., & Adler, R. P. (2008). Minds on fire: Open education, the long tail, and learning 2.0. Educause Review, 43(1), 16-20.

Budapest Open Access Initiative (2002). Read the Budapest Open Access Initiative. Budapest Open Access Initiative. Retrieved from http://www.soros.org/openaccess/read.shtml

Carey, T., & Hanley, G. L. (2007). Extending the impact of open educational resources through alignment with pedagogical content knowledge and institutional strategy: Lessons learned from the MERLOT community experience. In T. Iiyoshi & M. S. V. Kumar (Eds.) Opening up education: The collective advancement of education through open technology, open content, and open knowledge (pp. 181-196).

Creswell, J. (2008). Educational research: Planning, conducting, and evaluating quantitative and qualitative research (3rd ed.). Upper Saddle River, New Jersey: Pearson.

DuFour, R. (2004). What is a “professional learning community”? Educational Leadership, 61(8), 6-11.

Fini, A. (2009). The technological dimension of a massive open online course: The case of the CCK08 course tools. International Review of Research in Open and Distance Learning, 10(5).

Furlough, M. (2010). Open access, education research, and discovery. The Teachers College Record, 112(10), 2623-2648.

Garnett, F., & Ecclesfield, N. (2012). Towards a framework for co-creating open scholarship. Research in Learning Technology, 19.

Getz, M. (2005). Open scholarship and research universities. Internet-First University Press.

Gur, B., & Wiley, D. (2008). Instructional technology and objectification. Canadian Journal of Learning and Technology / La revue canadienne de l’apprentissage et de la technologie, 33(3). Retrieved from http://www.cjlt.ca/index.php/cjlt/article/view/159/151

Hilton, J., & Laman, C. (2012). One college’s use of an open psychology textbook. Open Learning: The Journal of Open, Distance and E-Learning, 27(3), 265–272.

Houghton, J., & Sheehan, P. (2006). The economic impact of enhanced access to research findings (Centre for Strategic Economic Studies). Victoria University, Melbourne. Retrieved from http://www.cfses.com/documents/wp23.pdf

Idaho State Department of Education. (2007). Idaho school district profiles 1997-2006. Idaho State Department of Education. Retrieved from http://www.sde.idaho.gov/Statistics/districtprofiles.asp

Jenkins, H., Clinton, K., Purushotma, R., Robinson, A. J., & Weigel, M. (2006). Confronting the challenges of participatory culture: Media education for the 21st century. Chicago, IL: The MacArthur Foundation.

Kimmons, R. (2014). Technology & open education summer institute. University of Idaho Doceo Center Open Courses. Retrieved from http://courses.doceocenter.org/open_education_summer_institute

Kimmons, R. (in press). Examining TPACK’s theoretical future. Journal of Technology and Teacher Education.

Kop, R., & Fournier, H. (2010). New dimensions to self-directed learning in an open networked learning environment. International Journal of Self-Directed Learning, 7(2), 2-20.

Laakso, M., Welling, P., Bukvova, H., Nyman, L., Björk, B.-C., & Hedlund, T. (2011). The development of open access journal publishing from 1993 to 2009. PLoS ONE, 6(6), e20961.

Mishra, P., & Koehler, M. J. (2007). Technological pedagogical content knowledge (TPCK): Confronting the wicked problems of teaching with technology. Technology and Teacher Education Annual, 18(4), 2214.

OECD. (2007). Giving knowledge for free: The emergence of open educational resources. Center for Economic Co-Operation and Development Center for Educational Research and Innovation. Retrieved from http://www.oecd.org/edu/ceri/38654317.pdf

Papert, S. (1987). Computer criticism vs. technocentric thinking. Educational Researcher, 16(1), 22-30.

Petrides, L., Jimes, C., Middleton‐Detzner, C., Walling, J., & Weiss, S. (2011). Open textbook adoption and use: implications for teachers and learners. Open Learning: The Journal of Open, Distance and E-Learning, 26(1), 39–49.

Rheingold, H. (2010). Attention, and other 21st-century social media literacies. EDUCAUSE Review, 45(5), 14-24.

Rogers, E. (1962). Diffusion of innovations. New York: Free Press.

Tonks, D., Weston, S., Wiley, D., & Barbour, M. (2013). “Opening” a new kind of school: The story of the Open High School of Utah. The International Review of Research in Open and Distance Learning, 14(1), 255-271. Retrieved from http://www.irrodl.org/index.php/irrodl/article/view/1345/2419

Veletsianos, G., & Kimmons, R. (2012). Assumptions and challenges of open scholarship. The International Review of Research in Open and Distance Learning, 13(4), 166-189.

Walker, E. (2007). Evaluating the results of open education. In T. Iiyoshi & M. S. V. Kumar (Eds.) Opening up education: The collective advancement of education through open technology, open content, and open knowledge (pp. 77-88).

Wiley, D. (2003). A modest history of OpenCourseWare. Autounfocus blog. Retrieved from http://www.reusability.org/blogs/david/archives/000044.html

Wiley, D. (2010). My TEDxNYED talk. Iterating toward openness. Retrieved from http://opencontent.org/blog/archives/1270

Wiley, D., Hilton III, J., Ellington, S., & Hall, T. (2012). A preliminary examination of the cost savings and learning impacts of using open textbooks in middle and high school science classes. The International Review of Research in Open and Distance Learning, 13(3), 262-276.

Wiley, D., & Green, C. (2012). Why openness in education? In D. Oblinger (Ed.), Game changers: Education and information technologies (pp. 81-89). Educause.

UNESCO (2002). Forum on the impact of open courseware for higher education in developing countries: Final report. UNESCO. Retrieved from http://unesdoc.unesco.org/images/0012/001285/128515e.pdf

© Kimmons