Svetlana M. Stanišić Stojić, Gordana Dobrijević, Nemanja Stanišić, and Nenad Stanić

Singidunum University, Serbia

This paper presents an analysis of teachers’ ratings on distance learning undergraduate study programs: 7,156 students enrolled in traditional and 528 students enrolled in distance learning studies took part in the evaluation questionnaire, assessing 71 teachers. The data were collected from the Moodle platform and from the Singidunum University information system, and then analysed with SPSS statistical software. The parameters considered as potentially affecting teacher ratings are: number of teachers engaged in a particular course, total number of courses in which the teacher is engaged, teacher’s gender and age, total number of the available resources, and so forth. The results imply that scores assigned to individual teachers are consistent in both traditional and distance learning programs. The average rating was lower when there were several teachers in a single course; this effect was stronger in cases where there was a significant age discrepancy among them. The other factors considered did not show a significant association with teacher ratings. Students’ main remarks about the work of the teachers have been summarised at the end of this paper. Possible explanations and implications of the results are discussed and recommendations are given.

Keywords: Distance learning; teacher rating; student satisfaction

Distance learning is becoming increasingly recognised as a suitable and valuable educational experience (Davies, Howell, & Petrie, 2010). Many universities across the globe offer distance learning courses through the introduction of learning management systems that allow them to have both on- and off-campus students. Since recruitment and retention of students have a significant financial impact on today’s universities, there have been numerous studies on student satisfaction and academic achievements from distance learning programs (e.g., Endres, Chowdhury, Frye, & Hurtubis, 2009; Eom, Wen, & Ashill, 2006).

One of the main factors influencing student satisfaction is the quality of teaching. This is an especially important consideration for university managers and decision makers, who can organise distance learning in a way that allows them to provide ongoing guidance and improvement strategies for teaching staff. By contrast, some other factors are not easily influenced and managed, such as student self-motivation, student learning style, required/elective courses ratio, and so forth. Rating teachers should be a valuable procedure for students as well, because it can lead to improvement of teaching quality, based on the stated opinions of the students (Marzano, 2012; Nargundkar & Shrikhande, 2012). The study of Taylor and Tyler (2012) strongly supports the view that teachers develop skills and otherwise improve as a result of evaluation. They found that teachers were more successful at improving student achievement during the school year when they were evaluated than in the years before the evaluation, and were even more successful in the years after the evaluation. However, some reports (e.g., Toch & Rothman, 2008) show that teacher evaluations have not helped in the formation of highly skilled teachers. We presume that teachers’ ratings could influence their skills development provided that their tenure depends on the rating, the rating is publicly announced (which puts additional pressure on the teacher), and there are some consequences for the teachers with the lowest scores. Since there are no widely accepted standards of teacher performance, universities around the world mainly use student evaluation of teachers as the principal source of data on teachers’ performance and quality of teaching (Lalla, Frederic, & Ferrari, 2011; Carr & Hagel, 2008). Some previous studies have dealt with the issue of whether and to what extent the evaluation results truly reflect students’ attitudes.
According to most authors, teacher rating proved to be a good indicator of teaching effectiveness (e.g., Beran & Violato, 2005; Nargundkar & Shrikhande, 2012; Wiers-Jenssen, Stensaker, & Grogaard, 2002). Beran and Violato (2005) also found that teacher rating is, to a lesser extent, biased by some factors that are not related to the teachers themselves, such as students’ grade expectations, attendance, and types of courses being evaluated. For example, teachers of elective courses have always been rated higher than teachers of compulsory courses, as shown by all findings to date. In previous research on teacher-related factors that affect ratings, interaction, teacher feedback, and communication appear most often (e.g., Kuo, Walker, Belland, & Schroder, 2013; Loveland, 2007; Rothman, Romeo, Brennan, & Mitchell, 2011). Some other factors having the highest impact on learner satisfaction in online education are: teacher knowledge and facilitation, course structure (Eom et al., 2006), appropriateness of readings and tasks, technological tools, course organisation, clarity of outcomes and assignments, and content format (Rothman et al., 2011). According to the community of inquiry framework (Garrison, Anderson, & Archer, 2000, 2010), a deep and meaningful online learning experience happens through the evolution of three interconnected elements: cognitive, social, and teaching presence. In an educational environment, teaching presence is normally the main responsibility of the teacher. It includes selection, organization, and presentation of the course content, and the design and development of learning activities. Although the teacher’s personality is known to be a crucial factor in establishing a successful online learning atmosphere (Northcote, 2010), the most apparent teachers’ characteristics, such as their qualifications and experience, do not always appear to be associated with teaching quality (Hanushek & Rivkin, 2010).
Still, expressing the personality beyond the provision of mere resources is important because interaction alone is not enough to achieve a sense of teacher presence in online learning contexts (Garrison, Cleveland-Innes, & Fung, 2010).

Our research was aimed at defining the characteristics and activities that will influence the rating of teachers on distance learning study programs. The data from the evaluation questionnaire, conducted regularly for the quality control of teaching and study processes at Singidunum University, have been used for the research. Singidunum University is the largest private university in Serbia, with more than 10,000 students enrolled in degree programs in the fields of finance, banking, accounting, marketing and trade, tourism and hotel management, engineering management, computer science, and electrical and computer engineering. Six percent (about 600 students) of this number attend distance learning programs from all the abovementioned fields except computer science and electrical and computer engineering. The research results are expected to benefit teaching staff as well as the management of higher education institutions, which aims to improve the quality of teaching delivery and increase satisfaction of students on distance learning programs.

The research covered all four years of various study programs. The data were collected from both the University information system and the Moodle platform, then cross-analysed by using the SPSS statistical software.

Numerous parameters were analysed as potential correlates of teacher rating, among them the number of teachers engaged per course, the number of courses assigned to a particular teacher, the gender and age of the teacher, the total number of available resources, and students’ activities. Available resources and students’ activities were considered collectively as well as individually against the following categories: files, quizzes, forums, lessons, assignments, labels, and dictionaries. The Pearson correlation was used to measure the strength of the linear association between two variables. Linear regression was used to define the relationships between the variables, while the t test was used to test the differences in the average values. Spearman’s rank-order correlation was used to determine the association between variables that are not normally distributed. To test for association between two non-normally distributed variables while simultaneously controlling for the effect of already known confounders, partial Spearman’s rank correlation was used.
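To make the rank-based measures concrete, the following is a minimal Python sketch of Spearman’s rank correlation and of a first-order partial Spearman correlation, obtained here by applying the standard partial correlation formula to rank correlations. It is an illustration only: the analysis in this paper was carried out in SPSS, and the function names and the small data set below are hypothetical.

```python
from math import sqrt

def ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result

def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))

def partial_spearman(x, y, z):
    """Rank correlation of x and y, controlling for a single confounder z."""
    r_xy, r_xz, r_yz = spearman(x, y), spearman(x, z), spearman(y, z)
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))

# Hypothetical data, not taken from the study.
teachers_per_course = [1, 1, 2, 2, 3, 3, 4]
dl_score = [4.6, 4.4, 4.5, 4.1, 4.2, 3.9, 3.8]
traditional_score = [4.5, 4.6, 4.4, 4.3, 4.4, 4.0, 4.1]

print(round(partial_spearman(teachers_per_course, dl_score, traditional_score), 3))
```

In this sketch the third argument plays the role of the known confounder, for instance the score on traditional studies.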

In order to create a comparison with the traditional studies, this research was conducted on a sample of 71 teachers, simultaneously engaged with both traditional classroom programs and distance learning studies. The total number of students that assessed the work of teachers in traditional classroom programs was 7,156, whereas 528 students assessed the work of teachers on distance learning studies. Each student rated the teachers only during the term s/he was currently in attendance, awarding grades from 1 to 5, 1 being the lowest, and 5 the highest. The total number of polls completed was 36,151 on traditional studies and 2,675 on distance learning study programs. The evaluation questionnaire comprised five questions:

Students were also able to leave a comment at the end of the poll, related to the teacher’s performance. For teachers involved in more than one course, we used the grand mean of the scores they received across all the courses they were involved in. At the end of this paper, we also summarise students’ remarks, which were usually given descriptively in the polls.

Because of the differences between online and traditional courses, some researchers have examined if and to what extent the same student ratings can be used in online courses. For example, students of traditional studies establish more frequent personal contact with their teachers, as opposed to the students of distance learning studies. Due to the lack of personal contact and empathy, the rating of distance learning teachers is often more reflective of the quality of the course content than the quality of the overall work of the teacher. This can provide misleading results, particularly with the courses involving more than one teacher. For these reasons, we divided the courses into modules, with the name of the author clearly given for each online resource. A large number of recorded lectures were also uploaded; the communication on each of the courses was personalised so that the students could easily master the materials and have a clear idea of whom they were communicating with.

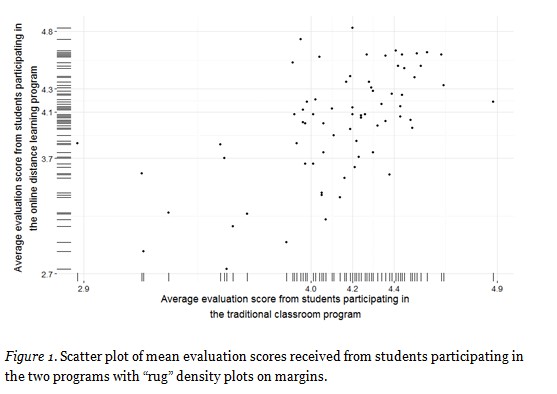

Figure 1 represents the distribution of scores that the students awarded to the teachers on traditional studies and distance learning studies. The average values, standard deviations and the sample size are shown in this graph. The obtained results show that there is a positive association between the score awarded to the teacher on traditional studies and the score awarded to the teacher on distance learning studies (r(69) = 0.575, p < 0.01).
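As a rough check on the reported significance, the t statistic that corresponds to a Pearson correlation can be recovered from r and its degrees of freedom. The sketch below uses only the values reported above (r = 0.575, df = 69); the function name is hypothetical.

```python
from math import sqrt

def t_from_r(r, df):
    """t statistic for testing a zero population correlation, with df = n - 2."""
    return r * sqrt(df / (1 - r ** 2))

# Values reported in the text: r(69) = 0.575.
t_value = t_from_r(0.575, 69)
print(round(t_value, 2))
```

The resulting value is roughly 5.8, well above the two-tailed critical value of about 2.65 for 69 degrees of freedom at the 0.01 level, which agrees with the reported p < 0.01.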

The linear model has the following form:

Average evaluation score from students participating in the online distance learning program = 0.880 + 0.756 × average evaluation score from students participating in the traditional classroom program
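For illustration, the fitted line can be applied to a few traditional-program scores. This is a small sketch that uses the two reported coefficients; the function name and the input scores are hypothetical.

```python
INTERCEPT = 0.880  # reported intercept of the linear model
SLOPE = 0.756      # reported slope of the linear model

def predict_dl_score(traditional_score):
    """Predicted distance learning score for a given traditional-program score."""
    return INTERCEPT + SLOPE * traditional_score

# Hypothetical traditional-program scores on the 1 to 5 scale.
for score in (3.0, 4.0, 5.0):
    print(score, round(predict_dl_score(score), 3))
```

Because the slope is below 1, the predicted distance learning score falls below the traditional score once the latter exceeds 0.880 / (1 − 0.756), or about 3.6.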

The reliability analysis has also confirmed that the evaluation scale items are reasonably interrelated (5 items; Cronbach’s α = 0.82). However, Sijtsma (2009) reminds us that a high value of Cronbach’s alpha does not provide evidence of unidimensionality.
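Cronbach’s α for a k-item scale can be computed from the item variances and the variance of the summed score. The following minimal sketch assumes sample variances and uses a small hypothetical set of responses to five items; it does not reproduce the study data.

```python
from statistics import variance

def cronbach_alpha(responses):
    """responses: one row per respondent, each row holding k item scores."""
    k = len(responses[0])
    items = list(zip(*responses))  # regroup the scores by item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)

# Hypothetical answers of six students to five questions (grades 1 to 5).
sample = [
    [5, 4, 5, 4, 5],
    [3, 3, 4, 3, 3],
    [4, 4, 4, 5, 4],
    [2, 3, 2, 2, 3],
    [5, 5, 4, 5, 5],
    [3, 2, 3, 3, 2],
]
print(round(cronbach_alpha(sample), 2))
```

When all items move together perfectly, the item variances account for only 1/k of the total variance and α reaches 1.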

Although distance learning involves reduced learner-tutor interaction due to asynchronous computer-mediated (ACM) conferences, it is obvious that certain teachers are able to meet the requirements, regardless of format or mode of delivery. This points to a very important conclusion: “good teaching” is just “good teaching” regardless of the medium. Comparing traditional and online courses, Beattie, Spooner, Jordan, Algozzine, and Spooner (2002) found similar results across course, teacher, and general ratings, regardless of the mode of delivery. Also, in the IDEA survey 2002-2008 by Benton, Webster, Gross, and Pallett (2010), students’ ratings of teachers, both of online and traditional courses, as well as ratings of the courses themselves, were all very much alike. They found some minor differences (e.g., that teachers of online courses were perceived as using educational technology more effectively), which is expected given the specifics of the mode of educational material delivery.

According to Alonso Díaz and Blázquez Entonado (2009), teachers’ roles in online education are not very different from those of traditional courses. In both teaching modes teachers still have to deal with facilitating the teaching/learning process, combining activities with theoretical content, and encouraging student interaction. Kelly, Ponton, and Rovai (2007) also compared students’ ratings of overall instructor performance and overall satisfaction of online and traditional courses. They found no significant difference in students’ ratings between online and traditional courses. The authors propose that teachers of online courses should be fair and unbiased, and enthusiastic and helpful, and they should show real interest in student progress and needs.

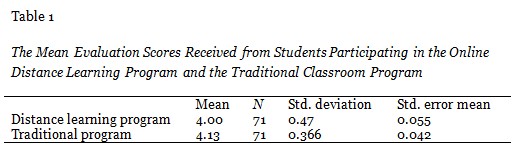

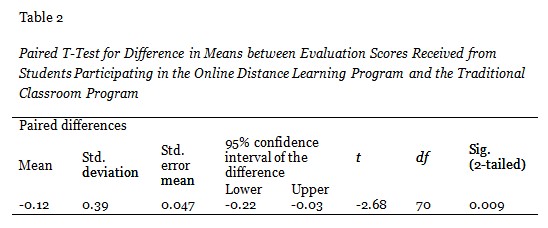

Based on the data from Table 1, which shows that the average teacher rating on traditional studies is higher than on distance learning studies by 0.13, and the results of the paired-samples t test shown in Table 2, it can be concluded that this difference is statistically significant (t(70) = -2.68, p < 0.01). The effect size for this analysis (d = 0.31) is moderate according to the general conventions (Cohen, 1992). One of the reasons for this difference may be the lack of personal contact and empathy. It is well known that communication is experienced differently through different forms of communication media. It is much easier to develop personal understanding and empathy in face-to-face communication than in online communication, which cannot convey delicate social cues beyond the literal meaning of the words from the text (Lewicki, Barry, & Saunders, 2010).
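The reported effect size can be approximated from the test statistic. The sketch below assumes that d was obtained as the paired-samples effect size, that is, the absolute t value divided by the square root of the number of pairs (71 teachers); the text does not state the formula used, so this is an assumption.

```python
from math import sqrt

def cohens_d_paired(t, n_pairs):
    """Effect size for a paired-samples t test (assumed formula: |t| / sqrt(n))."""
    return abs(t) / sqrt(n_pairs)

# Reported values: t(70) = -2.68, hence 71 paired observations.
d = cohens_d_paired(-2.68, 71)
print(round(d, 2))
```

This gives about 0.32, close to the reported d = 0.31; the small gap may stem from rounding of the t value.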

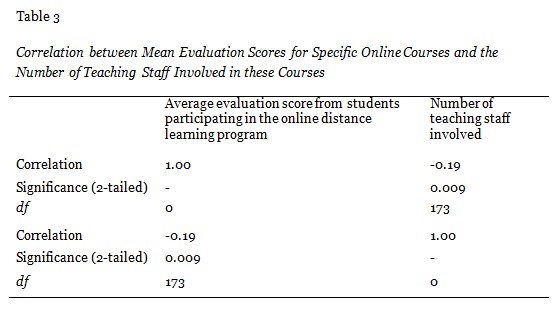

The second part of our research shifts the point of view from course-oriented to a rather more teacher-oriented one. The results showed that the number of teachers engaged per course is negatively associated with their average score. This association is significant, even when it is controlled for the score on traditional studies, which can be seen in Table 3 (rs(173) = -0.196, p < 0.01). We assume that this is because of the lack of coordination among different teachers, due to their different ages, personalities, backgrounds, communication styles, and preferences. Another problem in these cases may be an excess of learning material and too many activities on the online platform, because each teacher supplies his/her own resources.

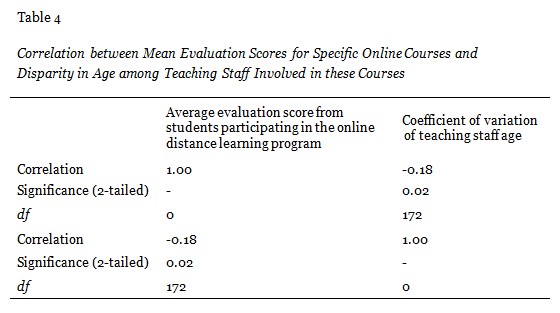

The results also showed that the age difference of the teachers engaged in a particular course reduced the overall average score (rs(172) = -0.177, p < 0.05). The age difference is measured by the coefficient of variation (standard deviation of age divided by the average age of the teaching staff).
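The coefficient of variation described above can be sketched as follows. The text does not say whether the sample or the population standard deviation was used, so the sample version is assumed here, and a course with a single teacher is given a value of zero by assumption. The ages are hypothetical.

```python
from statistics import mean, stdev

def age_cv(ages):
    """Coefficient of variation of teachers' ages within one course."""
    if len(ages) < 2:
        return 0.0  # assumption: no age spread for a single-teacher course
    return stdev(ages) / mean(ages)

# Hypothetical teaching teams.
print(round(age_cv([30, 60]), 3))  # large age gap
print(round(age_cv([44, 46]), 3))  # similar ages
```

A larger value indicates a wider relative age spread among the teachers on a course.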

It is interesting that no statistically significant correlation with the other variables was observed. The average teacher rating on distance learning studies, for example, is neither related to the number of uploaded teaching resources nor to the number of teachers’ activities on the online platform. These results suggest that the mere number of uploaded resources does not indicate their quality and has no influence on student satisfaction. Estelami (2012), who tested student satisfaction in both hybrid-online courses (a combination of online and traditional classroom teaching) and purely online courses, reached similar conclusions. He defined several key factors that influence student satisfaction and that depend on teachers either directly or indirectly: course content, student-teacher communication, the use of effective learning tools, and the teacher him/herself. Considering the importance of learning tools, he concluded that the usefulness of textbooks and other support material, and not the number of resources, greatly influences students’ general feelings and attitudes. Carr and Hagel (2008) also confirm that good quality teaching resources and higher levels of online activity are associated with higher satisfaction levels.

Benton and Cashin’s (2012) review of literature and empirical studies revealed that the teacher’s personality does not influence student evaluation of teachers, and neither does the teacher’s race, gender, age, or research productivity. Paechter, Maier, and Macher (2010) investigated the factors that students regard as important for their satisfaction and performance. The teacher’s expertise in online learning and his/her level of support offered seem to be the best predictors for student satisfaction and learning achievements.

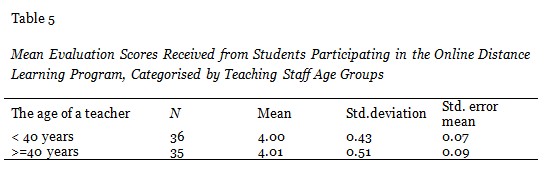

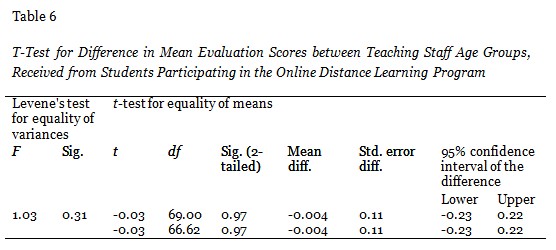

The fact that no correlation between the average rating and the age of the teacher was observed also speaks in favor of older generations of teachers being capable of adjusting successfully to the new teaching trends. Table 5 shows the average values and standard deviations of scores on distance learning study programs for two age groups of teachers, while Table 6 shows the results of t test, both of which confirm that difference in the average score does not depend on the teacher’s age.

It was not observed that the teachers engaged in more than one course had an average score lower than the mean value, which indicates that the workload of teachers, as long as it is within acceptable limits, is not a factor that affects the quality of work.

Finally, we have summarised the most common objections to the work of teachers, which the students presented descriptively in the evaluation questionnaire. We believe that these remarks may help to understand the factors that affect students’ satisfaction, and may also help to improve the teaching quality in distance learning study programs. As Kelly, Ponton, and Rovai (2007) posit, if there are differences between quantitative ratings of online and traditional courses, analysis of qualitative comments can provide us greater insight into those differences.

First of all, some teachers delay responses to students’ messages without apparent reason, and communication is sometimes scant, without necessary details. Communication with students on distance learning programs requires special attention, due to the fact that this type of study involves the increasing use of asynchronous video communication, as well as a lack of personal contact, which is essential for the development of trust (Dennen, Aubteen Darabi, & Smith, 2007). According to Wade, Cameron, Morgan, and Williams (2011), distance education students desire relationships with group members more than their colleagues enrolled in traditional study programs, and we can add that the same applies to their relationships with teachers. Furthermore, it has been established that facilitating students’ participation is one of the essential pedagogical competencies specific to e-learning practices (Muñoz Carril, González Sanmamed, & Hernández Sellés, 2013).

Second, students find that some teachers do not upload enough learning and testing resources suitable for distance learning. The Moodle platform, which is nowadays used in many countries, offers a wide variety of content, applications, and forms of communication available in the e-learning environment, with a detailed description of options (Akhmetova, Vorontsova, & Morozova, 2013). Therefore, distance learning can rely on many technologies and features such as multimedia, streaming audio and video, web conferencing, instant messaging, peer-to-peer file sharing, and so on (McGreal & Elliott, 2008). However, apart from video tutorials and assessment tests with automatic feedback, such possibilities are rarely used to the full, owing to omissions in the work of the teaching staff.

The third objection, which is frequently encountered, is that most teachers set deadlines for the preparation of tests, mid-terms and finals, which do not fit all the students. The cause of the problem probably lies in the fact that the flexibility of online learning is too often taken for granted, and students interpret it as doing online assignments whenever it suits them. Although this interpretation is true of most online courses, sometimes students can have problems resulting from regular absence from online classes. Learners need to rely on stable terms and tasks, so that they can plan for their absence in advance. On the other hand, it is essential that the teachers provide more support to the students when they (the students) underestimate the time and effort required in online learning, since the lack of support is shown to be one of the main reasons for dropping out of university (Conrad, 2009).

In the end, we should consider certain constructive remarks given by some authors with respect to teacher evaluations. Wiers-Jenssen et al. (2002) state that evaluating student satisfaction remains controversial due to the contextual factors that can influence students’ perception of teaching quality. Several other authors (e.g., Becker & Watts, 1999; D’Apollonia & Abrami, 1997) also found that students’ ratings are often influenced by teacher characteristics that have nothing to do with effectiveness, such as popularity or grading style. Still, many universities use student evaluation of teachers as the main factor in faculty promotion and salary, which raises a number of issues (e.g., Olivares, 2003), including the validity (whether the results can accurately predict student learning) and external biases (whether some other unrelated factors could influence student opinion) of such evaluations. It can also induce teachers to manipulate their grading policies, in order to boost their evaluations, which can ultimately lead to deterioration of education quality (Johnson, 2002). Griffin, Hilton, Plummer, and Barret (2014) analysed the grade point averages (GPAs) and teacher ratings over 2,073 courses at a large private university. They found a moderate correlation between GPAs and teacher ratings, although this overall correlation did not hold true for individual teachers and courses. Although student ratings are useful in assessing the quality of teaching and of overall courses, they are not sufficient and therefore should not be used as a sole factor in determining teacher salary and promotion.

In this paper we analysed the main teacher-related factors that affect student evaluation of teachers on distance learning programs. The results show only a small reduction in ratings on distance learning studies, compared to traditional studies. The reason for this may be the lack of personal contact between students and teachers. We also found that the number of teachers engaged per course, as well as their age difference, lowered the average rating of the teachers involved in the course. On the other hand, no correlation was found between teacher ratings and teachers’ activities on the online platform, or the number of resources they uploaded. This suggests that students value the quality and suitability of learning materials more than their quantity. In order to improve distance learning education, teachers should devote special attention to communicating with students. When it comes to learning materials, teachers should use the advantages of the Moodle platform and give priority to the resources adapted to online learning, such as online tutorials and feedback on assignments.

Akhmetova, D., Vorontsova, L., & Morozova, I. G. (2013). The experience of a distance learning organization in a private higher educational institution in the Republic of Tatarstan (Russia): From idea to realization. International Review of Research in Open & Distance Learning, 14(3).

Beattie, J., Spooner, F., Jordan, L., Algozzine, B., & Spooner, M. (2002). Evaluating instruction in distance learning classes. Teacher Education and Special Education: The Journal of the Teacher Education Division of the Council for Exceptional Children, 25(2), 124-132.

Becker, W. E., & Watts, M. (1999). How departments of economics evaluate teaching. American Economic Review, 89(2), 344-349.

Benton, S. L., Webster, R., Gross, A. B., & Pallett, W. H. (2010). Technical report No. 15: An analysis of IDEA student ratings of instruction in traditional versus online courses 2002-2008 data. The IDEA Center: Manhattan, Kansas.

Benton, S. L., & Cashin, W. E. (2012). IDEA paper No. 50: Student ratings of teaching: A summary of research and literature. The IDEA Center: Manhattan, Kansas.

Beran, T., & Violato, C. (2005). Ratings of university teacher instruction: How much do student and course characteristics really matter? Assessment & Evaluation in Higher Education, 30(6), 593-601.

Carr, R., & Hagel, P. (2008, January). Students’ evaluations of teaching quality and their unit online activity: An empirical investigation. In ASCILITE 2008 Melbourne: Hello! Where are you in the landscape of educational technology?: Program and abstracts for the 25th ASCILITE conference (pp. 152-159). Deakin University: ASCILITE.

Cohen, J. (1992). A power primer. Psychological Bulletin, 112(1), 155-159.

Conrad, D. (2009). Cognitive, instructional, and social presence as factors in learners’ negotiation of planned absences from online study. International Review of Research in Open & Distance Learning, 10(3).

D’Apollonia, S., & Abrami, P. C. (1997). Navigating student ratings of instruction. American Psychologist, 52(11), 1198.

Davies, R. S., Howell, S. L., & Petrie, J. A. (2010). A review of trends in distance education scholarship at research universities in North America, 1998-2007. International Review of Research in Open & Distance Learning, 11(3).

Dennen, V. P., Aubteen Darabi, A., & Smith, L. J. (2007). Instructor–learner interaction in online courses: The relative perceived importance of particular instructor actions on performance and satisfaction. Distance Education, 28(1), 65-79.

Díaz, L. A., & Entonado, F. B. (2009). Are the functions of teachers in e-learning and face-to-face learning environments really different? Journal of Educational Technology & Society, 12(4), 331-343.

Endres, M. L., Chowdhury, S., Frye, C., & Hurtubis, C. A. (2009). The multifaceted nature of online MBA student satisfaction and impacts on behavioral intentions. Journal of Education for Business, 84(5), 304-312.

Eom, S. B., Wen, H. J., & Ashill, N. (2006). The determinants of students’ perceived learning outcomes and satisfaction in university online education: An empirical investigation. Decision Sciences Journal of Innovative Education, 4(2), 215-235.

Estelami, H. (2012). An exploratory study of the drivers of student satisfaction and learning experience in hybrid-online and purely online marketing courses. Marketing Education Review, 22(2), 143-156.

Garrison, D. R., Anderson, T., & Archer, W. (2000). Critical inquiry in a text-based environment: Computer conferencing in higher education. The Internet and Higher Education, 2(2-3), 87-105.

Garrison, D. R., Anderson, T., & Archer, W. (2010). The first decade of the community of inquiry framework: A retrospective. The Internet and Higher Education, 13(1), 5-9.

Garrison, D. R., Cleveland-Innes, M., & Fung, T. S. (2010). Exploring causal relationships among teaching, cognitive and social presence: Student perceptions of the community of inquiry framework. The Internet and Higher Education, 13(1), 31-36.

Griffin, T. J., Hilton III, J., Plummer, K., & Barret, D. (2014). Correlation between grade point averages and student evaluation of teaching scores: Taking a closer look. Assessment & Evaluation in Higher Education, 39(3), 1-10.

Hanushek, E. A., & Rivkin, S. G. (2010). Generalizations about using value-added measures of teacher quality. The American Economic Review, 267-271.

Johnson, V. E. (2002). Teacher course evaluations and student grades: An academic tango. Chance, 15(3), 9-16.

Kelly, H. F., Ponton, M. K., & Rovai, A. P. (2007). A comparison of student evaluations of teaching between online and face-to-face courses. Internet and Higher Education, 10, 89-101.

Kuo, Y. C., Walker, A. E., Belland, B. R., & Schroder, K. E. (2013). A predictive study of student satisfaction in online education programs. International Review of Research in Open & Distance Learning, 14(1).

Lalla, M., Frederic, P., & Ferrari, D. (2011). Students’ evaluation of teaching effectiveness: Satisfaction and related factors. In Statistical Methods for the Evaluation of University Systems (pp. 113-129). Physica-Verlag HD.

Lewicki, R. J., Barry, B., & Saunders, D. M. (2010). Negotiation (International edition). Singapore: McGraw-Hill.

Loveland, K. A. (2007). Student evaluation of teaching (SET) in web-based classes: Preliminary findings and a call for further research. Journal of Educators Online, 4(2), n2.

Marzano, R. J. (2012). The two purposes of teacher evaluation. Educational Leadership, 70(3), 14-19.

McGreal, R., & Elliott, M. (2008). Technologies of online learning (e-learning). In T. Anderson (Ed.), Theory and practice of online learning (2nd ed., pp. 143-166). Edmonton, AB: AU Press.

Muñoz Carril, P. C., González Sanmamed, M., & Hernández Sellés, N. (2013). Pedagogical roles and competencies of university teachers practising in the e-learning environment. International Review of Research in Open & Distance Learning, 14(3).

Nargundkar, S., & Shrikhande, M. (2012). An empirical investigation of student evaluations of instruction: The relative importance of factors. Decision Sciences Journal of Innovative Education, 10(1), 117-135.

Northcote, M. T. (2010). Lighting up and transforming online courses: Letting the teacher’s personality shine. Education Papers and Journal Articles, Paper 33.

Olivares, O. J. (2003). A conceptual and analytic critique of student ratings of teachers in the USA with implications for teacher effectiveness and student learning. Teaching in Higher Education, 8(2), 233-245.

Paechter, M., Maier, B., & Macher, D. (2010). Students’ expectations of, and experiences in e-learning: Their relation to learning achievements and course satisfaction. Computers & Education, 54(1), 222-229.

Rothman, T., Romeo, L., Brennan, M., & Mitchell, D. (2011). Criteria for assessing student satisfaction with online courses. International Journal for e-Learning Security, 1(1-2), 27-32.

Sijtsma, K. (2009). On the use, the misuse, and the very limited usefulness of Cronbach’s alpha. Psychometrika, 74(1), 107-120.

Taylor, E. S., & Tyler, J. H. (2012). Can teacher evaluation improve teaching? Education Next, 12(4), 10.

Toch, T., & Rothman, R. (2008). Rush to judgment: Teacher evaluation in public education. Washington DC: Education Sector.

Wade, C. E., Cameron, B. A., Morgan, K., & Williams, K. C. (2011). Are interpersonal relationships necessary for developing trust in online group projects? Distance Education, 32(3), 383-396.

Wiers-Jenssen, J., Stensaker, B. R., & Grogaard, J. B. (2002). Student satisfaction: Towards an empirical deconstruction of the concept. Quality in Higher Education, 8(2), 183-195.