Hanna Teräs¹ and Jan Herrington²

¹Curtin University, Australia; ²Murdoch University, Australia

Teaching in higher education in the 21st century can be a demanding and complex role, and academic educators around the globe are dealing with questions related to change. This paper describes a new type of professional development program for teaching faculty, using a pedagogical model based on the principles of authentic e-learning. The program was developed with the help of an iterative educational design research process and rapid prototyping based on on-going research and redesign. This paper describes how the findings of the evaluations guided the design process and how the impact of the measures taken was in turn researched, in order to eventually identify and refine design principles for an authentic e-learning program for international teaching faculty professional development.

Keywords: Authentic learning; e-learning; educational design research; professional development

Being a teacher in higher education in the 21st century is, in many ways, a demanding and complex place to be. Academic educators everywhere are dealing with questions related to change: the pressure of integrating technology in education, changing curriculum, quality standards and measures, and increasingly multicultural and diverse groups of learners. For many teachers, the mysterious “net generation” learners that populate universities provide further pressure to be “innovative” to meet their different learning needs. Very often, however, little or no adequate training is provided, and opportunities for informed discussion and critical evaluation of the ever-changing world outside the university gates are scarce. Innovation also tends to be translated quite literally as “technology”, whereas pedagogy—either online or offline—seldom receives equal attention.

These realities motivated Tampere University of Applied Sciences (TAMK) to design 21st Century Educators, an international, fully online postgraduate certificate program that was designed for teachers in higher education to enhance their theoretical understanding as well as practical application of teaching, learning, assessment, and education technology in the global knowledge economy context. The learning design of the program was based on the principles of authentic e-learning as described by Herrington, Reeves, and Oliver (2010), and it was developed and implemented using an iterative educational design research process (e.g., Reeves, McKenney, & Herrington, 2011; Reeves, 2011; McKenney & Reeves, 2012).

Typically for educational design research, the goal of the research process is twofold. One of the goals is practice-driven: to design an intervention (in this case, a postgraduate certificate program) as a useful solution to a complex educational problem (lack of support and professional development resources for higher education teachers in an increasingly complex, global working environment). The other goal is theory-oriented: to produce knowledge about whether and why a certain type of intervention (a fully online program based on authentic e-learning principles) works effectively in a given context (multicultural cohort studying alongside teaching work) and, based on this knowledge, produce design principles that may assist designers in other projects to develop effective and workable interventions (Plomp, 2007).

This paper discusses the stages of formative evaluation and the resulting redesign in the research process. We will describe how the findings of the evaluations guided the design process and how the impact of the measures taken was in turn evaluated, in order to eventually tighten the net and identify design principles for an authentic e-learning program for international teaching faculty.

Educational design research was chosen to guide this particular research context primarily because there was a complex educational problem that had to be addressed in a way that would have potential for high-level practical impact and relevance (Plomp, 2007; Anderson & Shattuck, 2012). Unfortunately, as much as one might expect the latter to be the default in any research, this is not always the case. Reeves, McKenney, and Herrington (2011) ask a very fundamental question: Why is it that while the number of educational research publications has increased dramatically, at the same time educational attainment is either declining or remaining stagnant? Reeves (2011) suggests that one of the reasons for this is that most studies concentrate on the wrong variables: Instead of meaningful pedagogical dimensions, such as design factors, feedback, or aligning learning outcomes and assessment, the focus tends to be on comparing instructional delivery methods, such as traditional versus online instruction, face-to-face versus video lectures, or computer-based versus pencil and paper assessment. As Reeves observes, these types of studies almost without exception render results of “no significant differences” (Reeves, 2011), and thus they do not have the potential to significantly improve educational practice either. Indeed, Reeves labelled such research “pseudoscience” and claimed it was so flawed that it has little relevance “for anyone other than the people who conduct and publish it” (Reeves, 1995, p. 9).

Although there are subtle variations, design research is also known as design-based research (Kelly, 2003), development research (van den Akker, 1999), and design experiments (Brown, 1992). As such, it is a research approach that has the capacity to address complex and relevant educational problems for which there are no clear guidelines or solutions available (Anderson & Shattuck, 2012). The approach is very different from the comparative research approach criticized by Reeves: Instead of attempting to compare whether method A is better in a given context than method B, the aim is to develop an optimal, research-based solution for the problem, perhaps best described by Reeves (1999) as seeking “to improve, not to prove” (p. 18).

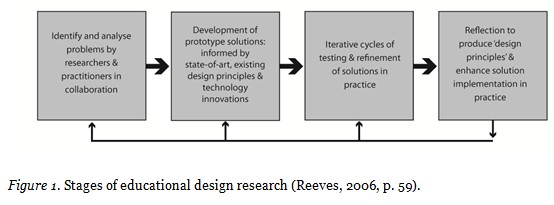

Although educational design research has one foot firmly in practice, the other one is just as firmly in theory. In the words of Cobb, Confrey, diSessa, Lehrer, and Schauble (2003), “the theory must do real work” (p. 10). According to McKenney and Reeves (2012), the unusual characteristic of the theoretical orientation in educational design research is that scientific understanding is not only used to frame the research, but also to shape the design of the intervention. The hypotheses embodied in the design are validated, refined, or refuted through empirical testing, evolving through multiple cycles of development, testing, and refinement. Figure 1 illustrates these iterative phases of the approach, as depicted by Reeves (2006).

The role of evaluation in design research is paramount: A design is continuously improved based on information gained through evaluation. In the next section, we describe the critical role of evaluation in educational design research.

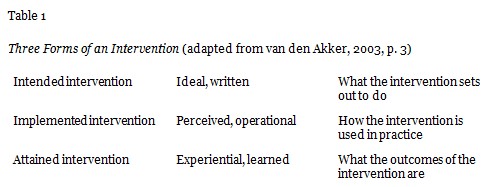

Evaluation, whether formal or informal, is part of developing almost any kind of educational intervention. In design research, evaluation is systematic, and it aims at concurrently producing theoretical knowledge and developing the intervention. Evaluation is accompanied by reflection upon findings and observations to refine theoretical understanding and inform decisions for a redesign (Reeves & Hedberg, 2003). Anyone who has been involved in designing an educational intervention of any sort will know that there can be a major gap between the intention and the actual outcomes. Van den Akker (2003) makes a distinction between three representations of a curriculum: the intended, the implemented, and the attained. The same distinction is also useful from the educational design research point of view, as Table 1 illustrates.

In an educational design research process, interventions are carried out in actual settings instead of a controlled test environment. This can be seen as a limitation, but, on the other hand, the strength of educational design research is that it is authentic and provides information of how designs work in real life, not only in ideal, controlled settings that have little to do with the complexity of an actual classroom (Collins, Joseph, & Bielaczyc, 2004; Reeves, 2006). In the current study, this was seen as especially important for adult learners who were taking the program alongside very demanding and hectic work schedules, had family responsibilities, and whose learning was thus affected by the whole spectrum of real life events. Therefore, the implemented and attained forms of the intervention (see Table 1) are directly influenced by the complexity of the real life context within which it was implemented.

In the following sections, the aforementioned factors will be considered in more detail, specifically by introducing the educational problem that necessitated the design research process as well as the real life context where the intervention would take place; by describing the intended intervention and introducing the design principles that were used, explaining why they were chosen, and describing what the intervention was intended to achieve; and finally by presenting an analysis of the implemented and attained intervention.

An educational design research process begins with identifying and analysing the problem or need (see Figure 1). In this case, TAMK was to develop and deliver a fully online postgraduate certificate for teaching in higher education for a cohort of international higher education practitioners in the United Arab Emirates.

For the past decade, TAMK had been developing more engaging and authentic ways of conducting online pedagogical qualification studies for in-service teachers, which had yielded very promising results with regard to using social media tools and authentic learning approaches (Teräs & Myllylä, 2011). At the same time, Higher Colleges of Technology (HCT) in the United Arab Emirates (UAE), a major provider of higher education in the Middle East, was looking for ways of supporting the professional development of its teaching faculty in the areas of teaching and learning, assessment, and innovative use of new pedagogies and technologies. All the teachers worked on-campus, but the role of technology in classroom and blended approaches to teaching and learning was constantly increasing.

The model that had worked well for in-service teachers of vocational subjects in Finland (Teräs & Myllylä, 2011) was used as a starting-point for development. However, the context in the UAE was in many ways very different, and the original Finnish teacher education program would need to be developed further to meet the needs of the diverse group of learners. Therefore, the first step of the educational design research process was to identify these needs. This stage involved negotiations with HCT representatives, as well as a web conference where all the interested faculty members were invited to share their views and express their expectations regarding the program. These discussions were combined with a curriculum analysis of the original program to help customize the content adequately.

An important driver of the need for professional development for teaching faculty was the ongoing paradigm shift towards a networked knowledge society (e.g., Castells, 2007; Siemens, 2005) and its implications for education. The education-related discussion in recent years has been dominated by this construct; however, the focus has often been on individual phenomena rather than on developing a holistic understanding of the underlying paradigm. This discussion can be very challenging for educators, especially as it is often underpinned by an undefined but insistent demand to change in order not to fall behind. Therefore, one of the aims in developing the program was to demystify this discourse and offer a forum for critical and informed discussion. Futures studies and trends were also examined, such as the Horizon Report (Johnson, Smith, Willis, Levine, & Haywood, 2011), which regularly predicts a set of key trends, based on a yearly analysis of current articles, interviews, papers, and new research, that are considered to be the major drivers of educational technology adoption during the next five years. To avoid a superficial showcase of trends and technologies, the aim was to combine theoretical knowledge of teaching, learning, and assessment with key trends in education, and to bring both into practice.

The next step was to develop a prototype solution, informed by existing theoretical knowledge, design principles, and technological solutions.

The principles of authentic e-learning as defined by Herrington, Reeves, and Oliver (2010) were chosen as the framework for the design. Firstly, it was clear that the approach of a program that aims to transform teaching practice could not follow a traditional, top-down, one-to-many content delivery model that characterized the industrial age paradigm of learning (Castells, 2007). Secondly, it was crucial to ensure that the learning design would not fall into the pit that is extremely common in online learning: simply adapting new technology to traditional systems, practices, and methods (Herrington et al., 2010), rather than using authentic learning principles that complement the affordances and characteristics of online learning.

The designers were careful to avoid the pitfalls often identified with regard to teacher professional development. Very often, professional development is implemented rather poorly, typically in the form of isolated workshops that concentrate on developing teachers’ technical skills with specific technologies (Dabner, Davis, & Daka, 2012). Many teacher professional development programs remain superficial and fail to provide ongoing support for teachers when they attempt to apply the new curricula or pedagogies (Dede, Ketelhut, Whitehouse, Breit, & McCloskey, 2009). The information is fragmented and does not fit with the professional contexts of the participants (Dede et al., 2009; Dabner et al., 2012). There are often limited opportunities for participants to interact with each other (Cho & Rathburn, 2013). Therefore, impactful professional development opportunities that lead not only to increased knowledge, but also to improved teaching practice are very much needed (Dede et al., 2009; Ostashewski, Moisey, & Reid, 2011). The principles of authentic e-learning were seen as a useful design framework in order to meet these requirements.

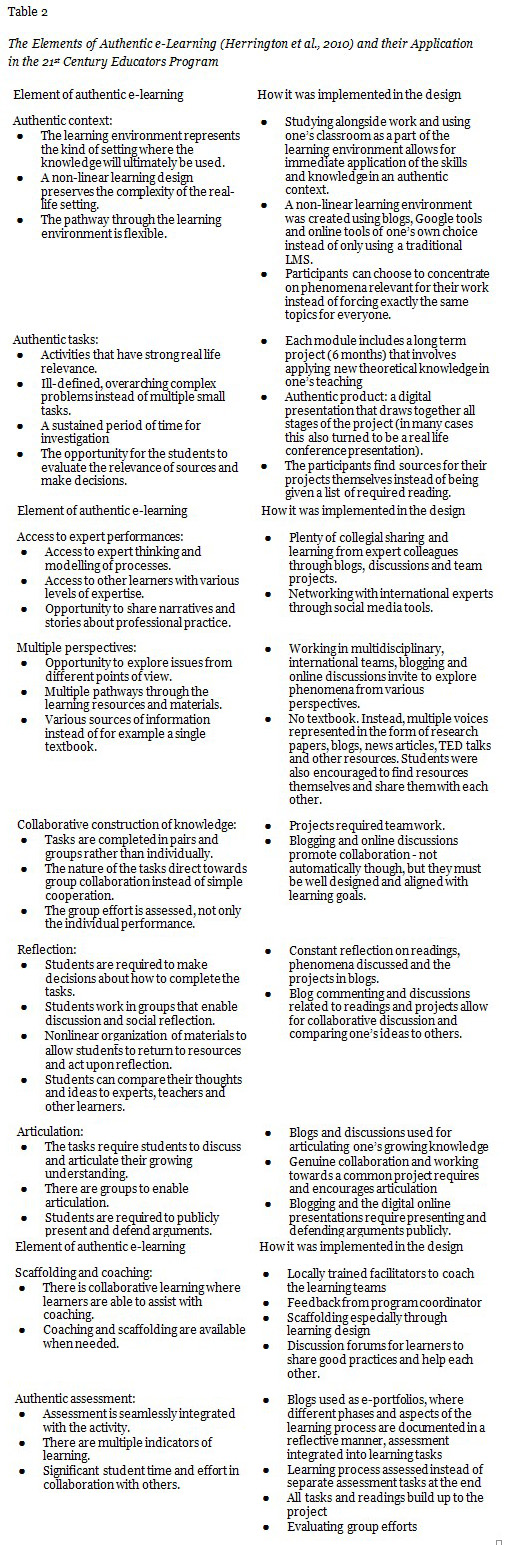

Table 2 illustrates the characteristics of nine principles of authentic e-learning and how each was instantiated in the learning design of the program.

Once the intended intervention or the prototype of the solution was designed, it was evaluated and tested internally at TAMK. The design team guided a review team through the program, documented their recommendations, and implemented the final changes before the program went live in September 2011.

Divided into three modules, the program was designed to run through three semesters. After each module, a survey was conducted to evaluate the appropriateness and effectiveness of the intervention. This section discusses the first iteration and evaluation and how it was used to inform the redesign.

The first formative evaluation of the program was conducted in January 2012. The method chosen was an online survey built with an online survey tool (SurveyMonkey). The survey included both multiple choice and open-ended questions. The quantitative data were used to obtain an overview of the trends, and the qualitative data were then analysed in more detail. Out of the 30 participants who completed the module and the nine facilitators involved, 27 people completed the survey.

A thematic analysis was conducted of the data received through the open-ended questions. A framework for the analysis was constructed using the elements of authentic e-learning for the categorization of the data. The respondents’ comments were first arranged into the nine categories, according to the element of authentic e-learning to which they best belonged. In the second phase of the analysis, the categorized comments were sorted into challenges and opportunities regarding each given element. Once all the responses were categorized, recurring themes were sought and they were arranged thematically. The findings of the first evaluation have been reported earlier (Teräs, Teräs, & Herrington, 2012; Teräs, 2013), allowing this paper to concentrate on the most significant challenges that were identified, and explain how they informed the iterative design research process.
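The two-phase categorization described above can be illustrated with a minimal Python sketch. It is not the procedure or tooling used in the study, where the coding was done by the researchers. The names `code_comments` and `elements_with` are hypothetical, the element labels follow Herrington, Reeves, and Oliver (2010) with this paper's wording for "scaffolding and coaching", and the three sample comments are respondent quotations that appear later in this paper, with code assignments chosen here purely for illustration.

```python
# The nine elements of authentic e-learning (Herrington, Reeves, & Oliver, 2010).
ELEMENTS = (
    "authentic context",
    "authentic tasks",
    "expert performances and modelling",
    "multiple roles and perspectives",
    "collaborative construction of knowledge",
    "reflection",
    "articulation",
    "scaffolding and coaching",
    "authentic assessment",
)
POLARITIES = ("challenge", "opportunity")


def code_comments(coded):
    """Phase 1 and 2: file each comment under its element, then under
    'challenge' or 'opportunity' within that element."""
    framework = {e: {p: [] for p in POLARITIES} for e in ELEMENTS}
    for comment, element, polarity in coded:
        if element not in framework:
            raise ValueError(f"unknown element: {element!r}")
        if polarity not in POLARITIES:
            raise ValueError(f"unknown polarity: {polarity!r}")
        framework[element][polarity].append(comment)
    return framework


def elements_with(framework, polarity):
    """List the elements that received at least one comment of this polarity."""
    return [e for e in ELEMENTS if framework[e][polarity]]


# Illustrative input only: the code assignments below are assumptions.
coded = [
    ("Too many participants think all they need to do is make a post.",
     "collaborative construction of knowledge", "challenge"),
    ("reflecting what went wrong with my project",
     "reflection", "opportunity"),
    ("they are doing a great job considering the little time that they have",
     "scaffolding and coaching", "challenge"),
]

framework = code_comments(coded)
print(elements_with(framework, "challenge"))
print(elements_with(framework, "opportunity"))
```

Keeping the element and the challenge/opportunity label as separate keys mirrors the two phases of the analysis, so recurring themes can then be sought within each cell of the framework.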

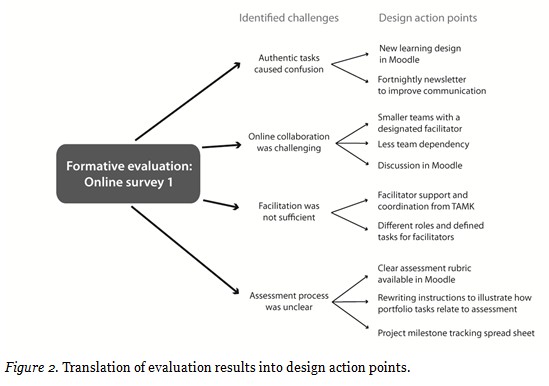

The analysis of the data revealed that four elements in particular had caused challenges for the participants: authentic tasks, collaborative construction of knowledge, scaffolding and coaching, and authentic assessment. The open-ended quality of authentic tasks was new and challenging for many, and it had often been unclear to the participants what was expected of them. The same problem was reflected in the uncertainty with regard to authentic assessment: The communication of the intertwined nature of the authentic tasks and assessment had been ambiguous and the idea of assessing the learning process instead of clearly defined assessment tasks remained unclear. Moreover, collaboration and working in teams had been difficult. Team members not adhering to schedules, communication difficulties, and different expectations caused friction. Scaffolding at the metacognitive level was also often seen as insufficient when more active facilitator directions and feedback were expected (Teräs, Teräs, & Herrington, 2012).

The first evaluation stage was followed by translating the gathered information into a refined redesign. As McKenney and Reeves (2012) point out, the challenge in educational design research is to redesign in a way that remains true to the original intervention goals. This requires careful reflection instead of hastily jumping to conclusions with regard to the usefulness of the intervention. For example, although in this study there appeared to be uncertainty and dubiety regarding authentic tasks, this should not automatically lead to the conclusion that traditional assignments are “better” than authentic tasks. Indeed, a closer examination of the nature of the challenges suggested room for improvement in the implementation of the authentic e-learning principles in the learning design.

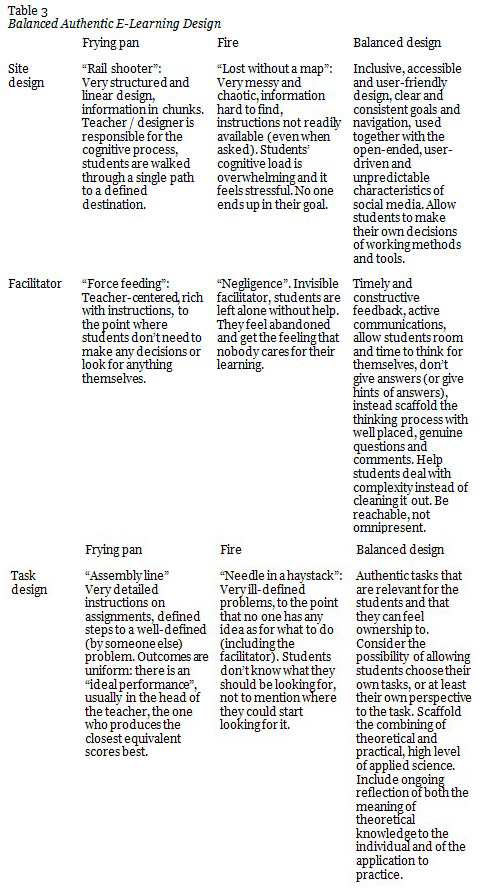

The analysis of the data emphasised the crucial role of scaffolding and coaching in the success of an authentic e-learning design. Three areas (site design, facilitator’s role, and learning task design) were identified where a balance needs to be sought, in order to avoid a jump from the frying pan into the fire—in other words, trying to change an unwanted situation by going to the other extreme that is equally dangerous, or that sacrifices the principles upon which the approach was based. Each of the pitfalls is illustrated in Table 3 by a metaphor: As for site design, the extremes are “rail shooter” (the term refers to a type of video game where the player has no control over the path of her or his avatar but is taken from beginning to end as if tied to rails) and a “lost without a map” scenario. The facilitator should avoid “force feeding” as well as “negligence”, and the task design should resemble neither “assembly line” nor “needle in a haystack”.

The redesign of the learning environment involved practical adjustments that are described in more detail in the following section.

One of the biggest individual challenges regarding the redesign was the learning management system (LMS) used. The LMS that had been in use during Module 1 did not seem to lend itself easily to the constructivist, authentic e-learning design. Being rather content-driven, it allowed for little flexibility in the way the site could be presented, and the embedded tools, such as the synchronous meeting tool, were extremely teacher-centric. Relying fully on social media was not an option, due to privacy and legal issues related to assessment and student information. Therefore, the bold decision was made to change the learning management system in the middle of the program, and a new learning design was implemented in the Moodle LMS. The aim of the LMS transition was to improve communication and reduce the confusion with the learning tasks and assessment with the help of a clearer design, as well as to provide more user-centric forums for discussion. Moreover, a fortnightly email newsletter was introduced. The newsletter was visually appealing and informal in tone, with the twofold purpose of improving communication between the program leaders, facilitators, and participants, and promoting a sense of community by introducing brief participant and facilitator biographies, news, and examples of participants’ work.

In order to better support online collaboration, the teams were restructured. They were reduced in size and each small team was allocated a designated facilitator. Moodle discussion forums were established to allow for spontaneous discussion related to the topic at hand. In addition to these measures that aimed to better support collaboration, the team project of Module 2 was redesigned to be less dependent on individual team members’ performance.

To clarify the authentic assessment process, three scaffolding measures were employed. A clearer assessment rubric specifically adapted for blog writing and online collaboration was introduced. Moreover, the instructions for the project and blog writing tasks were rewritten in a way that illustrated more clearly how they formed a reflective part of the assessment. Finally, a Google spreadsheet for project milestone tracking was linked into Moodle. The spreadsheet allowed for the participants to mark completed milestones themselves, thus also making their progress visible for other team members. They could also share information about the scope and goals of their project through the spreadsheet, as well as share addresses to their blogs. The challenges related to facilitation were addressed in two ways. Facilitators’ tasks were reorganized to reduce the workload and to clarify responsibilities, and the team facilitators were offered more systematic support from TAMK.

Figure 2 illustrates the way the identified challenges were translated into redesign.

A new survey was conducted at the end of Module 2, in order to evaluate the adequacy of the redesign measures, and identify new challenges and successes. The methods of gathering, thematising, and analysing data were similar to the first survey. This time, 10 participants out of the 19 that completed the module responded to the survey. Responding to both surveys was optional, so the significant decrease in the response rate is noteworthy. It might indicate that people had fewer pressing concerns after the second module and did not therefore feel the need to respond to voice their concerns. It could, of course, also suggest decreased interest, perhaps due to disappointment regarding how impactful the earlier feedback was. However, judging by the positive trend identified in the responses, this would seem less probable.
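The decrease in the response rate can be made concrete with a short calculation. The sketch below uses only the counts reported in this paper; the helper name `response_rate` is hypothetical. It assumes that the first survey was open to all 30 participants and nine facilitators, and that the second survey is measured against the 19 participants who completed Module 2, since facilitator numbers for that survey are not reported.

```python
def response_rate(respondents, invited):
    """Return the share of invited people who responded (0.0 to 1.0)."""
    if invited <= 0:
        raise ValueError("invited must be positive")
    return respondents / invited


# Survey 1 (January 2012): 27 responses from 30 participants + 9 facilitators.
survey_1_rate = response_rate(27, 30 + 9)
# Survey 2 (end of Module 2): 10 responses from 19 participants.
survey_2_rate = response_rate(10, 19)

print(f"Survey 1: {survey_1_rate:.1%}")  # Survey 1: 69.2%
print(f"Survey 2: {survey_2_rate:.1%}")  # Survey 2: 52.6%
```

Because the two denominators are defined differently, the comparison is indicative rather than exact.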

In the following section, the adequacy of the redesign is addressed first, followed by a discussion of the new challenges and successes revealed by the data.

Authentic tasks was an area that was addressed through several changes in the learning design. This proved to be successful: Nine out of 10 respondents found the requirements of the tasks clearer compared to the first module. The majority felt that the newsletter had brought added value. All respondents found Moodle a more suitable and more intuitive learning management system for the purposes of the program. Two respondents would still have hoped for clearer instructions, whereas some had found it difficult to implement the authentic task in practice.

However, this time the successful areas outweighed the challenges. Almost all the respondents reported that working on the project had been a highly rewarding learning experience. Learning to integrate relevant technology in one’s own teaching, improving one’s teaching skills with new ideas and methods, as well as positive impact on student experience were mentioned in the comments. This also became evident in the blogs where the participants continuously reflected upon the different stages of the project, in relation to theoretical knowledge and experiences from implementing and evaluating it. It is noteworthy that not all projects ended up being successes—sometimes they simply did not work out as planned. However, this also constituted a useful and rewarding learning experience—one of the respondents mentioned that the best part of the module had been “reflecting what went wrong with my project”.

Collaborative construction of knowledge also improved, but remained one of the most challenging areas. Half of the respondents found that collaboration had improved, whereas the other half found no difference. Six out of 10 found that the discussion forums in Moodle supported collaboration, mainly by allowing informal discussion and interaction between people from different teams. Sharing experiences and realizing that others struggled with similar questions had been very important for some of the respondents. However, others felt the discussions had not added value.

As for remaining challenges, two themes could be identified. There appeared to be a tendency of perceiving some other participants as hindrances to collaboration, either due to lack of knowledge, interest, experience, commitment, or engagement. As one of the respondents put it: “Too many participants think all they need to do is make a post. They don’t seem to try to engage in the discussion or respond to what others say.”

Respondents reported that peers had not provided feedback, or that they did not offer in-depth contributions or engage in discussion. Some participants hoped there would have been a way to find colleagues with similar working methods as themselves and form teams with them. One respondent even doubted that collaboration could ever be successful between people with such different levels of experience.

The few suggestions for better supporting collaboration all involved increasing the number of synchronous meetings, for example, through Google Hangouts. This had indeed been the intention in the redesign; however, the way these meetings were realized in the end varied greatly. Some facilitators made much more systematic use of them than others. Some teams had found it hard to find common timeslots. This would probably always be the case in a program that is taken alongside work and other life commitments. A development consideration for the future might be to include more regular, pre-scheduled synchronous meetings, with the recommendation to attend a certain number of them.

Some participants felt that collaboration had greatly improved, predominantly due to the introduction of new collaboration channels. It could also be seen in the data that making the tasks less heavily dependent on collaboration made the process easier. However, the design team saw this as a slight compromise in the authentic e-learning design: Collaboration should not be an optional extra, but a built-in requirement for the successful completion of the authentic task (Herrington, Reeves, & Oliver, 2010). Therefore, reducing the dependency on the team was “the easy way out”. Collaborative learning is in many ways more demanding than traditional individual ways, even more so in online environments, so it is very easy for learning designers and teachers to simply revert to traditional practices. We feel, though, that a closer examination of the element of scaffolding and coaching and development of appropriate design principles is a more promising way forward in order to ensure that students can benefit from the strengths of collaborative endeavor.

The redesign regarding scaffolding and coaching turned out to be partly very successful, partly less so. Overall it could be said that the redesign of scaffolding—the aspects that could be improved with learning design—resulted in desired outcomes, whereas coaching—the aspect that required changes in the facilitators’ work—was more difficult to improve. It was quite obvious that the new learning design was successful in reducing the anxiety and confusion that some participants had experienced during Module 1. The balance that was sought between the “rail shooter” and the “lost without a map” scenarios (see Table 3) seemed to be well achieved. However, the same balance was not found with regard to facilitation. The comments concerning facilitation displayed considerable variation. Some would not stop praising their team facilitator, whereas others felt that the team had been mostly working on their own.

The two main themes observed in the data were: 1) a need for more timely and better-focussed feedback to support the learning process, and 2) a need for more active involvement of the facilitators to improve the sense of community. The respondents suggested that the facilitators’ workload would have to be adjusted more adequately (“they are doing a great job considering the little time that they have”), or that they should receive more training. Although the workload issue was beyond the influence of the design team, the important observation was that the role of the facilitator is central to a successful authentic e-learning process, and it should be ensured that facilitators have sufficient resources, relevant knowledge and experience, and a sound understanding of the authentic e-learning model to be able to avoid the extremes of “force-feeding” and “negligence” as described in Table 3.

The authentic assessment in Module 2 consisted of a development project where the teachers were requested to choose a technology that they would study, integrate in their teaching, and evaluate. They were to search for literature and earlier research regarding the technology, write an implementation plan of how and why they would be using it, reflect upon the different stages of the project in their blog, and, in the end, design and share an interactive electronic presentation about the project. During the course of the module, theoretical background regarding online pedagogies was also introduced and the participants reflected upon the theory and its applicability in their project in their blogs. The process was explained in detail, and the milestone tracking tool was used to facilitate keeping up to date and to offer a support structure to the process. Compared to Module 1, there was significantly more scaffolding in place; however, the project still fulfilled the requisites for an authentic assessment task: The interactive presentation was a polished, refined product; students participated in the activity for an extended period of time (6 months), and the students were assessed on the product of an in-depth investigation.

The second evaluation indicated that all the areas that were redesigned had improved, and no new major challenges were identified. With regard to the five other elements of authentic e-learning, the most important observations were related to reflection and articulation. The ways in which the personal blogs were used in the program seemed to support these areas very well. For many, writing the blog was the most rewarding learning experience as it supported ongoing reflection in a systematic way. The way the blog and other activities contributed to the project and supported reflection was also appreciated:

I enjoyed keeping track of the project and now have the possibility to look back. For me, that is a new experience and one that I appreciate, i.e. to have written down a teaching process and having shared it publicly.

The idea of public articulation of one’s growing understanding was at first new and challenging to some participants, but it soon proved to be beneficial. In the words of one of the respondents:

Writing my blog was not always easy as my learning process was now public. However, I have appreciated the challenge and regard it as one of the best learning opportunities of this course. It has made me reflect a lot on teaching practices.

Thus a fruitful connection could also be found between articulation and reflective practice: Being encouraged to continuously make the learning process public supported the formation of a practice of reflection. Considering Schön’s (1983) definitions of reflection-in-action, the type of reflection that takes place while we work, and reflection-on-action, where we look back and evaluate our own performance, the process of articulation could also be seen as a way of making reflection-in-action visible and public. Traditionally, students are required to publish polished, well-structured arguments that are evaluated and assessed. Learners may therefore at first feel quite uncomfortable with publishing unfinished thoughts, initial ideas, and works in progress, just as the above quote suggests. However, it seems that this type of pedagogical use of blogs and discussion forums might be more effective in supporting the systematic development of reflective skills, which in turn seems to have a positive impact on professional development. When asked what the most rewarding experience during the program was, one of the respondents said the following: “Writing my blog, because it gave me the opportunity to reflect. I appreciate that as in my day to day I don’t have much time for reflection and it is an essential part of learning and personal/professional development.”

As for access to expert performances and multiple perspectives, some participants found the discussion forums very useful. The forums provided an informal channel for collegial sharing and support. Some of the participants made extensive use of the forums, whereas others did not find them as useful and chose not to take part. The discussions were not a formal requirement, but the opportunity was provided on a regular basis. Keeping in mind that the participants were busy educators studying alongside work, it is noteworthy that so many took the opportunity to engage. This suggests that the need for an informal way of interacting and sharing with colleagues is genuine and should be taken into account in the learning design.

This paper has described the use of an educational design research process in finding the right balance in an authentic e-learning design of a fully online postgraduate certificate program. Educational design research has proved to be a very fruitful approach for designing, implementing, and improving an educational intervention in a complex setting. It allows for rapid prototyping and agile, targeted redesign through iterative cycles in order to gain a deeper understanding of the learner experience during the process. The iterative cycles of implementation and revision enable the learning design to be user-centered and significantly improved where required, and the strengths of the program can be identified at an early stage in order to further enhance the successful elements.

When implementing a fully online authentic e-learning program, it is helpful to identify the challenges and potential pitfalls. It is worthwhile to recognize and be aware of the extremes—the frying pans and the fires—and resist the temptation of hasty corrective measures. Authentic e-learning differs in many ways from some traditional educational approaches to which the students may be accustomed. Therefore, especially at the beginning of the learning process, the students may experience difficulties with some of the elements of authentic e-learning. These challenges are best addressed with adequate scaffolding and coaching measures. We close by suggesting four strategies for planning and implementing effective scaffolding and coaching to enhance the authentic e-learning experience.

Authentic e-learning was found to be very useful as a framework for both design and evaluation. The authentic approach allowed for a better transfer of learning and impact on teaching practice: Instead of merely gaining knowledge of pedagogy or learning technologies, or even learning how to use new teaching methods and technologies in practice, the participants had the chance to fully incorporate these into their teaching on a deeper level and thus transform their practice.

Anderson, T., & Shattuck, J. (2012). Design-based research: A decade of progress in education research? Educational Researcher, 41(1), 16-25.

Brown, A. L. (1992). Design experiments: Theoretical and methodological challenges in creating complex interventions. Journal of the Learning Sciences, 2(2), 141-178.

Castells, M. (2007). Communication, power and counter-power in the network society. International Journal of Communication, 1(1), 238-266.

Cho, M., & Rathbun, G. (2013). Implementing teacher-centred online teacher professional development (oTPD) programme in higher education: A case study. Innovations in Education and Teaching International, 50(2), 144-156.

Cobb, P., Confrey, J., diSessa, A., Lehrer, R., & Schauble, L. (2003). Design experiments in educational research. Educational Researcher, 32(1), 9-13.

Collins, A., Joseph, D., & Bielaczyc, K. (2004). Design research: Theoretical and methodological issues. The Journal of the Learning Sciences, 13(1), 15-42.

Dabner, N., Davis, N., & Zaka, P. (2012). Authentic project-based design of professional development for teachers studying online and blended teaching. Contemporary Issues in Technology and Teacher Education, 12(1), 71-114.

Dede, C., Ketelhut, D. J., Whitehouse, P., Breit, L., & McCloskey, E. M. (2009). A research agenda for online teacher professional development. Journal of Teacher Education, 60, 8-19.

Herrington, J., Reeves, T., & Oliver, R. (2010). A guide to authentic e-learning. London: Routledge.

Johnson, L., Smith, R., Willis, H., Levine, A., & Haywood, K. (2011). The 2011 horizon report. Austin, Texas: NMC. Retrieved from http://www.nmc.org/publications/2011-horizon-report

Kelly, A. E. (2003). Research as design. Educational Researcher, 32(1), 3-4.

McKenney, S., & Reeves, T. C. (2012). Conducting educational design research. New York: Routledge.

Ostashewski, N., Moisey, S., & Reid, D. (2011). Applying constructionist principles to online teacher professional development. The International Review of Research in Open and Distance Learning, 12(6), 143-155.

Plomp, T. (2007). Educational design research: An introduction. In T. Plomp & N. Nieveen (Eds.), An introduction to educational design research. SLO: NICD.

Reeves, T. C. (1995). Questioning the questions of instructional technology research. Invited Peter Dean Lecture, National Convention of the Association for Educational Communications and Technology. Anaheim, CA. Abbreviated version retrieved from http://itforum.coe.uga.edu/paper5/paper5a.html

Reeves, T. C. (1999). A research agenda for interactive learning in the new millennium. In P. Kommers & G. Richards (Eds.), Proceedings of EdMedia 1999 (pp. 15-20). Norfolk, VA: AACE.

Reeves, T. C. (2006). Design research from a technology perspective. In J. van den Akker, K. Gravemeijer, S. McKenney & N. Nieveen (Eds.), Educational design research (pp. 52-66). London: Routledge.

Reeves, T. C. (2011). Can educational research be both rigorous and relevant? Educational Designer, 1(4).

Reeves, T. C., & Hedberg, J. G. (2003). Interactive learning systems evaluation. Englewood Cliffs, NJ: Educational Technology.

Reeves, T., McKenney, S., & Herrington, J. (2011). Publishing and perishing: The critical importance of educational design research. Australasian Journal of Educational Technology, 27(1), 55-65.

Schön, D. A. (1983). The reflective practitioner: How professionals think in action. London: Temple Smith.

Siemens, G. (2005). Connectivism: A learning theory for the digital age. International Journal of Instructional Technology and Distance Learning, 2(1), 3-10.

Teräs, H. (2013). Dealing with “learning culture shock” in multicultural authentic e-learning. In T. Bastiaens & G. Marks (Eds.), Proceedings of World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education 2013 (pp. 442-472). Chesapeake, VA: AACE.

Teräs, H., & Myllylä, M. (2011). Educating teachers for the knowledge society: Social media, authentic learning and communities of practice. In S. Barton et al. (Eds.), Proceedings of Global Learn 2011 (pp. 1012-1020). AACE.

Teräs, H., Teräs, M., & Herrington, J. (2012). A reality check: Taking authentic e-learning from design to implementation. In T. Amiel & B. Wilson (Eds.), Proceedings of World Conference on Educational Multimedia, Hypermedia and Telecommunications 2012 (pp. 2219-2228). Chesapeake, VA: AACE.

van den Akker, J. (1999). Principles and methods of development research. In J. van den Akker, N. Nieveen, R. M. Branch, K. L. Gustafson & T. Plomp (Eds.), Design methodology and developmental research in education and training (pp. 1-14). The Netherlands: Kluwer.

van den Akker, J. (2003). Curriculum perspectives: An introduction. In J. van den Akker, W. Kuiper & U. Hameyer (Eds.), Curriculum landscapes and trends (pp. 1-11). The Netherlands: Kluwer.