Learning objects designed to be reusable must satisfy requirements concerning their granularity and their independence from concrete contexts of use. These requirements also imply that the definition of learning object “usability,” and the techniques used to carry out their “usability evaluation,” must differ substantially from those commonly used to characterize and evaluate the usability of conventional educational applications. In this article, we discuss a specific characterization of learning object usability that emphasizes “reusability,” the key property of learning objects residing in repositories. Learning object reusability is described as the possibility and adequacy for the object to be used in prospective educational settings, so that usability and reusability are considered two interrelated, and in many cases conflicting, properties of learning objects. Building on this characterization of the two properties, we present a method to evaluate the usability of specific learning objects.

Keywords: Learning objects; reusability; usability evaluation; learning technology standards

Growing interest in Web-based learning has accelerated steps towards the standardization of electronic learning contents (Anido et al., 2002). Work on defining reference models for learning objects is currently underway, with the ultimate aim of facilitating their interchangeability, composition, and even their use in highly personalized learning contexts (Martinez, 2001). Diverse organizations supporting standardization are converging towards the Sharable Content Object Reference Model (SCORM) (ADL, 2001a). The most recent version of SCORM (1.2) comprises both a model for the description and aggregation of contents (ADL, 2001b) and a specification for run-time interaction between client applications and Learning Management Systems (ADL, 2001c). SCORM, the IMS Global Learning Consortium (http://www.imsglobal.org/), and other initiatives conform to the Learning Object Metadata (LOM) standard (IEEE, 2002), and IMS additionally provides an even more comprehensive collection of specifications. The concept of reusable learning objects (Wiley, 2001) is a key driver of current efforts towards the standardization and specification of Web-based educational content. This industry is growing, and it is growing fast.

Neither the concept of a learning object nor its realization in the SCORM model is free of controversy (Polsani, 2003; Bohl et al., 2002). Consequently, studies of learning objects must first commit to a concrete definition of the term. According to Williams (2000), such clarification is of central importance when approaching evaluation, as we do here, since evaluation criteria must be formulated according to some prior definition of the desirable characteristics of learning objects. As pointed out by Sosteric and Hesemeier (2002), the definitions found in specification documents are too loose or vague to serve as a basis for determining the key characteristics of learning objects. For example, the IEEE LOM standard defines a learning object as “any entity – digital or non-digital – that may be used for learning, education or training” (IEEE, 2002), a definition that specifies no property beyond the mere fact of use in educational contexts. Paradoxically, such vague definitions may result in learning objects that are not designed for reusability, because the “everything goes” principle neglects the fact that designing learning objects requires following specific guidelines, such as those described by Boyle (2003), that allow them to be used in diverse educational contexts.

Our focus here is on learning objects intended for reuse, which would typically be published in “learning object repositories” (Richards et al., 2002). Such objects require more precise definitions that explicitly consider reusability. As a starting point, we use the definition given by Polsani (2003): “A Learning Object is an independent and self-standing unit of learning content that is predisposed to reuse in multiple instructional contexts.” This definition is consistent with those given by Sosteric and Hesemeier (2002) and Hamel and Ryan-Jones (2002). However, we add two constraints to this definition: first, learning objects are “digital entities” (i.e., digital files or streams); and second, they have an associated “metadata record” that describes the potential contexts in which they may be used. These metadata records contain, among other things, descriptions of the authorship and of the technical and educational properties of the learning object, according to the information elements described in the above-mentioned specifications.

The characteristic of “predisposition to reuse” must be analyzed further to derive more concrete properties. Learning object specifications often refer to four: 1) durability; 2) interoperability; 3) accessibility; and 4) reusability. The first three are essentially technical. “Durability” and “interoperability” relate to software and hardware platform independence, which can be obtained by adhering to public Web languages and conventions. The third characteristic, “accessibility,” is understood in this context as the capability of being searched for and located, which is achieved by providing an appropriate searchable metadata record.

The fourth characteristic, “reusability,” thus remains the most difficult to define, since it relates mainly to instructional design rather than to the digital formats or content structure that are the main concern of interoperability and accessibility. Additionally, the desirable “granularity” of a learning object is determined by the reusability requirements imposed on it: objects must be decoupled from each other (Boyle, 2003) to achieve both independence from educational context and technical independence (i.e., not being linked to other digital contents). Consequently, several authors argue that granularity should be limited to a single concept or a small number of related concepts (Polsani, 2003), or to a single educational objective (Hamel and Ryan-Jones, 2002). This is also consistent with the position of Wiley, Gibbons, and Recker (2002), who regard coarser-grained learning objects as more difficult to combine because of the multiple layers of elements integrated in their design (e.g., instructional approach or learning design).

Reusability is therefore an essential, and arguably the most important, characteristic of learning objects. However, since reusability refers to prospective, future usage scenarios, it is difficult to measure. It follows that the specification of possible usage contexts determines the degree of reusability of a learning object, and that overall reusability may be measured as the aggregated degree of adequacy for each of the specified contexts. Unfortunately, both estimating that degree of adequacy and determining the possible contexts are challenging tasks. Our research takes as its point of departure Feldstein’s (2002) view, in which the “usability” of a learning object is defined as a context-dependent measure of “goodness.” This gives rise to the problem that a given learning object is likely to be quite usable in one context or set of contexts, but less usable, or simply inappropriate, in others. We therefore consider usability and reusability as two somewhat conflicting properties that must be balanced when designing learning objects.

Building on preliminary results described by Sicilia, García, and Aedo (2003), we propose measures of learning object quality focused on reusability and usability. The article is structured as follows. First, as the point of departure in the search for such measures, we discuss the relationship between the usability and reusability of learning objects. Then, in light of that relationship, we sketch a concrete “discount” evaluation method for learning objects. Finally, we present conclusions and future research directions.

According to Feldstein (2002), “Usability in e-Learning is defined by the ability of a learning object to support or enable [ . . . ] a very particular concrete cognitive goal.” This specific sense of the term “usability” places “very particular goals” at the center of the evaluation; consequently, the context of the evaluation, including the pedagogical or instructional intention, must be predetermined to some extent. In theory, a finite set $C$ of possible contexts of use may be identified from the specifications in an appropriately defined metadata record. Each element $c_i \in C$ is then a “possible context of use.” For the evaluation to be feasible, at least the cognitive goal and some characterization of the users must be described through metadata for each of those contexts. Some form of usability evaluation must then be carried out for each context. The usability of a given learning object may be appropriate in one context but inappropriate in others. For example, a learning object about the inheritance mechanism in Java may be highly appropriate for the objective of a first programming course if it provides only the essential information and is written with the understanding that prospective users are novices. That same learning object, however, would clearly not be appropriate for the goal of preparing senior engineers for an official Java certification test.
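To make the set $C$ concrete, the following Python sketch models each declared context of use as a pair of cognitive goal and learner profile, the two minimum elements the text requires for evaluation to be feasible. The `Context` class, the `contexts_of_use` function, and the context descriptions are hypothetical illustrations, not elements of any metadata standard.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class Context:
    """One possible context of use c_i (hypothetical structure, not a LOM element)."""
    cognitive_goal: Optional[str]
    learner_profile: Optional[str]

    def is_evaluable(self) -> bool:
        # Usability evaluation is only feasible when both the goal and
        # some characterization of the users are described.
        return bool(self.cognitive_goal) and bool(self.learner_profile)


def contexts_of_use(declared: List[Context]) -> FrozenSet[Context]:
    """Build the finite set C, rejecting declared contexts that cannot be evaluated."""
    incomplete = [c for c in declared if not c.is_evaluable()]
    if incomplete:
        raise ValueError(f"{len(incomplete)} declared context(s) lack a goal or a learner profile")
    return frozenset(declared)


# Hypothetical declared contexts for the Java inheritance example.
C = contexts_of_use([
    Context("understand inheritance in a first programming course", "novice programmers"),
    Context("prepare for an official Java certification test", "senior engineers"),
])
print(len(C))  # 2
```

Each element of $C$ would then be evaluated separately; the same object could be well suited to the first context and poorly suited to the second.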

For simplicity’s sake, suppose that the usability evaluation procedure yields a value in the interval [-1, 1], where negative values indicate significant usability problems. For a given context $c_i$, we thus have an associated evaluation outcome denoted $\mathit{Usability}(c_i)$. Since these outcomes are typically determined before any actual use of the learning object in an educational application, they are only estimates, subject to subsequent refinement, as discussed later. Nonetheless, they serve as a valuable pre-assessment.

In abstract and idealized terms, the above relationship between usability and reusability may be described mathematically as:

$$\mathit{Reusability} = \left( \sum_{c_i \in C} \mathit{Usability}(c_i) \right) \cdot |C| \qquad (1)$$

Expression (1) describes reusability as the aggregation of the adequacy of the learning object for each of its possible contexts of use, multiplied by the number of those contexts. Note that the possible values of reusability therefore depend on the cardinality of the set of possible contexts and, ultimately, on the scope of the object as specified in its metadata record. We do not claim that this formula is the key to learning object evaluation, but it has descriptive properties that are useful when reasoning about evaluation methods that explicitly consider reusability.
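Expression (1) can be sketched in Python as follows. The sketch reads “aggregation” as a plain sum of the per-context estimates, which is an assumption, and enforces the [-1, 1] range introduced above. The function name and the sample scores are illustrative only.

```python
from typing import Dict


def reusability(usability_by_context: Dict[str, float]) -> float:
    """Expression (1): the sum of Usability(c_i) over C, multiplied by |C|."""
    for context, score in usability_by_context.items():
        if not -1.0 <= score <= 1.0:
            raise ValueError(f"Usability({context}) = {score} lies outside [-1, 1]")
    return len(usability_by_context) * sum(usability_by_context.values())


# Hypothetical estimates: object a declares one well-suited context, while
# object b declares ten contexts but is only usable in two of them.
a = {"c1": 1.0}
b = {f"c{i}": (0.5 if i <= 2 else -0.5) for i in range(1, 11)}
print(reusability(a))  # 1.0
print(reusability(b))  # -30.0
```

Because negative estimates are allowed, declaring many contexts in which the object performs poorly can drive the value below that of a narrowly scoped object.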

Consider now two illustrative situations: learning object A declares a single context of use in its metadata record, while learning object B declares ten.

Learning object A represents a case of minimal reusability: it is intended for a single particular situation, and other usage contexts are simply not evaluated. Experts inspecting the learning object may eventually decide that it is also appropriate for undeclared contexts, but they do so at their own discretion. Software modules searching for learning objects to use in concrete situations, by contrast, are restricted to the contexts declared explicitly. Learning object B is potentially “ten times more reusable” than learning object A, but only if its degree of usability is high for every one of its ten specified contexts of use. Simply broadening the scope of the object in the metadata record is therefore not enough to make it more reusable; some form of usability evaluation is required for each of those contexts. In an extreme case, the overall reusability of learning object B may even fall below that of learning object A. This indicates that metadata specifications should be as precise and narrow as possible, so that only contexts in which the object is genuinely usable are declared in metadata records. In this article, a metadata record is “precise” when every context it declares is one in which the learning object can reasonably be expected to be usable.

A different problem is how to determine all the possible contexts of use for a learning object before its metadata is created. In many cases this will be a difficult task, but it does not affect the concepts of reusability and usability discussed here, since they are connected only to the contexts “explicitly declared” in the metadata record (which may be extended or restricted over the usage history of the learning object).
For example, a learning object containing introductory material on “Hoare triples” (a formal program verification method), created for the context of higher education in mathematics, can be extended to the context of a training module for senior programmers on “Design by Contract,” since the latter subject borrows some terminology from the former. If the learning object on Hoare triples is simple and clear enough to be useful in the second context, declaring that new context in the metadata increases the overall reusability of the learning object and makes it searchable and accessible to tools looking for material for the second context.
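The Hoare triples example can be sketched as a metadata record whose declared contexts carry estimated usability values, together with a search function that, like any automated tool, only matches declared contexts. The record structure, the `search` function, and the usability estimates are hypothetical; reusability is computed by reading expression (1) as the sum of the estimates multiplied by |C|.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class LearningObjectRecord:
    """Hypothetical metadata record: declared context -> estimated Usability(c_i)."""
    title: str
    contexts: Dict[str, float] = field(default_factory=dict)

    def declare_context(self, context: str, estimated_usability: float) -> None:
        if not -1.0 <= estimated_usability <= 1.0:
            raise ValueError("usability estimates must lie in [-1, 1]")
        self.contexts[context] = estimated_usability

    def reusability(self) -> float:
        # Expression (1), read as |C| times the sum of per-context usability.
        return len(self.contexts) * sum(self.contexts.values())


def search(repository: List[LearningObjectRecord], context: str) -> List[str]:
    """Automated tools can only match contexts that are explicitly declared."""
    return [record.title for record in repository if context in record.contexts]


DBC = "Design by Contract training for senior programmers"
hoare = LearningObjectRecord("Introduction to Hoare triples")
hoare.declare_context("higher education in mathematics", 1.0)
repo = [hoare]
print(search(repo, DBC), hoare.reusability())  # [] 1.0

# After judging the material simple and clear enough, extend its declared scope.
hoare.declare_context(DBC, 0.5)
print(search(repo, DBC), hoare.reusability())  # ['Introduction to Hoare triples'] 3.0
```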

Even if obtaining an expression like (1) is considered unrealistic or impractical, usability and reusability are clearly two intimately connected properties, and the metadata record therefore becomes the central element in the early stages of the learning object life cycle.

Some exceptions may be made to the evaluation procedure suggested by expression (1). Specifically, a number of prerequisites can be evaluated independently of any specific context. These prerequisites include both definitional characteristics and elementary usability aspects.

The specification of possible contexts through metadata bears some resemblance to the “design by contract” technique introduced by Bertrand Meyer in object-oriented development (Meyer, 1997). In this technique, the contract of an object specifies what the object expects of its clients and what clients can expect of it. The metadata record can then be considered the contract of the learning object, sanctioning the permissible usage contexts for automated tools. Just as software must be tested against the requirements implicit in its contract, a learning object must be evaluated against its possible contexts of use. Note that an educator may still use a learning object in a context that is not declared in the metadata record, but software tools cannot proceed in such imaginative ways; they are restricted to what the metadata provides.
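The contract analogy can be sketched as a check that an automated tool might run before selecting a learning object: the object’s requirements on learners act as preconditions, and its declared learning outcomes act as postconditions. The `Contract` and `Setting` types, the `fits` function, and the example values are hypothetical; real metadata would need to express these elements in a machine-understandable way.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Contract:
    """Hypothetical contract view of a learning object's metadata record."""
    requires: FrozenSet[str]  # what the object expects of its clients (learner prerequisites)
    ensures: FrozenSet[str]   # what clients can expect of it (learning outcomes)


@dataclass(frozen=True)
class Setting:
    """A concrete educational setting that a tool is trying to fill."""
    learners_know: FrozenSet[str]
    wanted_outcomes: FrozenSet[str]


def fits(contract: Contract, setting: Setting) -> bool:
    # Precondition: the learners already meet every requirement of the object.
    # Postcondition: the object promises every outcome the setting needs.
    # Anything outside the declared contract is simply not considered.
    return (contract.requires <= setting.learners_know
            and setting.wanted_outcomes <= contract.ensures)


hoare = Contract(frozenset({"propositional logic"}), frozenset({"read Hoare triples"}))
print(fits(hoare, Setting(frozenset({"propositional logic", "Java"}),
                          frozenset({"read Hoare triples"}))))  # True
print(fits(hoare, Setting(frozenset({"Java"}), frozenset({"read Hoare triples"}))))  # False
```

An educator could still judge the second setting acceptable, for instance by reviewing the missing prerequisite in class; the function cannot make that kind of judgment.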

An important research problem associated with the evaluation just described is whether existing metadata schemas are appropriate for specifying the domain of possible contexts of use for a given learning object. In other words, are current metadata specifications precise enough to determine the set $C$ for a given object? Although this controversial issue is beyond the scope of this article, we offer some initial reflections here to set the stage for future studies.

In our view, such an analysis must be approached from the viewpoint of software construction, i.e., a metadata record must be machine-understandable in a way that enables a piece of software (or agent) to decide whether the object fits a concrete educational setting. Consequently, education-oriented metadata elements should not be considered “optional” in metadata records. This raises the need for a concept of metadata record “completeness,” understood as a quality indicator of the extent to which the required machine-readable metadata is available for a learning object. For example, the “educational objective” LOM value, which can be placed in the “Purpose” sub-element of a “Classification” instance, provides room for defining expected learning outcomes, and the “prerequisite” value can be used in the same element to specify a given target learner profile. Nonetheless, these descriptors are optional, and without common, consensual, or standardized practices for specifying them, it would be difficult to characterize intended usage contexts unambiguously.
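One possible completeness indicator is the fraction of required education-oriented descriptors that are actually filled in. The sketch below checks the two LOM Classification purposes mentioned above, “educational objective” and “prerequisite”; treating exactly these two as required, and representing classifications as plain dictionaries, are assumptions made for illustration.

```python
from typing import Dict, List

# Assumption: the Classification purposes that an application profile would make mandatory.
REQUIRED_PURPOSES = ("educational objective", "prerequisite")


def completeness(classifications: List[Dict[str, str]]) -> float:
    """Share of required purposes that appear with a non-empty description."""
    described = {
        c.get("purpose") for c in classifications
        if c.get("description", "").strip()
    }
    return sum(p in described for p in REQUIRED_PURPOSES) / len(REQUIRED_PURPOSES)


record = [
    {"purpose": "educational objective", "description": "Explain inheritance in Java"},
    {"purpose": "prerequisite", "description": ""},  # present but empty
]
print(completeness(record))  # 0.5
```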

In addition, the basic element set given in IEEE LOM (IEEE LTSC, 2002) should be supplemented with richer element collections, such as the one described in the Educational Modeling Language (Koper, 2001) and now further specified as IMS Learning Design (IMS, 2003), to make explicit the educational processes and roles involved in educational contexts. An analysis of the space of possibilities and of the consensual nature of those schemas may be the subject of future studies, including their integration into logic-based frameworks that provide more complex mechanisms for stating assertions about learning objects (Sicilia and García, 2003).

Additionally, current metadata annotation practices attach one metadata record to each single content object. More sophisticated encapsulation techniques might provide more information about contexts of use. For example, an instructional designer may create three different learning components with the same overall cognitive objective, but with different levels of depth or narrative structure targeted at different levels of student expertise. These three alternative components may be considered a single learning object whose metadata record indicates which alternative is appropriate for each situation; in fact, this could be specified using level C of the IMS Learning Design specification (IMS, 2003). This process is obviously more resource-intensive than providing a single content for different users, but it may be an option when different target communities of users require substantially different narrative or expository characteristics, as typically occurs when considering learning in multiple environments such as academia and the workplace.
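The idea of a single learning object that bundles alternatives can be sketched as one shared objective, several components keyed by learner expertise, and a selection step driven by the metadata. The `Expertise` levels, file names, and `BundledLearningObject` class are hypothetical, and the sketch does not use or reproduce the IMS Learning Design vocabulary.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict


class Expertise(Enum):
    NOVICE = 1
    INTERMEDIATE = 2
    EXPERT = 3


@dataclass
class BundledLearningObject:
    """Hypothetical learning object packaging alternatives for one objective."""
    objective: str
    alternatives: Dict[Expertise, str]  # metadata: which component suits which learner

    def select(self, learner: Expertise) -> str:
        # The metadata, not the consuming tool, decides which alternative applies.
        return self.alternatives[learner]


java_inheritance = BundledLearningObject(
    objective="explain inheritance in Java",
    alternatives={
        Expertise.NOVICE: "inheritance-guided-narrative.html",
        Expertise.INTERMEDIATE: "inheritance-worked-examples.html",
        Expertise.EXPERT: "inheritance-concise-reference.html",
    },
)
print(java_inheritance.select(Expertise.NOVICE))  # inheritance-guided-narrative.html
```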

Several evaluation methods for learning objects have been proposed, as summarized by Williams (2000). However, many of these approaches are intended for evaluation in a given context of use, whereas for reusable learning objects those contexts may not be precisely determined a priori, and it may be difficult to find users and other stakeholders at the design stage. We therefore propose a simpler, more straightforward evaluation alternative, inspired by the philosophy of “discount techniques” that has emerged in recent years in human-computer interaction evaluation (Nielsen, 1989). According to the discount philosophy, simpler evaluation methods stand a much better chance of actually being used in practical design situations, and thus provide a practical, cost-effective alternative to more comprehensive and expensive approaches. For example, Nielsen’s “simplified” thinking aloud has shown effectiveness similar to that of the full thinking aloud protocol while lowering costs significantly (Nielsen, 1992).

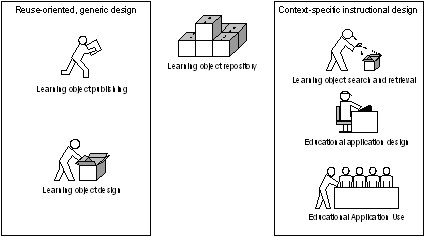

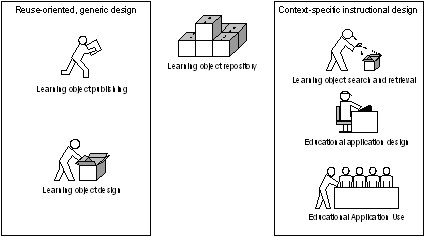

The first step in sketching an evaluation method for learning objects is to situate design in reuse-oriented situations. Figure 1 shows the overall configuration of such a situation.

Figure 1. Learning object and educational application design as two differentiated processes

The left rectangle in Figure 1 shows learning object design, and its subsequent publication in repositories, as a process independent of the design of the concrete educational applications that use them (depicted in the right rectangle). Even if the two forms of design are carried out simultaneously, learning object design can be considered a separate process (or sub-process), since it focuses on reusability rather than on a concrete setting, and it requires explicit guidelines that are not a concern when designing an educational application not intended for reuse. Thus, here we are concerned only with evaluation at the learning object design stage; actual educational applications may be evaluated with existing, commonly used techniques.

Building on the concepts described above, we have sketched the following four-step evaluation procedure:

Pre-Evaluation aims to decide whether a given digital element can be considered a learning object. This decision can be informed by inspecting the digital format of the entity (i.e., checking standards compliance and other essential granularity and structure requirements, such as those mentioned above). A useful reference for this check is Hamel and Ryan-Jones’s (2002) article on principles of learning object design.

Generic Evaluation is oriented towards checking any usability aspect that is independent of the context. This may include stylistic aspects of the writing, to the extent that they are context independent (for example, clarity and correctness of expression can be checked irrespective of the possible uses of the learning object), as well as other simple checks such as those mentioned above.

Once the object has passed the two previous filters, Pre-Evaluation and Generic Evaluation, its prospective contexts of use may be analyzed. This Prospective Context Evaluation step may reveal the need to improve or complete the object’s existing metadata record to arrive at a more precise definition of its possible contexts of use. We may then carry out the usability evaluation for each of the identified prospective contexts. Since these possible contexts are not yet actual ones, usability evaluation methods must be carefully selected to achieve a trade-off between cost and reliability. Our first proposal for that selection is to use one or both of the following techniques:

Once steps one to three of the proposed method have been carried out, an initial reusability index may be obtained for the object, and several iterations may follow if serious flaws are found, resulting in narrowing the scope of the learning object or, alternatively, enhancing it to better fit some contexts. In the final step, Continuous Evaluation Data Gathering, evaluation continues over time: actual uses of the learning object in concrete applications produce a historical record of evaluation data. In this way, the practice of reuse feeds back into the evaluation of the object, ideally yielding more precise estimates of reusability and usability. For this last phase to become a reality, however, learning object repositories should support forms of feedback, and standardization organizations should provide common evaluation data formats and transfer mechanisms. In addition, policies and procedures that support and recognize a collaborative culture in the design of learning contents, such as those described by McNaught et al. (2003), play a crucial role in this kind of collaborative assessment practice.
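The four steps can be summarized in a small Python sketch. Steps one and two are reduced to the boolean outcomes of the inspections described above, step three supplies the initial per-context estimates and reusability index, and step four refines each estimate with data from actual uses. Averaging the prior estimate with observed scores is only one simple refinement rule, chosen here as an assumption; the class and function names and the context labels are hypothetical, and the index reads expression (1) as the sum of usability values multiplied by |C|.

```python
from dataclasses import dataclass, field
from statistics import fmean
from typing import Dict, List, Optional


@dataclass
class ObjectEvaluation:
    """Hypothetical evaluation state of one learning object."""
    estimates: Dict[str, float]  # step 3: pre-use Usability(c_i) per declared context
    history: Dict[str, List[float]] = field(default_factory=dict)  # step 4 data

    def record_use(self, context: str, observed: float) -> None:
        # Feedback is only meaningful for contexts declared in the metadata.
        if context not in self.estimates:
            raise KeyError(f"{context!r} is not a declared context")
        if not -1.0 <= observed <= 1.0:
            raise ValueError("observed usability must lie in [-1, 1]")
        self.history.setdefault(context, []).append(observed)

    def usability(self, context: str) -> float:
        # Assumed rule: treat the prior estimate as one observation among the rest.
        return fmean([self.estimates[context], *self.history.get(context, [])])

    def reusability_index(self) -> float:
        return len(self.estimates) * sum(self.usability(c) for c in self.estimates)


def evaluate(is_learning_object: bool, passes_generic_checks: bool,
             context_estimates: Dict[str, float]) -> Optional[ObjectEvaluation]:
    if not is_learning_object:       # step 1: Pre-Evaluation
        return None
    if not passes_generic_checks:    # step 2: Generic Evaluation
        return None
    return ObjectEvaluation(dict(context_estimates))  # step 3: Prospective Context Evaluation


ev = evaluate(True, True, {"first programming course": 0.5, "introductory workshop": 1.0})
print(ev.reusability_index())  # 3.0
ev.record_use("first programming course", -0.5)  # step 4: data from an actual use
print(ev.reusability_index())  # 2.0
```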

Once a learning object has been defined, measuring its “goodness” must take into account its essential properties. However, if we want learning objects to become the central component of a more efficient educational content industry, definitions of learning objects must place reusability at the center as their key property. Therefore, assessment techniques for learning objects must incorporate the concept of reusability into their evaluation criteria.

In this article, we have analyzed the relationship between reusability and context-specific usability in learning objects, which gives rise to a novel approach to formulating evaluation criteria for learning objects. In addition, we have sketched a tentative evaluation procedure that borrows from the field of human-computer interaction as well as from our own experience.

Our ultimate aim has been to encourage the development of novel instruments for measuring the quality of learning objects that go beyond the expert rating-based approaches used so far. Two main research directions should follow the initial inquiry described in this article. First, the appropriateness of existing metadata schemas and metadata annotation practices must be considered in light of the assessment and automated selection of learning objects. Second, the measurement of learning object reusability must be subjected to more ambitious procedures that go beyond mere compliance with specifications regarding the format and structure of contents, and that dig deeper into the difficult issues of compatibility regarding learning objectives.

Advanced Distributed Learning (ADL) Initiative. (2001a). Sharable Content Object Reference Model (SCORM). The SCORM Overview, October 1, 2001. Retrieved June 17, 2003 from: http://www.adlnet.org/Scrom/

Advanced Distributed Learning (ADL) Initiative. (2001b). Sharable Content Object Reference Model (SCORM). The SCORM Content Aggregation Model, October 1, 2001. Retrieved June 17, 2003 from: http://www.adlnet.org/Scrom/

Advanced Distributed Learning (ADL) Initiative. (2001c). Sharable Content Object Reference Model (SCORM). The SCORM Run-Time Environment, October 1, 2001. Retrieved June 17, 2003 from: http://www.adlnet.org/Scrom/

Anido, L. E., Fernández, M. J., Caeiro, M., Santos, J. M., Rodríguez, J. S., and Llamas, M. (2002). Educational metadata and brokerage for learning resources. Computers & Education, 38(4), 351 – 374.

Bohl, O., Schellhase, J., Sengler, R., and Winand, U. (2002). The Sharable Content Object Reference Model (SCORM) – A Critical Review. In Proceedings of the International Conference on Computers in Education (ICCE02), Auckland, New Zealand, 950 – 951.

Boyle, T. (2003). Design principles for authoring dynamic, reusable learning objects. Australian Journal of Educational Technology, 19(1), 46 – 58.

Feldstein, M. (2002). What Is “Usable” e-Learning? ACM eLearn Magazine. Retrieved October 11, 2003 from: http://www.elearnmag.org/

Hamel, C. J., and Ryan-Jones, D. (2002). Designing Instruction with Learning Objects. International Journal of Educational Technology, 3(1). Retrieved October 11, 2003 from: http://www.ao.uiuc.edu/ijet/v3n1/hamel/

Hanley, G. L., and Zweier, L. (2001). Usability in design and assessment: taste testing Merlot. In Proceedings of the 2001 EDUCAUSE Conference.

IEEE Learning Technology Standards Committee (2002). Learning Object Metadata (LOM), Final Draft Standard, IEEE 1484.12.1-2002.

IMS Global Learning Consortium Inc. (2003). IMS Learning Design Information Model Version 1.0 Final Specification. 20 January 2003.

Koper, R. (2001). Modelling units of study from a pedagogical perspective. The pedagogical meta-model behind EML. Retrieved June 17, 2003 from: http://eml.ou.nl/introduction/articles.htm

Longmire, W. (2000). A primer on learning objects. ASTD Learning Circuits, March 2000. Retrieved June 17, 2003 from: http://www.learningcircuits.org/mar2000/primer.html

Martinez, M. (2001). Successful Learning – Using Learning Orientations to Mass Customize Learning. International Journal of Educational Technology, 2(2). Retrieved June 17, 2003 from: http://www.outreach.uiuc.edu/ijet/v2n2/martinez

McNaught, C., Burd, A., Whithear, K., Prescott, J., and Browning, G. (2003). It takes more than metadata and stories of success: Understanding barriers to reuse of computer facilitated learning resources. Australian Journal of Educational Technology, 19(1), 72 – 86.

Meyer, B. (1997). Object-Oriented Software Construction (2nd ed.). Prentice Hall.

Nielsen, J. (1989). Usability engineering at a discount. In G. Salvendy and M. J. Smith (Eds.) Designing and Using Human-Computer Interfaces and Knowledge Based Systems (pp. 394–401). Amsterdam: Elsevier Science Publishers.

Nielsen, J. (1992). Evaluating the thinking aloud technique for use by computer scientists. In H.R. Hartson and D. Hix (Eds.) Advances in Human-Computer Interaction 3, 69 – 82.

Nielsen, J. (1994). Chapter 2: Heuristic Evaluation. In J. Nielsen and R. Mack (Eds.) Usability Inspection Methods (pp. 25–62). New York: John Wiley and Sons.

Polsani, P. R. (2003). Use and Abuse of Reusable Learning Objects. Journal of Digital Information, 3(4). Retrieved October 11, 2003 from: http://jodi.ecs.soton.ac.uk/Articles/v03/i04/Polsani/

Richards, G., McGreal, R., and Friesen, N. (2002). Learning Object Repository Technologies for TeleLearning: The Evolution of POOL and CanCore. Proceedings of the 2002 Informing Science + IT Education Conference, 1333–1341.

Sicilia, M. A., and García, E. (2003). On the integration of IEEE-LOM metadata instances and ontologies. Special Issue on Implementation(s) of learning objects metadata standard, IEEE LTTF Learning Technology Newsletter, 5(1).

Sicilia, M. A., García, E., and Aedo, I. (2003). Sobre la Usabilidad de los Objetos Didácticos [On the usability of learning objects]. In M. Pérez-Cota (Ed.) Proceedings of the Fourth Conference of the Spanish Association of Human-Computer Interaction (iNTERACCION 2003).

Sosteric, M., and Hesemeier, S. (2002). When is a Learning Object not an Object: A first step towards a theory of learning objects. International Review of Research in Open and Distance Learning Journal, 3(2). Retrieved June 17, 2003 from: http://www.irrodl.org/content/v3.2/soc-hes.html

Smulders, D. (2001). Web Course Usability. ASTD Learning Circuits, August 2001. Retrieved June 17, 2003 from: http://www.learningcircuits.com/2001/aug2001/elearn.html

Wiley, D. A. (2001). The Instructional Use of Learning Objects. Association for Educational Communications and Technology, Bloomington.

Wiley, D. A., Gibbons, A., and Recker, M. M. (2000). A reformulation of learning object granularity. Retrieved July 2003 from: http://reusability.org/granularity.pdf

Williams, D. D. (2000). Evaluation of learning objects and instruction using learning objects. In D. A. Wiley (Ed.) The Instructional Use of Learning Objects: Online Version. Retrieved June 17, 2003 from: http://reusability.org/read/chapters/williams.doc