Yu-Hui Ching and Yu-Chang Hsu

Boise State University, USA

There has been limited research examining the pedagogical benefits of peer feedback for facilitating project-based learning in an online environment. Using a mixed method approach, this paper examines graduate students’ participation and perceptions of peer feedback activity that supports project-based learning in an online instructional design course. Our findings indicate that peer feedback can be implemented in an online learning environment to effectively support project-based learning. Students actively participated in the peer feedback activity and responded positively about how the peer feedback activity facilitated their project-based learning experiences. The results of content analysis exploring the peer feedback reveal that learners were mostly supportive of peers’ work and they frequently asked questions to help advance their peers’ thinking. The implications and challenges of implementing peer feedback activity in an online learning environment are discussed.

Keywords: Peer feedback; online learning; project-based learning; online discussion

The increasing prevalence of online programs and courses in higher education leads to burgeoning research on the effective instructional methods and strategies to promote and facilitate students’ learning in this context. Among various instructional methods, the constructivist methods exemplify meaningful learning through hands-on problem solving activities that promote active knowledge construction (Jonassen, 1998). Project-based learning (PjBL) is one of the methods for creating meaningful learning experiences. PjBL uses a driving problem to trigger inquiry activities in which students ask questions, search for information, brainstorm, design, and test alternative solutions (Blumenfeld, et al., 1991). During this inquiry process, learners create a series of culminating artifacts by applying what they learned previously or what they have searched and acquired along the way. The created artifacts are external representations of students’ solutions to the problem and can be shared and critiqued for further improvement. As projects are often relevant to the individual learner’s context, PjBL promotes student-centered learning where students are offered the opportunity to assume more responsibility and independence of learning in a personally meaningful way.

Project-based learning has been studied in adult online learning settings for its effectiveness. Adult learners are often goal-directed and motivated to apply learning in solving real-life problems such as those in their professional contexts (Knowles, Holton, & Swanson, 2012, p. 66). PjBL that engages learners in solving real-life problems can be a particularly motivating and useful instructional method because it encourages and enables knowledge and skill application for adult learners. Koh, Herring, and Hew (2010) explored graduate students’ levels of knowledge construction during asynchronous online discussions with respect to engagement in project-based learning. They found that higher-level knowledge construction activities were more likely to occur during project-based learning than during non-project-based learning. Based on their study, Koh et al. (2010) proposed four guidelines for implementing online PjBL: 1) assigning students a design problem; 2) structuring project milestones to facilitate knowledge construction; 3) having students articulate their learning through the development of learning artifacts; and 4) having the instructor facilitate activities toward higher-level learning. This study adopted these guidelines in an attempt to design an effective online PjBL environment that inspires higher-order knowledge construction.

Learning in an online PjBL environment is not without challenges. In such an environment, learners not only acquire new content knowledge, but also need to apply newly learned knowledge to solve complex problems. The application nature of learning is likely to impose heavy cognitive loads on learners, especially novices in the field. Purposefully structured tasks and learning support are essential to scaffold students’ learning in an online PjBL environment (Koh et al., 2010). Learning support can be presented in several formats, and feedback is one of them. Feedback refers to “information communicated to the learner that is intended to modify his or her thinking or behavior for the purpose of improving learning” (Shute, 2008, p. 154). Formative feedback, one type of feedback, is presented in a nonevaluative, supportive, and timely manner during the learning process for the purpose of improving learning (Shute, 2008) and can be given by instructors or peers (Phielix, Prins, Kirschner, Erkens, & Jaspers, 2011). Feedback has been suggested as one of the crucial instructional components for improving knowledge and skill acquisition, and for motivating learning (Shute, 2008). The lack of feedback can impact students’ learning adversely and it has been argued as a reason for students’ withdrawing from online courses (Ertmer, et al., 2007). Some research findings suggested that feedback may be more important in online learning environments than in face-to-face learning environments (Lynch, 2002; Palloff & Pratt, 2001), due to the lack of regular face-to-face interaction in the former. In addition to the affective impact, research suggested that a more explicit feedback process is needed in online learning in order to achieve the levels of student learning experiences and the depth of learning similar to those in traditional learning environments (Rovai, Ponton, Derrick, & Davis, 2006).

Given the important role feedback plays in learning, educators need to ensure the frequency and quality of this instructional component when designing and teaching online courses. However, providing frequent and extensive feedback to every student can be an impractical task for online instructors (Liu & Carless, 2006). Feedback provided by equal-status learners, called peer feedback, can be a solution to meet students’ need for frequent feedback to help them improve their learning process (Gielen, Peeters, Dochy, Onghena, & Struyven, 2010). Peer feedback refers to “a communication process through which learners enter into dialogues related to performance and standards” (Liu & Carless, 2006, p. 280), and can be considered a form of collaborative learning (Gielen, et al., 2010). Peer feedback is mostly formative in nature with no grades involved. It provides comments on strengths, weaknesses, and/or tips for improvement (Falchikov, 1996), with the purpose of improving learning and performance. When students mutually provide feedback, they participate in collaborative learning where they construct their knowledge through social exchange (Gunawardena, et al., 1997) during the process of providing and receiving feedback.

The theoretical foundation of peer feedback is the social constructivist view of learning. This view emphasizes learning as a social activity and asserts that learners’ interactions with people in the environment stimulate their cognitive growth (Schunk, 2008). During the peer feedback process, learners present their ideas to peers, receive and provide constructive feedback, and revise and advance their thinking for solving complex problems. Through this interactive process, learners collaboratively construct knowledge when they clarify their own thinking and gain multiple perspectives on a given issue, which enables the creation of more comprehensive and deeper understanding toward learning.

Benefits have been found for both receiving and providing peer feedback. When receiving feedback, learners invite peers to contribute experiences and perspectives to enrich their own learning process (Ertmer, et al., 2007). When providing feedback, learners actively engage in articulating their evolving understanding of the subject matter (Liu & Carless, 2006). They also apply the learned knowledge and skills when assessing others’ work. This process involves learners in thinking about quality, standards, and criteria that they may use to evaluate others’ work, which helps them become critical thinkers and reflective learners (Liu & Carless, 2006). Li, Liu, and Steckelberg (2010) investigated the impact of peer assessment in an undergraduate technology application course. They found a positive and significant relationship between the quality of peer feedback that students provided for others and the quality of the students’ own final products, controlling for the quality of the initial projects. However, they did not find any relationships between receiving feedback and the quality of final products. They concluded that active engagement in reviewing peers’ projects might facilitate student learning performance. Cho and Cho (2011) studied how undergraduate peer reviewers learned from giving comments. They found that students improved their writing more by giving comments than by receiving comments because giving comments involves evaluative and reflective activities in which students identified good writing, problematic areas in the writing, and possible ways to solve the problem.

Nevertheless, peer feedback activity may impose cognitive or affective challenges on learners. Because students are not domain experts, they may not possess the skills needed to provide useful and meaningful feedback (Palloff & Pratt, 1999). Studies showed that students did not learn much from providing low quality comments (Li et al., 2010). Students who are not used to this activity can also feel anxious about giving feedback (Ertmer, et al., 2007), as they do not want to appear to be criticizing peers’ work. In addition, peer feedback may not be perceived as valid by the receivers, as peer reviewers are usually not regarded as a “knowledge authority” (Gielen, et al., 2010); as a result, receivers may not take the feedback seriously.

In the context of project-based learning, peer feedback has the potential to facilitate learning processes in different ways. Reviewing peers’ project drafts may help learners reflect on their own work and improve their own project performance. However, there has been limited research examining the pedagogical benefits of peer feedback for facilitating project-based learning in an entirely online environment. Lu and Law (2011) studied online peer assessment activities to support high school students’ project-based learning and examined the effects of different types of peer assessment on student learning. These high school students were enrolled in face-to-face public high schools while they participated in online peer assessment activities in the study. Lu and Law found that the feedback consisting of identified problems and suggestions was a significant predictor of the feedback providers’ performances. They also found that positive affective feedback was related to feedback receivers’ performances. While Lu and Law’s study examined online peer assessment in a face-to-face learning context, our study aimed to examine how peer feedback supports graduate students’ project-based learning in an entirely online environment.

In this paper, we investigated whether a peer feedback strategy can facilitate project-based learning in an entirely online learning environment. We explored graduate students’ participation in peer feedback activity in an online learning environment where students needed to solve complex instructional design problems. We also examined their perceptions of peer feedback in supporting project-based learning, and the quality of the provided peer feedback. Specifically, we asked the following research questions:

This study was conducted in the context of an online master’s level course in a public university in northwestern USA. The subject matter of this course was instructional design. Moodle learning management system was the online learning platform used in this course. Twenty-one students were enrolled in this course and these students were geographically dispersed, with most of them living in different states in the United States. Many of the students were K-12 school teachers, while others were college instructors, technology coordinators, technical writers, and instructional designers in corporate settings.

This graduate course was project-based, and required students to work on a semester-long individual instructional design project accounting for 40% of their course grade. In this project, students were responsible for conceptualizing, planning, designing, and developing an instructional unit on a topic of their choice with the help of peers and the instructor. The project required students to apply knowledge and skills of instructional design when conceptualizing, brainstorming, designing, and exploring alternative solutions for their design problems, which is the core characteristic of project-based learning as defined by Blumenfeld et al. (1991).

The complex instructional design project was structured into five project milestones and each milestone was supported by a task-oriented discussion where peer feedback activity took place. Students submitted their project artifacts for peer feedback at each milestone. These milestones include: 1) proposing a plan for needs assessment; 2) conducting a task analysis to draw a task flowchart and identify a list of learning objectives; 3) creating a plan to assess learning outcomes; 4) creating instructional strategies; and 5) developing a plan for different types of formative evaluation. Each milestone lasted for two weeks. For each milestone, students read the textbook on the specific instructional design process, applied the knowledge to create a draft of each milestone task, and posted the draft to the designated discussion forum for peer feedback. Each project milestone task had its own dedicated discussion forum. In total, students participated in five peer feedback discussions, one for each milestone task, throughout the semester.

At the beginning of the semester, twenty-one students were assigned to three heterogeneous groups of six to eight learners by the instructor, based on their self-reported skills and experiences of instructional design. Within each milestone cycle, three deadlines were set for students: 1) post their artifacts within their group; 2) provide feedback to three peers within their group; and 3) address any questions, suggestions, or comments in the feedback they received. This design aimed to promote participation and foster reflection on whether students understand, accept, and agree on the feedback. At the end of the semester, students were encouraged to fill out an anonymous course evaluation survey on a voluntary basis to express their perceptions of the course activities.

Human subjects approval was obtained for analyzing students’ participation data, their course work, and the anonymous course evaluation survey data. This study applied a mixed-method design that collected both quantitative and qualitative data to answer our research questions. To answer Research Question 1, we examined students’ postings on the five online discussion forums and tallied the frequency of discussion postings to examine student participation in the peer feedback activity. To answer Research Question 2, we collected qualitative data of students’ perceptions of the PjBL and peer feedback activity through open-ended questions as part of the anonymous course evaluation survey at the end of the semester. The open-ended questions on the course evaluation survey read:

Please provide feedback on the following assignments. Indicate whether you think it helps you learn the subject or not. If it helps, please explain how it helps you learn. If you do not find it helpful, what changes would you suggest to make the assignment meaningful to your learning experience.

The students were specifically asked to comment on “Module Discussions” where they provided and received feedback from peers, and on “Instructional Design Project” where they worked on creating an instructional design document and developing instructional materials throughout the semester. Eighteen out of the 21 students provided qualitative feedback as part of the evaluation. We did not ask the students for permission to use their comments in the survey as quotes in our paper due to the anonymous nature of the survey data. We used thematic analysis to examine the responses to these open-ended questions for emerging themes.

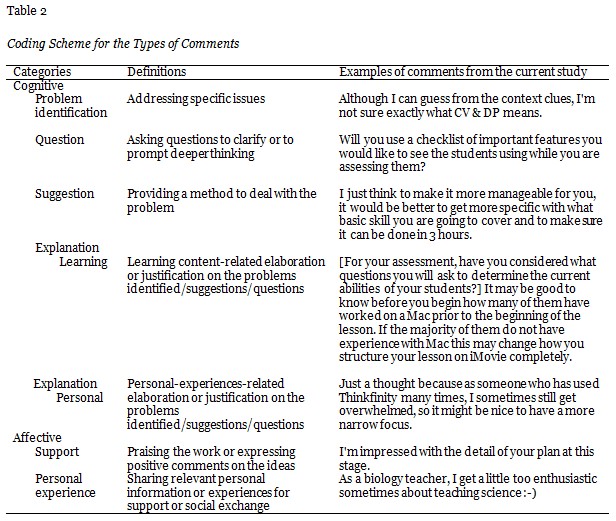

To answer Research Question 3, we downloaded the discussion messages posted on the Moodle discussion forums and analyzed the messages to further explore the quality of the provided feedback. Specifically, we conducted a content analysis on the peer feedback entries posted in two forums, Discussion 1 on “Needs Assessment Plan” and Discussion 2 on “Task Analysis.” Due to the large amount of data, we purposefully selected and analyzed these two discussions because of the differing structure of the feedback activity. In Discussion 1, students did not receive specific guidance and directions on the aspects to comment on; they were simply asked to look over peers’ needs assessment plans and give constructive feedback regarding the ideas. Hoping to improve peer feedback quality, the instructor provided specific questions to guide student feedback efforts in Discussion 2 and the three following discussions. For example, one guiding question reads, “Does the stated learning goal appear clear, concise, and show an obvious outcome? How can the goal be improved?” The feedback entries were coded based on the coding scheme adapted from Lu and Law (2011) (see the next section for the coding scheme table). After examining the peer feedback in this study against the adapted coding scheme, we added some categories and sub-categories to capture the complexity of the peer feedback data. We expect the modified coding scheme to represent the data more faithfully and thereby help generate meaningful pedagogical implications.

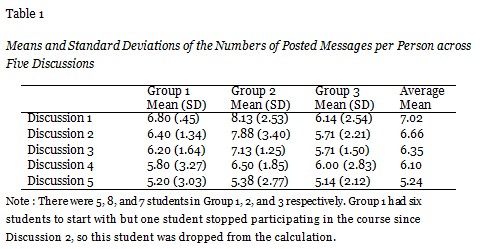

The descriptive data showing the detailed breakdown of the frequency of messages across the five discussions by group are presented in Table 1. The peer feedback activity required students to post one original post sharing the design artifacts they created, respond to three original peer postings, and answer all the questions and comments from peers in one or several posts. As such, five messages per discussion can be considered the minimum posting requirement. The data show that students actively participated in the peer feedback activity and met the required five postings throughout the discussions. On average, members in Group 1 posted 6 messages, those in Group 2 posted about 7 messages, and those in Group 3 posted about 5.7 messages throughout the five discussions. In general, the frequency of posted messages decreased in the later discussions across the groups.
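The tallying described above can be illustrated with a minimal sketch. It assumes a hypothetical export of forum messages as (discussion number, student id) pairs; the data, the student ids, and the function names are illustrative only and are not the study's data or procedure. The minimum of five messages follows the requirement stated in the text: one original post, three responses to peers, and at least one reply to received feedback.

```python
from collections import Counter

# Minimum posts per student per discussion, following the text:
# 1 original post + 3 responses to peers + at least 1 reply to feedback.
MIN_POSTS = 1 + 3 + 1

# Hypothetical forum export (NOT the study's data): one
# (discussion number, student id) pair per posted message.
messages = [
    (1, "s01"), (1, "s01"), (1, "s01"), (1, "s01"), (1, "s01"), (1, "s01"),
    (1, "s02"), (1, "s02"), (1, "s02"), (1, "s02"),
]


def tally(messages):
    """Count messages per (discussion, student) pair."""
    return Counter(messages)


def below_minimum(counts, minimum=MIN_POSTS):
    """Return the (discussion, student) pairs with fewer posts than the minimum."""
    return sorted(key for key, n in counts.items() if n < minimum)


def mean_posts(counts, discussion):
    """Average number of messages per posting student in one discussion."""
    per_student = [n for (d, _), n in counts.items() if d == discussion]
    return sum(per_student) / len(per_student)


counts = tally(messages)
print(below_minimum(counts))
print(mean_posts(counts, 1))
```

Note that a student who posted nothing in a discussion would not appear in such an export at all, so a complete check would also need the class roster.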

We analyzed learners’ responses to the open-ended questions to help understand students’ perceptions of their experiences in project-based learning and the peer-feedback activity. Students generally enjoyed the online project-based learning activity where they were able to work on an interesting topic of their choice and to apply their newly acquired design knowledge and skills. Some students considered the hands-on activity of creating an actual instructional design project the best component of the course. Other students specifically commented on the effective design of the project-based learning activity in which the project was organized into five milestones where learners were able to undertake a complex instructional design project one step at a time. The project would have been overwhelming and created a high level of anxiety if it had not been broken down into several milestones or had not provided opportunities to receive peer feedback during the process. Two comments from the students read:

Overall I enjoyed this process. It was a lot of work, but I liked how it was systematically designed to be put together throughout the semester and it was nice to have the same three peers helping you out in the discussion group.

I liked the module discussions, especially since it forces you to work on portions of the project and get feedback. I think they are GREAT!

Overall, students were mostly positive about the peer feedback activity as revealed in the following comments:

I liked how the discussions served as a rough draft to the overall ID project. Peer feedback is always good to get. I was pleased with the quality of the feedback.

I thought it was very helpful and enlightening to give and receive feedback from peers.

I thought this was helpful especially getting feedback from my peers because it made my project better.

The most valuable part for me was seeing what other students were doing.

A few students perceived the peer feedback activity more negatively, mostly because they did not get useful feedback even though they had spent a great deal of time providing quality feedback to their peers. Students commented:

As far as commenting, two students generally gave me useful feedback, though most others did not.

I perhaps worked too hard at giving substantial feedback during the first few modules even though I got much less on my project. It was very time consuming for me.

The only downside was the discussion peer reviews started lagging near the end. Keeping students focused on the importance of giving quality feedback will really help.

These comments pointed out an important issue in the peer feedback activity. Although guiding questions were in place for most of the discussion activities to help students construct peer feedback, some students did not or could not provide helpful feedback. Providing constructive feedback requires critical thinking skills for evaluating the artifacts based on standards or criteria, identifying gaps or discrepancies in the artifacts, or offering different perspectives to consider alternative solutions. Students in this class varied widely in terms of their educational or instructional design related experiences. While the majority of the students were able to provide alternative perspectives, not everyone was capable of doing so due to the lack of experience to draw from. Thus, advanced intervention is needed to enhance student performance on providing quality and constructive feedback. Admittedly, providing quality feedback also takes a considerable amount of time. Poor feedback quality may suggest that learners lacked motivation in helping peers out and that they failed to see the benefits of engaging in the evaluative and reflective activities of providing feedback.

Across the five discussions, strict deadlines for feedback postings were established and reinforced through assessment criteria on the timeliness of postings. This design was intended to ensure sufficient time for students to provide feedback and respond to peer questions. However, some students did not like the multiple deadlines for the discussions. They commented:

I found it difficult to have so many due dates as a large part of the class is driven by discussion boards. I like having one due date as in prior semester classes.

It would be nice if there were not many steps to assignments that are due on various dates. This was a little confusing as far as what was due on each date.

One of the benefits of online learning is its flexibility (Ally, 2004). Although this was not a self-paced online course, some students still expected to have maximal freedom in terms of controlling the pace of their learning and completing their assignments. They may have felt that they lost the flexibility of online learning when multiple discussion participation deadlines were imposed. However, the instructor’s past online teaching experiences showed that students might wait until the last minute to participate if the discussion activities were not structured around deadlines, which may lower the quality of the feedback provided and leave too little time for responding to or reflecting on the feedback received. As such, there is a fine balance between imposing more structured discussion to maximize learning and diminishing the flexibility of online learning.

In total, 60 entries of peer feedback in Discussion 1 and 63 entries in Discussion 2 were coded using the coding scheme presented in Table 2. This coding scheme was adapted based on the scheme in Lu and Law (2011). Each peer feedback entry was broken into idea units for coding. A substantial entry of peer feedback usually contains several idea units that can be coded into different categories of comments depending on the coding scheme.

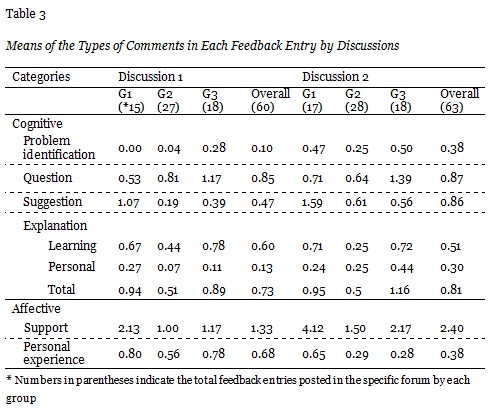

Table 3 presents the results of the content analysis. As it is not our intention to compare groups, we discuss the findings using aggregated data from the three groups as shown in the column “Overall.” Based on the descriptive statistics, among six types of coded feedback categories, “Support” was the most common type of comment found in both peer feedback discussion activities. In Discussion 1, on average, one entry of peer feedback contained 1.33 “Support” comments, where each comment corresponds to one idea unit; in Discussion 2, one entry of peer feedback contained 2.4 “Support” comments. “Question” was the second most common type of comment in both discussions (M = .85 in Discussion 1; M = .87 in Discussion 2). “Problem Identification” was the least common type of feedback in Discussion 1 (M = .10) but its frequency increased in Discussion 2 (M = .38).
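The per-entry means reported above are of the form: total number of idea units coded into a category, divided by the number of feedback entries. The following is a minimal sketch of that computation under stated assumptions. The category labels are those named in the text; the coded entries are hypothetical toy data rather than the study's data, and the function name is illustrative.

```python
from collections import Counter

# Category labels as named in the text; the full coding scheme (Table 2)
# is not reproduced here.
CATEGORIES = [
    "Problem Identification",
    "Suggestion",
    "Explanation-Learning",
    "Explanation-Personal",
    "Support",
    "Question",
    "Personal experience",
]

# Hypothetical coded entries (NOT the study's data): each feedback entry
# is a list of category labels, one label per idea unit.
entries = [
    ["Support", "Support", "Question"],
    ["Support", "Suggestion"],
    ["Question", "Problem Identification", "Support"],
    ["Support"],
]


def mean_per_entry(entries):
    """Mean number of idea units per feedback entry, for each category."""
    totals = Counter(label for entry in entries for label in entry)
    n_entries = len(entries)
    return {cat: totals[cat] / n_entries for cat in CATEGORIES}


means = mean_per_entry(entries)
for category, mean in means.items():
    print(f"{category}: M = {mean:.2f}")
```

Read this way, a mean above 1 (as reported for “Support”) indicates that a typical entry contained more than one idea unit of that type.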

When we examined the postings across the two discussions, the descriptive data showed that four types of comments, including “Problem Identification”, “Suggestion”, “Explanation-Personal”, and “Support”, increased from Discussion 1 to Discussion 2. However, “Explanation-Learning” and “Personal experience” types of comments decreased. These different patterns may be associated with the structure of the feedback activity; that is, guiding questions were provided in Discussion 2 but not in Discussion 1. For example, in Discussion 1, students provided more personal experiences in their feedback, which may be explained by the fact that students are more likely to relate to their own prior experiences than to the newly learned materials when they are not guided to do so. When students were asked to “assess” how well peer work addressed specific content knowledge and to “provide suggestions” for improvement in Discussion 2, they generated more comments on “Problem Identification” and “Suggestion.” As found in previous research by Lu and Law (2011), the ability to identify problems and give suggestions was a significant predictor of the feedback providers’ learning performance. Our findings revealed the potential of guiding questions in eliciting feedback types that may benefit student performance. Although the descriptive statistics in this exploratory study seem to suggest that guiding questions make a difference, future studies with appropriate and deliberate research designs are needed to examine whether there is a causal relationship between the use of guiding questions and the generation of specific types of peer comments.

According to self-explanation research, explanations can enhance deeper learning when they go beyond given information (Chi et al., 1989). As such, it is likely to benefit learners if they can generate more explanations in their feedback (Gielen, et al., 2010). The learning potential of explanations led us to further code explanation types of comments into two categories, learning and personal, in the hope of understanding what students explained in the peer feedback activity. Overall, learners generated more learning explanations than personal explanations in their feedback. While the purpose of the explanation is usually to support and strengthen one’s argument, it is not clear whether feedback receivers perceive either learning or personal explanations as the more persuasive justification for improving their work. However, we suspect that learning explanations have greater learning benefits for feedback providers than explanations based on personal experiences or opinions because when generating learning explanations, feedback providers are likely to apply newly learned knowledge and skills to justify or elaborate on their ideas in novel contexts (i.e., peers’ projects). Future studies may further explore the roles of these two types of explanations in the peer feedback processes in terms of the perceived convincingness for feedback receivers and the learning benefits for feedback providers.

In general, the content analysis of the peer feedback showed that students were mostly very supportive of their peers’ work and rarely criticized peers’ work harshly. In the cases when students tried to identify problems or pinpoint areas for improvement, they tended to use disclaimers or gentle tones. This interaction pattern aligns with the findings in the study by Yu and Wu (2011). Yu and Wu examined identity revelation modes in an online peer-assessment learning environment and found that no severe level of negative comments or irrational emotions was presented in the group that used real names. However, negative comments and irrational emotions were found, although rarely, in the anonymity and nickname groups. They suggested that using real names benefited interpersonal relationships between assessors and those being assessed. In this study, the peer feedback activity was administered in online forums where students knew the identities of feedback providers and receivers. Students’ mostly positive feedback and use of disclaimers or gentle tones may be the result of avoiding risking the interpersonal relationships among group members. In addition, the current peer feedback activity emphasized the formative feedback where learners collaboratively helped each other improve their plans and instructional design project ideas. It is also possible that learners were aware of the formative nature of the feedback activity so they were mostly supportive as they realized everyone was in the stage of idea forming and developing.

This study examined graduate students’ participation in and perceptions of peer feedback activity that supported project-based learning where students engaged in instructional design projects in an online environment. Several implications are discussed here. First, similar to the findings of Koh et al. (2010), students are likely to have positive project-based learning experiences when complex projects are structured into attainable milestones that help reduce project complexity and scale, as this makes hands-on experiences manageable for learners in an online environment. Second, a series of peer feedback activities offering useful formative comments can enhance learners’ project development processes. Having the opportunity to receive peer feedback can help learners validate ideas, identify issues, and revise drafts into a well thought-out project while peers contribute experiences and perspectives to enrich one’s own learning process (Ertmer, et al., 2007). Receiving peer feedback offers considerable benefit for learners who engage in the process of exchanging peer feedback (van der Pol, van den Berg, Admiraal, & Simons, 2008). Having the opportunity to provide peer feedback creates opportunities for higher order learning. Viewing examples of knowledge application in different contexts also helps broaden one’s understanding of the applicability of knowledge. When reviewing their peers’ work, learners can apply newly learned knowledge and their evolving understanding of the subject matter (Liu & Carless, 2006) in novel contexts (i.e., peers’ projects) by critically evaluating the appropriateness of peers’ application of knowledge and by elaborating on and justifying their own thinking.

Three challenges were identified in this study. First, some learners objected to the strict deadline structure imposed on the peer feedback activity to ensure feedback was provided and received in a timely manner. Admittedly, adult learners taking online courses mostly value the flexibility of online learning and scheduling. Educators and designers need to strike a fine balance between imposing structure on the peer feedback activity to maximize learning and preserving the flexibility of online learning. Second, the qualitative data revealed that some peer feedback was of low quality, which could have resulted from learners not spending sufficient time on the task or not being capable of providing constructive feedback. Guiding questions were incorporated into the design of the later discussions (Discussion 2 to Discussion 5) in order to scaffold learners’ generation of more constructive feedback. Although the results of the content analysis of the provided feedback seem to show a positive impact, future research is needed to explore this intervention in depth.

Lastly, while learners perceived positive benefits of peer feedback, this study did not investigate whether learners actually took the peer feedback into account to revise and improve their projects. Although many learners responded to the feedback they received because the course required it, not everyone responded or provided quality responses. This may suggest that some feedback was not deemed useful, or it may simply indicate that some learners were not as engaged in the peer feedback activity as others were. Future designs and implementations of the peer feedback activity may build in a concluding activity that requires students to write reflections on how the received feedback helped advance their projects or shape their ideas. In addition, instructional interventions such as having students complete a posteriori reply forms may help raise mindful reception of the feedback (Gielen et al., 2010).

Due to the exploratory nature of the current study, we used rich qualitative and content analysis data to describe the investigated phenomenon, and no inferential statistics were used to reach conclusions that extend beyond the collected data. As such, the findings should not be generalized beyond the described learning context. Future research is encouraged to further examine the pedagogical effects of peer feedback on students’ learning outcomes with research designs that can yield generalizable findings.

This paper examined students’ participation in and perceptions of the peer feedback activity that supported project-based learning in an online graduate course. Our findings indicate that a peer feedback activity can be effectively implemented in an online learning environment to facilitate students’ problem solving and project completion. Students actively participated in the peer feedback activity and were mostly positive about it; they perceived the activity as helpful support for their project-based learning. Content analysis of the provided feedback revealed that students were mostly supportive of peers’ work, and that they provided constructive feedback that can help improve peers’ work by asking their peers questions. In addition, providing guiding questions seems to be a useful instructional strategy that elicited peer feedback identifying problems and providing suggestions. This study provides empirical evidence to support the adoption of a peer feedback strategy for facilitating project-based learning in an entirely online learning environment. This study also reveals the variety of comments learners may provide when participating in peer feedback activities. As the literature indicates that learners may lack the skills to provide critical comments, educators and instructional designers may use the findings of this study to identify instructional interventions if they want to further guide learners in constructing particular types of feedback to strengthen learners’ critical thinking skills. Future research is encouraged to examine how peer feedback plays a role in learners’ development of projects and in their project outcomes.

Ally, M. (2004). Foundations of educational theory for online learning. In T. A. Anderson & F. Elloumi (Eds.), Theory and practice of online learning (pp. 3-31). Edmonton: Athabasca University.

Blumenfeld, P., Soloway, E., Marx, R., Krajcik, J., Guzdial, M., & Palincsar, A. (1991). Motivating project-based learning: Sustaining the doing, supporting the learning. Educational Psychologist, 26(3/4), 369-398.

Chi, M. T. H., Bassok, M., Lewis, M. W., Reimann, P., & Glaser, R. (1989). Self-explanations: How students study and use examples in learning to solve problems. Cognitive Science, 13, 145-182.

Cho, Y. H., & Cho, K. (2011). Peer reviewers learn from giving comments. Instructional Science, 39, 629-643.

Ertmer, P. A., Richardson, J. C., Belland, B., Camin, D., Connolly, P., Coulthard, G., et al. (2007). Using peer feedback to enhance the quality of student online postings: An exploratory study. Journal of Computer-Mediated Communication, 12(2), article 4. Retrieved from http://jcmc.indiana.edu/vol12/issue2/ertmer.html

Falchikov, N. (1996, July). Improving learning through critical peer feedback and reflection. Paper presented at the HERDSA Conference 1996: Different approaches: Theory and practice in Higher Education, Perth, Australia.

Gielen, S., Peeters, E., Dochy, F., Onghena, P., & Struyven, K. (2010). Improving the effectiveness of peer feedback for learning. Learning and Instruction, 20(4), 304-315. doi:10.1016/j.learninstruc.2009.08.007

Gunawardena, C. N., Lowe, C. A., & Anderson, T. (1997). Analysis of a global debate and the development of an interaction analysis model for examining social construction of knowledge in computer conferencing. Journal of Educational Computing Research, 17(4), 397-431.

Jonassen, D. H. (1998). Designing constructivist learning environments. In C. M. Reigeluth (Ed.), Instructional-design theories and models (2nd ed.) (pp. 215-239). Mahwah, NJ: Lawrence Erlbaum.

Knowles, M., Holton, E., & Swanson, R. (2012). The adult learner: The definitive classic in adult education and human resource development (7th ed.). London: Elsevier.

Koh, J. H. L., Herring, S. C., & Hew, K. F. (2010). Project-based learning and student knowledge construction during asynchronous online discussion. Internet and Higher Education, 13, 284-291.

Li, L., Liu, X., & Steckelberg, A. L. (2010). Assessor or assessee: How student learning improves by giving and receiving peer feedback. British Journal of Educational Technology, 41(3), 525-536.

Liu, N. -F., & Carless, D. (2006). Peer feedback: The learning element of peer assessment. Teaching in Higher Education, 11(3), 279-290.

Lu, J., & Law, N. (2011). Online peer assessment: Effects of cognitive and affective feedback. Instructional Science, 40(2), 257-275.

Lynch, M. M. (2002). The online educator: A guide to creating the virtual classroom. New York: Routledge Falmer.

Palloff, R. M., & Pratt, K. (2001). Lessons from the cyberspace classroom: The realities of online teaching. San Francisco, CA: Jossey-Bass.

Palloff, R. M., & Pratt, K. (1999). Building learning communities in cyberspace. San Francisco, CA: Jossey-Bass.

Phielix, C., Prins, F. J., Kirschner, P. A., Erkens, G., & Jaspers, J. (2011). Group awareness of social and cognitive performance in a CSCL environment: Effects of a peer feedback and reflection tool. Computers in Human Behavior, 27, 1087-1102.

Rovai, A. P., Ponton, M. K., Derrick, M. G., & Davis, J. M. (2006). Student evaluation of teaching in the virtual and traditional classrooms: A comparative analysis. Internet and Higher Education, 9, 23-35.

Schunk, D. H. (2008). Learning theories: An educational perspective (5th ed.). Upper Saddle River, NJ: Pearson.

Shute, V. J. (2008). Focus on formative feedback. Review of Educational Research, 78(1), 153-189.

Yu, F. -Y., & Wu, C. -P. (2011). Different identity revelation modes in an online peer-assessment learning environment: Effects on perceptions toward assessors, classroom climate and learning activities. Computers & Education, 57(3), 2167-2177.

Van der Pol, J., Van den Berg, I., Admiraal, W. F., & Simons, P. R. J. (2008). The nature, reception, and use of online peer feedback in higher education. Computers & Education, 51, 1804-1817.