This article presents an overview of the instructional models of Gagne, Briggs, and Wager (1992) and Laurillard (1993; 2002), followed by student evaluations from the first year of an online public health core curriculum. Both online courses and their evaluations were developed in accordance with the two models of instruction. The evaluations by students indicated that they perceived they had achieved the course objectives and were generally satisfied with the experience of taking the courses online. However, some students were dissatisfied with the feedback and learning guidance they received; these students’ comments supported Laurillard’s model of instruction. Discussion captured in this paper focuses on successes of the first year of the online curriculum, suggestions for solving problem areas, and the importance of the perceived relationship between teacher and student in the distance education environment.

One of the most widely used sets of guidelines for instructional designers stems from the instructional theory of Robert Gagne. His cognitive theory delineates nine sequential instructional events that provide the necessary conditions for learning (Gagne, Briggs, and Wager, 1992; Figure 1). In this model, the emphasis is on enhancing learner performance. As the events of learning are clearly defined, this model of instruction is compatible with Web-based courses – especially those that make extensive use of group or individual communication capabilities of the Internet. Many Web-based courses are designed according to Gagne’s instructional events, or similar models (Beetham, 2002).

Laurillard (1993, 2002) offers a somewhat different perspective on instructional design in technologically mediated instruction. In her book, Laurillard (1993) outlines five steps for the design of instruction that are necessary for “deep” learning to occur. In her Conversational Framework, Laurillard (2002) emphasizes the importance of dialogue between student and teacher rather than the transmission of information from teacher to student. In contrast with Gagne, Laurillard’s model of instruction was designed with interactive technology in mind.

Many studies have compared some form of computer-based learning with traditional classroom settings, and findings have generally indicated that computer formats can be as effective as, or in some cases more effective than, traditional classroom presentations (Kulik and Kulik, 1986; Russell, 1999). Some of the research focuses on online supplements to traditional classes, rather than entire online courses (e.g., Schulz and Dahale, 1999). One study comparing computer-based and traditional lecture versions of the same material revealed that students performed equally well after both types of instruction, but preferred traditional presentations (Dewhurst and Williams, 1998). Although these studies have clearly demonstrated the effectiveness of online learning as defined by student performance, it is less clear how students experience the learning process online, and how this experience shapes their overall impressions of a course. Twigg (2001) recommends using strong assessments, a variety of interactive materials and activities, individualized study plans, built-in continuous assessment, and varied human interaction to improve the quality of online courses.

In this paper, we will present the methods and findings for an evaluation of five public health core courses offered online during the 1999-2000 academic year. The instructional design template used to create online versions of these courses was influenced and supported by the work of both Gagne and Laurillard. As the creation and evaluation of these courses were informed by the research of both theorists, we begin by comparing their perspectives. To simplify this presentation, we compare Laurillard’s model with five of the nine steps of instruction proposed by Gagne – those that are similar to the components of Laurillard’s model. Findings reported will emphasize items and comments relevant to one or more of the five instructional events reviewed in the introduction.

Our evaluation was designed to answer the following questions:

1. To what degree did students believe they had attained the course objectives?

2. How did the teaching methods used in our online courses provide for five of the major instructional events described by Gagne, and supplemented by Laurillard’s Conversational Framework? In what ways, if any, did our methods fall short of these theorists’ recommendations?

3. How satisfied were students with the instructional features corresponding with each event of instruction in the online courses?

4. In what ways do our findings about student satisfaction with learning online support the theories of Gagne and Laurillard? Do our findings add new insights to their theories?

Gagne et al. and Laurillard present somewhat different perspectives on how best to design the learning environment, content, and context. Gagne argues that learning stimuli should be driven by what students will be asked to do with the material. In other words, there must be congruence between the learning material and the assessment of student learning. Students also need to be able to generalize concepts and rules to all applicable contexts, so instructors should present a variety of examples. Laurillard emphasizes the importance of students perceiving the structure of ideas being taught, if they are to understand those ideas at a “deep” level. While she does not discourage the use of examples, she does point out that many students become engrossed in the example and miss the underlying principle(s).

Although Gagne’s and Laurillard’s recommendations for presenting stimulus materials are not opposed to one another, they do reflect different conceptions of learning. Gagne’s suggestions are in keeping with the notion that students have learned the material if they perform well on an assessment; if students are able to do what the teacher asks of them, they have learned successfully. On the other hand, Laurillard appears to be more concerned that students comprehend the concepts being taught on a structural level. She stresses the importance of students understanding how important concepts within a course are related to one another, given that students often learn individual concepts well enough to define them for an examination but fail to see how they are interdependent. Thus, although assessment methods may influence the presentation of stimulus materials, they would not necessarily determine that presentation.

Simply being exposed to stimulus materials is not generally sufficient for learning to take place. Students are aided in the learning process by questions or prompts that take the students’ thoughts in the proper direction (Gagne et al., 1992). How direct these questions or prompts are, and how many are used, should depend on both the nature of the material being taught and the abilities of the students. Laurillard (1993) adds that teachers should be aware of students’ “likely misconceptions” about a given subject, based on available literature about teaching that subject, and prior experience. Teachers’ efforts to provide learning guidance should include questions to elicit these errors and give opportunities for clarification. However, rather than simply asking students questions and correcting their mistakes, Laurillard’s approach emphasizes the importance of establishing interactive dialogue between teacher and student to aid the learning process.

Before undergoing a formal assessment, such as an examination or term paper, it is helpful for students to have an opportunity to demonstrate what they have learned via homework assignments, student discussions, or other media. This performance allows students to gain confidence that they are learning the material, and enables the teacher to evaluate student learning and provide feedback (Gagne et al., 1992). This performance also provides further opportunities for the teacher to identify and correct common mistakes and misconceptions.

There is some difference of opinion between Gagne et al. (1992) and Laurillard (1993) about the most important aspects of feedback on student performance. Gagne et al. emphasize the necessity of students receiving information about the correctness of their performance. Laurillard, on the other hand, seems more concerned that students not just receive feedback about whether or not the performance was correct, but that the feedback aids the learning process. She differentiates between intrinsic feedback, which is a natural consequence of student action, and extrinsic feedback, which is simple approval or disapproval. In order for deep learning to occur, students need to be able to integrate the feedback they receive with the larger learning goals of the course. In other words, students need to be able to perceive their performance and feedback as the implementation of newly acquired knowledge, rather than as simply meeting an instructor’s requirements.

For the purposes of this paper, “assessment” will refer to formal examinations and papers rather than course activities and assignments. According to Gagne et al. (1992), teachers need to establish whether a student’s correct performance is reliable and valid. In order to determine reliability, students need to be able to repeat the correct performance using a variety of examples. The validity of the correct performance is determined more by the assessment itself, in that a valid performance genuinely reveals the desired learning capability. Thus, a student could perform correctly on an inappropriate assessment in the absence of proper learning.

Laurillard (1993) offers a slightly different view of the nature of assessment, in part because she specifically addresses new teaching technologies. “Part of the point of new teaching methods is that they change the nature of learning, and of what students are able to do. It follows that teachers then have the task of rethinking the assessment of what they do” (p. 218). Laurillard refers to assessment not as a concrete endpoint of learning, but rather as a step in the cycle of learning wherein students have the opportunity to describe their own conceptions of the material and concepts they have learned. Laurillard’s Conversational Framework represents more give and take between students and teachers, and less passivity on the part of students. However, given the circular nature of the framework, the role of end of course assessment receives less emphasis in Laurillard’s model than in Gagne’s.

The School of Public Health of the University of North Carolina at Chapel Hill (UNC-CH) requires all students to complete a core curriculum consisting of five courses. The Council on Education for Public Health (CEPH) likewise requires all public health students in the United States to take these courses (or close equivalents) in order to receive a Master’s degree in the field of public health. Each course provides an introduction to the key concepts of one department within the school (Biostatistics, Environmental Science and Engineering, Epidemiology, Health Behavior and Health Education, and Health Policy and Administration). Due to the CEPH’s requirements, UNC-CH elected to offer the entire core curriculum on the World Wide Web in addition to traditional, “live” classroom settings. Four of the five courses were taught online for the first time during the 1999-2000 academic year. The data presented in this paper largely reflect students’ evaluations of those new online courses.

Typical students enrolled in the Web-based core classes include: a) residential students who prefer (or in some cases are required) to take core classes outside their major online; and b) students involved in distance learning programs. Most students completing the core curriculum online are enrolled in one of the distance education degree programs offered by the School of Public Health. These students are professionals with full-time jobs (and often families as well); their motivations for enrolling in distance degree programs often include improving their current practice as public health professionals. Therefore, the online courses must be perceived as relevant to students’ current professions in order to benefit and motivate the students.

Although the courses vary in terms of faculty and material being presented, all core classes follow the same basic format of instruction. For the purposes of this article, we will focus on methods that are employed for the entire curriculum. Each course is subdivided into several units. In most courses, units are further subdivided into several lessons. Each lesson follows a common model presented below, in keeping with the steps in Gagne et al.’s (1992) instructional model. This common model allows students to know exactly what to expect after one or two lessons.

Students first read introductory material, which acquaints them with the faculty and teaching assistants, course objectives, lesson plan and schedule, and information about evaluation and grading. Lessons begin with stated learning objectives, which are followed by audio tutorials with slide presentations and (usually) reading assignments. All courses provide students with an email address for questions about the material; questions sent to this address are answered by the professors and teaching assistants. Students are also encouraged to turn to each other with questions and insights about the learning material via the course discussion forums.

There is some variation across courses in the methods employed to elicit student performance. In general, the courses use a case study approach; after learning the lesson concepts, students are exposed to a direct application of those concepts. Students then have to answer specific questions about the case to demonstrate that they can successfully apply the new concepts in a given situation. Most of the courses include a weekly discussion forum in which students are required to post their comments and insights about the current lesson or a related topic. Some courses also provide post-tests at the end of each lesson or unit. Every course uses at least one of these methods of eliciting student performance.

Feedback about student performance depends on the type of performance. Case study answers are generally evaluated by teaching assistants; students receive a quantitative grade and/or qualitative comments. Students receive feedback from each other for the discussion forums, as they often respond to and build on each other’s contributions. In some courses, teaching assistants also contribute to the discussion forums and comment on students’ remarks. Lesson post-tests are automatically graded on the Web, unless they include open-ended questions.

In addition to the lesson activities described above, all of the courses include formal examinations and some also include final projects or papers. Students either post their exams and papers to the course webpage or email their completed exams and projects directly to the professor. Students’ final grades are determined based on performance in discussion forums, completion of case studies and other activities, and scores on examinations (and final papers when applicable).

During the 1999-2000 academic year, students in all five courses were asked to complete an online evaluation at the end of the semester which consisted of 30 questions that assessed their satisfaction with the course and effectiveness of the teaching methods. These questions were constant across courses (all items used a four point scale, 1 = Strongly Disagree, 4 = Strongly Agree). Each course evaluation also included a unique set of questions to assess students’ achievement of the course objectives; these questions were based on input from discussions and interviews with the teaching faculty. Finally, every course evaluation provided space for open-ended comments. Over the course of one year (two semesters and two summer sessions), 273 students were enrolled in the online core courses. Of these students, 214 (78 percent) completed evaluations, and 171 (63 percent) wrote additional comments.
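
The response rates above can be checked with a short calculation. The sketch below uses only the counts reported in the text; the variable and function names are illustrative. It also makes explicit that both percentages take the 273 enrolled students as the denominator, so the 63 percent figure is a share of all enrolled students rather than of those who completed an evaluation.

```python
# Counts reported in the text for the 1999-2000 academic year.
enrolled = 273    # students enrolled in the online core courses
completed = 214   # students who completed an evaluation
commented = 171   # students who also wrote open-ended comments


def percent(part, whole):
    """Return part/whole as a percentage rounded to the nearest whole number."""
    return round(100 * part / whole)


print(percent(completed, enrolled))  # share of enrolled students who completed evaluations
print(percent(commented, enrolled))  # share of enrolled students who wrote comments
```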

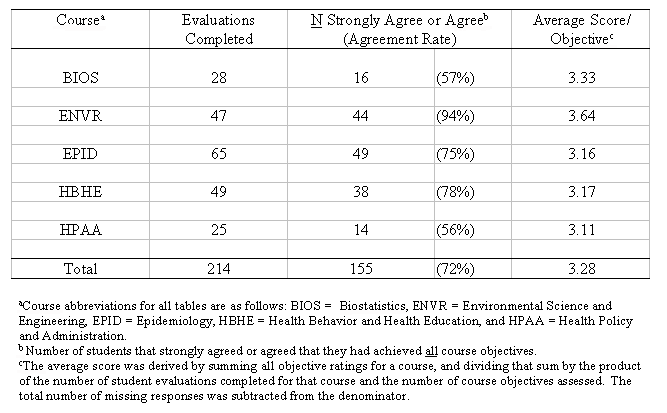

Each course’s online evaluation included a list of learning objectives for the course; students were asked the extent to which they agree that they achieved each learning objective. Students’ responses to these items were assessed in two ways (Table 1). First, we determined the proportion of students in each course who agreed that they had achieved every learning objective (i.e., selected the response “Strongly Agree” or “Agree” for every learning objective item). This proportion varied widely between courses, with as many as 94 percent of the students in one course agreeing that they had achieved every learning objective, and as few as 56 percent of the students in another course reporting that they had accomplished all learning objectives.

Second, we calculated the average score of all learning objective items within each course. This analysis yielded a much more unified picture across courses, indicating that students in all courses were likely to agree that they had achieved any individual learning objective (overall M = 3.28; 1 indicates “Strongly Disagree” and 4 indicates “Strongly Agree”). Together, these analyses suggest that students felt moderately to very successful in learning the desired material in a distance learning format. Student grades were consistent with student evaluations of achievement of learning objectives; virtually all of the students received a passing grade.
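
The two summaries described above can diverge, which may explain why the first varied widely across courses while the second did not. The following minimal sketch illustrates both calculations on a small set of hypothetical ratings; the data, names, and threshold constant are assumptions made for illustration and are not the study's data or analysis code.

```python
from statistics import mean

# Hypothetical ratings for one course (NOT the study's data). Each inner list
# holds one student's ratings of the course's learning-objective items on the
# four-point scale (1 = Strongly Disagree, 4 = Strongly Agree).
responses = [
    [4, 3, 3],
    [3, 3, 2],
    [4, 4, 4],
    [3, 2, 3],
]

AGREE_THRESHOLD = 3  # "Agree" (3) or "Strongly Agree" (4)


def proportion_agreeing_on_all(ratings):
    """First summary: share of students who rated every objective 3 or 4."""
    agreed_all = sum(
        all(r >= AGREE_THRESHOLD for r in student) for student in ratings
    )
    return agreed_all / len(ratings)


def mean_item_score(ratings):
    """Second summary: mean rating pooled over all students and items."""
    return mean(r for student in ratings for r in student)


print(proportion_agreeing_on_all(responses))
print(round(mean_item_score(responses), 2))
```

In this toy example only half of the students agree with every objective, yet the pooled mean remains above 3, because a single "Disagree" rating excludes a student from the first summary while barely moving the second.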

The online evaluation form included nine questions assessing students’ satisfaction with how course materials were presented (Table 2). The majority of students in our sample were pleased with the delivery of learning materials, particularly the professors’ overall presentation of material (M = 3.31), and the orchestration of activities, readings, and assignments (Ms = 3.31). Students were least satisfied with guest lecturers (M = 2.88) and with article readings (M = 2.98). However, the qualitative comments indicate that students’ dissatisfaction in these areas may have been at least partially due to technical difficulties, as the following examples illustrate:

The reading material does not print well and is hard to read on the screen. Maybe next year just supply a CD with all of the readings on it.

The guest speakers need to speak more fluently and more slower.

Students’ qualitative comments about course materials generally pertained to the readings and online tutorials, but occasionally included information about other resources provided with the course materials (Web-links, etc.). A few students complained that lectures were dry or hard to follow. In most courses, students were pleased with the level of the material, but some felt that the material was too simplistic. These students’ comments indicated that they were not aware that the courses included undergraduate and graduate students in addition to professional students.

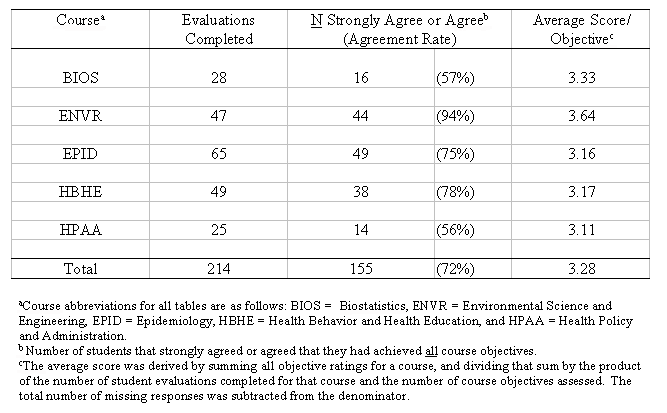

The online evaluation form included five questions concerning the helpfulness of course assignments and activities (Table 3). In general, students agreed that performing the various exercises aided their learning. Specifically, students agreed that the number of course assignments was reasonable (M = 3.09), that the activities and case studies helped their learning (M = 3.23), that practice problems helped their learning (M = 3.19), and that quizzes helped their learning (M = 3.25). The one activity that students generally found less helpful was the discussion forum (item M = 2.86). In all four courses that included a discussion forum, students offered suggestions for how the forum could be improved. Several students made statements to the effect that the forum did not add to the course and should be dropped. Only a few students were unequivocally positive about the forum experience; comments such as the following were more typical:

The discussion forums were frustrating to me. Other group members often did not post in a timely fashion. . . . Besides the frustration factor, I didn’t learn a lot from the group forum – I would rather learn from an expert (the professor) than other students.

I would recommend changing the format of the discussion forum to more of an INTERACTIVE forum. This was not the case with the current format. We essentially posted individual responses and did not respond to the other students’ postings. This interaction can be a significant learning experience.

There were many suggestions for how to change the forums, but no consensus emerged. This is one issue that is difficult to resolve because it is so dependent on student input.

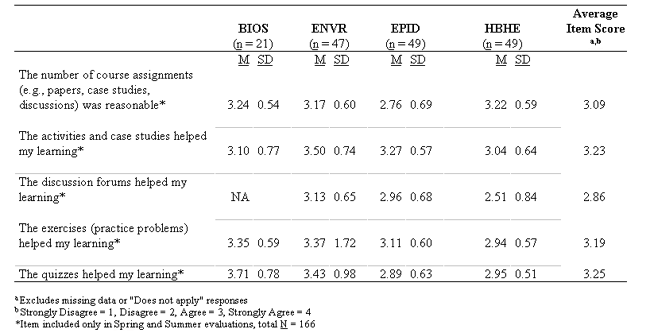

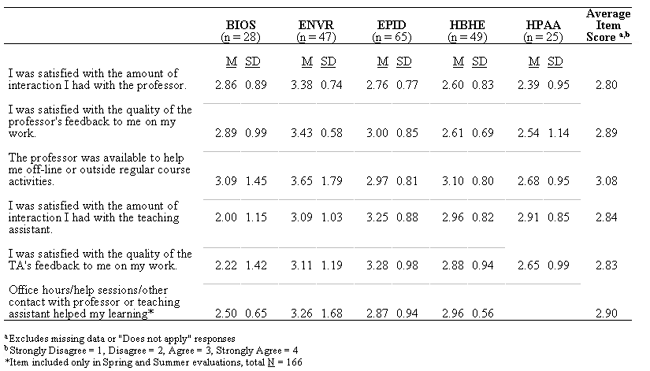

Students answered six questions about the helpfulness of feedback given on their work and quality of interaction with professors and teaching assistants (Table 4). Students’ answers to these items reflected less satisfaction in this area than with presentation of course materials or eliciting student performance. Item means indicated that several students disagreed with statements that they were satisfied with the amount of interaction they had with the professor (M = 2.80) and that they were satisfied with the quality of the professor’s feedback on their work (M = 2.89). Items concerning amount of interaction with the teaching assistants (M = 2.84) and the quality of the teaching assistant’s feedback on work (M = 2.83) received similar ratings. Examination of students’ written comments reveals that direct feedback from the faculty and teaching assistants, consisting of comments and corrections on submitted work (rather than scores alone), played a large role in students’ experience of the course. In courses where there were problems with returning assignments, many students felt that their learning had been compromised. When students received grades without comments or corrections of their work, they specifically requested either individualized feedback or examples of ideal responses:

I also would have loved some feedback on my case study work. I think the last time I received any was on lesson three. We were being given exams before we knew if our work was correct or not being provided information at all.

I received very little feedback from my TA about my work. When we did have a response to our case study assignments, they were too late to help in taking the exam. Eventually all case study reviews stopped and it was difficult to know if you were doing anything right.

The issue of feedback was raised by students in every course. It may be that the lack of opportunities for “live” interactions (for questions and discussions about the material) leaves students feeling less sure of themselves when completing assignments and exams, thus heightening the importance of qualitative feedback.

In addition to highlighting the importance of feedback on assignments, many students expressed strong feelings about their attempts to interact with their professors or teaching assistants individually via email. Students who received prompt replies to their questions were pleased, while students whose emails were not answered promptly (or at all) were negative.

Overall, I would rank this class number one among the three Web-based classes I took. The professor was very organized and responded to questions and inquiries promptly.

There were several times beginning with the mid-term, that we asked for help and never received answers or guidance from the professor or TA. This was very frustrating.

In their comments about responses to emails, some students mentioned the importance of this method of communication in the absence of a traditional classroom.

Students answered three questions about the extent to which examinations and grading aided their learning of the material (Table 5). Overall, students in our sample seemed moderately satisfied with examinations, although there was considerable variation both within and across courses. In several of the courses, a few students complained that the material covered in the readings and tutorials did not match the material in the exams, or that test questions were poorly worded. However, these types of comments were limited in number. Furthermore, these types of comments frequently occur in traditional learning environments as well. Most of the students’ test-related comments concerned how feedback was delivered. As with case studies and assignments, students were upset when they did not receive feedback (beyond a simple score), or when feedback was not delivered quickly.

I really wish we had gotten our exams back. I learn the most through my mistakes.

I would also recommend faster grading of submissions. I have the final exam this week and still do not know about my Exam II results.

A few students suggested posting “ideal” responses to qualitative test questions, and/or explanations of why the correct responses were the best answers for forced-choice items. This may be an attractive solution to the problem of grading examinations, because the professor would only have to write the information once, but most students’ questions about how individual items were graded would be answered.

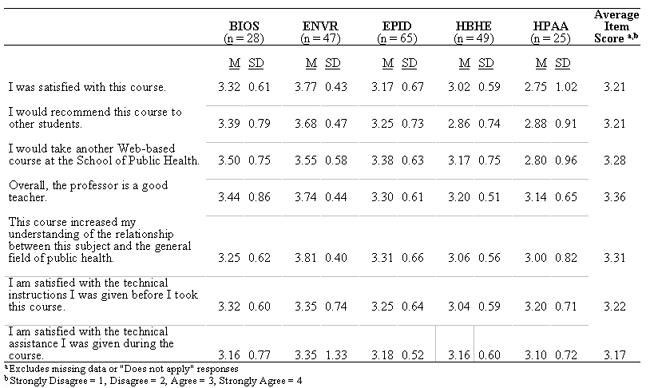

Five questions assessed students’ overall satisfaction with the course, and two questions concerned the technical assistance they received (Table 6). Despite some of the problem areas noted above, most students agreed that they were satisfied with the courses (all item Ms > 3.00). In each of the five courses, several students wrote general comments reflecting positive experiences.

This class was great. I can’t say enough great things about how clear [the professor] has been in the tutorials, how helpful the quizzes were in promoting my learning, and how logically organized everything was.

This was a very well designed course. It is obvious that a lot of time, effort, and thought went into developing this Web-based course. As a distance learner, I really appreciate this! I learned a lot about our environment and I feel my tuition money was well spent on this course.

I enjoyed the class, learned a lot, and was very impressed with the overall organization of the material, website, etc. Thanks for a great semester.

The online format of the course was great. I learned just as much via this format as I would have learned if it had been an “on campus” course.

I enjoyed taking this course. I learned a great deal and have a much better understanding of the US health care system. This was the first time I had taken a Web-based course and I was a little apprehensive at first, but in the end I found that I really liked the online course.

It is interesting to note that most students’ positive comments were broad or general in nature. Sweeping negative statements were relatively rare; students who reported being dissatisfied with an entire course were more likely to have a list of specific complaints. Most of these problem areas were addressed above, and included issues such as slow feedback. Only a few students felt that the distance learning format itself was the source of their dissatisfaction.

Students’ perceptions of their achievement of course objectives, combined with the high percentage of students passing the courses, indicate that students were able to learn effectively online. Overall, both the quantitative and qualitative evaluations given by students indicated satisfaction with the online core courses. Students were more pleased with some learning events than others (for example, evaluation items and comments relevant to presenting the stimulus material were generally more positive than the items and comments referring to providing feedback). However, almost all item means indicated that most students agreed with positively worded statements about the courses. Examination of students’ qualitative comments revealed that many students felt that they had learned a lot and enjoyed the distance learning format.

The learning event that generated the lowest item ratings and the highest proportion of negative comments was providing feedback. Students’ concerns in this area tended to focus on: a) speed with which feedback was given; and b) whether qualitative feedback was given. Few students had any complaints about whether the grading was fair; instead they were concerned that meaningful (e.g., qualitative) feedback be given quickly enough to guide their future performance. This finding supports Laurillard’s (1993) assertion that “. . . it is not just getting feedback that is important, but also being able to use it” (p. 61). In current versions of these courses, there has been extended training of teaching assistants to ensure rapid grading of assignments and responses to students’ email questions. Faculty members are also increasing their use of class listservs to provide uniform responses to common questions and clarification of difficult concepts. For example, one course now includes a special page in every unit that students may use to ask the professor any remaining questions about the material. The professor then sends the question and the answer to the entire class via the class listserv (cf. Angelo and Cross, 1993). Student evaluations of these online courses in the past two years indicate that these changes have enhanced student satisfaction with learning guidance and performance feedback.

In general, students were less pleased with the discussion forum than with other activities and assignments. A few students reported favorable discussion forum experiences, but the majority of the students’ comments reflected a lack of enthusiasm for this activity. Students complained about the lack of involvement on the part of faculty and teaching assistants, the lack of interactivity in the forum, and the problem of other group members not posting responses in a timely fashion. Several students made statements to the effect that the discussion forum did not add significantly to their learning experience and were in favor of removing it from the course activities. In response to these issues, one course changed the format of the discussion forum so that it is now always student led. Students are taught how to lead the discussion forum early in the session. For each unit, the student discussion forum leader sends the professor a list of the insights and questions generated by the forum; the professor posts a response to all students in the forum. This procedure allows the forum to be student led while enabling the professor to give qualitative feedback to students without undue added burden of time. Student satisfaction with the discussion forum has improved in this course.

Students’ comments revealed an aspect of the learning experience that neither Gagne et al. (1992) nor Laurillard (1993) addressed: the students’ perception of their relationship with their teachers. Although students were primarily concerned with learning the course material, their comments indicated that they also formed judgments about whether the professors and teaching assistants “cared” about them as students. Generally, students who felt that they could contact their professor (or teaching assistant) and receive a timely, thorough, and kindly worded response to concerns or questions perceived that their instructors wanted them to learn. These students tended to be positive in their general comments about the course. In contrast, students who reported that their questions were not answered, or not answered quickly enough, or who perceived sarcasm or condescension in responses from faculty or teaching assistants, were much more likely to report negative feelings about the course as a whole. Thus, although students rated stimulus materials, learning guidance, and feedback as important to the learning experience, these were not necessarily sufficient. Students were also attuned to their instructors’ manner (even in the absence of live interaction), and it significantly shaped their overall impressions of the course. In light of these findings, one course now includes training for teaching assistants on expectations and proper online responses, and offers students the opportunity to evaluate their teaching assistants one-third of the way through the semester. This innovation has improved the consistency of teaching assistants’ performance and aided the faculty in discovering any problems early enough to be corrected.

Our findings indicate that online courses can be an effective method of teaching the core concepts of public health to graduate and professional students. Gagne et al.’s (1992) instructional model can be applied to distance learning, permitting students to achieve course learning objectives and have a positive learning experience. However, there are some pitfalls unique to the distance learning environment. Students want (and expect) quick and detailed responses to their questions and concerns, as well as timely, qualitative feedback on their work. These findings support Laurillard’s Conversational Framework as well; students in distance learning courses apparently expect active interaction with their teachers. Students who felt that these expectations were met tended to be positive about the course, and about distance learning in general. These expectations can be time-consuming for professors and teaching assistants to meet, but there are possible compromises that will satisfy both teachers and students, such as posting ideal responses to exam questions and using a course listserv to answer common questions. Student and faculty evaluations of more recent versions of the online courses reveal that these measures have improved satisfaction with distance learning for both parties.

There are several strengths and limitations to the present work. Strengths include the fact that both the course design and evaluation were influenced by the same theories of learning, the breadth of material presented online and evaluated by students, the diversity of students completing the courses, and the high response rate for students completing course evaluations. Unfortunately, because the methods of evaluation differed, students’ evaluations of the online curriculum could not be compared with those of students in the traditional classroom. Other research has found little difference between online and traditional presentations of university courses in student satisfaction (Allen, Bourhis, Burrell, and Mabry, 2002). In addition, four of the five courses were being offered online for the first time, which may have yielded negative evaluations that reflect technological difficulties and other problems inherent in new courses. Future evaluation research of distance learning programs could compare student evaluations of new courses with those of more established courses, as well as with traditional learning formats of the same material. Continuing to draw on learning theory to develop courses and evaluations will provide distance educators with helpful models to aid in developing new courses.

Table 1. Achievement of All Course Objectives

Table 2. Presenting the Stimulus Material

Table 3. Eliciting Student Performance

Table 4. Providing Feedback and Learning Guidance

Table 5. Assessment of Student Learning

Table 6. Students' General Satisfaction with the Courses

Figure 1. Five Events of Instruction Defined by Gagne, Briggs, and Wager (1992) Compared with Laurillard's (1993) Steps in the Learning Process

Allen, M., Bourhis, J., Burrell, N., and Mabry, E. (2002). Comparing student satisfaction with distance education to traditional classrooms in higher education: A meta-analysis. The American Journal of Distance Education, 16, 83-97.

Angelo, T. A., and Cross, K. P. (1993). Classroom Assessment Techniques: A handbook for college teachers (2nd ed.). San Francisco: Jossey-Bass.

Beetham, H. (2002). Design of learning programmes (UK). Retrieved August 2, 2002 from: http://www.sh.plym.ac.uk/eds/elt/session5/intro5

Dewhurst, D. G., and Williams, A. D. (1998). An investigation of the potential for a computer-based tutorial program covering the cardiovascular system to replace traditional lectures.

Gagne, R. M., Briggs, L. J., and Wager, W. W. (1992). Principles of instructional design (4th ed.). Fort Worth, TX: Harcourt Brace Jovanovich.

Kulik, C. L. C., and Kulik, J. A. (1986). Effectiveness of computer-based education in colleges. AEDS Journal, 19, 81-108.

Laurillard, D. (1993). Rethinking University Teaching: A framework for the effective use of educational technology. London: Routledge.

Laurillard, D. (2002). Rethinking university teaching in a digital age. Retrieved August 2, 2002 from: http://www2.open.ac.uk/ltto/lttoteam/Diana/Digital/rut-digitalage.doc

Russell, T. R. (1999). The no significant difference phenomenon. Montgomery, AL: International Distance Education Certification Center.

Schulz, K. C., and Dahale, V. (1999). Multimedia modules for enhancing technical laboratory sessions. Campus-Wide Information Systems, 16, 81-88.

Twigg, C. (2001). Innovations in online learning: Moving beyond no significant difference. Troy, NY: Center for Academic Transformation, Rensselaer Polytechnic Institute.