Ruth Aluko

University of Pretoria, South Africa

This paper examines the impact of a distance education program offered by the University of Pretoria, South Africa, on the professional practice of teachers. A pilot study was conducted using a combination of surveys and focus group interviews. Findings reveal that the program benefited graduates’ personal development and professional practice, as well as their schools, learners, and colleagues. Further, principals who participated in the study attested to differences they observed between the graduates and other teachers who had not been exposed to such a program. Suggested improvements included introducing school subjects as areas of specialization, involving school principals in the assessment of enrolled students, having the organizers visit schools, and exposing students to the practical opportunities offered by the program (with portfolios that could form part of the assessment).

Keywords: distance education; program evaluation; impact analysis; summative evaluation; formative evaluation

Distance education methods have been used to teach, develop, and support teachers for many years (United Nations Educational, Scientific and Cultural Organisation [UNESCO], 2001), and their effectiveness is well documented. The value of distance education is reflected partly in the demand for continuing education for teachers in a changing world and partly in policy makers’ and planners’ shift of attention from quantity to quality (Robinson & Latchem, 2002). However, only by systematically evaluating the effectiveness of distance education can one justify the use of such programs for teacher development and continue to improve their quality (Lockee, Moore, & Burton, 2002). Reasons for evaluating training programs include the need to decide whether to continue offering a particular program, the need to improve future programs, and the need to validate the existence and jobs of training professionals (Kirkpatrick, 1994).

Moore (1999) claims that one of the few generalizations that can be made about any distance education program is that a good monitoring and evaluation system is key to its success. Furthermore, measuring educational outcomes is a useful way to assess how well public service programs are serving the greater public interest (Newcomer & Allen, 2008). This study therefore seeks to determine the extent to which the Advanced Certificate in Education (Education Management) program achieves the outcomes set for it by the country’s national Department of Education. Although evaluating distance education programs is challenging because of the complex infrastructure and the array of personnel involved (Lockee et al., 2002), the data collected from such evaluations, which may cover attitudes towards a program as well as its learning outcomes, can be used to redesign it (Cyrs, 1998).

Measuring the success of any educational program is recognized as an important and fundamental form of institutional accountability; however, research on the impact of distance education programs is sparse (Fahy, Spencer, & Halinski, 2007). A survey of the literature shows that most work in this area focuses on individual aspects of the mode of delivery, for instance the impact of new technologies on distance learning students (Keegan, 2008). Very few studies examine a program as a whole or, in particular, its effect on the professional practice of teachers (Stols, Olivier, & Grayson, 2007). This study seeks to contribute towards addressing this gap.

Evaluation is one of the ten steps that should be carefully considered when planning and implementing an effective training program (Kirkpatrick, 1996). Various models have been proposed for evaluating training programs, some dating back decades and some more recent. Kirkpatrick’s model is the oldest and has been the most reviewed since its inception in 1959 (Naugle, Naugle, & Naugle, 2000), and many more recent models may be considered variations of it (Tamkin, Yarnall, & Kerrin, 2002). Kirkpatrick’s (1996) model has four levels: reaction, a measure of customer satisfaction; learning, the extent to which participants change attitudes, improve knowledge, and/or increase skills as a result of attending the program; behaviour, the extent to which participants’ behaviour has changed because they attended the program; and results, the final outcomes achieved because participants attended the program, such as increased production, improved quality, and decreased costs.

Kirkpatrick’s model can reduce the risk of reaching biased conclusions when evaluating training programs (Galloway, 2005). Although the model has been in use for decades, scholars have criticized it for oversimplifying what the evaluation of training programs involves and for failing to consider the various intervening variables that affect learning and transfer (Tamkin, Yarnall, & Kerrin, 2002; Reeves & Hedberg, 2003). Aldrich (2002) remarks that it focuses only on whether outcomes have been achieved. To address these limitations, Clark (2008) suggests that Kirkpatrick should have presented the model as both a planning and an evaluation tool, and that it should be turned upside down so that its steps become a backwards planning tool. Still, the model is seen as offering flexibility, as it permits users to align training outcomes with other organizational tools (e.g., company reports and greater employee commitment) (Abernathy, 1999). Though the model has been used for decades to evaluate commercial training programs, some scholars have suggested its use in research studies of academic programs and have used it for this purpose (Tarouco & Hack, 2000; Boyle & Crosby, 1997), while others have suggested combining it with other professional development tools (Grammatikopoulos, Papacharisis, Koustelios, Tsigilis, & Theodorakis, 2004). Level four of Kirkpatrick’s model is particularly relevant to distance learning because, like level two, it seeks tangible evidence that learning has occurred (Galloway, 2005). This researcher has found the model relevant and useful in evaluating the impact of a distance education program, and its implications and relevance are discussed in this article.

Guba and Lincoln’s (2001) fourth generation evaluation approach regards stakeholders as the primary agents in determining the value of a given program (Alkin & Christie, 2004). To satisfy national stakeholders, this study was carried out in line with the recommendations of the Higher Education Quality Committee (HEQC), the agency with executive responsibility for quality promotion and quality assurance in higher education in South Africa (HEQC, 2009). The study investigated the views of graduates of the program and of their principals and immediate line managers in order to construct multiple realities from their perceptions (Alkin & Christie, 2004). The role of the researcher was to tease out these constructions and to uncover any information that could be brought to bear on evaluating the program (Guba & Lincoln, 1989).

In South Africa, ongoing changes are reshaping the educational landscape, and one of the major areas of focus in the country is the accountability of education providers. This is driven partly by the need to provide value for money (Council on Higher Education [CHE], 2005). Internationally, too, the importance of improved accountability at all levels of public education is recognized (Fahy et al., 2007). Factors that call for increased accountability in higher education include, among others, disappointing completion rates and perceived inadequacies in preparing graduates for the demands of the global economy (Shulock, 2006). One of the fundamental forms of institutional accountability is gathering and analyzing information about the careers of students after graduation (Fahy et al., 2007), which supports an emphasis on quality improvement throughout all facets of an institution’s academic provision (Pretorius, 2003).

Although the qualifications of the country’s teaching force have improved, most reports indicate that the majority of teachers have not yet been sufficiently equipped to meet the education needs of a growing democracy in the twenty-first century (Department of Education [DoE], 2006). Two of the most important factors in determining the quality of education are the academic level and the pedagogical skills of the teacher (Chung, 2005). The government’s policy to provide “more teachers” and “better teachers” is underpinned by the belief that teachers are the essential drivers of good quality education (DoE, 2006), an assertion supported by various research studies (Robinson & Latchem, 2002). In pursuing this goal, it is therefore imperative for stakeholders to evaluate the effectiveness of teacher development programs.

One of the key principles in the Revised National Curriculum Statement (RNCS) of the South African Government focuses on developing the knowledge, skills, and values of learners (DoE, 2005). This principle naturally shapes the goals of any program designed for the educators of such learners. The Advanced Certificate in Education (ACE) is a professional qualification that enables educators to develop their competencies or to change their career path and adopt new educator roles (DoE, 2000). The admission requirement for the ACE is a professional qualification (CHE, 2006); for those who wish to specialize in Education Management, this may be a three-year teacher’s diploma, a bachelor’s degree in education (BEd), or a postgraduate certificate in education (PGCE) (University of Pretoria [UP], 2009). The specified overall learning outcomes of the ACE require a qualified practitioner at this level to be able to fulfill the role of a specialist education manager (DoE, 2001). Successful candidates need to be highly competent in the knowledge, skills, principles, methods, and procedures relevant to education management; be prepared for a leadership role in education management; understand the role of ongoing evaluation and action research in developing competence within their chosen aspect of education management; and be able to carry out basic evaluations and action research projects.

The Unit for Distance Education at the University of Pretoria offers three distance education programs, one of which is the ACE (Education Management) program, established in 2002. Based on the nationally specified overall learning outcomes, the university identified four key dimensions for this ACE: Education Management (two modules), Organisational Management (two modules), Education Law (one module), and Social Contexts of Education and Professional Development (one module). The program is built around these six modules, which are presented in three blocks of two modules each. Students may enrol at any time and can complete the program in a minimum of three and a maximum of eight six-monthly examination sessions (UP, 2006).

The demographic profile of enrolled students reveals that the majority are dispersed across deep rural areas of the country. Only 1% of them have access to the Internet, but 99% have access to mobile technology (Aluko, 2007). To meet the needs of this student population, paper-based distance learning materials are provided in the form of learning guides, tutorial letters, and an administration booklet, and face-to-face contact and tutorial sessions are organised at various centers around the country (UP, 2009). The university has also adopted mobile phone technology, in the form of SMS text messaging, for both administrative and academic purposes.

In terms of evaluation, the program has often relied on student feedback (formative evaluation) to ascertain its strengths and weaknesses, which in turn has guided improvements to its quality (UP, 2006). However, after reviewing the program in 2006, the HEQC suggested that the university determine its impact on graduates’ professional activities. The research question posed was therefore the following: What is the impact of the Advanced Certificate in Education (Education Management) program on the professional practice of graduates, and what suggestions could be offered for its improvement? It is hoped that the answers to this question will inform improvements to the program.

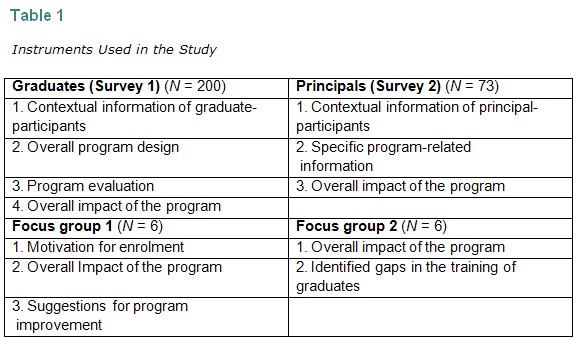

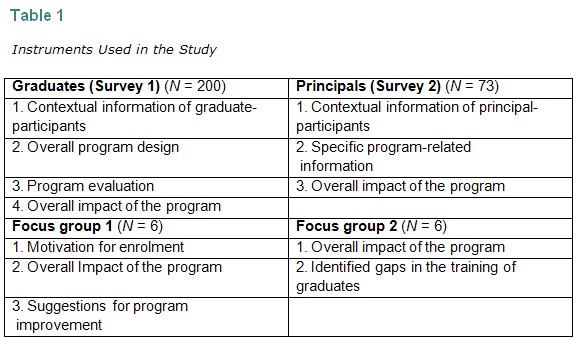

Survey instruments have been identified as the most popular data collection tools in outcomes measurement and evaluation (Champagne, 2006; Newcomer & Allen, 2008). However, relying on a single tool makes it difficult to reach an in-depth understanding of the phenomenon and can lead to skewed findings (Lee & Pershing, 2002). Researchers are therefore advised to adopt multiple strategies (Reeves & Hedberg, 2003; Newcomer & Allen, 2008). For this study, the researcher combined surveys and focus group interviews. This combination of methods capitalizes on the strengths of both qualitative and quantitative approaches and compensates for the weaknesses of each (Punch, 2005). Table 1 shows the instruments used, the participants, and the areas of focus for each instrument.

Question items for the surveys were mostly open-ended, which placed ownership of the data much more firmly in the respondents’ hands (Cohen, Manion, & Morrison, 2000). Some closed questions were included where fixed responses were needed, for example the questions relating to contextual information. Given this emphasis on open-ended items and the inclusion of focus group interviews, the study was largely qualitative.

To enable quicker responses, the survey instruments were first piloted by post on a sample of 20 graduates from Gauteng Province, where the university is located. The two interview schedules were piloted with a graduate and a principal in the same locality. The pilot process enabled the researcher to improve some questions that appeared to be ambiguous.

After the instruments had been improved, both surveys were sent out by post with covering letters that explained the purpose of the study and requested the voluntary participation of the targeted groups. It was made clear that participants could withdraw from the study at any stage. Further, for graduates who might be willing to let us contact their principals/line managers, the letter explained the implications of the non-confidential nature of the second survey. According to Konza (1998), it is important to consider the dignity of participants in any research study.

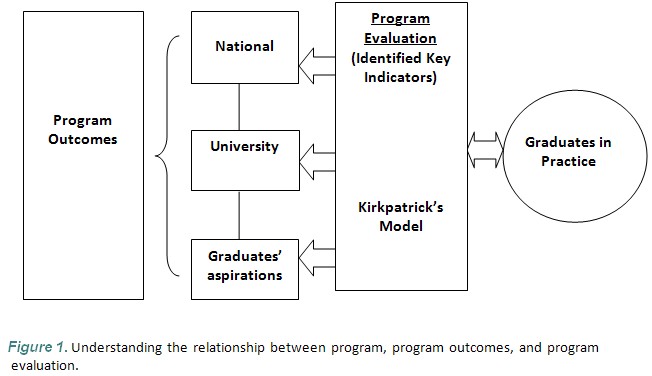

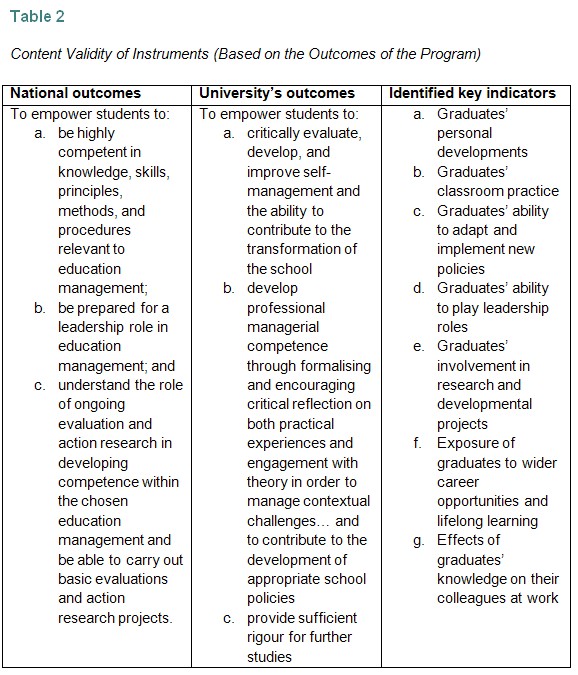

To ensure content validity of the instruments, the questions were based on the stated outcomes of the academic program (see Background to the Study) with a focus on the Kirkpatrick evaluation model. With reference to Kirkpatrick’s model, the researcher developed a schematic diagram that shows the dependent relationships between the outcomes of a program, which should be determined by national needs (that institutions are expected to support), the aspirations of incoming students, and program evaluation. This relationship is depicted in the figure below.

The researcher translated the outcomes of the three identified stakeholders (national, university, and graduates) into key indicators of the impact of the study program. These outcome-based indicators, which underpin the content validity of the instruments, are shown in Table 2.

Research shows that reports of graduates’ careers are often based on varying sample sizes (Fahy, Spencer, & Halinski, 2007). For this study, the first two cohorts to graduate from the program (those of 2004 and 2005) were targeted. There were 1,970 graduates in total, of whom 1,455 graduated in 2004 and 515 in 2005. Their principals or line managers were also included in the target groups in order to gain a second opinion on the impact of the program, beyond that reported by the graduates themselves. These cohorts were chosen because the researcher judged that they would have had time to put into practice what they had learned. Multi-stage and purposive sampling techniques were used to identify prospective participants (Punch, 2005).

Three provinces out of the nine in the country were targeted. These were Gauteng, Mpumalanga, and Limpopo. These provinces were chosen for three reasons. First, their proximity to the university campus facilitated telephone contact and postal delivery (the project relied mainly on the postal delivery system, which may be unpredictable). Second, the majority of the students in the program tend to be drawn from two of the targeted provinces (Mpumalanga and Limpopo). Third, the choice of nearby provinces enabled the researcher to have adequate time for the planned focus group interviews as participants for the interviews were identified from the returned surveys.

Survey one.

The names and contact information of 1,000 (51%) of the 1,970 graduates who had completed the program in 2004 and 2005 were drawn from the university’s mainframe computer. These were all the graduates from the three provinces selected for the study. An SMS (short message service) text message was first sent to them to inform them about the study and its aims; this was possible because the university uses mobile technology and bulk SMS messaging to support students enrolled on the program. Surveys were then sent by post to the 1,000 graduates, each package including a postage-paid return envelope. It was not possible to send the surveys electronically because, as indicated earlier, most students enrolled on the program were from rural areas and had little access to email or the Internet. A follow-up SMS was sent to remind and encourage participants to complete the surveys and return them on time. In addition, the graduates were asked to indicate, by providing contact details, whether they were willing to let us contact their principals/line managers. A total of 228 surveys came back from graduates. Of these, 20 were returned unopened (probably undelivered) and eight were received after the cut-off date, which mattered in view of the project’s time constraints. A total of 73 graduates gave us permission to contact their principals/line managers. In the end, 200 surveys from graduate-participants were analyzed (a 20% response rate). In a similar study (Distance Education and Training Council, 2001), a return of 18% was regarded as acceptable for mail surveys.
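The path from the sampling frame to the surveys analyzed involves several subtractions and two rounded percentages. As a check, the short Python sketch below recomputes them using only the counts reported for Survey one; the variable names are illustrative, and rounding to whole percentages is assumed to match how the text reports rates.

```python
# Sample and response-rate arithmetic for Survey one.
# All counts come from the text; variable names are illustrative only.
cohort_2004 = 1_455
cohort_2005 = 515
total_graduates = cohort_2004 + cohort_2005   # all 2004 and 2005 graduates

mailed = 1_000      # every graduate in Gauteng, Mpumalanga, and Limpopo
returned = 228      # surveys that came back
unopened = 20       # returned unopened (probably undelivered)
late = 8            # received after the cut-off date

analyzed = returned - unopened - late

# Rates rounded to whole percentages, as reported in the text.
share_mailed = round(100 * mailed / total_graduates)
response_rate = round(100 * analyzed / mailed)

print(f"Graduates in both cohorts: {total_graduates}")
print(f"Surveyed: {mailed} ({share_mailed}% of all graduates)")
print(f"Analyzed: {analyzed} ({response_rate}% response rate)")
```

Note that the 20% rate uses all 1,000 mailed surveys as its denominator, including the 20 that were probably never delivered; measured against the 980 presumably delivered, the rate would be slightly higher (200/980, about 20.4%).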

Survey two.

The second survey was sent by post, again with self-addressed return envelopes, to the principals/line managers identified by the 73 graduates. Only 13 (18%) of them returned their surveys. They were asked to give the name of the graduate about whom they were completing the questionnaire. This compromised confidentiality to a large extent, but it was unavoidable given the purpose of the study.

Focus group interviews one and two.

Fourteen participants indicated their willingness to attend the two focus group interviews (seven participants per group), but two of them later declined. Thus, 12 interviewees (six graduates and six principals) participated in the two respective focus group interviews in order to validate some of the trends that emerged from the surveys. Several phone calls were made to follow up with those who indicated their willingness to be interviewed. Since most interviewees were from geographically dispersed rural areas, the Unit for Distance Education covered their travel and subsistence costs.

Each focus group interview lasted between one and one-and-a-half hours and was recorded. Two research assistants were employed to assist the interviewer: one operated the recording device, while the other took notes to ensure that every important point was captured.

Atlas.ti 5.0, a computer-assisted qualitative data analysis package, was used to analyze the interviews; relevant quotations and codes were identified based on the concepts and themes most frequently mentioned by interviewees (Hardy & Bryman, 2004). In addition, codes were developed for the open-ended questions after the questionnaires were returned, while the few quantitative items in the questionnaires were analyzed by the Statistics Department of the University of Pretoria to obtain cumulative frequencies and cumulative percentages. The codes for both the interviews and the questionnaires were aligned with the key indicators shown in Table 2. The planning, implementation, analysis, and reporting of the project took place from September 1 to December 15, 2007.

The major findings of the study are reported below, based on feedback from both surveys and the two focus group interviews.

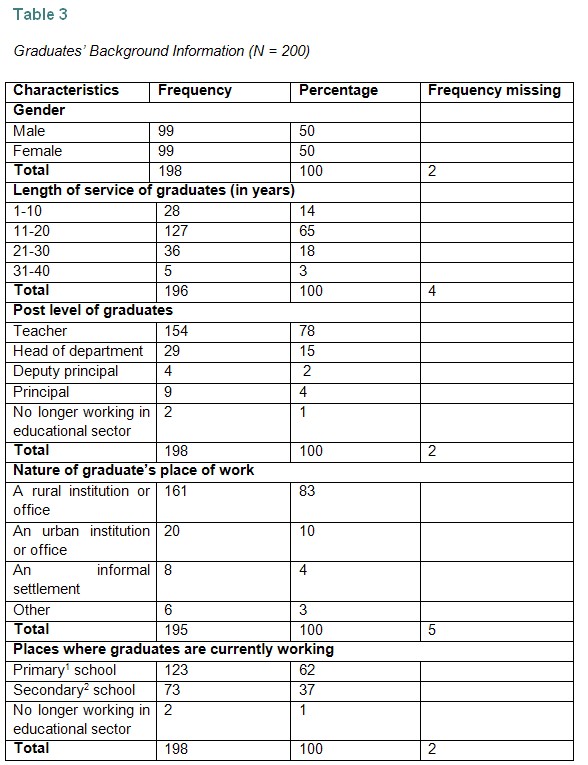

Table 3 shows the response of the graduates to the contextual section of the questionnaire.

The contextual data show that the 200 graduates who participated in the research project were split exactly evenly by gender. This deviates from the norm, which is that most distance education students are female (National Center for Education Statistics, 2003). The deviation may reflect who chose to respond, because available data from the Unit for Distance Education consistently show that more female than male students are enrolled in distance education programs (UP, 2007). Of the 196 graduates who reported their length of service, 28 (14%) had been in the teaching service for 1 to 10 years, 127 (65%) for 11 to 20 years, 36 (18%) for 21 to 30 years, and 5 (3%) for 31 to 40 years. These data may suggest that most educators pursue further study after 11 to 20 years in the service. In addition, the data support the claim that distance education students are already in professions and are economically active (Braimoh & Osiki, 2008; Labuschagne & Mashile, 2005).
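The methodology notes that the quantitative items were summarized as frequencies and cumulative percentages. As an illustration only (not the Statistics Department’s actual procedure), the Python sketch below tabulates the years-of-service counts reported above. Because the four counts sum to 196 rather than 200, it assumes the percentages were computed over the graduates who answered this item; on that base it reproduces the reported 14%, 65%, 18%, and 3%.

```python
# Years in the teaching service, as reported by Survey one respondents.
# Counts are from the text; the band labels are illustrative.
service_bands = {
    "1-10 years": 28,
    "11-20 years": 127,
    "21-30 years": 36,
    "31-40 years": 5,
}

# Assumption: percentages use only those who answered this item as the base.
answered = sum(service_bands.values())

rows = []  # (band, count, percent, cumulative count, cumulative percent)
cumulative = 0
for band, count in service_bands.items():  # dicts keep insertion order
    cumulative += count
    rows.append((
        band,
        count,
        round(100 * count / answered),
        cumulative,
        round(100 * cumulative / answered),
    ))

print(f"Respondents answering: {answered}")
for band, count, pct, cum, cum_pct in rows:
    print(f"{band:<12} {count:>4} ({pct:>2}%)  cumulative {cum:>4} ({cum_pct:>3}%)")
```

Under this assumption, the cumulative column shows that 79% of those who answered had served for 20 years or fewer.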

Of the graduates who responded to the question on the nature of their workplace, the majority (161, 83%) work in a rural institution or office, 20 (10%) in an urban institution or office, and 8 (4%) in an informal settlement, while 6 (3%) indicated ‘other.’ This finding supports other data from the Unit for Distance Education showing that the program’s catchment areas are predominantly rural (UP, 2006). It is also consistent with past research on the link between education and rural development, which leads to greater economic growth (Beaulieu & Gibbs, 2005).

In terms of occupation, most respondents to the first survey (154, 78%) are teachers. The majority of respondents work in primary schools (123, 62%), while 73 (37%) work in secondary schools. Of all the respondents, 29 (15%) are heads of departments, four (2%) deputy principals, and nine (4%) principals, while two (1%) were no longer working in the education sector. These findings suggest that the majority of students enrolled for the ACE (Education Management) program are from the primary school sector.

Contextual information on the principals/line managers was requested in order to ascertain how long they had been working with the graduates and to determine their job positions. Of the 13 principals/line managers who returned the survey, 6 (46%) were working in primary schools at the time of the investigation, 6 (46%) in secondary schools, and 1 (8%) in a non-education workplace. In terms of job position, 9 (69%) were principals, while the remaining 4 (31%) were a deputy principal, a head of department, a lead teacher, and a manager. At the time of the investigation, 3 principals/line managers (30%) had been working with the graduate(s) for 1 to 4 years, 7 (60%) for 5 to 9 years, and 1 (10%) for 12 years.

Adults undertake study by distance learning for diverse reasons. In particular, the opportunity for continuous self-improvement can increase teacher motivation (Chung, 2005). One of the graduates who participated in the focus group interview gave the following reasons:

TE: I believe that learning is a life-long process. One, I studied for the certificate in order to obtain more skills and to develop myself. Also, one can motivate others to go further with their studies.

Other reasons given by participants included financial benefits, the need to stay abreast of changes in the South African education system, the quest for knowledge of education law, the desire to improve leadership skills or to compete for leadership positions, and the desire for promotion. All these reasons, as well as the need to combine family and career responsibilities, concur with the findings of past research (Aluko, 2007; O’Lawrence, 2007).

Graduates’ experience of the program tallied strongly with their expectations. Three graduates did not respond to the question on whether their expectations had been met. Of the 197 who did respond, 179 (91%) responded positively while 18 (9%) indicated otherwise. Although this research study is not a “reaction” survey or “happy sheet” (Kirkpatrick, 1996), findings in this section can be related to the first level of Kirkpatrick’s model, reaction, which probes customer satisfaction. Clark (2009) is of the opinion that this level is the most frequently used form of evaluation because it is the easiest to measure, but that it provides the least valuable data. It has also been suggested that satisfaction is not necessarily related to effective learning and that some discomfort in the learning process is sometimes essential for deep learning to occur (Tamkin, Yarnall, & Kerrin, 2002). In the researcher’s opinion, a longitudinal study may be needed to investigate further why the expectations of these 18 (9%) graduates were not met.

Personal development of graduates.

On the topic of the personal development of graduates, one of the interviewees said this:

SJ: … the program has helped me as we are working with people, especially at the school I’ve got problem attitude to tolerate others, and also to work with them in a very good way. Now I listen to people, and I respect their views…

His new approach has improved his relationships with learners, parents, and other staff members.

Comments by other graduates on personal development included the following: an improved ability to take in new ideas and make sense of them (one graduate said, “…the ACE changed my way of perceiving things…”); an improved ability to think through new ideas (one commented, “It has improved my thinking ability. I am able to analyze challenging ideas”); and a better understanding of the roles of every stakeholder at the school (according to the graduates, they now understand what share of the work is expected of each stakeholder). Other positive comments included improved time management; greater confidence, which has enabled graduates to articulate newly formed ideas publicly (e.g., at staff meetings) and to write about them; the ability to work with colleagues as a team; and the ability to work under pressure.

Effects of program on graduates’ classroom practice.

Concerning the effects of the program on graduates’ classroom practice, many attested to improvement in their teaching methods, their time management skills, their classroom control, and their relationships with learners and parents. As one said, “My relationship with learners has improved.”

The principals supported these views, as one principal’s comment about a graduate-participant shows:

JL: ...in terms of classroom practice, what I’ve noticed is that… he can handle discipline very well... And secondly, the subject matter… He used to (organize) workshops (for) other educators in preparation of learning programs and work schedule.

This study did not examine the impact of the improvement of teachers’ professional practice on learners’ performance. Nevertheless, there is ample evidence in the literature (Darling-Hammond, 2000; Rice, 2003) to suggest that teachers’ attributes and qualifications determine student achievement to a great extent.

Effects of program on graduates’ ability to adapt and implement new policies.

Comments from both graduates and principals who participated in the study indicated that the program has had a tremendous effect on the graduates’ ability to adapt to and implement new policies. One graduate said, “I never thought I would accept the implementation of OBE³, but now I like and appreciate the implementation.” Two other graduates offered these comments:

SJ: Yes… One has been exposed to several acts and policies in ACE, so that when the new ones come, you find that you have got a very good background. ...it helps us to manage change and to adapt easily to anything that comes. I realize that if one has not received that kind of education, you will be negative and resist new things.

MV: I would like to comment on the seven roles of an educator… which everybody would agree with me that when you assess… with this IQMS⁴, you have those seven roles. Now the difference between us and other educators is that they cannot interpret... That is why IQMS is still a problem. Many teachers are resisting it because they do not understand the language and the terminologies associated with the IQMS. But with us, it’s easy because we know the seven roles. If I have to assess my peers, I know exactly what to assess them on.

According to one principal, even though the national Department of Education is trying its best to disseminate information to teachers, many teachers without this ACE qualification tend to associate legislation with lawyers and courts, and therefore resist new policies. Another principal stressed that “The level of awareness is also very important… because you cannot interpret or implement if you are not aware.” This supports the view that management training programs are important in preparing teachers for the roles expected of them (DoE, 2000; Martin, 2004).

Effects of program on graduates’ ability to play leadership roles.

In response to the question on the ability of graduates to play leadership roles, the majority of the principals stressed that graduates in their schools are indeed playing a variety of leadership roles. This has led to graduates supporting the school management teams, the school governing bodies, and the principals in their schools. Other graduates have been promoted to head of department or vice principal as a result of the program. A major reason for these advances is the level of confidence displayed by graduates. Many graduates’ colleagues are ready to take instruction from them because they look up to them as role models.

However, a small number of graduate-participants (four, 2%) have not been able to play this expanded leadership role, either because they have not managed to secure a promotion post or because of the rigidity of their principals. The latter finding supports the concern, already identified by scholars, that evaluators of training programs need to consider the factors that can inhibit the transfer of learning to the workplace (Tamkin, Yarnall, & Kerrin, 2002).

Graduates’ involvement in research and developmental projects.

Most of the graduate-participants have been empowered to become involved in research or other developmental projects. Findings from the first survey indicated that of the 157 graduate-participants who responded to the question on whether they have been involved in research projects or not, only 18 (12%) have either not been involved in any research or could not do so because of the rigidity of their leaders. On the other hand, 139 (88%) have initiated and taken lead roles in researching and implementing various school and community projects in areas such as HIV/AIDS, safety, greenery, teenage pregnancy, needy children, counseling, drug-abuse awareness, alternatives to corporal punishment, and extracurricular activities. In addition, some have attracted funding to their schools, while some (as parents) were serving on the governing bodies of their children’s schools at the time of this investigation.

Exposure of graduates to wider career opportunities and lifelong learning.

One effect of the study program is that graduates have been exposed to wider career opportunities. Graduates commented, for example, “It has opened up to me a world of opportunities” and “It has built my confidence to apply for promotional posts.” This finding tends to support the view that exposure to wider career opportunities can lead to promotion (Davidson-Shivers et al., 2004), though not in all cases (Delaney, 2002).

Since completing the study program, 16 graduates (8%) have completed a higher degree in education, 126 (64%) were studying for a higher degree in education at the time of the study, 9 (5%) were studying in a different discipline, and only 47 (24%) were not studying further. Two graduates did not respond to this question. In addition, all the graduates who participated in the first focus group interview had completed a higher degree, indicating that the program opens opportunities for lifelong learning after graduation (Belanger & Jordan, 2004). Furthermore, research shows that educational needs are becoming continuous throughout one’s working life, as labor markets demand knowledge and skills that require regular updating (O’Lawrence, 2007).
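The percentages in the paragraph above appear to be computed over the 198 graduates who answered the question, with the two non-respondents excluded from the denominator. The minimal Python sketch below assumes that denominator and reproduces the reported figures. The dictionary labels and the function name `percent_of_respondents` are illustrative and are not taken from the survey instrument.

```python
# Counts reported for graduates' further study after completing the ACE program.
# Assumption: the two graduates who did not answer are excluded from the
# denominator, which reproduces the published percentages.
further_study = {
    "completed a higher degree in education": 16,
    "studying for a higher degree in education": 126,
    "studying in a different discipline": 9,
    "not studying further": 47,
}


def percent_of_respondents(counts: dict[str, int]) -> dict[str, int]:
    """Share of respondents in each category, rounded to the nearest whole percent."""
    respondents = sum(counts.values())  # 198 for the data above
    return {label: round(100 * n / respondents) for label, n in counts.items()}


if __name__ == "__main__":
    for label, pct in percent_of_respondents(further_study).items():
        print(f"{label}: {pct}%")
```

Rounding each share independently gives 8%, 64%, 5%, and 24%, which sum to 101%. A small overshoot of this kind is expected when rounded percentages are reported.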

Effects of graduates’ knowledge on their colleagues at work.

Three-quarters of the participants (149, 75%) reported that they had shared their new knowledge and skills with their colleagues to a large extent, while 50 (25%) had done so to some extent. One way they did this was by calling colleagues’ attention to the legal implications of certain actions; for instance, colleagues had to be alerted to the legal implications of administering corporal punishment and to learners’ rights. Graduates also conducted workshops to inform their colleagues of current school policies and assisted school management in implementing new ideas. In addition, many of their colleagues have been able to emulate graduates in terms of regular pupil class attendance and classroom management, which has led to some being recognized as “Best Educator” by the community. Many of their colleagues have also been motivated to further their studies.

Regarding the value of the study program for their practice, 116 (59%) graduates rated it as excellent, 50 (25%) as above average, 30 (15%) as satisfactory, and 4 (0.51%) as poor. On the whole, 129 (66%) felt that their schools or organizations had benefited from the program to a large extent, 65 (33%) said to some extent only, and 2 (1%) said not at all; 4 participants did not respond to the question.

All the principals attested that graduates have added value to the general quality of their schools, and some principals noticed differences between educators who have completed the study program and those who have not attended such programs. One may therefore conclude that the ACE program has, to a large extent, met the specified outcomes indicated earlier in the Research Design section. The value of the program, as indicated by both graduate- and principal-participants, is evident at levels two (learning), three (behavior), and four (results) of Kirkpatrick’s model. Learning is evident in the extent to which participants have changed their attitudes, improved their knowledge, and/or increased their skills as a result of attending the program. A change in behavior is evident in how they relate to colleagues, principals, and parents, and the results achieved by participants are evident in promotions, leadership positions, and improved quality of classroom practice. Clark (2009) indicates that these higher levels, although considerably more difficult to evaluate, provide more valuable data. Nevertheless, participants identified some areas of the study program that require improvement.

In this study, the major areas identified by graduates as requiring improvement include the following: the need to review some modules that were either repetitive in nature or contained low-level information on some topics; the non-practical nature of some aspects of the program, which prevented students from gaining hands-on experience; the omission of information on how to handle discipline in schools and how to handle resistance to change by long-service educators and school principals; and the need for the university to improve its administration and student support systems.

Some of the suggestions proffered by participants in this study may seem impractical in view of the distance mode of the program (for example, the inclusion of computer studies, when most students are from rural areas with little access to computers). Some of their suggestions are also beyond the scope of this study.

The following recommendations and suggestions were made at the time of this study: the introduction of subjects taught in schools as areas of specialization; the involvement of school principals in student assessment in order to expose students to the practical aspects of the program; and the review of certain modules so as to update, simplify, or make the learning material more user-friendly. Other suggestions included the introduction or review of research projects, which should take place in students’ schools irrespective of the type of school (i.e., primary or secondary); improvement of the existing student support structure; introduction of a workable structure to allow distance education students to contact one another; and making the program compulsory for all educators, especially those in management positions. This last suggestion emerged because the findings indicate that most principals who have not been exposed to this program find it difficult to accept changes suggested by educators who have completed it, which in some cases has led to the victimization of such graduates.

Regarding this last point, the government has since embarked on an ACE (Education Management and Leadership Development) program for practising and aspiring principals in order to provide them with a formal professional qualification and to provide an entry criterion to principalship (South African Qualifications Authority, 2008). It is hoped that principals will thus be enlightened as to the importance of the ACE (Education Management) program and, as a result, be able to work with their teachers to implement new ideas to bring improvement to their schools.

This research study was not primarily a reaction survey, which is the first level of Kirkpatrick’s model. However, inferences about whether the graduate-participants were satisfied with the study program may be drawn from the findings. The study also did not include the views of the learners taught by the graduate-participants. The findings may not be generalizable, because time constraints prevented the study from involving all the graduates of the program. Another caveat is that one cannot be sure that the positive changes in graduates, as attested to by all the participants, were necessarily due to the course of study they undertook through the university; other factors, such as graduates’ length of service and other in-service training programs, could have contributed to their improved performance.

Since this research study is limited in focus and scope, a longitudinal impact study of the revised academic program is recommended in order to make the findings more generalizable, because the present sample may not be representative of the behavior changes in all the graduates (Kirkpatrick, 1996). Future studies should also investigate the relationship between teachers’ professional development and learners’ performance, and measure the influence of such teachers on their colleagues. Surveys and interviews should be complemented by observation of practice, involvement of the students taught by identified graduates, and perhaps a before-and-after research design.

A search of the literature revealed a paucity of research on the impact of distance education programs on graduates and their workplaces, so more studies of this nature are clearly required. The implications of this study’s findings for the research, theory, and practice of distance education point to four important reasons for undertaking more research in the area of program evaluation.

First, such research would help practitioners of distance education programs to determine the extent of learning and behavior change resulting from academic programs, the general impact of study programs on those who have graduated, and the impact on the quality of their work once employed. Second, program organizers could identify and correct any lapses inherent in their distance education program offerings. Third, future program evaluation research would help to enhance the quality of distance education programs, especially in developing countries, where most distance education programs still depend on first-generation delivery modes. Quality enhancement would help justify the considerable expenditure that distance students and other stakeholders incur on such programs. Fourth, findings from such research could be used to motivate funding from the government and other interested agencies.

The inclusion of focus group interviews (qualitative tools) in future research studies would address the concern that South Africa needs to avoid some of the mistakes that have been made internationally (Kilfoil, 2005). An example of ill-advised practice is encouraging universities to report simple, readily available quantitative measures at the expense of complex qualitative assessments (Morrison, Magennis, & Carey, 1995).

Findings from this study strongly suggest that alumni may be regarded as a source of well-informed quantitative and qualitative data, a claim supported by Fahy et al. (2007). This study has demonstrated that the program under investigation appears to have positively impacted the professional practice of the graduate-participants, as there is a strong relationship between completion of the program and improvement in their professional practice. Furthermore, participants attested that differences exist between the professional practice of educators who have completed the program and those who have not. These differences include the understanding, interpretation, and implementation of policies and the ability to handle research and development projects. In addition, there is ample evidence that graduates have better career and education opportunities. Finally, graduates exert positive effects on their colleagues’ professional practice and serve as role models who motivate colleagues to further their own studies. Based on these findings, one could also posit that the four levels identified by Kirkpatrick (1996) in his model (reaction, learning, behavior, and results) are key elements in evaluating the extent to which a program has met the intentions and expectations of key stakeholders.

Program evaluation is a useful way of assessing how public service programs are working in the greater public interest (Newcomer & Allen, 2008), and it is an important form of institutional accountability (Fahy, Spencer, & Halinski, 2007). Hence institutions have a duty to pay more attention to this practice. The researcher, in support of Kirkpatrick (1996), is of the view that evaluation mechanisms should be built into every academic program at the planning and implementation stages.

Nevertheless, it has been stressed that self-evaluation is rarely the best way to determine whether a person’s behavior (the third level in Kirkpatrick’s model) has actually changed as a result of a training program (Ford & Weissbein, 1997). At the same time, research has shown that supervisors may have emotional links (either positive or negative) with participants, which creates potential obstacles and bias (Galloway, 2005). This is supported by the fact that some of the graduate-participants in this study refused the researcher permission to contact their principals (probably because of a poor relationship between them). Others indicated that principals and line managers sometimes become stumbling blocks in implementing innovations learnt from courses they have undertaken, even when such innovations might improve the school.

Wisan, Nazma, and Pscherer (2001), who compared the quality of online and face-to-face instruction, suggest that the results of evaluating distance education programs may be helpful in course design and in the development of student support services. In support of the importance of program evaluation, it is of interest to note that the University of Pretoria has since reconceptualized and improved the design of the ACE (Education Management) program based on three levels of planning and development: program design, course design, and materials development (Welch & Reed, 2005; UP, 2008; Fresen & Hendrikz, 2009). This involved developing extensive academic support structures to help students succeed in their studies, including an orientation program, more contact sessions, tutorial letters, tutorial sessions, assignments, SMS messages, and an academic enquiry service. The re-launched ACE (Education Management) study program was fully accredited by the Higher Education Quality Committee in March 2008 (Mays, 2008). As a proactive measure, the university has also introduced a comprehensive research project to trace the overall success of the upgraded program.

Abernathy, D. J. (1999). Thinking outside the evaluation box. Training & Development, 53(2), 18-23.

Aldrich, C. (2002). Measuring success: In a post-Maslow/Kirkpatrick world, which metrics matter? Online Learning, 6(2), 30-32.

Alkin, M. C., & Christie, C. A. (2004). An evaluation theory tree. In M. C. Alkin (Ed.), Evaluation roots: Tracing theorists’ views and influences. Thousand Oaks, CA: Sage.

Aluko, R. (2007). A comparative study of distance education programs assessed in terms of access, delivery and output at the University of Pretoria. Unpublished doctoral thesis, Department of Curriculum Studies, University of Pretoria, Pretoria.

Beaulieu, L. J., & Gibbs, R. (Eds.) (2005). The role of education: Promoting the economic and social vitality of rural America. Mississippi State: Southern Rural Development Centre and USDA, Economic Research Service.

Belanger, F., & Jordan, D. H. (2004). Evaluation and implementation of distance learning: Technologies, tools and techniques. Hershey, PA: Idea Group.

Boyle, M., & Crosby, R. (1997). Academic program evaluation: Lessons from business and industry. Journal of Industrial Teacher Education, 34(3). Retrieved June 02, 2009, from http://scholar.lib.vt.edu/ejournals/JITE/v34n3/AtIssue.html

Braimoh, D., & Osiki, J. O. (2008). The impact of technology on accessibility and pedagogy: The right to education in Sub-Saharan Africa. Asian Journal of Distance Education, 1(6), 53-62.

Champagne, N. (2006). Using the NCHEC areas of responsibility to assess service learning outcomes in undergraduate health education students. American Journal of Health Education, 37(3), 137-146.

Chung, F. K. (2005, August). Challenges for teacher training in Africa with special reference to distance education. Paper presented at the DETA Conference, Pretoria.

Clark, D. R. (2004). Kirkpatrick’s four-level evaluation model. Retrieved June 02, 2009, from http://www.nwlink.com/~donclark/hrd/isd/kirkpatrick.html

Clark, D. R. (2009). Flipping Kirkpatrick. Retrieved June 02, 2009, from http://bdld.blogspot.com/2008/12/flipping-kirkpatrick.html

Cohen, L., Manion, L., & Morrison, K. (2000). Research methods in education (5th ed.). London: Routledge.

College of the Canyons. (2003). Nursing alumni surveys: 2002 graduates. Retrieved January 10, 2009, from www.eric.ed.gov/ERICWebPortal/recordDetail?accno=ED482189

Council on Higher Education [CHE]. (2005). Higher Education Quality Committee. Retrieved January 01, 2009, from http://www.che.ac.za/heqc/heqc.php

Cyrs, T. E. (1998). Evaluating distance learning programs and courses. Retrieved July 16, 2008, from http://www.zianet.com/edacyrs/tips/evaluate_dl.htm

Darling-Hammond, L. (2000). Teacher quality and student achievement: A review of state policy evidence. Education Policy Analysis Archives, 8(1), 1-44.

Davidson-Shivers, G. V., Inpornjivit, K., & Seller, K. (2004). Using alumni and student databases for program evaluation and planning. College Student Journal, 38(4), 510-520. Retrieved January 10, 2009, from www.eric.ed.gov/ERICWebPortal/recordDetail?accno=EJ708791

Delaney, A. M. (2002, June). Discovering success strategies through alumni research. Paper presented at the Annual Forum for the Association for Institutional Research, Toronto, Ontario.

Department of Education (DoE). (2000). Norms and standards for educators. Pretoria: DoE.

Department of Education (DoE). (2001). National plan for higher education. Pretoria: DoE.

Department of Education (DoE). (2005). Revised national curriculum statement. Pretoria: DoE.

Department of Education (DoE). (2006). The national policy framework for teacher education and development in South Africa: “More teachers; better teachers.” Pretoria: DoE.

Distance Education and Training Council. (2001). DETC degree programs: Graduates and employers evaluate their worth. Retrieved February 20, 2009, from www.detc.org/downloads/2001%20DETC%20Degree%20Programs%20Survey.PDF

Fahy, P. J., Spencer, B., & Halinski, T. (2007). The self-reported impact of graduate program completion on the careers and plans of graduates. Quarterly Review of Distance Education, 9(1), 51-71.

Ford, J. K., & Weissbein, D. A. (1997). Transfer of training: An updated review and analysis. Performance Improvement Quarterly, 10(2), 22-41.

Fresen, J. W., & Hendrikz, J. (in press). Designing to promote access, quality and student support in an advanced certificate programme for rural teachers in South Africa. International Review of Research in Open and Distance Learning. African Regional Special Issue.

Galloway, D. L. (2005). Evaluating distance delivery and e-learning: Is Kirkpatrick’s model relevant? Performance Improvement, 44(4).

Grammatikopoulos, V., Papacharisis, V., Koustelios, A., Tsigilis, N., & Theodorakis, Y. (2004). Evaluation of the training program for Greek Olympic education. Retrieved June 02, 2009, from http://www.emeraldinsight.com/10.1108/09513540410512181

Guba, E. G., & Lincoln, Y. S. (1989). Fourth generation evaluation. Thousand Oaks, CA: Sage.

Guba, E. G., & Lincoln, Y. S. (2001). Guidelines and checklist for constructivist (a.k.a. fourth generation) evaluation. Retrieved June 18, 2008, from http://www.wmich.edu/evalctr/checklists/constructivisteval.pdf

Hardy, M., & Bryman, A. (2004). Handbook of data analysis. Thousand Oaks, CA: Sage.

Higher Education Quality Committee (HEQC). (2004). Institutional audit framework. Retrieved November 19, 2008, from http://www.che.ac.za/documents/d000062/CHE_Institutional-Audit-Framework_June2004.pdf

Higher Education Quality Committee (HEQC). (2009). Higher Education Quality Committee. Retrieved February 06, 2009, from http://www.che.ac.za/about/heqc/

Keegan, D. (2008). The impact of new technologies on distance learning students. Retrieved May 10, 2008, from http://eleed.campussource.de/archive/4/1422/

Kilfoil, W. R. (2005). Quality assurance and accreditation in open distance learning. Progressio, 27(1&2), 4-13.

Kirkpatrick, D. L. (1994). Evaluating training programs: The four levels. San Francisco: Berrett-Koehler Publishers.

Kirkpatrick, D. L. (1996). Evaluating training programs: The four levels. San Francisco: Berrett-Koehler Publishers.

Konza, D. (1998). Ethical issues in qualitative research: What would you do? Retrieved May 18, 2009, from http://www.aare.edu.au/98pap/kon98027.htm

Labuschagne, M., & Mashile, E. (2005). A case study of factors influencing choice between print and on-line delivery methods in a distance education institution. South African Journal of Higher Education, 19(3), 158-171.

Lee, S. H., & Pershing, J. A. (2002). Dimensions and design criteria for developing training reaction evaluations. Human Resource Development International, 5(2), 175-197.

Lockee, B., Moore, M., & Burton, J. (2002). Measuring success: Evaluation strategies for distance education. Educause Quarterly, 1, 20-26.

Martin, S. D. (2004). Finding balance: Impact of classroom management conceptions on developing teacher practice. Teaching and Teacher Education, 20(5), 405-422.

Mays, T. (2008). UP takes time to design [Electronic version]. South African Institute for Distance Education Newsletter, 14(2).

Moore, M. (1999). Editorial: Monitoring and evaluation. The American Journal of Distance Education, 13(2). Retrieved January 10, 2009, from http://www.ajde.com/Contents/vol13_2.htm#editorial

Morrison, H. G., Magennis, S. P., & Carey, L. J. (1995). Performance indicators and league tables: A call for standards. Higher Education Quarterly, 49(2), 28-45.

National Centre for Education Statistics (NCES). (2003). A profile of participation in distance education: 1999–2000. Washington, DC: US Department of Education.

Naugle, K. A., Naugle, L. B., & Naugle, R. J. (2000). Kirkpatrick’s evaluation model as a means of evaluating teacher performance. Retrieved March 05, 2009, from http://www.highbeam.com/doc/1G1-66960815.html

Newcomer, K. E., & Allen, H. (2008). Adding value in the public interest. Retrieved March 05, 2009, from www.naspaa.org/accreditation/standard2009/docs/AddingValueinthePublicInterest.pdf

O’Lawrence, H. (2007). An overview of the influences of distance learning on adult learners. Journal of Education and Human Development, 1(1).

Pretorius, F. (2003). Quality enhancement in higher education in South Africa: Why a paradigm shift is necessary: Perspectives in higher education. South African Journal of Higher Education, 17(3), 137-143.

Punch, K. (2005). Introduction to social research: Quantitative and qualitative approaches (2nd ed.). London: Sage.

Reeves, T. C., & Hedberg, J. G. (2003). Interactive learning systems evaluation. Englewood Cliffs, NJ: Educational Technology Publications.

Rice, J. K. (2003). Teacher quality: Understanding the effectiveness of teacher attributes. The Economic Policy Institute. Retrieved May 16, 2008, from http://eric.ed.gov/ERICWebPortal/custom/portlets/recordDetails/detailmini.jsp?_nfpb=true&_&ERICExtSearch_SearchValue_0=ED480858&ERICExtSearch_SearchType_0=no&accno=ED480858

Robinson, B., & Latchem, C. (Eds.) (2002). Teacher education through open and distance learning. London: Routledge.

Shulock, N. B. (2006). Editor’s notes. New Directions for Higher Education, 135, 1-7.

South African Qualifications Authority. (2008). Advanced certificate: Education school management and leadership. Retrieved June 10, 2008, from http://regqs.saqa.org.za/showQualification.php?id=48878

Stols, G., Olivier, A., & Grayson, D. (2007). Description and impact of a distance mathematics course for grade 10 to 12 teachers. Pythagoras, 65 (June), 32-38.

Tamkin, P., Yarnall, J., & Kerrin, M. (2002). Kirkpatrick and beyond: A review of models of training evaluation (Report 392). Brighton, UK: Institute for Employment Studies. Retrieved April 10, 2009, from http://www.employment-studies.co.uk/pubs/summary.php?id=392&style=print

Tarouco, L., & Hack, L. (2000). New tools for assessment in distance education. Retrieved June 02, 2009, from http://www.pgie.ufrgs.br/webfolioead/artigo1.html

United Nations Educational, Scientific and Cultural Organisation (UNESCO). (2001). Teacher education through distance learning: Technology, curriculum, evaluation, cost. Paris: Education Sector, Higher Education Division, Teacher Education Sector.

University of Pretoria (UP). (2009). Advanced certificate in education: Education management - distance education program. Pretoria: UP.

Welch, T. T., & Reed, Y. (Eds.) (2005). Designing and delivering distance education: Quality criteria and case studies from South Africa. Johannesburg: National Association of Distance Education and Open Learning in South Africa (NADEOSA).

Wisan, G., Nazma, S., & Pscherer, C. P. (2001). Comparing online and face to face instruction at a large virtual university: Data and issues in the measurement of quality. Paper presented at the annual meeting of the Association for Institutional Research, Long Beach, CA.

1. Refers to graduates who taught specific learning areas in primary schools, based on their areas of specialization.

2. Refers to graduates who taught specific learning areas in secondary schools, based on their areas of specialization.

3. Outcomes Based Education

4. Integrated Quality Management Systems (for school-based educators)