Figure 1. The MOOC platform.

From “Learning Analytics for Instructional Design, Practice, and Research,” Zhejiang University, hosted through icourse, 2020 (https://www.icourse163.org/course/ZJU-1206577810). In the public domain.

Volume 21, Number 3

Fan Ouyang, Xu Li, Dan Sun, Pengcheng Jiao, and Jiajia Yao

Zhejiang University, China

The development of massive open online courses (MOOCs) has proceeded through three generations, and in all three, online discussions have been considered a critical component. Although discussions in MOOCs have the potential to promote learning, instructors have faced challenges facilitating learners’ knowledge inquiry, construction, and management through social interaction. In addition, understanding various aspects of learning calls for more mixed-method studies to provide both quantitative, generalized analysis and qualitative, detailed descriptions of learning. This study fills these practice and research gaps. We designed a Chinese MOOC with the support of a pedagogical strategy, a learning analytic tool, and a social learning environment in order to foster learner engagement in discussions. Mixed methods were used to explore learners’ discussion patterns, perceptions, and preferences. Results indicated that learners demonstrated varied patterns, perceptions, and preferences, which implies a complex learning process due to the interplay of multiple factors. Based on the results, this research provided theoretical, pedagogical, and analytical implications for MOOC design, practice, and research.

Keywords: MOOCs, knowledge inquiry and construction, mixed methods, discussion patterns, learning analytics tools

As one of the prevalent online and distance education modes, massive open online courses (MOOCs) have developed rapidly worldwide (e.g., Coursera, EdX, icourse). Although MOOCs originally focused on social, distributed, learner-centered learning (Cormier & Siemens, 2010; Siemens, 2005), many MOOCs have maintained an instructor-directed, lecture-based teaching mode, which favors one-way knowledge transmission (Gillani & Eynon, 2014; Toven-Lindsey, Rhoads, & Lozano, 2015). MOOCs should continually endeavor to promote social, collaborative learning, as the original aim, since from a sociocultural perspective, learning occurs when learners interact with people, resources, and technologies in socially situated contexts (Bereiter, 2002). To achieve this purpose, MOOC instructors have made extensive use of discussion forums as the main means for interactions to foster learners’ knowledge sharing, inquiry, and construction (Cohen, Shimony, Nachmias, & Soffer, 2019; Gillani & Eynon, 2014; Wise & Cui, 2018).

Although MOOC discussions have the potential to promote large-scale communication, in practice, instructors have faced challenges facilitating social, collaborative learning in MOOCs (Cohen et al., 2019; Gillani & Eynon, 2014). Empirical studies of MOOC discussions have consistently shown a lack of reciprocal interaction among learners, a low level of continued participation, and a lack of knowledge contributions (Brinton et al., 2014; Cohen et al., 2019; Gillani & Eynon, 2014). Previous research has also shown that discussion design, instructor facilitation, and technological affordances significantly influence learners’ engagement in MOOCs (Cohen et al., 2019; Ouyang & Scharber, 2017; Wise & Cui, 2018). Because of this complexity, research on MOOCs needs to include more mixed-method studies to provide both quantitative, generalized analysis and qualitative, detailed descriptions of learning. However, relevant work in MOOCs has encountered practical challenges in fostering social, collaborative learning as well as research challenges in fully investigating various aspects of learning.

To address these practical and research challenges, this work used action research with two purposes: (a) to foster social, collaborative learning in MOOCs through the design and facilitation of a Chinese MOOC; and (b) to fully understand learners’ MOOC discussions through mixed-method research. Based on the results, we propose theoretical, pedagogical, and analytical implications for the future design, practice, and research of MOOCs.

The development of MOOCs has proceeded through three generations, and in all three, social, interactive, online discussions have been a critical component. In the beginning, MOOCs were grounded in connectivism theory. In fact, the first cMOOC, titled Connectivism and Connective Knowledge, debuted to create a distributed learning environment across varied platforms (e.g., forums, blogs, wikis, social media), in order to help learners aggregate resources, share thoughts, and manage knowledge (Downes, 2011). Next, rooted in cognitive behaviorism theory (Almatrafi & Johri, 2019; Joksimović et al., 2018), the second generation of MOOCs (i.e., xMOOCs) aimed to extend the subject matter content of campus-based, university-level courses to a larger population. The discussion forum was the main means whereby learners shared ideas and opinions, summarized and reflected on others’ ideas, and constructed new meanings together (Wise & Cui, 2018). Third, grounded in social-cognitive constructivism, an emerging generation of MOOCs (e.g., hybrid MOOCs) combined traditional single-platform MOOCs with social, networked learning, and integrated content-centric instruction with social learning activities (García-Peñalvo, Fidalgo-Blanco, & Sein-Echaluce, 2018). In summary, one of the primary goals of MOOCs is to foster knowledge inquiry, construction, and management through social, distributed interactions.

Previous MOOC research has shown that content-related pedagogical strategies (Wise & Cui, 2018), learning analytics tools (Yousef, Chatti, Schroeder, & Wosnitza, 2014), and social, connected learning environments (Cormier & Siemens, 2010) are primary means to foster learning in MOOCs. For example, Gillani and Eynon (2014) used a case-study, inquiry-based strategy in a Coursera MOOC to promote weekly discussions around real-world business problems. Fu, Zhao, Cui, and Qu (2017) developed a visual learning analytics tool, called iForum, that allowed for the interactive exploration of heterogeneous MOOC forum data, in order to make users aware of discussion patterns. Rosé et al. (2015) designed a social, individualized, self-directed learning layer for a traditional, scripted xMOOC, supported with help-seeking and collaborative-reflection functions, through which learners could seek help from peers, create reflection discussions together, and develop social learning experiences in the MOOC forum. In summary, pedagogical strategies, online tools, and social learning environments have all been used to foster MOOC learners’ participation, engagement, and reflection.

However, the study of MOOCs has faced practical and research problems that need to be further addressed. First, from a practical perspective, although various design, pedagogical, and technological affordances have been used, there has been a lack of reciprocal interaction, continued participation, and knowledge contributions in MOOCs. For example, Cohen et al. (2019) found that only 8% of learners stayed for the entire MOOC, and a very small portion actively participated and collaborated in the forums. Gillani and Eynon (2014) found that learners started off with a high level of participation in online discussions; over time, however, their commitment to these conversations significantly decreased. In addition, Brinton et al. (2014) concluded that a substantial portion of discussions in MOOCs were not directly course-related. Consistent with Brinton et al. (2014), Wise and Cui (2018) found that a large proportion of discussions in MOOCs was not content-related, idea-centered, or knowledge-based. Last but not least, MOOC learners have demonstrated different participation patterns (Cohen et al., 2019), conversation structures (Wise & Cui, 2018), and linguistic features (Dowell et al., 2015), due to their diverse backgrounds, learning interests, and ways of communicating. Overall, MOOC instructors have faced challenges fostering social, collaborative learning in discussions, as these are influenced by multiple, complicated factors (e.g., course design, pedagogy, tools, learner backgrounds, characteristics, and goals).

Second, from a research perspective, researchers have faced challenges investigating the various aspects of learning in MOOCs, due to the complex interplay of learner interaction, course design, and online technologies. Although several research methods have been used to investigate learning in MOOCs, most studies have used quantitative, algorithm-based methods to examine learners’ knowledge mastery, measure retention or drop-out rates, and predict performance (Joksimović et al., 2018). The relevant literature has provided a high-level snapshot of learning in MOOCs as a generalized, summative endeavor, but has left learners’ knowledge inquiry and construction poorly understood. It is necessary to apply a more holistic, mixed-method approach to understand and interpret individual learners’ cognitive inquiry as well as group knowledge construction in MOOCs (DeBoer, Ho, Stump, & Breslow, 2014; Gillani & Eynon, 2014; Joksimović et al., 2018). Echoing this trend, Yang, Wen, Kumar, Xing, and Rosé (2014) used machine learning techniques to model the emerging social and thematic structures in MOOC discussion forums. Further, these authors used qualitative post-hoc analysis to illustrate the relationship between the learners’ expressed motivations regarding course participation and their cognitive engagement with the course materials. Overall, MOOC research calls for more mixed-method studies to provide both quantitative, generalized analysis and qualitative, detailed descriptions of learning in MOOCs.

This study used action research to address the practical and research challenges. First, from a practical perspective, we used a combination of a pedagogical strategy, a learning analytics tool, and a social learning environment to improve learners’ engagement in a Chinese MOOC. Second, from a research perspective, we used mixed methods (i.e., social network analysis, content analysis, social-cognitive network visualization, thematic analysis, and thick description) to fully investigate the representative learners’ discussion patterns, perceptions, and preferences. Based on the empirical research results, we propose theoretical, pedagogical, and analytical implications for MOOC design, practice, and research.

The purpose of this research study was to address a practical challenge (i.e., fostering social, collaborative learning in MOOC discussions) as well as a research challenge (i.e., understanding various aspects of learning in MOOC discussions) through action research (Carr & Kemmis, 1986). First, we applied a combination of knowledge-construction pedagogy, a learning analytics tool, and a social learning environment to design and facilitate a Chinese MOOC. Then, in the empirical research, we adopted mixed methods to investigate the social, cognitive, and perceived perspectives of learning in this Chinese MOOC’s discussions. The research question for this study was: What were learners’ patterns, perceptions, and preferences in the MOOC’s discussions?

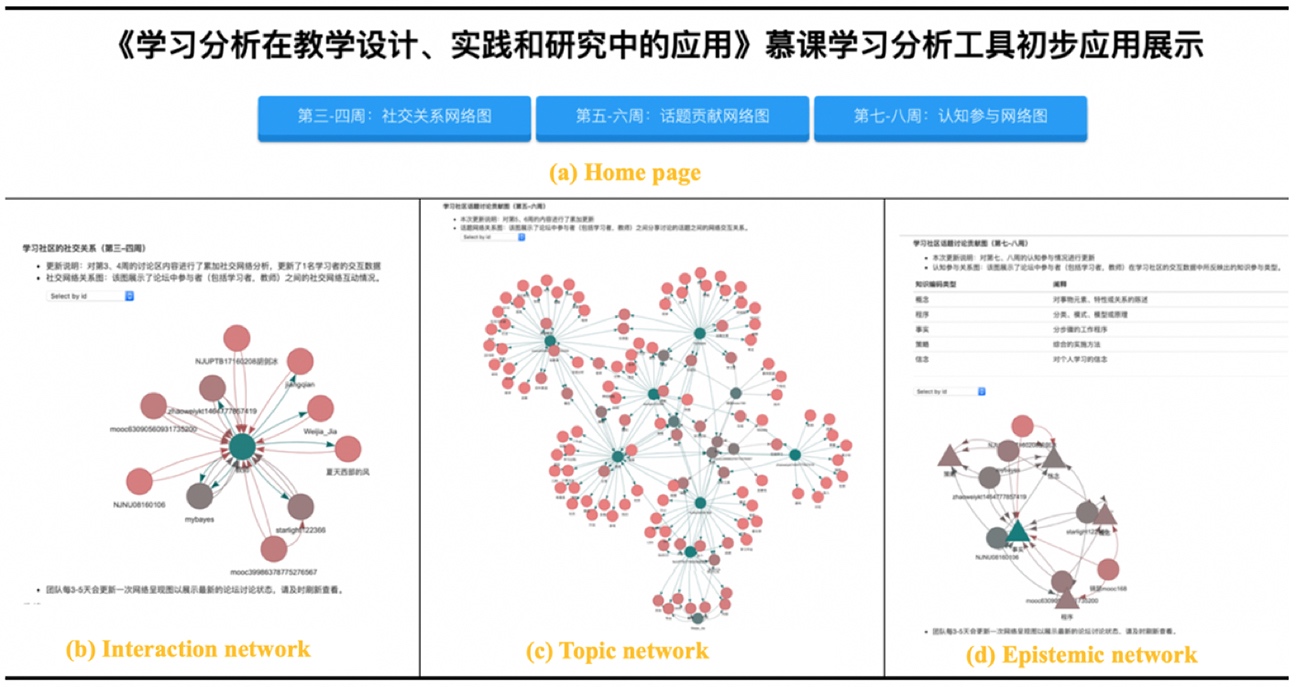

Our research context was an eight-week Chinese MOOC titled Learning Analytics for Instructional Design, Practice, and Research, designed and facilitated by the first author (the instructor), and hosted on icourse, China’s largest MOOC platform (see Figure 1). Due to local regulations, all Chinese MOOCs on the icourse platform must be structured as lecture-based xMOOCs, with the purpose of extending top-university courses to a larger Chinese population (McConnell, 2018). Following this regulation, the instructor made pre-recorded videos to introduce course content, and designed readings, quizzes, and other assignments (see Figure 1). This MOOC’s content included learning and instructional theories, learning analytics concepts, techniques and tools, case studies, and R programming practices. This research was conducted during the first iteration of this MOOC from November 2019 to December 2019; about 850 online learners enrolled in this iteration.

We used a combination of a pedagogical strategy, a learning analytics tool, and a social learning environment to foster learner engagement in discussions. First, we used a knowledge-construction pedagogical strategy and related prompts, such as sharing and comparing information, elaborating on opinions, exploring dissonance among ideas, negotiating meanings, and synthesizing knowledge (see Figure 1). Second, from a technological perspective, we designed and developed a student-facing learning analytics tool to demonstrate learners’ discussion processes with the interaction, topic, and epistemic networks (see Figure 2). The interaction network demonstrated learners’ social interactions with others; the topic network demonstrated learners’ use of keywords; and the epistemic network demonstrated five dimensions (i.e., concept, procedure, fact, strategy, and belief) shown by the learners in their posts. This analytics tool was hosted as a Web page and embedded in the course discussion forum. Finally, we built a social learning environment through the use of social media and the MOOC forum. We used the group function of a popular Chinese social media tool named WeChat to build a self-organized community through which learners could foster a sense of belonging (see Figure 3). We also designed a social section in the forum for learners to share personal life stories or interesting topics.

Figure 2. The learning analytics tool.

From “An Application of a Learning Analytics Tool in MOOC,” Fan Ouyang research team, n.d. (https://8jrscl.coding-pages.com/). In the public domain.

Figure 3. The WeChat group.

Participants. Using a nonprobability, purposive sampling approach (Cohen, Manion, & Morrison, 2013), we deliberately selected participants who engaged in the discussions from within the wider population of registered MOOC learners. Of the 850 online learners enrolled in the first iteration of the MOOC, 23 learners participated in the discussions through the MOOC forum and the WeChat group. This proportion was similar to previous MOOC research, which indicated that 5% to 25% of registered learners posted in forums at least once (Almatrafi & Johri, 2019). This study was conducted in an unobtrusive way; at the end of the MOOC, we sent a consent form via WeChat to invite learners to participate in the research. Six learners agreed to participate and all consented to the data collection approaches. As in most previous MOOC forum research (e.g., Gillani & Eynon, 2014), the sample was not representative of the total population of MOOC learners, but it did represent a certain level of heterogeneity in terms of the learners’ gender, age, profession, educational level, and academic major (see Table 1). More importantly, the research results (discussed below) indicated that the six participants showed different discussion patterns in terms of social participatory role and knowledge engagement level. This strengthened the representativeness of the sample.

Table 1

Participant Information

| Participant | Gender | Age | Profession | Educational level | Major |
| --- | --- | --- | --- | --- | --- |
| Hu | Female | 30-39 | University lecturer | MS | Educational technology |
| Jun | Female | 30-39 | Doctoral student | MS | Literature |
| Ling | Male | 20-29 | Data scientist | MS | Psychology |
| Wei | Female | 20-29 | Master student | BS | Computer sciences |
| Xu | Female | 30-39 | University lecturer | PhD | Educational technology |
| Zhao | Male | 40-49 | University associate professor | MS | Educational technology |

Note. Participants are identified by pseudonyms.

Data collection. We collected data from three sources. First, at the end of the course, we saved all the discussion posts and comments from the MOOC forum and from the WeChat group. Discussion content unrelated to course topics (e.g., social greetings) was excluded; this content consisted of 5 MOOC forum posts, 16 forum comments, and 18 WeChat comments. The final dataset of discussions included 10 MOOC forum posts, 198 comments, and 22 WeChat comments. Second, we conducted semi-structured, in-depth interviews with the six participants (30-45 minutes duration) by phone within one week after the course ended. The interview questions addressed learners’ online learning experiences, motivations and goals, weekly MOOC learning procedures, as well as their perceptions about the MOOC’s pedagogy, learning analytics tool, and social learning environment (see Appendix). Finally, one month after the course ended, using the critical event recall approach (Cohen et al., 2013), we invited participants to write a short self-reflection on one or more critical events related to an important learning experience they recalled during or after the course (see Appendix). We specifically asked participants to write about critical events that occurred outside of the MOOC discussions and through which they applied or relearned the knowledge, such that the critical events, to some extent, implied the learners’ learning preferences.

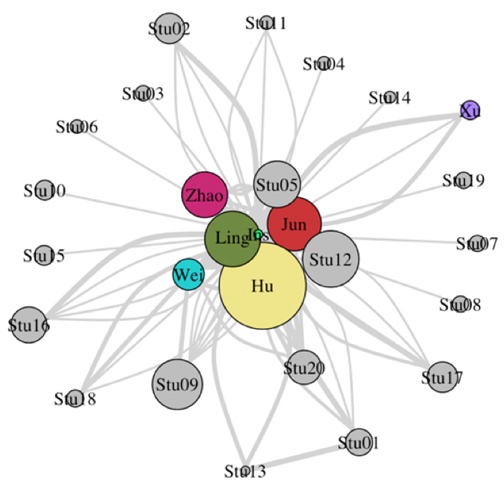

Data analysis strategies. We used mixed methods (i.e., social network analysis, content analysis, social-cognitive network visualization, thematic analysis, and thick description) to analyze and understand the participants’ discussion patterns, perceptions, and preferences. First, we analyzed the discussion patterns from both social and cognitive aspects. Specifically, we used social network analysis to identify the social participatory roles of the 23 learners and the instructor. Based on previous research (see Ouyang & Chang, 2019; Ouyang & Scharber, 2017), we identified six types of social participatory roles (i.e., leader, starter, influencer, mediator, regular, and peripheral) in terms of the level of (a) participation (reflected by outdegree and out-closeness); (b) influence (reflected by indegree and in-closeness); and (c) mediation (reflected by betweenness). Then, based on a predefined framework (see Ouyang & Chang, 2019), we used content analysis to code two dimensions: individual knowledge inquiry (IKI), which captured three levels of inquiry within learners’ initial comments, and group knowledge construction (GKC), which captured three levels of group knowledge advancement within learners’ responses to others. The superficial, medium, and deep levels of IKI indicated, respectively, that learners shared information, presented their own thoughts without detailed explanation, and presented their own thoughts with detailed explanation. The superficial, medium, and deep levels of GKC indicated, respectively, simple (dis)agreement, extension or argumentation of others’ ideas without detailed explanation, and extension or argumentation of others’ ideas with detailed explanation. Two raters (the first and third authors) discussed the coding framework to reach a shared understanding of the codes, coded all the content independently, and reached an inter-rater reliability of 0.825 (Cohen’s kappa).
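
As an illustration of the inter-rater reliability statistic reported above, the following minimal Python sketch computes Cohen’s kappa for two raters who each assign one code per comment. The function name, the code labels used as strings, and the eight example codes are hypothetical and are not the study’s data; the study’s own computation procedure is not described in the text.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who each assign one label per item."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must code the same non-empty set of items.")
    n = len(rater_a)
    # Observed agreement: share of items given the same code by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: derived from each rater's marginal code frequencies.
    freq_a = Counter(rater_a)
    freq_b = Counter(rater_b)
    p_chance = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if p_chance == 1.0:
        return 1.0  # both raters used one identical code for every item
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical codes for eight comments (illustrative only).
codes_rater_1 = ["SKI", "MKI", "DKI", "SKC", "MKC", "DKC", "SKI", "MKI"]
codes_rater_2 = ["SKI", "MKI", "DKI", "SKC", "MKC", "DKC", "MKI", "MKI"]
kappa = cohens_kappa(codes_rater_1, codes_rater_2)
print(round(kappa, 3))
```

Kappa corrects raw agreement for the agreement expected by chance, which is why it is usually lower than the simple proportion of matching codes.
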
We then calculated each participant’s cognitive engagement as a weighted IKI score ($N_{SKI} \times 1 + N_{MKI} \times 2 + N_{DKI} \times 3$) and a weighted GKC score ($N_{SKC} \times 1 + N_{MKC} \times 2 + N_{DKC} \times 3$), where $N$ denotes the number of contributions coded at the superficial (S), medium (M), or deep (D) level. Finally, based on the work of Ouyang and Chang (2019), we used social-cognitive network visualization to demonstrate participants’ social interaction patterns (i.e., participatory role, network position) and knowledge contribution patterns (i.e., IKI and GKC scores). It is worth mentioning that we analyzed the patterns for all 23 learners and the instructor, as these could be tracked in the overall network (see Figure 4), but reported only the six participants’ results in order to address the research question.
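
The weighted scores described above can be sketched in a few lines of Python. This is a minimal illustration that assumes each coded contribution counts once at its level, with weights of 1, 2, and 3 for the superficial, medium, and deep levels; the dictionary layout, the function name, and the counts are hypothetical and do not reproduce any participant’s data.

```python
# Weights for the three coding levels, as given in the weighted-score formula.
LEVEL_WEIGHTS = {"superficial": 1, "medium": 2, "deep": 3}


def weighted_score(level_counts):
    """Sum of (number of contributions at a level) x (that level's weight)."""
    return sum(LEVEL_WEIGHTS[level] * count for level, count in level_counts.items())


# Hypothetical counts of coded contributions for one learner.
iki_counts = {"superficial": 4, "medium": 5, "deep": 3}  # initial comments
gkc_counts = {"superficial": 2, "medium": 1, "deep": 2}  # responses to others

iki_score = weighted_score(iki_counts)  # 4*1 + 5*2 + 3*3 = 23
gkc_score = weighted_score(gkc_counts)  # 2*1 + 1*2 + 2*3 = 10
print(iki_score, gkc_score)
```

Because deep contributions carry three times the weight of superficial ones, two learners with the same number of posts can receive quite different scores.
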

Second, using the thematic analysis approach (Cohen et al., 2013), we analyzed the learners’ interview transcripts in order to identify the recurring themes of learners’ perceptions regarding the pedagogical strategy, learning analytics tool, and social learning environment. The first author analyzed the original interviews, coded interview transcripts, and identified the themes and evidence. The other authors read transcripts, double-checked the thematic analysis results, and translated the transcripts from Chinese to English.

Finally, the first author read the learners’ self-reflections and identified their learning preferences reflected by the critical events. Based on the analysis, we summarized the learners’ preferences with supporting evidence from their write-ups.

Overall, the six participants demonstrated various patterns in terms of social and cognitive engagement. Socially, they demonstrated six different social participatory roles, and were positioned in three different places in the network (see Table 2 and Figure 4). Regarding the cognitive aspect, they demonstrated low, medium, and high levels of contributions to individual and group knowledge (see Table 2 and Figure 4).

Ling, as a leader (calculated by SNA metrics), not only actively replied to others’ comments but also received frequent responses. In addition, Ling had a high-level IKI (score = 56) and a high-level GKC (score = 34). Hu, an influencer, received a relatively high level of responses from the others and replied to others with a medium level of frequency. In addition, Hu had a high-level IKI (score = 87) and a low-level GKC (score = 3). Jun, a starter, actively replied to others’ comments, but received responses infrequently. In addition, Jun had a medium-level IKI (score = 54) and a high-level GKC (score = 28). Overall, socially active students (e.g., leader, starter, influencer) tended to make the most knowledge contributions at both individual and group levels. The results for the influencer indicated that learners may reply more frequently to those who demonstrate high-level IKI in their initial comments. The results for the starter indicated that learners who proactively initiate interactions tend to have a higher-level GKC.

Where subgroups formed naturally in the network, Wei, a mediator, acted as a bridge between them and thus had a high mediatory effect. Wei both replied to others and received responses with a medium level of frequency. In addition, Wei had a medium-level IKI (score = 25) and a medium-level GKC (score = 13). Zhao, a regular learner, replied to others and received responses with a medium level of frequency. Zhao had a medium-level IKI with a score of 41, and a low-level GKC with a score of 5. Overall, the results implied that a medium level of social activeness was consistent with a medium level of contribution to individual and group knowledge.

Finally, Xu was a peripheral learner who neither actively replied to peers nor received frequent responses. Xu had a low-level IKI with a score of 11, and a low-level GKC with a score of 3. Therefore, socially inactive learners made the fewest knowledge contributions.

Table 2

Pattern Results

| Participant | Social engagement: participatory role | Social engagement: network position | Cognitive engagement: IKI level (score) | Cognitive engagement: GKC level (score) |
| --- | --- | --- | --- | --- |
| Ling | Leader | Central | Medium (56) | High (34) |
| Jun | Starter | Central | Medium (54) | High (28) |
| Hu | Influencer | Central | High (87) | Low (3) |
| Wei | Mediator | Middle | Medium (25) | Medium (13) |
| Zhao | Regular | Middle | Medium (41) | Low (5) |
| Xu | Peripheral | Peripheral | Low (11) | Low (3) |

Note. Excluding the instructor’s participation results, the range of replies was [1, 20], and range of responses was [1, 11]; the range of IKI scores was [0, 87], and the range of GKC scores was [0, 34].

Figure 4. The overall social-cognitive network.

Node color represents the six participatory roles (i.e., leader in green, starter in red, influencer in yellow, mediator in blue, regular in pink, and peripheral in purple). Node size represents the weighted IKI score, and edge width represents the weighted GKC score. Learners who did not participate in this research are marked as grey nodes; edge directions were deleted for network simplicity.
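
To make the network encoding concrete, the sketch below derives simple quantities from a list of reply records: replies sent (outdegree), responses received (indegree), and one undirected, weighted edge per pair of learners. It is an illustration under stated assumptions, not the authors’ procedure: the record format, the learner labels, and the choice to sum GKC weights over both reply directions are all hypothetical, since the text does not specify how edge weights were aggregated. Closeness and betweenness are omitted for brevity.

```python
from collections import defaultdict

# Hypothetical reply records: (replier, learner replied to, GKC weight of the reply).
replies = [
    ("A", "B", 3),
    ("B", "A", 2),
    ("A", "C", 1),
    ("C", "B", 2),
]


def degree_counts(records):
    """Count replies sent (outdegree) and responses received (indegree) per learner."""
    outdegree = defaultdict(int)
    indegree = defaultdict(int)
    for source, target, _ in records:
        outdegree[source] += 1
        indegree[target] += 1
    return dict(outdegree), dict(indegree)


def undirected_edge_weights(records):
    """Merge both reply directions between a pair into one weighted edge."""
    weights = defaultdict(int)
    for source, target, weight in records:
        # frozenset ignores direction, so A->B and B->A share one edge.
        weights[frozenset((source, target))] += weight
    return dict(weights)


outdegree, indegree = degree_counts(replies)
edges = undirected_edge_weights(replies)
print(outdegree, indegree)
print({tuple(sorted(pair)): w for pair, w in edges.items()})
```

Dropping edge direction in this way mirrors the simplification noted in the caption, at the cost of no longer showing who initiated each exchange.
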

Learners’ perceptions of their MOOC discussion experiences were elaborated in terms of their perspectives on the pedagogical strategy, learning analytics tool, and social learning environment. First, regarding the pedagogical strategy, among the six participants, active learners (based on the levels of their social engagement and cognitive engagement) tended to perceive the knowledge construction strategy positively. In particular, they perceived discussion participation, social interaction, and peer sharing as positive factors that fostered their knowledge contributions. For example, Ling mentioned the importance of social interactions with peers, noting that “replying to my classmates’ comments... receiving responses for my questions... reading responses from other students... are important approaches to inspire my learning.” Wei also perceived the importance of peer response by saying “If you have someone who supports your point of view, or has questions about your points... this would help you think more and understand deeper.” Jun mentioned the effect of peer sharing, and said that “I read what other students posted, such as sharing of articles, which I may not have read before... this deepened my thinking and enriched my views.” Hu mentioned the importance of participation in discussion activities. “The discussions [assignment] encouraged me to search and post related materials... the learning effect could be better with this participation.”

These socially and cognitively active learners also perceived the importance of instructor participation. In particular, instructor response, idea generation, and discussion facilitation were positive factors that promoted learners’ knowledge contributions. For example, Hu mentioned the influence of instructor response and stated that “when the instructor replied me on the forum, I’d take it as an encouragement, and would do it better next time.” Jun mentioned the importance of the instructor generating and sharing her own ideas. “The instructor’s opinion can help the learners better understand an issue... it also helped promote the participations (sic).” Ling mentioned that the instructor’s facilitation fostered his further learning, as when the instructor “reminded us to look back... and encouraged us to relearn [materials].... I usually had a better understanding [of the knowledge] in the next a couple of days.” Wei viewed the instructor’s responses as guidance for learning and said, “the instructor’s comments seemed to be directive [for us]. For example, when I saw you [instructor] replying to someone’s post, I would probably read and think about it a few more times.”

Inactive learners, however, tended to emphasize the level of difficulty, the amount of time consumed, and course design issues in the MOOC. For example, Zhao mentioned the difficulties he faced. “Personally speaking, I only had a limited understanding on certain knowledge. So, I didn’t feel like contributing much in the discussions.” Xu perceived that the discussions consumed a great deal of time, which might have impeded her participation. “In this course, the discussion could not be completed easily like [that in] other courses. I must search resources from other channels like the CNKI database... it usually took me another two hours to construct a post.” In addition, both Xu and Zhao mentioned course design issues. For example, Xu questioned the effect of the open-ended inquiry for knowledge construction. “If you want to construct knowledge, what knowledge exactly do you want to build?... The video content was somehow very open.” Zhao mentioned a disconnect between course content and the discussion topics, which negatively influenced his participation. “The forum discussions and instructional videos didn’t have much to do with each other.... I didn’t feel the discussions and video content were closed bonded.” He further suggested step-by-step scaffolding for the discussion forum. “The course content is quite open... it would be better when the discussion scaffolding was clearer, like [using a] step-by-step [approach].” Interestingly, the active learners (e.g., Ling and Jun) perceived the open-ended inquiry nature of the MOOC discussion positively. For example, Jun said, “I took a SPSS MOOC before... I only watched videos to learn techniques.... For courses like this in the social science field, knowledge sharing and constructions were beneficial to improve the higher-level cognitive thinking (e.g., critical thinking).”

Second, regarding technological support in the MOOC discussions, most learners responded that the learning analytics tool helped them understand and reflect on the discussions. They also pointed out the drawbacks of this tool and offered revision suggestions including an integrative function, real-time visualization, and simpler tools to better represent and support the learners’ knowledge construction processes. For example, Ling said that “it would be better to have a real-time function... sometimes the network was shown after I posted something in the forum... so, it was not synchronous.” Wei suggested an integrative function. “You could consider connecting the participant nodes in the interaction network to the keywords that a participant contributed.”

Finally, regarding the social learning environment, both active and inactive learners perceived the importance of building the social, supportive learning community to foster knowledge contributions. Although this research did not focus on the social, off-topic discussions, participants’ responses did reveal the importance of these social discussions. For example, the active learner Jun said that “in this type of knowledge-construction process, learners were more likely to establish a learning community, which was very beneficial and important.” The peripheral learner Xu also perceived a sense of the social community in the forum. “You [instructor] set a discussion module where we could share our lives. I think this was a great way to make me feel like I was a part of the MOOC community... and I was not studying alone.” Ling was the only participant who mentioned the usefulness of synchronous communication in the WeChat group. “I liked to share [information] directly in the WeChat group... we communicated closely on WeChat.... I would like to have more synchronous chatting there.”

Learners’ self-reflections on critical events of knowledge construction revealed that, in addition to the MOOC discussions, knowledge application, extended learning, and offline collaboration were their three major preferences. For example, although Hu faced difficulty understanding some course literature, she recalled participating actively when there was potential to apply the knowledge in practice. “I did seriously participate in some discussions like the topics of social networks... [because] it may benefit my teaching and research in the future.” In recalling a knowledge application event after the course, Ling noted that:

I have been leading a research project in the department.... I analyzed some data from the student cards.... I applied the social network analysis to the student data.... I introduced how to apply those analytic techniques to my colleagues later.

Moreover, several learners employed individual, extended learning to better understand their course knowledge. For example, Wei recalled an extended reading process in which “a peer in this course proposed a real-world problem he encountered... which was a new way to complement my thinking.... I re-read and downloaded several papers to learn how they addressed the similar data analytical issues.” Xu also mentioned an extended process of learning programming. “I was a beginner for programming... so I bought in a series of R videos through an online channel as well as R books to learn more.” Jun recalled an offline collaboration opportunity with another learner she met in the MOOC:

Although I was not good at analytical techniques, there were several experts in the group.... Ling seemed good at data analytics.... I made a phone call to him and he was willing to collaborate with me on my research project.

Although the learners’ participation in the MOOC was inconsistent, a pattern also reported by Gillani and Eynon (2014), this study used action research to design and foster social, collaborative learning in a Chinese MOOC. Through empirical investigation, we gained a detailed picture of the six representative learners’ patterns, perceptions, and preferences in MOOC discussions supported by a specific pedagogy, a learning analytics tool, and a social learning environment.

First, from the pattern perspective, the socially active learners made the most knowledge contributions, while the socially inactive learners made the fewest. Consistent with previous research results (e.g., Ouyang & Chang, 2019; Wise & Cui, 2018), learners’ social engagement level was a critical indicator of their knowledge contributions. Second, from the perception perspective, the socially and cognitively active learners tended to have a positive perception of the course design, pedagogy, and analytics tool. In contrast, the inactive learners tended to have a negative perception of the MOOC discussions (e.g., the difficulty level, amount of time consumed, and course design issues), which in turn resulted in their inconsistent participation. All the learners perceived the importance of building a social, supportive learning community to foster social interaction. Third, from the preference perspective, the results revealed a complex knowledge construction process that connected the MOOC discussion with further knowledge application, extended learning, and offline collaboration. Overall, consistent with previous research (e.g., Cohen et al., 2019; Wise & Cui, 2018), our results indicated that learners demonstrated varied patterns, perceptions, and preferences, which implied a complex discussion process due to the interplay of multiple factors (e.g., learner interaction, instructor participation, and pedagogical and technological support). Based on these results, this research offers theoretical, pedagogical, and analytical implications for MOOC design, practice, and research.

As knowledge continues to grow and evolve (Bereiter, 2002), learner agency (Bandura, 2001) for knowledge construction and creation is critical. Regardless of the theoretical foundation upon which a MOOC is grounded (Almatrafi & Johri, 2019; Bell, 2011; Joksimović et al., 2018), the MOOC’s design, instruction, and associated learning should enhance learners’ thinking and cognitive ability, foster social interaction and collaboration, and advance group knowledge (Bereiter, 2002; Damşa, 2014). As our research results initially revealed, the learning process in the MOOC was socially distributed, locally contextualized, and evolved over time in a network composed of interdependent components (Brown, Dehoney, & Millichap, 2015). Compared to inactive learners, active learners took actions to initiate peer interactions and to advance individual and group knowledge (Ouyang & Chang, 2019; Wise & Cui, 2018). Therefore, with the goal of improving knowledge construction, creation, and management, learners should actively interact with their instructor and peers, course content, and the tools available; instructors should serve as learning facilitators and so put learner agency at the center of their practice (Bandura, 2001). The pedagogical implications discussed below can help develop learner agency.

To foster learner agency, instructors should design and facilitate MOOC discussions by considering learner diversity, fostering coherent communication, and providing appropriate social and technological supports. First, consistent with previous research results (Cohen et al., 2019; Gillani & Eynon, 2014), our research indicated that even though the instructor used the knowledge-construction strategy, the learners still had different levels of social and cognitive engagement. This implies that the active and peripheral learners might have different needs for instructional design, support, and intervention. Even so, most MOOCs have a low quality of instructional design (Margaryan, Bianco, & Littlejohn, 2015) and deficiencies in their support structure (Kop, Fournier, & Mak, 2011). To promote learner agency, instructors should carefully consider learners’ prerequisite knowledge, backgrounds, and learning goals as they design idea-centered, knowledge-construction discussions (Margaryan et al., 2015; Ouyang, Chang, Scharber, Jiao, & Huang, 2020; Wise & Cui, 2018). To facilitate learning among students who are accustomed to a knowledge transmission mode of teaching, instructors should pay specific attention to balancing open-ended discussions and instructional scaffolding (McConnell, 2018). Second, our results showed that MOOC learners preferred diverse ways of communicating, which implies that MOOC communications need to be facilitated via multiple channels, distributed in various locations, and accessed at varied times (Chen, 2019). More coherent communication should be facilitated across multiple communication channels, including Web objects, online platforms, and offline events (Chen, 2019). Finally, as our results showed, social and technological affordances have the potential to cultivate a learning community in which the instructor can adopt a different set of approaches to provide a route for ongoing peer support, self-awareness, and social connection (Bereiter, 2002; Wise & Cui, 2018). It is necessary to devise learning analytics tools that can provide ongoing, real-time support based on learners’ social and cognitive engagement, as these are constantly changing during discussions (e.g., Chen, Chang, Ouyang, & Zhou, 2018). Overall, learner needs, ways to communicate, and technological supports are all important factors that need further application and research in order to improve social, collaborative learning in MOOCs.

The next generation of MOOC research should aim to explain the learning process in MOOCs and the various factors that influence it (DeBoer et al., 2014; Joksimović et al., 2018). From an analytical perspective, mixed-method research can help capture a holistic picture of learning and instruction in MOOCs (Gašević, Kovanović, Joksimović, & Siemens, 2014; Joksimović et al., 2018). Most previous studies used quantitative, algorithm-based methods to research learners’ knowledge mastery, dropout rate, and course performance. Taking a step forward, this study used mixed methods to understand learners’ discussion patterns, perceptions, and preferences from the quantitative, qualitative, and perceived perspectives. However, the dataset for this research was small compared to the large volume of MOOC data used in other studies. Developing strategies for using mixed methods to deal with a large volume of learners’ data is a critical condition for MOOC research and development (Raffaghelli, Cucchiara, & Persico, 2015). In addition, a measure of post-course learning effect can enrich stakeholders’ understanding of the wider impact of MOOCs and help them better evaluate the value of MOOCs (Joksimović et al., 2018). Overall, integrative, mixed methods, combining qualitative methods with learning analytics, should be used to better understand learning in MOOCs.

In the current knowledge age, MOOCs should foster learners’ knowledge construction, creation, and management in order to meet society’s needs (Gillani & Eynon, 2014; Kop et al., 2011; Siemens, 2005). Taking an initial step towards this goal, we designed a Chinese MOOC with the support of a combination of pedagogy, a learning analytics tool, and a social learning environment, and investigated learners’ discussion patterns, perceptions, and preferences. Although this empirical research reported the results of only a very small proportion of the MOOC’s learners, it revealed a complex, interweaving relationship among instructional design, instructor facilitation, and social and technological affordances. Moreover, this research opens avenues for future MOOC research and practice. First, it is critical to further understand the learning process in MOOCs, one in which learner agency should be put at the center. Second, it is beneficial to further develop pedagogical strategies that better integrate learner motivations, interests, and goals within MOOC designs. Finally, empirical research can use mixed methods to capture various aspects of learning in MOOCs. In conclusion, future work should focus on ways to foster learners’ knowledge construction, creation, and management through large-scale interaction, communication, and collaboration in the open, networked age.

Fan Ouyang acknowledges the financial support from the National Natural Science Foundation of China (61907038), China Zhejiang Province Educational Reformation Research Project in Higher Education (jg20190048), and the Fundamental Research Funds for the Central Universities, China (2020QNA241).

Almatrafi, O., & Johri, A. (2019). Systematic review of discussion forums in massive open online courses (MOOCs). IEEE Transactions on Learning Technologies, 12(3), 413-428. doi: 10.1109/TLT.2018.2859304

Bandura, A. (2001). Social cognitive theory: An agentic perspective. Annual Review of Psychology, 52(1), 1-26. doi: 10.1146/annurev.psych.52.1.1

Bell, F. (2011). Connectivism: Its place in theory-informed research and innovation in technology-enabled learning. The International Review of Research in Open and Distributed Learning, 12(3), 98-118. doi: 10.19173/irrodl.v12i3.902

Bereiter, C. (2002). Education and mind in the knowledge age. Mahwah, NJ: Lawrence Erlbaum.

Brinton, C. G., Chiang, M., Jain, S., Lam, H. K., Liu, Z., & Wong, F. M. F. (2014). Learning about social learning in MOOCs: From statistical analysis to generative model. IEEE Transactions on Learning Technologies, 7(4), 346-359. doi: 10.1109/TLT.2014.2337900

Brown, M., Dehoney, J., & Millichap, N. (2015). The next generation digital learning environment: A report on research. EDUCAUSE. Retrieved from https://library.educause.edu/resources/2015/4/the-next-generation-digital-learning-environment-a-report-on-research

Carr, W., & Kemmis, S. (1986). Becoming critical: Education, knowledge and action research. London, UK: Falmer.

Chen, B. (2019). Designing for networked collaborative discourse: An UnLMS approach. TechTrends, 63(2), 194-201. doi: 10.1007/s11528-018-0284-7

Chen, B., Chang, Y. H., Ouyang, F., & Zhou, W. Y. (2018). Fostering discussion engagement through social learning analytics. The Internet and Higher Education, 37, 21-30. doi: 10.1016/j.iheduc.2017.12.002

Cohen, A., Shimony, U., Nachmias, R., & Soffer, T. (2019). Active learners’ characterization in MOOC forums and their generated knowledge. British Journal of Educational Technology, 50(1), 177-198. doi: 10.1111/bjet.12670

Cohen, L., Manion, L., & Morrison, K. (2013). Research methods in education. New York, NY: Routledge.

Cormier, D., & Siemens, G. (2010). Through the open door: Open courses as research, learning, and engagement. EDUCAUSE Review, 45(4), 30-39. Retrieved from https://er.educause.edu/articles/2010/8/through-the-open-door-open-courses-as-research-learning-and-engagement

Damşa, C. I. (2014). The multi-layered nature of small-group learning: Productive interactions in object-oriented collaboration. International Journal of Computer-Supported Collaborative Learning, 9(3), 247-281. doi: 10.1007/s11412-014-9193-8

DeBoer, J., Ho, A. D., Stump, G. S., & Breslow, L. (2014). Changing “course”: Reconceptualizing educational variables for massive open online courses. Educational Researcher, 43(2), 74-84. doi: 10.3102/0013189X14523038

Dowell, N., Skrypnyk, O., Joksimović, S., Graesser, A. C., Dawson, S., Gašević, D., ... Kovanovic, V. (2015). Modeling learners’ social centrality and performance through language and discourse. In O. C. Santos et al. (Eds.), Proceedings of the 8th International Conference on Educational Data Mining (pp. 250-257). International Educational Data Mining Society.

Downes, S. (2011). Connectivism and connective knowledge 2011 [MOOC]. Retrieved from http://cck11.mooc.ca/

Fu, S., Zhao, J., Cui, W., & Qu, H. (2017). Visual analysis of MOOC forums with iForum. IEEE Transactions on Visualization and Computer Graphics, 23(1), 201-210. doi: 10.1109/TVCG.2016.2598444

García-Peñalvo, F. J., Fidalgo-Blanco, Á., & Sein-Echaluce, M. L. (2018). An adaptive hybrid MOOC model: Disrupting the MOOC concept in higher education. Telematics and Informatics, 35(4), 1018-1030. doi: 10.1016/j.tele.2017.09.012

Gašević, D., Kovanović, V., Joksimović, S., & Siemens, G. (2014). Where is research on massive open online courses headed? A data analysis of the MOOC research initiative. The International Review of Research in Open and Distributed Learning, 15(5), 134-176. doi: 10.19173/irrodl.v15i5.1954

Gillani, N., & Eynon, R. (2014). Communication patterns in massively open online courses. The Internet and Higher Education, 23, 18-26. doi: 10.1016/j.iheduc.2014.05.004

Joksimović, S., Poquet, O., Kovanović, V., Dowell, N., Mills, C., Gašević, D., ... Brooks, C. (2018). How do we model learning at scale? A systematic review of research on MOOCs. Review of Educational Research, 88(1), 43-86. doi: 10.3102/0034654317740335

Kop, R., Fournier, H., & Mak, J. S. F. (2011). A pedagogy of abundance or a pedagogy to support human beings? Participant support on massive open online courses. The International Review of Research in Open and Distributed Learning, 12(7), 74-93. doi: 10.19173/irrodl.v12i7.1041

Margaryan, A., Bianco, M., & Littlejohn, A. (2015). Instructional quality of massive open online courses (MOOCs). Computers & Education, 80, 77-83. doi: 10.1016/j.compedu.2014.08.005

McConnell, D. (2018). E-learning in Chinese higher education: The view from inside. Higher Education, 75(6), 1031-1045. doi: 10.1007/s10734-017-0183-4

Ouyang, F. (2019). An application of a learning analytics tool in MOOC [Web App]. Retrieved from https://8jrscl.coding-pages.com/

Ouyang, F. (2019). Learning Analytics for Instructional Design, Practice, and Research [MOOC]. Zhejiang University. Retrieved from https://www.icourse163.org/course/ZJU-1206577810

Ouyang, F., & Chang, Y. H. (2019). The relationship between social participatory role and cognitive engagement level in online discussions. British Journal of Educational Technology, 50(3), 1396-1414. doi: 10.1111/bjet.12647

Ouyang, F., Chang, Y. H., Scharber, C., Jiao, P., & Huang, T. (2020). Examining the instructor-student collaborative partnership in an online learning community course. Instructional Science, 48(2), 183-204. doi: 10.1007/s11251-020-09507-4

Ouyang, F., & Scharber, C. (2017). The influences of an experienced instructor’s discussion design and facilitation on an online learning community development: A social network analysis study. The Internet and Higher Education, 35, 33-47. doi: 10.1016/j.iheduc.2017.07.002

Raffaghelli, J. E., Cucchiara, S., & Persico, D. (2015). Methodological approaches in MOOC research: Retracing the myth of Proteus. British Journal of Educational Technology, 46, 488-509. doi: 10.1111/bjet.12279

Rosé, C. P., Ferschke, O., Tomar, G., Yang, D., Howley, I., Aleven, V., ... Baker, R. (2015). Challenges and opportunities of dual-layer MOOCs: Reflections from an edX deployment study. Proceedings of the 11th International Conference on Computer Supported Collaborative Learning (CSCL 2015): Vol. 2, 848-851. Gothenburg, Sweden: International Society of the Learning Sciences.

Siemens, G. (2005). Connectivism: A learning theory for the digital age. International Journal of Instructional Technology & Distance Learning, 2(1), 3-10. Retrieved from https://www.itdl.org/journal/jan_05/article01.htm

Toven-Lindsey, B., Rhoads, R. A., & Lozano, J. B. (2015). Virtually unlimited classrooms: Pedagogical practices in massive open online courses. The Internet and Higher Education, 24, 1-12. doi: 10.1016/j.iheduc.2014.07.001

Wise, A. F., & Cui, Y. (2018). Learning communities in the crowd: Characteristics of content related interactions and social relationships in MOOC discussion forums. Computers & Education, 122, 221-242. doi: 10.1016/j.compedu.2018.03.021

Yang, D., Wen, M., Kumar, A., Xing, E. P., & Rosé, C. P. (2014). Towards an integration of text and graph clustering methods as a lens for studying social interaction in MOOCs. The International Review of Research in Open and Distributed Learning, 15, 214-234. doi: 10.19173/irrodl.v15i5.1853

Yousef, A. M. F., Chatti, M. A., Schroeder, U., & Wosnitza, M. (2014). What drives a successful MOOC? An empirical examination of criteria to assure design quality of MOOCs. Proceedings of 2014 IEEE 14th International Conference on Advanced Learning Technologies (pp. 44-48). Athens, Greece: IEEE. doi: 10.1109/ICALT.2014.23

Please write about a learning experience related to knowledge inquiry and construction you can recall. Choose a critical event(s) outside of the MOOC discussions, through which you applied or relearned the knowledge you gained in the course.

Learners' Discussion Patterns, Perceptions, and Preferences in a Chinese Massive Open Online Course (MOOC) by Fan Ouyang, Xu Li, Dan Sun, Pengcheng Jiao, and Jiajia Yao is licensed under a Creative Commons Attribution 4.0 International License.