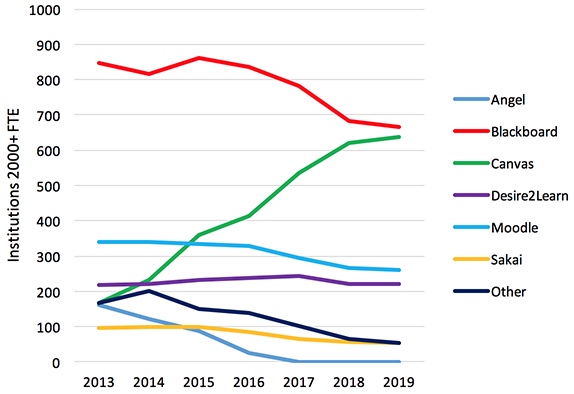

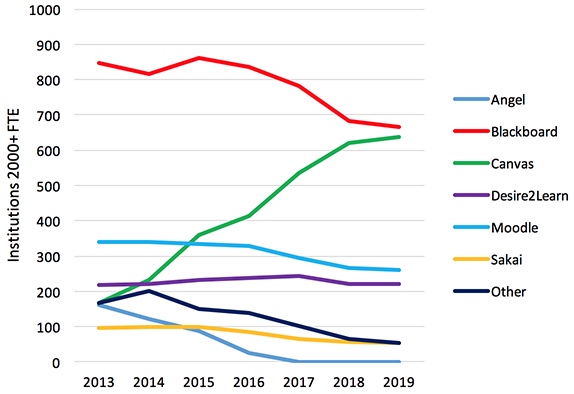

Volume 20, Number 3

Sally Baldwin and Yu-Hui Ching

Boise State University

Evaluating online courses is an important step in providing quality online courses. A variety of national and statewide evaluation tools (e.g., Quality Matters, OSCQR) are used to help guide instructors and designers of online courses. This paper discusses a newly released course evaluation instrument from Canvas, the second largest learning management system (LMS) used by higher education institutions in the United States. The characteristics and unique features of the Canvas Course Evaluation Checklist (CCEC) are discussed, and the CCEC is compared to established national and statewide evaluation instruments. This review should be useful to those interested in online course design and in developments in the field of online education.

Keywords: Canvas, course design, distance education, evaluation instruments, online education, quality, Quality Matters

Online education has become a mainstream component of higher education. Annually, nearly one-third of students enroll in online courses (Seaman, Allen, & Seaman, 2018), and online course offerings represent the fastest-growing sector in higher education (Lederman, 2018). A recent Inside Higher Ed Survey of Faculty Attitudes on Technology (2018; N=2,129) found that 44% of faculty have taught an online course and 38% have taught a blended or hybrid course (Jaschik & Lederman, 2018).

To teach a distance education or blended course, faculty members generally rely on some form of learning management system (LMS; Ismail, Mahmood, & Abdelmaboud, 2018). The LMS has a large impact on the way online education is presented and perceived. The LMS influences “pedagogy by presenting default formats designed to guide the instructor toward creating a course in a certain way” (Lane, 2009, para. 2). In the United States, the current LMS market is dominated by Blackboard, Canvas, Moodle, and Desire2Learn (also known as Brightspace, D2L), which together account for 90.3% of institutions and 92.7% of student enrollment (Edutechnica, 2019). Blackboard, released in 1997, has a 30.9% share of institutions and 33% of student enrollment. Canvas, released in 2011, has a 30.6% share of institutions and 35.47% of student enrollment. In comparison, Moodle has a 17.7% share of institutions but only a 12.41% share of student enrollment, an indication that smaller schools typically use Moodle (Edutechnica, 2019).

Canvas is the fastest-growing learning management system in the United States (see Figure 1; Edutechnica, 2019). Nearly 80% of new LMS contracts in U.S. and Canadian higher education result in a move to Canvas (Hill, 2016). Canvas is used by four of the top five institutions in U.S. News and World Report’s (2018) annual ranking of online bachelor’s degree programs, and by “14 of the top 25 online bachelor's degree programs” (Instructure, 2018a). Recently, Canvas published a course evaluation checklist to guide its users in designing quality online courses. This checklist has the potential to influence online course design at the 1,050 institutions that use Canvas, with enrollments totaling 6,647,255 students as of Spring 2019 (Edutechnica, 2019).

Figure 1. LMS market share in U.S. Higher Education Fall 2013-2019 by institution (2000+FTE; Edutechnica, 2019).

Educators have a vested interest in offering quality courses. Nine out of 10 faculty members (N=2,129) surveyed by Inside Higher Ed and Gallup (Jaschik & Lederman, 2018) said they were involved in online or hybrid course design. Yet only 25% of these faculty members reported working with an instructional designer to design or revise online courses (Jaschik & Lederman, 2018). Evaluation instruments for online course design can therefore provide important support and guidance. Providing easy-to-use evaluation tools, and determining which elements should be assessed, can guide instructors who design online and blended courses and highlight best practices.

Baldwin, Ching, and Hsu (2018) recently published a review of six publicly available national and statewide instruments for evaluating the design of online courses in U.S. higher education. This review identified 12 universal criteria found in all six instruments, nine criteria found in five of the six instruments, and one criterion found in four of the six instruments. Since then, the Canvas Course Evaluation Checklist (CCEC), a new national course evaluation instrument, has been released (Instructure, 2018b). Like the Blackboard Exemplary Course Program Rubric, this evaluation tool has been published by a learning management system company. Because of the potentially large impact of this new evaluation tool, this paper aims to explore the characteristics and unique features of the CCEC and how the CCEC compares to established national and statewide evaluation instruments.

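Both authors independently compared the CCEC to the six previously reviewed national and statewide evaluation instruments (Baldwin et al., 2018): the Blackboard Exemplary Course Program Rubric, the CVC-OEI Course Design Rubric, the Open SUNY Course Quality Review Rubric (OSCQR), the CSU QLT course review instrument, the Quality Matters (QM) Higher Education Rubric, and the Illinois Quality Online Course Initiative (QOCI).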

Initially, we compared the CCEC’s characteristics with those of the national and statewide evaluation instruments above (e.g., intended usage, audience, ease of adoption, rating scale, cost/availability, and training requirements for users; Table 1).

Table 1

Characteristics of Evaluation Instruments

| Organization | Blackboard | CCEC | OEI | OSCQR | QLT | QM | QOCI |
|---|---|---|---|---|---|---|---|
| Intended usage | National | National | California | National | California | National | Illinois |
| Started | 2000 | 2018 | 2014 | 2014 | 2011 | 2003 | 1998 |
| Current version | 2017 | 2018 | 2018 | 2018 | 2017 | 2018 | 2018 |
| Audience | Instructors and course designers. | Canvas LMS users. | Instructors and staff. | Instructors and instructional designers. | Faculty, faculty developers, and instructional designers. | Instructors and instructional designers. | Instructors and staff. |
| New or mature | Mature courses. | New and mature courses. | New courses. | New and mature courses. | Mature courses. | Mature courses. | New and mature courses. |
| Purpose | Identify and disseminate best practices for designing high quality courses. | To elevate the quality of Canvas courses. | Establish standards to promote student success and conforms to existing regulations. | Continuous improvement of quality and accessibility in online courses. | To help design and evaluate quality online teaching and learning. | Look at course design to provide peer-to-peer feedback towards continuous improvement of online courses. Also "certifies course as meeting shared standards of best practice." | Improve accountability of online courses. |
| Rating scale | Incomplete, promising, accomplished, exemplary. | None, but design components are ranked as: Expected, best practice, exemplary. | Incomplete, exchange ready, additional exemplary elements. | Minor revision, sufficiently present, not applicable. | Does not meet/rarely or never, partially meets/sometimes, meets/often, exceeds/always, objective does not apply to the course. | Essential, very important, important. | Nonexistent, developing, meets, exceeds, N/A. |
| Cost | Free | Free | Free | Free | Free | Subscription Fee | Free |
| Availability | Creative Commons | Creative Commons | Creative Commons | Creative Commons | Creative Commons | Subscription | Creative Commons |
| Official review | Yes. | No. | Yes, by OEI trained peers. | No. | Yes, by a team of 3 certified peer reviewers (including a content expert related to the course discipline). | Yes, by team of 3 certified peer reviewers, 1 master reviewer. One reviewer must be external to institution, and one reviewer must be content expert. | No. |
| Training | For official review, a peer group of Blackboard clients review. | None. | Must be California Community College faculty, have online teaching experience and formal training in how to teach online and have attended a three-week training program. | None. | Recent experience teaching or designing online courses, complete a QLT reviewer course and an online teaching course, and experience on “informal” campus review team and applying QLT to courses. | Recent experience teaching online courses, Complete peer review course, and QM rubric course. Peer review course is 15 days, 10-11 hours per week. | None. |
| Success | Scores are weighted, with exemplary courses earning 5-6. | N/A | Course must display all exchange-ready elements to pass. | N/A | Course must meet all 24 core QLT objectives & earn at least 85% overall. | Course must rate "yes" on all 14 of the "essential" standards & earn 85% overall. | N/A |
| Outcome | Earn certificate of achievement and an engraved glass award, if course is rated exemplary by two of the three reviewers. | N/A | Successful courses will be placed on state-wide learning exchange registry. | N/A | Certification and course are recognized on campus and statewide websites. | Earn QM recognition. | N/A |
| Time to review course | Six months for official Exemplary Course program. | N/A | 5-10 hours. | 6-10 hours. | 10-12 hours over 4-6 weeks. | 4-6 weeks. | N/A |

We also compared the CCEC’s physical characteristics to the previously reviewed instruments and identified the breakdown of each instrument, including the number of sections, the section names, and sub-sections (Table 2).

Table 2

Comparison of Evaluation Instruments’ Physical Characteristics

| Organization | Blackboard | CCEC | OEI | OSCQR | QLT | QM | QOCI |
|---|---|---|---|---|---|---|---|
| Number of components | 4 categories, 17 sub-categories, 63 elements. | 4 sections, 33 criteria. | 4 sections, 44 elements. | 6 sections, 50 standards. | 10 sections, 57 objectives. | 8 general standards, 42 specific review standards. | 6 categories, 24 topics, 82 criteria. |
| Number of pages | 10 | 4 | 19 | 3 | 10 | 1 | 25 |
| Sections | Course Design | Course Information | Content Presentation | Course Overview and Information | Course Overview and Introduction | Course Overview and Introduction | Instructional Design |
| | Interaction & Collaboration | Course Content | Interaction | Course Technology and Tools | Assessment and Evaluation of Student Learning | Learning Objectives (Competencies) | Communication, Interaction, and Collaboration |
| | Assessment | Assessment of Student Learning | Assessment | Design and Layout | Instructional Materials and Resources Utilized | Assessment and Measurement | Student Evaluation and Assessment |
| | Learner Support | Course Accessibility | Accessibility | Content and Activities | Student Interaction and Community | Instructional Materials | Learner Support and Resources |
| | | | | Interaction | Facilitation and Instruction (Course Delivery) | Learner Activities and Learner Interaction | Web Design |
| | | | | Assessment and Feedback | Technology for Teaching and Learning | Course Technology | Course Evaluation (Layout/Design) |
| | | | | | Learner Support and Resources | Learner Support | |
| | | | | | Accessibility and Universal Design | Accessibility and Usability | |
| | | | | | Course Summary and Wrap-up | | |
| | | | | | Mobile Platform Readiness (optional) | | |

Then, we coded the CCEC against the 22 common criteria found in the previously reviewed evaluation instruments by comparing the phrases used in the instruments. Next, we compared our analyses to reach a consensus on the characteristics and unique features of the CCEC. Our experience in online instruction, instructional design, and online course evaluation instrument research guided this process.

The CCEC focuses on course design within the parameters of the Canvas LMS. The checklist was developed by a team of Instructure employees (Instructure is the developer and publisher of Canvas) and released in 2018. The CCEC is intended for all Canvas users, a group that could include both instructors and instructional designers. The checklist’s stated purpose is to share universal design for learning (UDL) principles, the checklist creators’ expertise in Canvas, and their “deep understanding of pedagogical best practices” in an effort to “elevate the quality to Canvas courses” (Instructure, 2018b, para. 2). The instrument can be downloaded from Canvas (https://goo.gl/UQbhwR), and an editable version of the checklist is also available via Google Docs (https://docs.google.com/document/d/18ovgJtFCiI7vrMEQci-67xXbKHfusAHSrNYGVHXTrN4/copy). The CCEC is offered under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License (CC BY-NC-SA 4.0); Canvas encourages sharing and remixing of the tool, provided that attribution is given, the use is noncommercial, and adaptations are shared under the same license.

The checklist can be used with new and mature courses, and it is primarily useful to Canvas users because many of its features are Canvas-centric. It comprises four sections (course information, course content, assessment of student learning, and course accessibility) and 33 criteria, compared with an average of more than six sections and 56 criteria for the other national and statewide evaluation instruments. The CCEC uses a scale of expected, best practice, and exemplary to rank the importance of its design components. It identifies 19 expected and standard design components, seven best practice/added value design components, and seven exemplary/elevated learning design components (Table 3).
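The averages mentioned above can be reproduced from the component counts in Table 2 for the six previously reviewed instruments (Blackboard, OEI, OSCQR, QLT, QM, and QOCI, in that order, excluding the CCEC). This worked check is our own sketch. It assumes that each instrument’s top-level units (categories, sections, or general standards) count as sections and that its lowest-level items (elements, standards, objectives, specific review standards, or criteria) count as criteria. Blackboard’s 17 sub-categories and QOCI’s 24 topics are not counted.

$$
\begin{aligned}
\bar{s} &= \frac{4 + 4 + 6 + 10 + 8 + 6}{6} = \frac{38}{6} \approx 6.33 \ \text{sections} \\
\bar{c} &= \frac{63 + 44 + 50 + 57 + 42 + 82}{6} = \frac{338}{6} \approx 56.33 \ \text{criteria} \\
n_{\text{CCEC}} &= 19 + 7 + 7 = 33 \ \text{criteria}
\end{aligned}
$$

The last line confirms that the CCEC’s expected, best practice, and exemplary components together account for all 33 of its criteria.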

Table 3

Characteristics of Canvas Course Evaluation Checklist

| Canvas course evaluation checklist | |
|---|---|
| Intended usage | National |
| Started | 2018 |
| Current version | 2018 |
| Audience | Canvas LMS users (K-Higher Education) |
| New or mature | New and mature courses |
| Purpose | To elevate the quality of Canvas courses |
| Format | Checklist |
| Rating scale | Expected, Best Practice, Exemplary |
| Weights and values | "A ★ rating indicates an expected and standard design component to online learning; a ★★ rating is considered ‘Best Practice’ and adds value to a course; and ★★★ is exemplary and elevates learning." |
| Number of categories | 4 |
| Categories | Course Information, Course Content, Assessment of Learning, Course Accessibility |
| Subcategories | 0 |
| Number of criteria | 33 |
| Cost | Free |
| Availability | Creative Commons |
| Official review | No |
| Training required | No |

In Baldwin et al.’s (2018) article comparing national and statewide evaluation instruments, 12 universal criteria were found in all six instruments.

The CCEC includes all of these criteria except three: Technology is used to promote learner engagement/facilitate learning, Links to institutional services are provided, and Course policies are stated for behavior.

Nine criteria were previously identified in five of the six national and statewide evaluation instruments.

The CCEC includes three of these criteria (Instructions are clearly written, Guidelines for multimedia are available, and Guidelines for technology are available) but not the other six (Learners are able to leave feedback, Course activities promote achievement of objectives, Instructor response time is stated, Collaborative activities support content and active learning, Self-assessment options are provided, and Assessments occur frequently throughout course). The CCEC also includes the one criterion found in four of the six instruments, “Information is chunked” (Baldwin et al., 2018, p. 54). Some CCEC criteria differ subtly from those of the other national and statewide evaluation instruments. For example, the CCEC focuses on having a variety of assessments throughout the course, whereas other instruments focus on how frequently assessments occur. The Blackboard Exemplary Course Program Rubric, for instance, states, "Assessment activities occur frequently throughout the duration of the course" (Blackboard, 2017, p. 7).

The CCEC is visionary in some respects. It is an easy-to-use checklist, complete with checkboxes. Although checklists have been identified as useful screening devices for evaluating online course design (Baldwin & Ching, 2019; Herrington, Herrington, Oliver, Stoney, & Willis, 2001; Hosie, Schibeci, & Backhaus, 2005), the other established course evaluation tools take the form of rubrics. The CCEC is also relatively short: four pages (including citations), compared with an average of 10.71 pages for the other evaluation instruments. In addition, it was released through a Canvas Community discussion page where the checklist creators and users converse, which may prompt further discussion about the importance of course quality. Finally, the CCEC instructs users on how to design their courses (e.g., "Home Page provides a visual representation of course; a brief course description or introduction..." and "Home Page utilizes a course banner with imagery that is relevant to subject/course materials"; Instructure, 2018b, p. 1). In contrast, other evaluation tools provide more general guidance, such as "A logical, consistent, and uncluttered layout is established" (SUNY, 2018, p. 2).

The CCEC has a unique focus on UDL, with 25 of its 33 criteria linked to the UDL guidelines. The UDL guidelines provide a set of principles that offer multiple means of representation, action and expression, and engagement so that all individuals have equal opportunities to learn (CAST, 2019). The concept of UDL is to create education that accommodates the widest possible range of learners, including those with disabilities, without the need for adaptations or special design (Rose & Meyer, 2002). UDL supports the variability and diversity of learners (CAST, 2019; Rose, Gravel, & Gordon, 2013) by varying how information is presented, how learners express what they learn, and how learners engage in learning (Hall, Strangman, & Meyer, 2014).

Four of the CCEC’s exemplary design components are linked to personalized learning (“Personalized learning is evident,” “Differentiation is evident (e.g. utilized different due dates),” “MasteryPaths are included,” and “Learning Mastery Gradebook is enabled for visual representation of Outcome mastery” (Instructure, 2018b, p. 2)). Personalized learning is defined as developing learning strategies and regulating the pace of learning to address individual students’ distinct learning needs, goals, interests, or cultural backgrounds (iNACOL, 2016). Personalized learning has become increasingly popular with the advent of more affordable and widely available software. Technology can be used to develop learner profiles, track progress, and offer individualized feedback. Both personalized learning and UDL encourage educators to understand learner variability, use multiple methods of instructional delivery and assessment, and foster student engagement (Gordon, 2015; McClaskey, 2017).

Interaction is emphasized (e.g., as a separate category) in all of the previously reviewed state and national evaluation instruments (Baldwin et al., 2018). In contrast, the CCEC instructs course designers to include at least one of three forms of interaction.

While each of these three forms of interaction is individually significant, Moore (1989), in his widely cited editorial, stressed the vital importance of including all three (learner-content, learner-instructor, and learner-learner interaction) in distance education.

Unlike established instruments such as Quality Matters (QM) or the Blackboard Exemplary Course Program Rubric, the CCEC has no official review process or certification outcome. No training is required to use the CCEC, but, like the Open SUNY Course Quality Review Rubric (OSCQR), the CCEC supplies links that explain some of its criteria (e.g., “Canvas Guide - Add Image to Course Card” [Instructure, 2018b, p. 1]).

The CCEC draws on research different from that cited by other well-known national and statewide evaluation instruments. The CCEC cites the UDL guidelines, a K-12 quality course checklist, and an online course best practices checklist from a California community college. In contrast, OSCQR provides research-based evidence for each of its criteria. Likewise, the QM Rubric is supported by an extensive literature review, covering 21 peer-reviewed journals, conducted by experienced staff (Shattuck, 2013). The CCEC is grounded mainly in the UDL framework, whereas other instruments are grounded in a more comprehensive synthesis of research-based pedagogical practices.

A short, easy-to-use checklist that offers specific guidelines for designing an online course, and that promotes personalization and accessibility, is beneficial to Canvas users. The LMS influences the design and pedagogy of online courses (Vai & Sosulski, 2016). However, while the CCEC is innovative in some respects, this review shows that it falls short in evaluating other critical aspects of online course design (e.g., interaction). It is disconcerting that an easy-to-use evaluation checklist that neglects research-based practices previously identified by six other national and statewide evaluation instruments could potentially reach a third of higher education online course instructors and designers. We suggest that the CCEC be revised to include these practices to better support Canvas LMS users.

It is critical to support instructors and course designers with guidelines based on best practices to encourage quality course design. Currently, the CCEC may serve as a good starting point for online course designers who wish to use an evaluation instrument to improve course quality. However, we strongly recommend that instructors and course designers supplement the CCEC with other national and statewide online course evaluation instruments that offer guidance based on more comprehensive research-based practices in their efforts to design quality online courses.

Baldwin, S., & Ching, Y.-H. (2019). An online course design checklist: Development and users' perceptions. Journal of Computing in Higher Education, 31(1), 156-172. doi: 10.1007/s12528-018-9199-8

Baldwin, S., Ching, Y.-H., & Hsu, Y.-C. (2018). Online course design in higher education: A review of national and statewide evaluation instruments. TechTrends, 62(3), 46-57. doi: 10.1007/s11528-017-0215-z

Blackboard. (2017, April 10). Blackboard exemplary course program rubric. Retrieved from https://community.blackboard.com/docs/DOC-3505-blackboard-exemplary-course-program-rubric

California State University. (2019). CSU QLT course review instrument. Retrieved from http://courseredesign.csuprojects.org/wp/qualityassurance/qlt-informal-review/

California Virtual Campus-Online Education Initiative. (2018). CVC-OEI course design rubric. Retrieved from https://cvc.edu/wp-content/uploads/2018/10/CVC-OEI-Course-Design-Rubric-rev.10.2018.pdf

CAST. (2019). The UDL guidelines. Retrieved from http://udlguidelines.cast.org

Edutechnica. (2018, March 4). LMS data-Spring 2018 updates [Blog post]. Retrieved from http://edutechnica.com/2018/03/04/lms-data-spring-2018-updates/

Edutechnica. (2019, March 17). LMS data-Spring 2019 updates [Blog post]. Retrieved from https://edutechnica.com/2019/03/17/lms-data-spring-2019-updates/

Gordon, D. (2015, May 19). How UDL leads to personalized learning [Blog post]. eSchool News. Retrieved from https://www.eschoolnews.com/2015/05/19/udl-personalized-939/

Hall, T., Strangman, N., & Meyer, A. (2014). Differentiated instruction and implications for UDL implementation. Wakefield, MA: National Center on Accessing the General Curriculum. Retrieved from http://aem.cast.org/about/publications/2003/ncac-differentiated-instruction-udl.html#.WvIWky-ZPfY

Herrington, A., Herrington, J., Oliver, R., Stoney, S., & Willis, J. (2001). Quality guidelines for online courses: The development of an instrument to audit online units. In G. Kennedy, M. Keppell, C. McNaught, & T. Petrovic (Eds.), Meeting at the crossroads: Proceedings of ASCILITE 2001 (pp. 263-270). Melbourne: The University of Melbourne.

Hill, P. (2016, July 31). MarketsandMarkets: Getting the LMS market wrong [Blog post]. e-Literate. Retrieved from https://mfeldstein.com/marketsandmarkets-getting-lms-market-wrong/

Hosie, P., Schibeci, R., & Backhaus, A. (2005). A framework and checklists for evaluating online learning in higher education. Assessment & Evaluation in Higher Education, 30(5), 539-553. doi: 10.1080/02602930500187097

Illinois Online Network. (2018). Quality online course initiative. Retrieved from https://www.uis.edu/ion/resources/qoci/

iNACOL. (2016, February 17). What is personalized learning? [Blog post]. Retrieved from https://www.inacol.org/news/what-is-personalized-learning/

Instructure. (2018a, February 14). Canvas used by majority of the top 25 online bachelor's degree programs ranked by U.S. News & World Report [Press release]. Canvas. Retrieved from https://www.canvaslms.com/news/pr/canvas-used-by-majority-of-the-top-25-online-bachelors-degree-programs-ranked-by-u.s.-news-&-world-report&122662

Instructure. (2018b, June 29). Course evaluation checklist [Blog post]. Canvas. Retrieved from https://community.canvaslms.com/groups/designers/blog/2018/06/29/course-evaluation-checklist

Ismail, A. O., Mahmood, A. K., & Abdelmaboud, A. (2018). Factors influencing academic performance of students in blended and traditional domains. International Journal of Emerging Technologies in Learning (iJET), 13(02), 170-187. doi: 10.3991/ijet.v13i02.8031

Jaschik, S., & Lederman, D. (Eds.). (2018). Inside higher ed’s 2018 survey of faculty attitudes on technology. Inside Higher Ed. Retrieved from https://www.insidehighered.com/booklet/2018-survey-faculty-attitudes-technology

Lane, L. M. (2009). Insidious pedagogy: How course management systems affect teaching. First Monday, 14(10). doi: 10.5210/fm.v14i10.2530

Lederman, D. (2018, November 7). Online education ascends. Inside Digital Learning. Inside Higher Ed. Retrieved from https://www.insidehighered.com/digital-learning/article/2018/11/07/new-data-online-enrollments-grow-and-share-overall-enrollment

McClaskey, K. (2017, March). Personalization and UDL: A perfect match [Blog post]. ASCD. Retrieved from http://www.ascd.org/publications/educational-leadership/mar17/vol74/num06/Personalization-and-UDL@-A-Perfect-Match.aspx

Moore, M. G. (1989). Three types of interaction. American Journal of Distance Education, 3(2), 1-7. doi: 10.1080/08923648909526659

Quality Matters. (2018). Specific review standards from the QM higher education rubric, sixth edition. Retrieved from https://www.qualitymatters.org/sites/default/files/PDFs/StandardsfromtheQMHigherEducationRubric.pdf

Rose, D. H., Gravel, J. W., & Gordon, D. T. (2013). Universal design for learning. In L. Florian (Ed.), The SAGE handbook of special education: Two volume set (pp. 475-490). Thousand Oaks, CA: Sage Publications. Retrieved from https://goo.gl/L9ZRQp

Rose, D. H., & Meyer, A. (2002). Teaching every student in the digital age: Universal design for learning. Alexandria, VA: Association for Supervision and Curriculum Development. Retrieved from https://www.bcpss.org/bbcswebdav/institution/Resources/Summer%20TIMS/Section%205/A%20Teaching%20Every%20Student%20in%20the%20Digital%20Age.pdf

Seaman, J. E., Allen, I. E., & Seaman, J. (2018). Grade increase: Tracking distance education in the United States. Babson Park, MA: Babson Survey Research Group.

Shattuck, K. (2013). Results of review of the 2011-2013 research literature. Retrieved from https://www.qualitymatters.org/sites/default/files/research-docs-pdfs/2013-Literature-Review-Summary-Report.pdf

State University of New York. (2018). OSCQR (3rd ed.). Retrieved from https://bbsupport.sln.suny.edu/bbcswebdav/institution/OSCQR/OSCQR%20Assets/OSCQR%203rd%20Edition.pdf

U.S. News & World Report. (2018). Best online bachelor's programs. Retrieved from https://www.usnews.com/education/online-education/bachelors/rankings

Vai, M., & Sosulski, K. (2016). Essentials of online course design: A standards-based guide (2nd ed.). New York, NY: Routledge.

Online Course Design: A Review of the Canvas Course Evaluation Checklist by Sally Baldwin and Yu-Hui Ching is licensed under a Creative Commons Attribution 4.0 International License.