Volume 17, Number 5

Deepak Prasad¹,², Rajneel Totaram¹, and Tsuyoshi Usagawa²

¹The University of the South Pacific, ²Kumamoto University

Textbook costs have skyrocketed in recent years, putting textbooks beyond the reach of many students, but there are options that can mitigate this problem. Open textbooks, a type of open educational resource, have proven capable of making textbooks affordable to students. Few educational developments have been as promising as open textbooks for lowering costs for students. While the last five years have witnessed unparalleled interest and significant advances in the development and dissemination of open textbooks, one important aspect has, until now, remained unexplored: the practice of learning analytics for extracting information about how learners interact and learn with open textbooks, which is crucial for their evaluation and iterative improvement.

Learning analytics offers faster and more objective data collection and processing than traditional methods such as surveys and questionnaires. Most importantly, because it can provide direct evidence of learning, it presents an opportunity to enhance both learner performance and the learning environment. With such benefits on offer, it is hardly surprising that optimism about learning analytics is mounting. In practice, however, it has been pointed out that the technology needed to deliver this potential is still very much in its infancy, and this is certainly the case for open textbooks. Against this background, the main aim of our study was to develop a prototype open textbook learning analytics system that tracks individual learners’ online and offline interactions with their open textbooks in electronic publication (EPUB) format, and to present its development as building blocks for future work in this area. We conclude with a discussion of the practical implications of our work and directions for future work.

Keywords: open textbooks, learning analytics, open textbook analytics system, open educational resources, EPUB

It is no longer a secret (if, indeed, it ever was) that escalating costs are putting textbooks beyond the reach of many students. David Wiley, one of the pioneers of open education, was recently quoted in CNN Money Magazine as saying that “the degree of unaffordability is getting to the point that it's hurting learning” (Grinberg, 2014). In a survey of 2,039 university students, Senack (2014) reported that 65% had no choice but to opt out of buying a textbook because of its cost. Of those students, 94% admitted that doing so would negatively affect their grade in that course. These findings are consistent with several other studies (see, for example, Acker, 2011; N. Allen, 2011; Florida Virtual Campus, 2012; Graydon, Urbach-Buholz, & Kohen, 2011; Morris-Babb & Henderson, 2012; Prasad & Usagawa, 2014). Together they show that traditional textbooks have become less affordable for many students and, in some cases, a barrier to learning.

Despite the problems outlined above, there is no indication that textbook prices will decrease in the foreseeable future; on the contrary, trends point to further increases. Fortunately, open textbooks hold promise as a solution. Weller (2014) regards open textbooks, a type of open educational resource, as the form most amenable to the concept of open education, which is essentially about eliminating barriers to learning (Bates, 2015). The phrase open educational resource (OER) is an umbrella term for teaching, learning, or research materials that can be used without charge to support access to knowledge (Hewlett, 2013a). Within the OER context, “free” has two meanings. First, the material is openly available to anyone free of charge, either in the public domain or released under an open license such as a Creative Commons license. Second, it is made available with implicit permission for anyone to retain, reuse, revise, remix, and redistribute it (Center for Education Attainment and Innovation, 2015). By contrast, traditional textbooks are extremely expensive and are published under an All Rights Reserved model that restricts their use (Wiley, 2015).

Within the past few years, a growing body of literature has examined the potential cost savings and learning impacts of open textbooks. Senack (2014), for example, in a survey of 2,039 university students, indicated that open textbooks could save students an average of $100 per course. Wiley, Hilton, Ellington, and Hall (2012) studied open textbook adoption in three high school science courses. They found that open textbooks cost over 50% less than traditional textbooks and that there were no apparent differences (neither increase nor decrease) in the test scores of students who used open versus traditional textbooks. This finding was replicated by Allen, Guzman-Alvarez, Molinaro, and Larsen (2015). It contrasts with the findings of Hilton and Laman (2012): students who used open textbooks instead of traditional textbooks scored better on final examinations, achieved better course grades, and had higher retention rates. A study by Robinson, Fischer, Wiley, and Hilton (2014) also suggests that students who used open textbooks scored as well as, if not slightly better than, those who used traditional textbooks. Overall, these studies provide evidence that replacing traditional textbooks with open textbooks substantially reduces textbook costs without negatively affecting student learning. Consequently, demand for open textbooks is increasing.

As demand has grown, so too have efforts to develop and distribute open textbooks. Many of these efforts are funded through a combination of government, private, and philanthropic sources (Hewlett, 2013b). The amount of money invested in such projects is significant. Funders therefore require the usual information about impacts on cost and learning outcomes, and they are also increasingly asking for more rigorous information about how learners actually engage with their open textbooks: whether, when, how often, and to what degree. More specifically, as Stacey (2013) stresses, grant recipients are expected to use such data and evidence to plan and evaluate open textbook implementation. They are also expected to establish the effectiveness of learning designs, so that adjustments can be made to optimize learning (p. 78). According to Hilton (2016), such information is crucial to clarify what effect the “open” aspect of open textbooks has on learning, and to reveal whether and how open textbooks improve educational outcomes. Hilton (2016) notes that no research evidence demonstrates a strong “textbook effect.” He states that, in general, findings from research thus far (as reviewed in the previous paragraph) exhibit only a “small positive impact on student success, as measured by getting a C or better in the course, withdrawal and drop rates” (para. 1). Considered together, these voices indicate a need for new information that advances current understanding of how students learn with open textbooks, so that appropriate action can be taken to maximize learning.

The above discussion points to the need for more sophisticated methods of monitoring open textbook use to meet these information needs. New analytical methodologies, particularly learning analytics, have made fulfilling this requirement possible. Compared with more subjective research methods such as surveys and questionnaires, learning analytics can capture learners’ authentic interactions with their open textbooks in real time. This may improve understanding of what influences actual textbook usage behavior, which in turn may help improve the efficiency and effectiveness of open textbooks. Learning analytics can be used either as a standalone method or to complement traditional research methods. Moreover, learning analytics for open textbooks can provide new insights into several important questions:

- how to assess the textbook's impact on learning outcomes;
- whether student behavior, content composition, and learning design principles produce the intended learning outcomes;
- how strongly the amount of markup students make is associated with the relevance and difficulty of the corresponding content areas.

Despite these potential benefits, we know of no published studies on systems developed for open textbooks learning analytics. The main aim of this paper is therefore to close this gap. We present the development and functionality of an open textbooks learning analytics system that tracks individual learners’ interactions with open textbooks in electronic publication (EPUB) format, during both online and offline use. A distinctive feature of the proposed system is its ability to synchronize online and offline interaction data to a central database, allowing both instructors and designers to generate analyses in dashboard-style displays. The system was piloted using one open textbook with 66 users in a postgraduate university course, and it functioned properly throughout the pilot.

The remainder of this paper is organized as follows. It begins with a brief review of the literature on learning analytics, followed by a summary of the framework that guided our development. We then describe the techniques and tools used to develop the learning analytics system for open textbooks, which is the main focus of this paper. Details of a prototype of the proposed system, along with some results obtained, are presented next. The penultimate section discusses the results of the trial, and the final section concludes the paper and outlines future work.

The concept of learning analytics has been making headlines for some years now, firing up interest in the higher education community worldwide, yet a unified definition remains elusive. One frequently cited definition is “the measurement, collection, analysis and reporting of data about learners and their contexts, for the purposes of understanding and optimizing learning and the environments in which it occurs” (Siemens & Long, 2011, p. 34). In other words, learning analytics applies different analytical methods (e.g., descriptive, inferential, and predictive statistics) to the data that students leave as they interact with and within networked, technology-enhanced learning environments. The aim is to inform decisions about how to improve student learning. According to many observers, this concept is “poised to benefit students in previously impossible ways” (Willis, 2014). Put succinctly, “learning analytics is first and foremost concerned with learning” (Clow, 2013, p. 685); and “let’s not forget: learning analytics are about learning” (Gašević, Dawson, & Siemens, 2015, p. 64).

A survey of published research shows that learning analytics tactics have been applied in a variety of ways and found useful, some of which include identifying struggling students in need of academic support (Arnold & Pistilli, 2012; Cai, Lewis, & Higdon, 2015; Jayaprakash, Moody, Lauría, Regan, & Baron, 2014; Lonn, Aguilar, & Teasley, 2015; Macfadyen & Dawson, 2010); assessing the quality of online postings and debate (Ferguson & Shum, 2011; Ferguson, Wei, He, & Shum, 2013; Nistor et al., 2015; Wise, Zhao, & Hausknecht, 2014); visualizing usage behaviors, patterns, and engagement levels (Cruz-Benito, Therón, García-Peñalvo, & Lucas, 2015; Gómez-Aguilar, Hernández-García, García-Peñalvo, & Therón, 2015; Morris, Finnegan, & Wu, 2005; Scheffel et al., 2011); sending automated motivational and informative feedback messages (McKay, Miller, & Tritz, 2012; Tanes, Arnold, King, & Remnet, 2011); intelligent tutoring systems (Brooks, Greer, & Gutwin, 2014; Lovett, Meyer, & Thille, 2008; May, George, & Prévôt, 2011; Roll, Aleven, McLaren, & Koedinger, 2011); recommender systems for learning (Liu, Chang, & Tseng, 2013; Manouselis, Drachsler, Vuorikari, Hummel, & Koper, 2011); provoking reflection (Coopey, Shapiro, & Danahy, 2014); improving accuracy in grading (Reed, Watmough, & Duvall, 2015); and contributing to course redesign (Fritz, 2013).

Alongside the benefits and opportunities offered by learning analytics, researchers and practitioners have stressed the importance of protecting the privacy of student data. As Scheffel, Drachsler, Stoyanov, and Specht (2014) emphasize, the nascent state of learning analytics has given rise to “a number of legal, risk and ethical issues that should be taken into account when implementing LA at educational institutions” (p. 128). It is often said that such considerations lag behind practice, and this is indeed the case. Accordingly, many individual researchers and research groups have proposed ethical and privacy guidelines to direct the practice of learning analytics.

In June 2014, the Asilomar Convention for Learning Research in Higher Education outlined six principles (based on the 1973 Code of Fair Information Practices and the Belmont Report of 1979) to inform decisions about how to comply with privacy requirements in the use of digital learning data. The principles are: (1) respect for the rights and dignity of learners, (2) beneficence, (3) justice, (4) openness, (5) the humanity of learning, and (6) the need for continuous consideration of research ethics in the context of rapidly changing technology. In the same year, Pardo and Siemens (2014) identified four principles: (1) transparency, (2) student control over data, (3) security, and (4) accountability and assessment. Furthermore, Sclater (2014) reviewed 86 articles dealing with ethical and privacy concepts for learning analytics, including the two preceding publications. He found that the key principles their authors aimed to capture were “transparency, clarity, respect, user control, consent, access and accountability” (p. 3). In this context, it is worth noting that “a unified definition of privacy is elusive” (Pardo & Siemens, 2014, p. 442), just like the definition of learning analytics noted earlier.

While there is no unified definition of learning analytics or of its privacy practices, there is general agreement on one point. It is crucial for higher education institutions to embrace learning analytics strategies as a way to improve student learning, without violating students’ legal and moral rights.

An overview of the conceptual foundation guiding the development of the system is outlined in the next section.

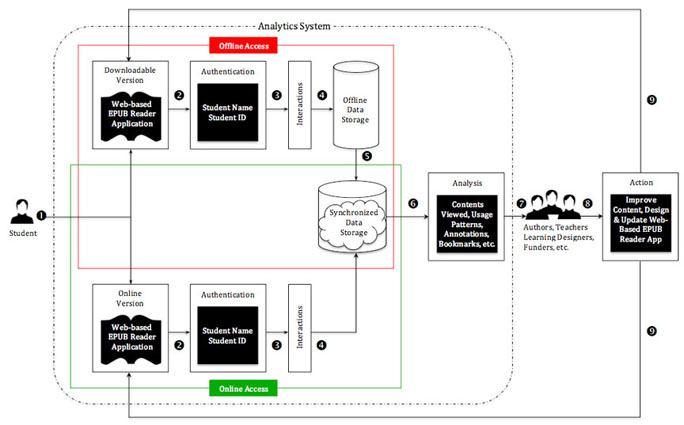

Developmental work was guided by the framework for an open textbooks analytics system that we proposed earlier (Prasad, Totaram, & Usagawa, 2016), shown in Figure 1. This framework supports textbooks in the EPUB format, which has become an international standard for digital books. In essence, an EPUB file is a ZIP-compressed archive of (X)HTML pages, image files, and supporting metadata, saved with the file extension .epub. Notably, the framework is not specific to open textbooks but is equally applicable to other EPUB digital books.

Figure 1. Open textbooks analytics system framework. Adapted from “A Framework for Open Textbooks Analytics System,” by D. Prasad, R. Totaram, and T. Usagawa, 2016, TechTrends 1–6. doi: 10.1007/s11528-016-0070-3.
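The Python sketch below makes the description of the format concrete. It uses only the standard library and is not part of the authors' system, which used JavaScript and PHP. It opens an EPUB as an ordinary ZIP archive and reads `META-INF/container.xml` to locate the package document. The folder and file names in the in-memory sample (`OEBPS/content.opf`, `OEBPS/chapter1.xhtml`) are common conventions assumed for illustration. The sample archive is deliberately minimal and is not a valid book.

```python
import io
import zipfile
import xml.etree.ElementTree as ET

# Namespace of META-INF/container.xml in the EPUB Open Container Format (OCF).
CONTAINER_NS = {"c": "urn:oasis:names:tc:opendocument:xmlns:container"}


def inspect_epub(source):
    """Return (mimetype, package document path, member names) for an EPUB.

    `source` may be a file path or a binary file-like object.
    """
    with zipfile.ZipFile(source) as zf:
        mimetype = zf.read("mimetype").decode("ascii").strip()
        container = ET.fromstring(zf.read("META-INF/container.xml"))
        rootfile = container.find("c:rootfiles/c:rootfile", CONTAINER_NS)
        if rootfile is None:
            raise ValueError("container.xml has no rootfile entry")
        return mimetype, rootfile.get("full-path"), zf.namelist()


def build_minimal_epub():
    """Build a tiny EPUB-style archive in memory (illustrative, not a valid book)."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as zf:
        # OCF requires the mimetype entry to come first, stored uncompressed.
        zf.writestr("mimetype", "application/epub+zip", compress_type=zipfile.ZIP_STORED)
        container_xml = (
            '<?xml version="1.0"?>'
            '<container version="1.0" '
            'xmlns="urn:oasis:names:tc:opendocument:xmlns:container">'
            '<rootfiles><rootfile full-path="OEBPS/content.opf" '
            'media-type="application/oebps-package+xml"/></rootfiles></container>'
        )
        zf.writestr("META-INF/container.xml", container_xml, compress_type=zipfile.ZIP_DEFLATED)
        zf.writestr("OEBPS/content.opf", "<package/>", compress_type=zipfile.ZIP_DEFLATED)
        zf.writestr("OEBPS/chapter1.xhtml", "<html><body><p>Chapter 1</p></body></html>",
                    compress_type=zipfile.ZIP_DEFLATED)
    buf.seek(0)
    return buf
```

Calling `inspect_epub(build_minimal_epub())` returns three things: the mimetype `application/epub+zip`, the package path `OEBPS/content.opf`, and the list of archive members.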

As illustrated by Figure 1, the framework consists of a nine-step approach beginning with students' initial contact with the text. The figure also illustrates two separate branches of process flow, one for online access and the other for offline access. All nine steps depicted within the framework, including certain stage-specific mandatory technical requirements, are summarized stepwise as follows:

Based on the framework presented above, this section describes the methods and technologies used to develop the learning analytics system for open textbooks in EPUB format. It is divided into two subsections: data collection, and data analysis and presentation.

Data recording. Reading books in EPUB format requires an EPUB reader application. In line with the framework's suggestions, we adopted EPUB.js (https://github.com/futurepress/epub.js), an open source web-based EPUB reader, and customized it as the central data collection tool. EPUB.js could already record user clicks and annotation data in the local storage of the web browser used to access the application. We extended these capabilities to record a variety of other data, such as the user’s IP address, web browser type and version, and type of device. Following these modifications, the book's EPUB file was embedded in the reader application for standard data collection. Figure 2 shows the user interface of the customized EPUB.js reader, which was used for both online and offline delivery.

For online use, the customized reader was hosted on a web server accessible via the Internet from any web browser. For offline access, an application installer was created for the Windows platform, as the majority of users had Windows-based computers. This installer placed the customized reader on users’ computers (Figure 3). However, offline access was limited to a single web browser, Mozilla Firefox (Figure 4). The reason is that the customized reader used JavaScript to send user data to an external data storage server, and most web browsers blocked this as a potential security risk. In offline mode, the interaction recording features were therefore incompatible with most browsers. Further development is required to make the code compatible with other web browsers.

Figure 2. Customized EPUB.js reader user interface.

Figure 3. Textbook shortcut in the Start menu.

Figure 4. Required browser for offline access.
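The following Python sketch shows the kind of event record the extended reader might capture. It is a hypothetical model, not the authors' JavaScript:

- A plain dictionary stands in for the browser's localStorage. Because localStorage holds only strings, the queue is JSON-encoded.
- The storage key `pending_events` is invented.
- The substring-based user-agent classification is a crude heuristic.
- The IP address is omitted, on the assumption that a server would normally read it from the incoming request.

```python
import json
from datetime import datetime, timezone


def classify_user_agent(user_agent):
    """Crude, hypothetical browser/device classification from a User-Agent string.

    Substring checks like these misclassify some agents; a maintained parser
    would be preferable in practice.
    """
    ua = user_agent.lower()
    # Order matters: Chrome UAs also mention Safari, and Edge UAs also mention Chrome.
    if "firefox/" in ua:
        browser = "Firefox"
    elif "edg/" in ua:
        browser = "Edge"
    elif "chrome/" in ua:
        browser = "Chrome"
    elif "safari/" in ua:
        browser = "Safari"
    else:
        browser = "Other"
    if "ipad" in ua or "tablet" in ua:
        device = "tablet"
    elif "mobi" in ua:
        device = "mobile"
    else:
        device = "desktop"
    return browser, device


def record_event(local_store, chapter, action_type, url, user_agent, note=None, online=True):
    """Append one interaction (Table 1 fields minus user and IP) to the local queue."""
    browser, device = classify_user_agent(user_agent)
    event = {
        "chapter": chapter,
        "type": action_type,
        "url": url,
        "note": note,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "online_status": online,
        "device": device,
        "browser": browser,
    }
    # localStorage holds only strings, so the queue is kept as a JSON-encoded list.
    queue = json.loads(local_store.get("pending_events", "[]"))
    queue.append(event)
    local_store["pending_events"] = json.dumps(queue)
    return event
```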

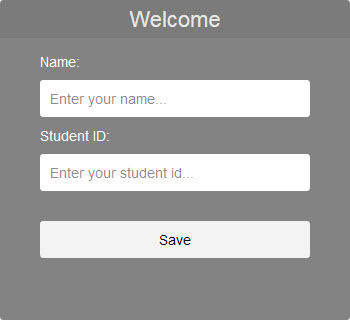

User authentication. A simple authentication system was designed to distinguish and track unique users and their behavior. Figure 5 shows the simple authentication interface. This is the first screen shown when the customized EPUB.js reader application is initiated by the user, requiring the user to enter their name and student ID number. These credentials are stored in the local storage of the user's browser, and all user-interaction data sent to the server (and subsequently processed) is tagged with the user’s authentication details.

Figure 5. Authentication interface.
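Below is a minimal Python sketch of the credential handling described above. As in the previous sketch, a dictionary stands in for browser local storage. The function names and the `user` storage key are hypothetical. As in the text, this scheme identifies users rather than securely authenticating them, since no password or server-side check is involved.

```python
import json


def sign_in(local_store, student_id, student_name=""):
    """Store the user's ID and (optional) name in simulated browser local storage."""
    student_id = student_id.strip()
    if not student_id:
        raise ValueError("student ID is required")
    local_store["user"] = json.dumps(
        {"student_id": student_id, "student_name": student_name.strip()}
    )


def tag_with_user(local_store, event):
    """Return a copy of an interaction event tagged with the signed-in user's details."""
    if "user" not in local_store:
        raise RuntimeError("no user signed in")
    user = json.loads(local_store["user"])
    return {**event, "student_id": user["student_id"], "student_name": user["student_name"]}
```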

Data synchronization. Data generated during offline use are stored in the browser’s local storage. To synchronize these data from the browser’s local storage to the server, a network-sensing feature was integrated into the EPUB.js reader. This feature checks for an Internet connection at regular intervals (in our case, every 60 seconds). Whenever a connection is detected, data from the browser's local storage are sent to the central database server, where they are used for analytics. In online mode, by contrast, interaction data are sent directly to the database.
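The synchronization step can be sketched in Python as follows. The connectivity check and the upload are passed in as functions (`is_online`, `send_batch`) because the paper does not describe how either was implemented. In a browser, `navigator.onLine` is one possible signal, though it can report true when the Internet is not actually reachable. Events are removed from the local queue only after a successful upload, and only the events that were sent, so events recorded during an upload are kept.

```python
import json
import time


def sync_once(local_store, is_online, send_batch):
    """Upload queued offline events if a connection is available.

    Returns the number of events uploaded. Only the events that were actually
    sent are removed, and only after send_batch reports success.
    """
    queue = json.loads(local_store.get("pending_events", "[]"))
    if not queue or not is_online():
        return 0
    if not send_batch(queue):
        return 0  # keep everything; retry on the next tick
    # Re-read the queue in case new events were appended during the upload.
    current = json.loads(local_store.get("pending_events", "[]"))
    local_store["pending_events"] = json.dumps(current[len(queue):])
    return len(queue)


def run_sync_loop(local_store, is_online, send_batch, interval_s=60, max_ticks=None):
    """Call sync_once every interval_s seconds (60 s in the pilot described above)."""
    ticks = 0
    while max_ticks is None or ticks < max_ticks:
        sync_once(local_store, is_online, send_batch)
        ticks += 1
        time.sleep(interval_s)
```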

Data storage. A central database server, comprising a PHP script and a MySQL database, was used for data storage. The PHP script waits for interaction data from the EPUB reader application. On receiving new data, it validates them and records them in the MySQL database. Table 1 lists the data fields recorded for each user interaction.

Table 1

Data Recorded for Each User Interaction

| Field name | Comments |
| --- | --- |
| student_id | Unique ID to distinguish a user |
| student_name | Name of the user (optional) |
| chapter | Title of the chapter |
| type | Type of action – page view, jump to chapter, bookmark action, hyperlink click or annotation |
| url | URL of the book page |
| note | User notes/annotation |
| timestamp | Timestamp of user action |
| ip_address | IP address of the user’s device, if accessing online |
| online_status | Flag to determine online/offline access |
| device | Type of device used to access book |
| browser | Web browser used to access book |
| epubdata | Additional data recorded by the epub reader application (for future use) |
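Table 1 can be mapped onto a single relational table. The sketch below is hypothetical in three ways:

- It uses Python with SQLite in place of the PHP script and MySQL database, so it runs without a server.
- The validation rules are assumptions, since the paper does not specify what checks were made. They cover required fields, the allowed action-type strings, and ISO 8601 timestamps.
- The IP address is kept only for online records.

Values are bound as query parameters rather than pasted into the SQL text, which guards against SQL injection.

```python
import sqlite3
from datetime import datetime

SCHEMA = """
CREATE TABLE IF NOT EXISTS interactions (
    id            INTEGER PRIMARY KEY AUTOINCREMENT,
    student_id    TEXT NOT NULL,
    student_name  TEXT,
    chapter       TEXT NOT NULL,
    type          TEXT NOT NULL,
    url           TEXT NOT NULL,
    note          TEXT,
    timestamp     TEXT NOT NULL,  -- ISO 8601
    ip_address    TEXT,
    online_status INTEGER NOT NULL CHECK (online_status IN (0, 1)),
    device        TEXT,
    browser       TEXT,
    epubdata      TEXT            -- reserved for future use
)
"""
COLUMNS = ("student_id", "student_name", "chapter", "type", "url", "note", "timestamp",
           "ip_address", "online_status", "device", "browser", "epubdata")
# Action types named in Table 1; the exact stored strings are an assumption.
ALLOWED_TYPES = {"page view", "jump to chapter", "bookmark action", "hyperlink click", "annotation"}
REQUIRED = ("student_id", "chapter", "type", "url", "timestamp")


def validate_interaction(record):
    """Return a list of problems with an incoming record (empty list if valid)."""
    problems = [f"missing field: {f}" for f in REQUIRED
                if not isinstance(record.get(f), str) or not record[f].strip()]
    if record.get("type") not in ALLOWED_TYPES:
        problems.append(f"unknown action type: {record.get('type')!r}")
    try:
        datetime.fromisoformat(str(record.get("timestamp")))
    except ValueError:
        problems.append("unparseable timestamp")
    return problems


def store_interaction(conn, record, client_ip=None):
    """Validate one record and insert it with a parameterized query."""
    problems = validate_interaction(record)
    if problems:
        raise ValueError("; ".join(problems))
    row = dict(record)
    row["online_status"] = int(bool(row.get("online_status")))
    # The server sees the client's address; keep it only for online access (Table 1).
    row["ip_address"] = client_ip if row["online_status"] else None
    placeholders = ", ".join("?" for _ in COLUMNS)  # column names are fixed constants
    conn.execute(f"INSERT INTO interactions ({', '.join(COLUMNS)}) VALUES ({placeholders})",
                 [row.get(c) for c in COLUMNS])
    conn.commit()
```

In use, `conn.execute(SCHEMA)` creates the table once. After that, each incoming record goes through `store_interaction`, and a `ValueError` lists every problem found.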

Analyses were performed on both individual and aggregate (whole-class) data for the various factors listed below. Interaction data were aggregated using SQL queries. The results were presented as graphical visualizations in dashboard format using PHP and a JavaScript charting library. Figure 6 shows a snapshot of the developed learning analytics dashboard.

From the data recorded, it was possible to perform the following analysis for any arbitrary date range:

Figure 6. Snapshot of the learning analytics dashboard.
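The hypothetical query below illustrates SQL-based aggregation over an arbitrary date range. It counts the distinct students who viewed each chapter, the quantity plotted in Figure 7. It rests on three assumptions:

- the table has the Table 1 columns;
- a "view" corresponds to a `page view` event;
- timestamps are stored as ISO 8601 strings in a single format and time zone, so text comparison orders them chronologically.

SQLite is used so the sketch runs without a MySQL server.

```python
import sqlite3

STUDENTS_PER_CHAPTER = """
SELECT chapter, COUNT(DISTINCT student_id) AS students
FROM interactions
WHERE type = 'page view'
  AND timestamp >= ? AND timestamp < ?
GROUP BY chapter
ORDER BY students DESC, chapter
"""


def students_per_chapter(conn: sqlite3.Connection, start: str, end: str):
    """Return [(chapter, n_students), ...] for page views with start <= timestamp < end."""
    return conn.execute(STUDENTS_PER_CHAPTER, (start, end)).fetchall()
```

Using a half-open interval (`>= start`, `< end`) lets consecutive date ranges be queried without double-counting events that fall exactly on a boundary.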

A prototype of the developed system was implemented at the University of the South Pacific for an open textbook of 17 chapters. The book was adopted for a 15-week postgraduate blended course in research methods. The course was assessed continuously, with no final exam, and had one two-hour lecture per week. The text was prescribed for the first 10 weeks of the semester, during which generic research methods were taught. The remainder of the course was dedicated to discipline-specific research methods, with its own set of learning materials. However, data were recorded and analyzed for the full 15 weeks to discern whether the text was still used during the non-prescribed period of the course.

For this trial, both offline and online versions of the text were made available at the beginning of the course via the Moodle course page. They were offered only to students who consented to their interaction data being recorded and used for this research project. A PDF version was also available to all students, particularly for those who preferred not to participate in the study. Students were informed of the following:

- participation was voluntary;
- their interaction data would be recorded non-anonymously;
- they could withdraw from the study at any time by informing the course coordinator;
- their course grade would not be affected if they chose not to participate.

A total of 66 of the 95 students in the class participated in the study. The overall results obtained from the system proved insightful and informative. A full analysis of the results is beyond the scope of this paper, which focuses on the development of the learning analytics system for open textbooks. However, some results obtained via usage tracking are presented below as graphs from the prototype learning analytics dashboard, together with their interpretation.

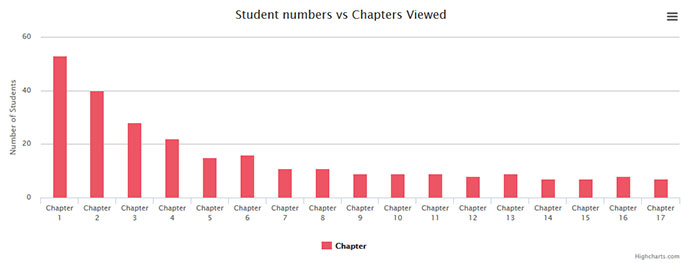

Figure 7 shows the number of students who viewed each of the book's 17 chapters over the 15 weeks of the course. As can be seen, Chapter 1 was viewed by the largest number of students, but not by all 66 participants. Indeed, no chapter, in either the online or offline version, was viewed by every participant. In addition, there is a striking difference between the first four chapters and the rest: the vast majority of chapters, namely Chapters 5–17, were viewed by fewer than 20 students.

Figure 7. Student numbers vs chapters viewed.

Figure 8 shows how many students viewed a given number of chapters during the course. The most important point to emerge from this figure is that only five students viewed all 17 chapters. A further point of interest is the considerable variation in the number of chapters the students accessed. Finally, the figure makes evident that the majority of students viewed fewer than a quarter of the book's chapters.

Figure 8. Number of students vs. number of chapters viewed.
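The distribution in Figure 8 can be derived from the same page-view events. In this hypothetical Python sketch, a roster of participant IDs is supplied so that students who never opened the book are counted with zero chapters. A plain SQL `GROUP BY` over the interaction table would silently omit them. With this result, the number of students who viewed every chapter is simply the count stored under 17.

```python
from collections import Counter


def chapters_viewed_distribution(views, roster):
    """Map 'number of distinct chapters viewed' -> 'number of students'.

    views:  iterable of (student_id, chapter) pairs taken from page-view events.
    roster: all participating student IDs, so students with no views count as 0.
    """
    chapters_by_student = {sid: set() for sid in roster}
    for sid, chapter in views:
        # IDs missing from the roster are still counted rather than dropped.
        chapters_by_student.setdefault(sid, set()).add(chapter)
    counts = Counter(len(chapters) for chapters in chapters_by_student.values())
    return dict(sorted(counts.items()))
```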

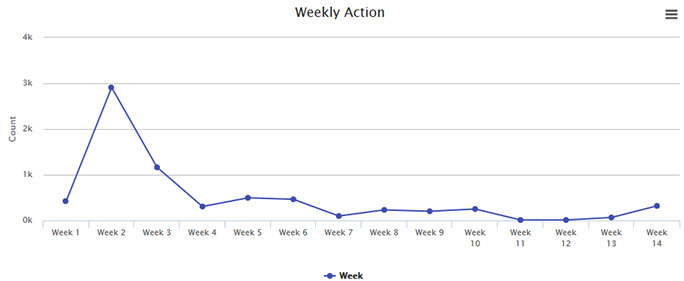

Figure 9 is a line graph of total interactions (total number of clicks on the textbook) by all students per week. Week 2 had the most activity, while no activity took place during Week 15, the last week of the course. On the whole, activity trended downward, with slight week-to-week fluctuations. Interestingly, although the book was prescribed only for the first 10 weeks of the course, interactions were recorded in Weeks 11–14.

Figure 9. Total weekly interactions.
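Weekly totals such as those in Figure 9 require mapping each timestamp to a course week. The paper does not say how weeks were defined, so this sketch assumes consecutive 7-day blocks starting on the first day of the course. Weeks with no recorded interactions are reported as zero, which is how an idle week such as Week 15 would appear. Interactions falling outside the 15-week window are ignored.

```python
from collections import Counter
from datetime import date, datetime


def course_week(ts: datetime, course_start: date) -> int:
    """1-based course week of a timestamp, counting 7-day blocks from course_start."""
    return (ts.date() - course_start).days // 7 + 1


def weekly_interactions(timestamps, course_start: date, n_weeks: int = 15) -> dict:
    """Count interactions (clicks) per course week, reporting empty weeks as 0."""
    counts = Counter(course_week(ts, course_start) for ts in timestamps)
    return {week: counts.get(week, 0) for week in range(1, n_weeks + 1)}
```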

Open textbooks are increasingly being developed and adopted as viable alternatives to expensive, traditional copyrighted publisher textbooks. Consistent with this notion, a number of recent studies have shown that adopting open textbooks in place of traditional textbooks can yield cost savings for students without impeding the achievement of learning outcomes. These results are encouraging, but additional studies are needed to uncover information in several key areas. These include how much and how often students read, when and where they read, how they engage with their open textbooks, and whether they use them at all.

The availability of such information can contribute to a better assessment of the return on investment in open textbook development, which in turn is essential for ensuring the growth of open textbooks. Furthermore, such information is important, if not essential, for evaluating and improving the effectiveness and efficiency of open textbooks. While this kind of information can be obtained through a learning analytics system for open textbooks, the area had previously remained unstudied. Accordingly, the current project was undertaken to develop such a system. Its development is described in this paper to stimulate discussion and further development in the field of open textbook learning analytics.

The newly developed system enables the recording, analysis, and presentation of the interaction data generated by students working with open textbooks. This work offers three main contributions to the state of the art of learning analytics for open textbooks:

1. The system is built for books in EPUB format, an open standard format for digital books. As such, it can also be used for other open and non-open educational resources published in EPUB format.
2. It uses the open source EPUB.js reader application for data capture, which is cost-effective and can be modified and adapted by anyone to meet specific needs.
3. Most importantly, the work presented in this paper lays the foundation for further development in this direction.

One limitation of the system, however, concerns the current configuration for offline reading: the downloadable version of the book is compatible only with the Mozilla Firefox web browser. The rationale for using this browser is given in the development section of this paper. This issue will be addressed in future work.

The utility of the system was piloted with an open textbook prescribed for the first 10 weeks of a 15-week postgraduate course on research methods at the University of the South Pacific. Sixty-six students volunteered to participate after being fully informed of the nature of the study in both written and oral form. Although the book was recommended for the first 10 weeks of the semester, the learning analytics system was kept running through the end of the semester to capture book usage beyond the prescribed period. The system's performance was monitored continuously throughout the trial, and no technical glitches or problems were detected, confirming the utility of the system.

The results of the pilot trial were both informative and evaluative, as they provide crucial information for a more nuanced analysis of the value of open textbooks. Owing to space constraints and the specific focus of this paper, the full results are not presented here and will be reported in a subsequent paper; excerpts were presented in the previous section in Figures 7 to 9. Taken together, these results show that Chapter 1 was the most viewed chapter in the book. Even so, no chapter, including Chapter 1, was viewed by all students, and only 5 of the 66 participants viewed all 17 chapters. Most textbook activity occurred during the second week of the semester, after which activity slowed considerably, although usage beyond the recommended period was also discernible. From these results alone, the versatility and potential of learning analytics for open textbooks are apparent.

More specifically, learning analytics for open textbooks and other open educational resources opens up a wide range of possibilities:

- optimizing textbook planning and development;
- monitoring the type and degree of usage;
- evaluating the breadth and depth of impact and effectiveness;
- informing revision strategies for improvement.

Additionally, the resulting datasets can themselves be made available as open educational resources in the form of open data, enabling new avenues of transformative research to enrich open textbook practices. Accordingly, both open textbook producers and their users will be able to engage in collaborative inquiry into the deeper pedagogical concepts associated with open textbooks.

In the current climate of open education, the need for learning analytics has only recently started to be recognized for open educational resources. Bier and Green (2015) captured this aptly in a presentation at the Open Education 2015 conference:

If open educational resources continue to be focused on the development of relatively static, textbook-like materials that are unable to engage with data-driven feedback loops, then the materials developed by closed approaches will rapidly outpace OER with regard to effectiveness and impact. This state of affairs will likely result in OER being relegated to second-class status... (para. 1)

In view of the above, although it may be too early to predict, we anticipate that learning analytics will play an important role as a key driver in mainstreaming open textbooks (and, more broadly, OER) in schools and colleges.

Acker, S. R. (2011). Digital textbooks: A state-level perspective on affordability and improved learning outcomes. Library Technology Reports, 47(8), 41–52.

Allen, G., Guzman-Alvarez, A., Molinaro, M., & Larsen, D. (2015). Assessing the impact and efficacy of the open-access ChemWiki textbook project. Retrieved from https://net.educause.edu/ir/library/pdf/elib1501.pdf

Allen, N. (2011). High prices prevent college students from buying assigned textbooks. Student PIRGs. Retrieved from http://www.studentpirgs.org/news/ap/high-prices-prevent-college-students-buying-assigned-textbooks

Arnold, K. E., & Pistilli, M. D. (2012). Course Signals at Purdue: Using learning analytics to increase student success. In S. B. Shum, D. Gašević, & R. Ferguson (Eds.), 2nd International Conference on Learning Analytics and Knowledge (LAK ’12) (pp. 267–270). New York, NY, USA: ACM. doi: 10.1145/2330601.2330666

Bates, T. (2015, February). What do we mean by “open” in education? [Web log post]. Retrieved from http://www.tonybates.ca/2015/02/16/what-do-we-mean-by-open-in-education/

Bier, N., & Green, C. (2015). Why open education demands open analytics. Retrieved from http://openeducation2015.sched.org/event/49J5/why-open-education-demands-open-analytic-models

Brooks, C., Greer, J., & Gutwin, C. (2014). The data-assisted approach to building intelligent technology-enhanced learning environments. In J. A. Larusson & B. White (Eds.), Learning analytics: From research to practice (pp. 123–156). New York: Springer.

Cai, Q., Lewis, C. L., & Higdon, J. (2015). Developing an early-alert system to promote student visits to tutor center. The Learning Assistance Review, 20(1), 61–72.

Center for Education Attainment and Innovation. (2015). Open textbooks: The current state of play. Retrieved from http://www.acenet.edu/news-room/Documents/Quick-Hits-Open-Textbooks.pdf

Clow, D. (2013). An overview of learning analytics. Teaching in Higher Education, 18(6), 683–695. doi: 10.1080/13562517.2013.827653

Coopey, E., Shapiro, R. B., & Danahy, E. (2014). Collaborative spatial classification. In Fourth International Conference on Learning Analytics and Knowledge (LAK ’14) (pp. 138–142). New York, NY, USA: ACM. doi: 10.1145/2567574.2567611

Cruz-Benito, J., Therón, R., García-Peñalvo, F. J., & Lucas, E. P. (2015). Discovering usage behaviors and engagement in an educational virtual world. Computers in Human Behavior, 47, 18–25. doi: 10.1016/j.chb.2014.11.028

Ferguson, R., & Shum, S. B. (2011). Learning analytics to identify exploratory dialogue within synchronous text chat. In 1st International Conference on Learning Analytics and Knowledge (LAK ’11) (pp. 99–103). New York, NY, USA: ACM. doi: 10.1145/2090116.2090130

Ferguson, R., Wei, Z., He, Y., & Shum, S. B. (2013). An evaluation of learning analytics to identify exploratory dialogue in online discussions. In Third International Conference on Learning Analytics and Knowledge (LAK ’13) (pp. 85–93). New York, NY, USA: ACM. doi: 10.1145/2460296.2460313

Florida Virtual Campus. (2012). 2012 Florida student textbook survey. Retrieved from http://www.openaccesstextbooks.org/pdf/2012_Florida_Student_Textbook_Survey.pdf

Fritz, J. (2013, April). Using analytics at UMBC: Encouraging student responsibility and identifying effective course designs. EDUCAUSE Center for Applied Research. Retrieved from https://net.educause.edu/ir/library/pdf/ERB1304.pdf

Gašević, D., Dawson, S., & Siemens, G. (2015). Let’s not forget: Learning analytics are about learning. TechTrends, 59(1), 64–71. doi: 10.1007/s11528-014-0822-x

Gómez-Aguilar, D. A., Hernández-García, Á., García-Peñalvo, F. J., & Therón, R. (2015). Tap into visual analysis of customization of grouping of activities in eLearning. Computers in Human Behavior, 47, 60–67. doi: 10.1016/j.chb.2014.11.001

Graydon, B., Urbach-Buholz, B., & Kohen, C. (2011). A study of four textbook distribution models. EDUCAUSE Quarterly, 34(4). Retrieved from http://www.educause.edu/ero/article/study-four-textbook-distribution-models

Grinberg, E. (2014, April 21). How some colleges are offering free textbooks. CNN Money. Retrieved from http://edition.cnn.com/2014/04/18/living/open-textbooks-online-education-resources/index.html

Hewlett. (2013a). Open educational resources. Retrieved from http://www.hewlett.org/programs/education/open-educational-resources

Hewlett. (2013b). White paper: Open educational resources. Retrieved from http://www.hewlett.org/sites/default/files/OER%20White%20Paper%20Nov%2022%202013%20Final_0.pdf

Hilton, J. (2016, February). How does the “Open” in OER improve student learning? [Web log post]. Retrieved from http://www.johnhiltoniii.org/how-does-the-open-in-oer-improve-student-learning/

Hilton, J., & Laman, C. (2012). One college’s use of an open psychology textbook. Open Learning: The Journal of Open, Distance and E-Learning, 27(3), 265–272. doi: 10.1080/02680513.2012.716657

Jayaprakash, S. M., Moody, E. W., Lauría, E. J. M., Regan, J. R., & Baron, J. D. (2014). Early alert of academically at-risk students: An open source analytics initiative. Journal of Learning Analytics, 1(1), 6–47.

Liu, C.-C., Chang, C.-J., & Tseng, J.-M. (2013). The effect of recommendation systems on Internet-based learning for different learners: A data mining analysis. British Journal of Educational Technology, 44(5), 758–773. doi: 10.1111/j.1467-8535.2012.01376.x

Lonn, S., Aguilar, S. J., & Teasley, S. D. (2015). Investigating student motivation in the context of a learning analytics intervention during a summer bridge program. Computers in Human Behavior, 47, 90–97. doi: 10.1016/j.chb.2014.07.013

Lovett, M., Meyer, O., & Thille, C. (2008). The open learning initiative: Measuring the effectiveness of the OLI statistics course in accelerating student learning. Journal of Interactive Media in Education, 2008(1), Art. 13. doi: 10.5334/2008-14

Macfadyen, L. P., & Dawson, S. (2010). Mining LMS data to develop an “early warning system” for educators: A proof of concept. Computers & Education, 54(2), 588–599.

Manouselis, N., Drachsler, H., Vuorikari, R., Hummel, H., & Koper, R. (2011). Recommender systems in technology enhanced learning. In F. Ricci, L. Rokach, B. Shapira, & P. B. Kantor (Eds.), Recommender systems handbook (pp. 387–415). US: Springer. doi: 10.1007/978-0-387-85820-3_12

May, M., George, S., & Prévôt, P. (2011). TrAVis to enhance online tutoring and learning activities: Real‐time visualization of students tracking data. Interactive Technology and Smart Education, 8(1), 52–69. doi: 10.1108/17415651111125513

McKay, T., Miller, K., & Tritz, J. (2012). What to do with actionable intelligence: E2Coach as an intervention engine. In S. B. Shum, D. Gašević, & R. Ferguson (Eds.), 2nd International Conference on Learning Analytics and Knowledge (LAK ’12) (pp. 88–91). New York, NY, USA: ACM. doi: 10.1145/2330601.2330627

Morris, L. V., Finnegan, C., & Wu, S.-S. (2005). Tracking student behavior, persistence, and achievement in online courses. Internet and Higher Education, 8(3), 221–231.

Morris-Babb, M., & Henderson, S. (2012). An experiment in open-access textbook publishing: Changing the world one textbook at a time. Journal of Scholarly Publishing, 43(2), 148–155. doi: 10.3138/jsp.43.2.148

Nistor, N., Trăuşan-Matu, Ş., Dascălu, M., Duttweiler, H., Chiru, C., Baltes, B., & Smeaton, G. (2015). Finding student-centered open learning environments on the internet: Automated dialogue assessment in academic virtual communities of practice. Computers in Human Behavior, 47, 119–127. doi: 10.1016/j.chb.2014.07.029

Pardo, A., & Siemens, G. (2014). Ethical and privacy principles for learning analytics. British Journal of Educational Technology, 45(3), 438–450. doi: 10.1111/bjet.12152

Prasad, D., Totaram, R., & Usagawa, T. (2016). A framework for open textbooks analytics system. TechTrends, 1–6. doi: 10.1007/s11528-016-0070-3

Prasad, D., & Usagawa, T. (2014). Scoping the possibilities: Student preferences towards open textbooks adoption for e-learning. Creative Education, 5(24), 2027–2040. doi: 10.4236/ce.2014.524227

Reed, P., Watmough, S., & Duvall, P. (2015). Assessment analytics using Turnitin & GradeMark in an undergraduate medical curriculum. Journal of Perspectives in Applied Academic Practice, 3(2), 92–108. doi: 10.14297/jpaap.v3i2.159

Robinson, T. J., Fischer, L., Wiley, D., & Hilton, J. (2014). The impact of open textbooks on secondary science learning outcomes. Educational Researcher, 43(7), 341–351. doi: 10.3102/0013189X14550275

Roll, I., Aleven, V., McLaren, B. M., & Koedinger, K. R. (2011). Improving students’ help-seeking skills using metacognitive feedback in an intelligent tutoring system. Learning and Instruction, 21(2), 267–280. doi: 10.1016/j.learninstruc.2010.07.004

Scheffel, M., Drachsler, H., Stoyanov, S., & Specht, M. (2014). Quality indicators for learning analytics. Educational Technology & Society, 17(4), 117–132.

Scheffel, M., Niemann, K., Pardo, A., Leony, D., Friedrich, M., Schmidt, K., ... Kloos, C. (2011). Usage pattern recognition in student activities. In C. Kloos, D. Gillet, R. Crespo García, F. Wild, & M. Wolpers (Eds.), Towards ubiquitous learning (Vol. 6964, pp. 341–355). Berlin Heidelberg: Springer. doi: 10.1007/978-3-642-23985-4_27

Sclater, N. (2014). Code of practice for learning analytics: A literature review of the ethical and legal issues. Retrieved from http://repository.jisc.ac.uk/5661/1/Learning_Analytics_A-_Literature_Review.pdf

Senack, E. (2014). Fixing the broken textbook market: How students respond to high textbook costs and demand alternatives. Retrieved from http://www.washpirg.org/sites/pirg/files/reports/1.27.14%20Fixing%20Broken%20Textbooks%20Report.pdf

Siemens, G., & Long, P. (2011). Penetrating the fog: Analytics in learning and education. EDUCAUSE Review, 46(5), 30–40.

Stacey, P. (2013). Government support for open educational resources: Policy, funding, and strategies. The International Review of Research in Open and Distributed Learning, 14(2), 67–80. Retrieved from http://www.irrodl.org/index.php/irrodl/article/view/1537/2481

Tanes, Z., Arnold, K. E., King, A. S., & Remnet, M. A. (2011). Using Signals for appropriate feedback: Perceptions and practices. Computers & Education, 57(4), 2414–2422. doi: 10.1016/j.compedu.2011.05.016

Weller, M. (2014). Battle for open: How openness won and why it doesn’t feel like victory. London: Ubiquity Press. doi: 10.5334/bam

Wiley, D. (2015, May 20). On the relationship between OER adoption initiatives and libraries [Web log post]. Retrieved from http://opencontent.org/blog/archives/3883

Wiley, D., Hilton, J., Ellington, S., & Hall, T. (2012). A preliminary examination of the cost savings and learning impacts of using open textbooks in middle and high school science classes. The International Review of Research in Open and Distributed Learning, 13(3), 262–276. Retrieved from http://www.irrodl.org/index.php/irrodl/article/view/1153/2256

Willis, J. E. (2014). Learning analytics and ethics: A framework beyond utilitarianism. EDUCAUSE Review Online. Retrieved from http://er.educause.edu/articles/2014/8/learning-analytics-and-ethics-a-framework-beyond-utilitarianism

Wise, A. F., Zhao, Y., & Hausknecht, S. N. (2014). Learning analytics for online discussions: Embedded and extracted approaches. Journal of Learning Analytics, 1(2), 48–71.

Development of Open Textbooks Learning Analytics System by Deepak Prasad, Rajneel Totaram, and Tsuyoshi Usagawa is licensed under a Creative Commons Attribution 4.0 International License.