J. Buckley Harrison and Richard E. West

Brigham Young University, United States

This design-based research study explored whether sense of community was maintained while flexibility in the course was increased through an adoption of a unique blended learning model. Data collected in this study show a significant drop in the sense of connectedness score from a mean of 50.8 out of 66 to a mean of 39.68 in the first iteration. The score then began to gradually increase, reaching 50.65 in the third iteration. Results indicate that transitioning to a blended learning environment may be a suitable option to increase flexibility while maintaining a sense of community in a project-based course. Future research into specific aspects of course design such as maturity of design, age-level of participants, and context would further develop understanding in this area.

Keywords: Blended learning; sense of community; design-based research; connectedness

In recent years, blended learning adoption has increased rapidly (Graham, Woodfield & Harrison, 2013). Commonly defined as “learning experiences that combine face-to-face and online instruction” (Graham, 2012, p. 7), blended learning is adopted primarily for three reasons: (a) improved pedagogy, (b) increased access/flexibility, and (c) increased cost effectiveness (Bonk & Graham, 2006). The access that blended learning provides goes beyond physical distance, also allowing greater flexibility in when both the student and the instructor engage in a course (Picciano, 2006). This increased flexibility can give instructors more individualized time to spend with students who are struggling in a course. However, although blended learning offers solutions to a rigid course structure, the introduction of online instruction may bring challenges of its own.

One concern in moving to a blended environment is that a lack of in-person experience could diminish students’ overall sense of community (SOC) and social presence in the class. Aragon (2003) defined social presence as salient interaction with a “real person” (p. 60) and stressed its importance to SOC, stating that “social presence is one of the most significant factors in improving instructional effectiveness and building a sense of community” (p. 57). Stodel, Thompson, and MacDonald (2006) observed diminished SOC while researching social presence in their online course, noting that “although there were indicators of social presence” it appeared “that [social presence was] still what the learners missed most when learning online” (p. 8). Rovai (2001) likewise argued for the necessity of social presence in building a strong sense of community, urging that “instructors must deliberately structure interactions to overcome the potential lack of social presence” (p. 290) in an online course; in Rovai’s view, as social presence goes down, so does SOC. This, in turn, can affect student learning. In a course for professional educators on teaching and learning online, Wegerif (1998) found that “individual success or failure on the course depended upon the extent to which students were able to cross a threshold from feeling like outsiders to feeling like insiders” (p. 34). Rovai (2002c) later found a significant relationship between students’ perceptions of their sense of community and their cognitive learning. Given the importance of a strong SOC, it is necessary to understand how transitioning a course to a blended format affects this psychological construct.

In order to understand issues surrounding sense of community and how it can relate to online learning, we will first review the literature regarding social interactions in distance education in general and how these interactions are part of establishing a SOC among students. Second, we will review the literature regarding the importance of SOC.

Rovai (2001) claimed that Moore’s (1991) theory of transactional distance was especially helpful in understanding online learners’ SOC. In this theory, Moore stated that special consideration should be given to dialogue and structure in order to mitigate the negative impact of distance education. Moore determined that “the success of distance teaching is the extent to which the institution and the individual instructor are able to provide the appropriate opportunity for, and quality of, dialogue between teacher and learner, as well as appropriately structured learning materials” (p. 5). Similarly, Rovai (2001) offered two considerations on structure and dialogue with regard to SOC. First, Rovai argued that because additional structure tends to increase psychological distance, it in turn decreases SOC. Second, by using communications media appropriately, instructors could increase dialogue and reduce transactional distance, which would theoretically increase SOC (p. 289). To better understand these considerations, each of Moore’s (1989) three elements of interaction is discussed in turn, along with a fourth element added by Bouhnik and Marcus (2006). This fourth element is similar to the learner-interface element discussed by Hillman, Willis, and Gunawardena (1994). These aspects of learning interactions include (a) interaction with content; (b) interaction with the instructor; (c) interaction with other students; and (d) interaction with the system.

Interaction with content occurs as learners are exposed to new information and attempt to integrate it with their previous knowledge of the subject. In today’s technological landscape, this interaction could take place online or face-to-face, and alone, with peers, or with a teacher. Moore (1989) argued that interaction with content is the “defining characteristic of education” since without it there could be no education (p. 1). According to Moore, it is the process of “interacting with content that results in changes in the learner’s understanding.”

When discussing transactional distance, Moore (1989) warned that physical distance between the learner and the teacher may result in a psychological and communication gap between them. Moore discussed how a teacher could provide effective support, motivation, clarity, and experience with the material and concluded that interaction with the instructor is most valuable during testing and feedback. This interaction leads to better application of new knowledge by the learner.

Hara and Kling (2001) found that students were more likely to feel frustration, anxiety, and confusion when taking an online class if they encountered communication problems. In particular, during an ethnographic study of a small, graduate-level online distance education course, they found that lack of communication with the teacher produced stress in students. They stated “students reported confusion, anxiety, and frustration due to the perceived lack of prompt or clear feedback from the instructor, and from ambiguous instructions” (p. 68).

From a voluntary survey of 699 undergraduate and graduate online students at a mid-sized regional university, Baker (2010) found that instructor presence had a statistically significant positive impact on affective learning, cognition, and motivation. Here, instructor presence was described as being actively engaged in an online discussion, providing quick and personal feedback on assignments, or being available frequently throughout the course (p. 6).

Moore (1989) was also concerned with the transactional distance between the students themselves. Moore posited that inter-learner interaction could become an “extremely valuable resource for learning” (p. 2). Although such interaction is important, Moore observed that it was most impactful among younger learners and less important for most adult and advanced learners, who are more self-motivated. This may be one reason why Baker (2010) found that instructor presence had a greater influence than peer presence on reducing frustration and increasing social presence (p. 23).

Bouhnik and Marcus (2006) added to Moore’s (1989) original three interactions by including the interaction students have with the system itself. They posited, “there is a need to make sure that the technology itself will remain transparent and will not create a psychological or functional barrier” (p. 303). If interaction with the system produced conflicts that were not resolved quickly, a student’s level of satisfaction and ability to accomplish learning outcomes could be negatively impacted. Specifically, when designing a technological system, Bouhnik and Marcus stressed the need for “building a support system, with maximum accessibility” (p. 303).

Building such a support system as Bouhnik and Marcus discussed can enable these four kinds of learner interactions to occur more easily. This, in turn, can increase a student’s sense of community (SOC). Sarason (1974), when coining the term, defined SOC as “the perception of similarity to others, an acknowledged interdependence with others, a willingness to maintain this interdependence by giving to or doing for others what one expects from them, and the feeling that one is part of a larger dependable and stable structure” (p. 157). Although Sarason coined the term, the concept has been the subject of much research, either directly or indirectly, over the last century (Glynn, 1981, p. 791). Because of its broad nature, research on SOC can be found in many fields of study. McMillan and George (1986) stressed the need for more research on and understanding of SOC in order to better inform public policy and “strengthen the social fabric” (p. 16) with more concrete solutions for increasing community in a variety of settings. Their hope was that research into SOC would foster open, accepting communities built on understanding and cooperation.

The concept of SOC is not new to distance education. While developing an instrument for measuring SOC in distance education, Rovai (2002a) defined SOC in education as something that occurs when “members of strong classroom communities have feelings of connectedness” (p. 198). He went on to mention that members “must have a motivated and responsible sense of belonging and believe that active participation in the community” (p. 199) could satisfy their needs.

In his review of the SOC research, Rovai (2002b) concluded that classroom community “can be constitutively defined in terms of four dimensions: spirit, trust, interaction, and commonality of expectation and goals” (p. 2). With regard to interaction, Rovai noted that if interaction could not occur in abundance, the focus should be on its quality. The instructor controls these interactions and should take care to mitigate negative interactions while strengthening SOC. Interactions with the instructor should include both feedback and more personal information (Rovai, 2002b).

Shea, Swan, and Pickett (2005) determined through regression analysis that SOC was also influenced by effective directed facilitation, instructional design, and student gender. Their survey of 2,036 students measured students’ perceptions of teaching presence and learning community. The more engaged an instructor seemed in the course, the stronger sense of community and belonging students felt (p. 71). Garrison (2007) cited similar issues when reviewing research on teaching presence in an online community of inquiry. He stated “that teaching presence is a significant determinate of student satisfaction, perceived learning, and sense of community” (p. 67). Baker (2010) stressed the need for further research into the impact of teacher presence in an online environment on the sense of community (p. 23).

However, addressing this need for research on online sense of community requires instruments and methods to detect SOC. One of the first instruments to objectively measure sense of community was developed by Glynn (1981) for face-to-face settings. Glynn argued that SOC could be identified through context-specific attitudes and behaviors, and uncovered 178 attitude and opinion statements that might be associated with SOC. One example was whether a community member felt that, in an emergency, they would have support. Glynn concluded that SOC is context-specific and that research should focus on the community level.

Rovai and Jordan (2004) more recently examined SOC in blended environments through a comparative analysis of traditional, online, and blended graduate courses. The traditional course covered educational collaboration and consultation. It did not use online technologies, relying instead on textbook activities, lectures, some group work, and authentic assessments for individual students. The blended course focused on legal and ethical issues in teaching students with disabilities and used both face-to-face and asynchronous online components; it began with a face-to-face session, with two more sessions spaced throughout the semester. The online course covered curriculum and instructional design and relied heavily on the institution’s learning management system to provide content and communication. Rovai and Jordan’s analysis used a 20-item Likert-scale survey with items such as “I feel isolated in this course” and “I feel that this course is like a family.” Participants, 68 graduate students each enrolled in one of the graduate-level education courses, self-reported their responses. All participants were full-time K-12 teachers seeking a master’s degree in education. Their findings suggested that SOC was strongest in the blended course, with the traditional course having the next strongest community.

Though Rovai and Jordan’s results were promising, they are not easily applied to all contexts. In their study, the courses were independent of one another. The traditional, blended, and online courses each focused on different subjects and were established in their respective educational modes, leaving open the possibility that differences in the data were due to the type of course the students took. Another possible source of difference was where the students resided relative to one another: in both the traditional and blended courses, students resided in the same geographic area, while the online course had a student population dispersed throughout the country. Finally, Rovai and Jordan’s work was published nearly a decade ago, and many technologies (such as video-based technologies and course management systems) have since emerged and evolved to provide powerful new ways of supporting human interactions in blended learning environments. Thus, it is important to update the work of these scholars and understand the nature of supporting students’ SOC in today’s learning environments.

In particular, very little literature explains the nature of transitioning a course from traditional to blended learning and how this transition affects students’ feelings of connection to their instructors and peers across the various iterations of the course. This is an important issue because, as online learning grows, more instructors and educational institutions are transitioning courses to online and blended settings. More research using approaches such as design-based research is needed to understand the iterative effects of these transitions. Design-based research (DBR) is a method of inquiry that is especially suited to this type of project because DBR strives to improve practice through designed interventions while increasing local and generalizable theory (Barab & Squire, 2004).

Thus, the purpose of this study was to carry out a design-based research agenda of producing an improved course for teaching preservice teachers technology integration skills in a blended learning environment while simultaneously seeking to understand the impact on SOC and student satisfaction. In line with design-based research, we began our study with loosely formed research questions supported by clear pedagogical expectations (Edelson, 2002, p. 106). Thus, our primary research question was how we could design the course so it would

Design-based research (DBR) is concerned with three areas of a learning environment: inputs of the system, outputs of the system, and the contribution of theory to the system (Brown, 1992). Literature has shown that DBR studies often have the following characteristics (The Design-Based Research Collective, 2003).

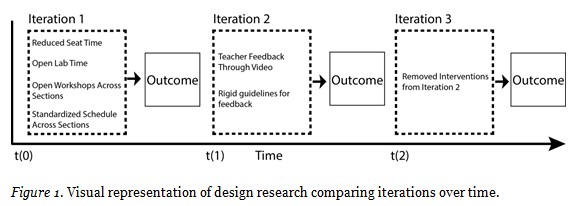

These characteristics informed our DBR study’s design and documentation by providing a framework to follow. In addition, Graham, Henrie, and Gibbons (2013) described various approaches to conducting research in blended learning that create new knowledge by exploring, explaining, or designing interventions. Our study fell within the design paradigm, and thus Graham et al.’s suggested model for studying design iterations was helpful. In their model, the authors argued that a study of each iteration should include a discussion of the core attributes affected by an intervention and a measurement of the outcome. This model fit the nature of our project well because we analyzed outcomes after each iteration and sought over time to develop a more effective course. Figure 1 is a representation of this study in the context of their model.

In following this model, we engaged in the following design iterations. These interventions are more fully explained in a subsequent section.

Interventions to the course in this iteration included the following:

Interventions to the course in this iteration included the following:

Interventions to the course in this iteration included the following:

Participants consisted of 247 preservice secondary education teachers enrolled in a technology integration course. This course teaches basic educational technology skills and practices to nearly all secondary education majors on campus. However, the course carries only one credit, which forces instructors to be as efficient as possible in their instruction. In addition, students enter the class with varied technological abilities: some need much scaffolding, while others are more able and eager to work at their own pace. In the course, students complete three major units: Internet Communications (where they typically create class websites), Multimedia (where they typically create instructional videos), and Personal Technology Projects (where they select a technology specifically useful to their discipline). They also complete smaller units related to copyright/Creative Commons, Internet safety, and mobile learning. Students take the course at various stages of their academic careers; many take it in their first semester as education majors, while others complete it nearer to graduation.

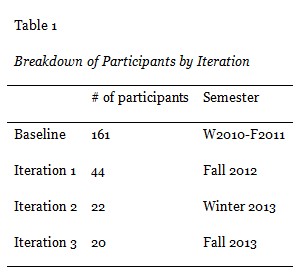

A breakdown of the participants is shown in Table 1. Two limitations in the data need clarification. First, sense of community data were not collected for the winter 2012 semester, as there was no major intervention at that time and we had sufficient baseline data; once the decision to move to a blended format was made, data collection resumed. Second, responses for Iteration 2 and Iteration 3 were unusually low, though enrollments in the course were on par with other semesters. We are unsure of the direct cause of this low response rate, although it is likely that the instructors for these iterations did not incentivize participation in the survey as previous instructors had. In each semester, four sections of the course participated in the study. The baseline consisted of participants’ data collected from four semesters, totaling 16 sections. Each iteration consisted of participants’ data collected from only one semester, totaling four sections each.

Students were placed into different sections of the course based on their major field of study. These fields included physical and biological science, social science, physical education, family and consumer education, and language arts. This grouping allowed each student to share with peers ideas for using technologies specifically in the context of their field of study.

A faculty member trained in educational technology, along with his mentored graduate students, taught the course. The faculty member taught two sections each semester, so the majority of students were taught by him. With input from the faculty member, the graduate students were given some autonomy to design and teach their sections. Graduate students typically taught for only two semesters, with new graduate students rotating in every semester.

Participants in the traditional face-to-face course were required to attend one hour each week. In order to complete their projects, they were expected to devote an additional two hours or more per week to their coursework. The course was divided into multiple units, one for each project or technological concept. Each unit typically consisted of an introductory class period with demonstration of the technology and discussion. Subsequent class sessions would be in a required lab setting where students could work on their projects and the class would have additional demonstrations in a workshop style. Some of the smaller units or units not specifically tied to learning a technology were only one week long, omitting the lab.

For example, the first unit taught an Internet communications technology. The first week of this unit was devoted to a teacher-led demonstration of a website-creation program, followed by discussion of where and how it could be used in the classroom. The next week was devoted to students working on a project that provided hands-on application of the program. If needed, the teacher would demonstrate additional features of the program to improve student understanding. The following week would begin a new unit, and the assignment involving the website program would be due. For units involving educational concepts such as Internet safety, where no new technology needed to be learned, one week was spent providing resources on the topic and holding a discussion, with the unit’s assignment due the following week.

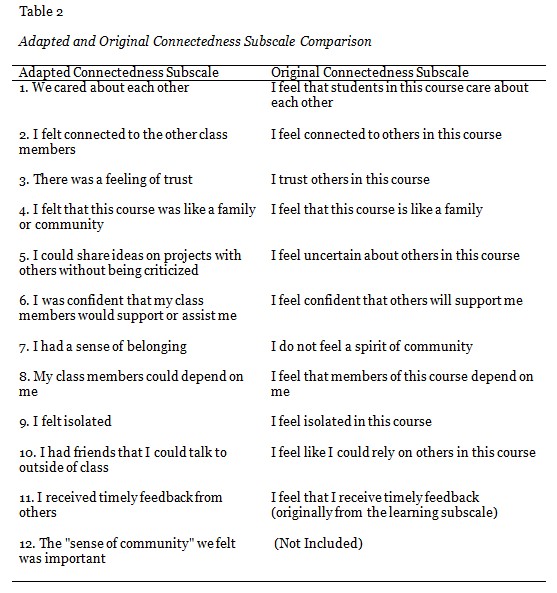

For this study we chose to adapt the Classroom Community Scale (CCS), developed by Rovai, in order to collect the necessary SOC data. The original scale from Rovai consisted of 20 items split equally into two subscales: the connectedness subscale and the learning subscale (Rovai, 2002b). A factor analysis of this scale performed by Rovai confirmed that the overall results of using the scale reflected the classroom community construct.

In order to better fit our specific objectives on measuring connections and trust, and to avoid student survey fatigue, we only used the connectedness subscale, and altered some of the items to better reflect the context of our course. For example, the item “I feel uncertain about others in this course” was too broad and vague for our context. It was altered to “I could share ideas on projects with others without being criticized” in order to measure a specific example of trust and connectedness with others. A seven-point response scale was used to allow for greater variance and to match other formative evaluation items asked of the students, instead of the five-point scale originally proposed by Rovai. One item was added to the survey that directly addressed the learner’s opinion on how important SOC is in the class. This item was not designed to measure the participants’ sense of connectedness and is thus not included in our connectedness score; however, responses to this item are included below to provide additional context within each iteration. These modifications were made prior to collecting data and were consistent throughout this study. Our adapted CCS is found in Table 2.

Participants in each iteration completed the adapted CCS as part of an end-of-course online survey. Responses were then compiled, and missing values were removed from the data. If a participant accidentally submitted two surveys, the most recent survey was kept and the other discarded. Only responses from participants who gave permission for inclusion in the research study were used.
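The cleaning steps above can be sketched as a small Python function. This is a minimal illustration, not the procedure we actually ran: the record layout (`participant_id`, `submitted_at`, `consent`, and an `items` list in which `None` marks a missing answer) is hypothetical, and treating "removing missing values" as dropping any survey with an unanswered item is an assumption. The steps are applied in the order described in the text.

```python
from __future__ import annotations


def clean_responses(records: list[dict]) -> list[dict]:
    """Hypothetical cleaning pass for end-of-course survey records.

    Assumed record keys: 'participant_id', 'submitted_at' (any sortable
    timestamp), 'consent' (bool), and 'items' (item responses, None = missing).
    """
    # 1. Remove surveys with missing item responses (assumption: listwise deletion).
    complete = [r for r in records if all(v is not None for v in r["items"])]

    # 2. If a participant submitted more than once, keep only the most recent survey.
    latest: dict[str, dict] = {}
    for r in complete:
        pid = r["participant_id"]
        if pid not in latest or r["submitted_at"] > latest[pid]["submitted_at"]:
            latest[pid] = r

    # 3. Keep only participants who gave permission for research use.
    return [r for r in latest.values() if r["consent"]]
```

Because duplicates are resolved before the consent filter, a participant whose most recent survey withholds permission is excluded entirely, which seems the conservative choice.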

To obtain the connectedness score, the weights of all items were summed, so total scores could range from 0 to 66. For all items except No. 9, the following scoring scale was used: strongly agree = 6, agree = 5, somewhat agree = 4, neutral = 3, somewhat disagree = 2, disagree = 1, strongly disagree = 0. For item No. 9, “I felt isolated,” the scoring scale was reversed so that, as with the other items, the most favorable choice received the highest value: strongly agree = 0, agree = 1, somewhat agree = 2, neutral = 3, somewhat disagree = 4, disagree = 5, strongly disagree = 6.
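A minimal Python sketch of this scoring rule follows. The response labels and weights come from the text; the function name and the dictionary input format are hypothetical, and the assumption of 11 connectedness items is only inferred from the 66-point maximum at 6 points per item.

```python
from __future__ import annotations

# Seven-point response scale mapped to the 0-6 weights described above.
WEIGHTS = {
    "strongly agree": 6,
    "agree": 5,
    "somewhat agree": 4,
    "neutral": 3,
    "somewhat disagree": 2,
    "disagree": 1,
    "strongly disagree": 0,
}

# Item No. 9, "I felt isolated," is reverse-scored.
REVERSED_ITEMS = {9}


def connectedness_score(responses: dict[int, str]) -> int:
    """Sum item weights for one participant (hypothetical helper).

    `responses` maps an item number (assumed 1-11) to the chosen label.
    """
    total = 0
    for item, label in responses.items():
        weight = WEIGHTS[label.lower()]
        if item in REVERSED_ITEMS:
            # Flip 0..6 to 6..0 so the most favorable answer scores highest.
            weight = 6 - weight
        total += weight
    return total
```

For example, a participant who strongly agrees with every item except No. 9, and strongly disagrees with No. 9, receives the maximum score of 66.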

Similarly, we obtained student ratings through a separate end-of-course survey provided by the institution. Students were asked to rate their experience in the course. The following scoring scale was used: Exceptionally Good = 7, Very Good = 6, Good = 5, Somewhat Good = 4, Somewhat Poor = 3, Poor = 2, Very Poor = 1, Exceptionally Poor = 0.

Once SOC data were collected, Levene’s test was used to assess homogeneity of variances, and a Shapiro-Wilk test was used to determine whether the data were normally distributed. Finally, a one-way ANOVA followed by Tukey post-hoc analysis was performed on the connectedness scores to look for SOC differences between iterations. Because we were comparing more than two groups, a one-way ANOVA was used rather than a t-test.
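To make the choice of test concrete, the sketch below computes the one-way ANOVA F statistic and its degrees of freedom by hand in Python. It is illustrative only and is not claimed to be the software used in this study; in practice a statistics package would be used (SciPy, for example, provides `scipy.stats.levene`, `scipy.stats.shapiro`, `scipy.stats.f_oneway`, `scipy.stats.kruskal`, and, in recent versions, `scipy.stats.tukey_hsd`). The function name and toy data are hypothetical.

```python
from __future__ import annotations

from statistics import fmean


def one_way_anova(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA.

    Each inner list holds the connectedness scores for one group,
    e.g. one list per iteration of the course.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = fmean(x for g in groups for x in g)

    # Between-groups sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Within-groups sum of squares: spread of scores around their own group mean.
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


f_stat, df_b, df_w = one_way_anova([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
print(f"F({df_b}, {df_w}) = {f_stat:.1f}")
```

With k groups and N scores in total, the degrees of freedom are k - 1 and N - k. A single F test across all groups avoids the inflated Type I error that would come from running many pairwise t-tests.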

To determine differences in student ratings, a one-way ANOVA was again used because of the multiple iterations. An independent-samples t-test was used to determine whether connectedness scores differed between participants with different perceptions of SOC importance. A t-test was chosen rather than a one-way ANOVA because we were comparing only two groups: those who agreed that SOC is important and those who did not.

In order to understand the findings in this study, we will begin by thoroughly explaining the different design iterations of the course, followed by the findings related to the sense of connectedness felt by students in each iteration. We will then discuss overall findings.

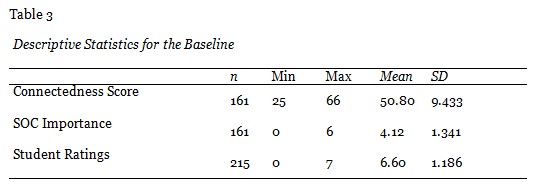

The mean and standard deviation for the baseline version of the course, for both the connectedness score and perception of SOC importance, are reported in Table 3. The connectedness score mean was 50.80 out of a possible 66. The SOC importance mean was 4.12 out of a possible 6. The student ratings mean was 6.60 out of a possible 8.

Several challenges were present in the traditional face-to-face model of this course, particularly related to time and space restrictions. The course was allotted only one credit hour, which left little time for demonstration, discussion, and application of the technologies students would most likely encounter in the classroom. Students’ technical abilities also varied greatly, which made it difficult to pace the course according to need. With limited time and resources, instructors were typically forced to pace the class to match the average technical ability. To address these challenges, we designed a blended model specifically for our needs. Our design removed the requirement for students to attend every class period, requiring attendance only on introductory days for new units. As a result, roughly 60% of the class time became optional for students. On the required in-class days, instructors typically used the time for discussion of the impact of technology or of technology-related concepts. Demonstrations of the specific technologies were moved online in the form of video tutorials.

Our design also turned the remaining class periods into labs that were open to all sections of the course, which gave students the opportunity to come and receive help every day that class was offered. Because lab attendance was optional, we encouraged or required it only for students who were struggling in the course. Instructors were then able to devote more of their time to assisting these students while the more capable students did their work off-campus.

The final design consideration affected the last few weeks of class when students worked on their personal technology projects—an activity where they selected a technology specific to their subject domain to learn. Instead of demonstrating one technology each week for students in our individual sections, which limited the technological options for student projects, we developed open workshops that any student from any section could attend. We required that each student attend at least two of these workshops. Now, instead of only 3-5 technology workshops available to any one student, they would have 12-15. These interventions increased flexibility in time and space for our students, as well as in choice. In order for this design to work, we standardized the schedule across all six sections so class time and instructors could be shared across sections.

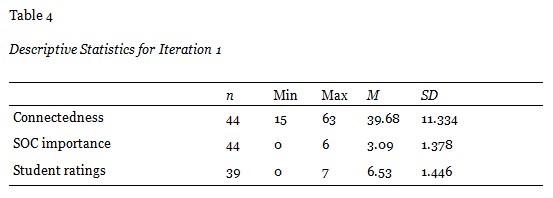

The mean and standard deviation of the connectedness score and perception of SOC importance for Iteration 1 are reported in Table 4. The student ratings mean was 6.53 out of a possible 8.

One intervention was added in the second iteration of the course. As instructors, we felt less connected with our students and worried students might feel the same, so we increased our usage of video recordings for assignment feedback (our assumption was that the use of video might improve perceptions of instructor social presence) along with an increased emphasis on developing a stronger relationship with each student. Specifically, each instructor followed the guidelines below when providing both video and text feedback for the three major assignments:

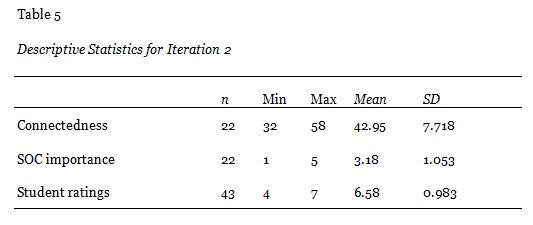

The mean and standard deviation of the connectedness score and perception of SOC importance are reported in Table 5. The student ratings mean was 6.58 out of a possible 8.

Although our increased attentiveness in providing feedback (establishing personal connections with students in prompt and positive ways) and our introduction of video feedback may have had a positive impact on SOC, the improvement did not seem to justify the additional time and effort this type of feedback required. At the same time, our blended learning model had matured, and we felt this maturity might account for the improved SOC, since we believed we had become better teachers than we had been at the beginning of the blended learning intervention. Thus, for the third iteration, we removed the requirement that instructors follow the rigid feedback guidelines described in Iteration 2. No other interventions were purposefully made to the course. There were minor changes to instructors and updates to course content; however, such changes had been common in every previous iteration of this course.

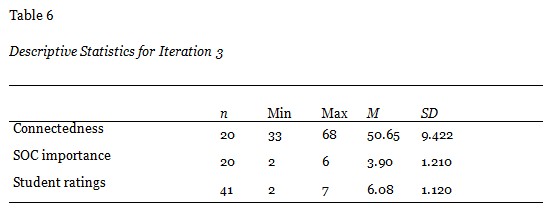

The mean and standard deviation of the connectedness score and perception of SOC importance are reported in Table 6. The student ratings mean was 6.08 out of a possible 8.

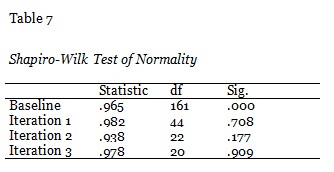

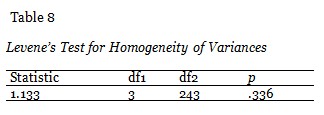

As assessed by the Shapiro-Wilk test, connectedness scores were not normally distributed for the Baseline, whereas scores for the three iterations were normally distributed (p > .05), as reported in Table 7. Variances were homogeneous, as assessed by Levene’s test of homogeneity of variances (p = .336), as reported in Table 8.

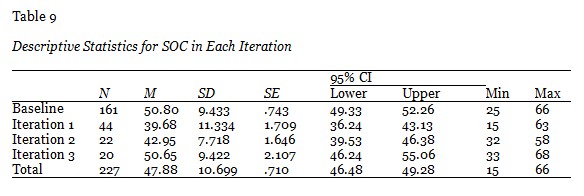

Overall, the SOC (connectedness score) decreased from the Baseline (M = 50.80, SD = 9.4) to Iteration 1 (M = 39.68, SD = 11.3), with a slight increase in Iteration 2 (M = 42.95, SD = 7.7). From Iteration 2 to Iteration 3, there was a larger increase in SOC (M = 50.65, SD = 9.4), as reported in Table 9.

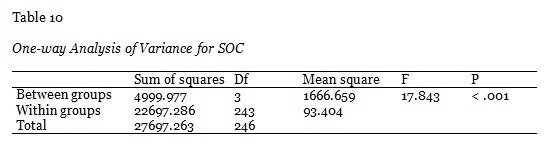

Because the connectedness scores were not normally distributed in every group (the Baseline departed from normality), both a one-way ANOVA and a Kruskal-Wallis test were conducted. The Kruskal-Wallis test led to the same conclusion as the one-way ANOVA, so only the one-way ANOVA results are reported. There was a statistically significant difference among the iterations, F(2,224) = 25.9, p < .001.
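To show how an F statistic like this arises, the one-way ANOVA can be recomputed from each group’s size, mean, and standard deviation alone. The Python sketch below is illustrative: `GroupSummary` and `anova_from_summary` are hypothetical names, each `sd` is assumed to be a sample standard deviation (n − 1 denominator), and the example numbers are made up rather than taken from the study. With k groups and N participants in total, the degrees of freedom are k − 1 and N − k.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class GroupSummary:
    n: int       # number of participants in the group
    mean: float  # group mean score
    sd: float    # sample standard deviation (n - 1 denominator)


def anova_from_summary(groups: Sequence[GroupSummary]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(g.n for g in groups)
    grand_mean = sum(g.n * g.mean for g in groups) / n_total
    # Between-group SS: size-weighted squared distance of each mean from the grand mean.
    ss_between = sum(g.n * (g.mean - grand_mean) ** 2 for g in groups)
    # Within-group SS: each group's sample variance times its degrees of freedom.
    ss_within = sum((g.n - 1) * g.sd ** 2 for g in groups)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical summaries for illustration only (not the study's data).
f_stat, df1, df2 = anova_from_summary([GroupSummary(10, 5.0, 1.0),
                                       GroupSummary(10, 6.0, 1.0)])
print(f"F({df1},{df2}) = {f_stat:.2f}")  # prints: F(1,18) = 5.00
```

The corresponding p-value can be obtained from the F distribution’s survival function, for example `scipy.stats.f.sf(f_stat, df1, df2)`.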

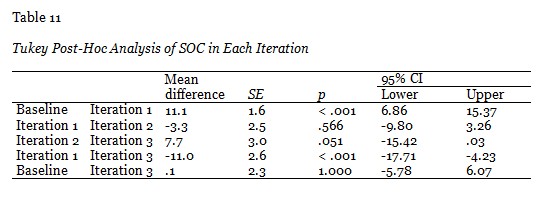

Tukey post hoc analysis (mean differences reported as the earlier group minus the later group) revealed that the decrease from the Baseline to Iteration 1 (11.1, 95% CI [6.9, 15.4]) was statistically significant (p < .001), while the increase from Iteration 1 to Iteration 2 (-3.3, 95% CI [-9.8, 3.3]) was not (p = .566). The increase from Iteration 2 to Iteration 3 (-7.7, 95% CI [-15.42, .03]) narrowly missed statistical significance (p = .051). Finally, the difference between the Baseline and Iteration 3 (.1, 95% CI [-5.8, 6.1]) was not statistically significant (p = 1.000).
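Because Tukey intervals are symmetric around the observed mean difference, the signs in the post hoc results can be checked against the group means reported above. The short Python check below uses only the means and intervals quoted in this section (the names `MEANS`, `REPORTED_CI`, and `mean_difference` are illustrative). It shows that each difference is computed as the earlier group minus the later group, which is why the rise from Iteration 2 to Iteration 3 appears as a negative value.

```python
from typing import Dict, Tuple

# Connectedness-score means quoted in the text (Table 9).
MEANS: Dict[str, float] = {
    "Baseline": 50.80,
    "Iteration 1": 39.68,
    "Iteration 2": 42.95,
    "Iteration 3": 50.65,
}

# Tukey 95% confidence intervals quoted in the text.
REPORTED_CI: Dict[Tuple[str, str], Tuple[float, float]] = {
    ("Baseline", "Iteration 1"): (6.9, 15.4),
    ("Iteration 1", "Iteration 2"): (-9.8, 3.3),
    ("Iteration 2", "Iteration 3"): (-15.42, 0.03),
    ("Baseline", "Iteration 3"): (-5.8, 6.1),
}


def mean_difference(earlier: str, later: str) -> float:
    """Mean difference as reported: earlier group minus later group."""
    return MEANS[earlier] - MEANS[later]


for (earlier, later), (lo, hi) in REPORTED_CI.items():
    diff = mean_difference(earlier, later)
    midpoint = (lo + hi) / 2  # a symmetric interval is centered on the difference
    print(f"{earlier} - {later}: difference {diff:+.2f}, CI midpoint {midpoint:+.3f}")
```

Each computed difference agrees with its interval’s midpoint to within the rounding of the published values.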

A one-way ANOVA indicated a marginally significant decline in student ratings from Iteration 2 to Iteration 3 (0.6, 95% CI [-.01, 1.3], p = .054). No significant differences were found among the Baseline, Iteration 1, and Iteration 2. The Baseline showed the highest mean rating (6.60), followed by Iteration 2 (6.58), Iteration 1 (6.53), and finally Iteration 3 (6.08).

An independent-samples t-test was run to determine whether participants’ views on the importance of SOC, as reported on an end-of-course survey item, were related to the SOC scores they provided. This item used the same scale as the other SOC items on the survey. Participants were divided into two groups. Group A consisted of participants who agreed that SOC was important, rating the item 4 or higher. Group B consisted of participants who disagreed that SOC was important, rating the item 2 or lower. Participants who chose to remain neutral were excluded from the comparison.
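The grouping rule can be expressed as a small Python function. This is a sketch under stated assumptions: responses are taken to be hypothetical `(importance_rating, soc_score)` pairs, ratings are assumed to be integers with 3 as the neutral midpoint, and the name `split_by_importance` is illustrative rather than part of the study’s procedure.

```python
from typing import Iterable, List, Tuple


def split_by_importance(
    responses: Iterable[Tuple[int, float]],
) -> Tuple[List[float], List[float]]:
    """Split (importance_rating, soc_score) pairs into Group A and Group B.

    Assumes integer ratings with 3 as the neutral midpoint.
    Group A: importance rated 4 or higher (agreed SOC is important).
    Group B: importance rated 2 or lower (disagreed).
    """
    group_a: List[float] = []
    group_b: List[float] = []
    for importance, soc_score in responses:
        if importance >= 4:
            group_a.append(soc_score)
        elif importance <= 2:
            group_b.append(soc_score)
        # A neutral rating (3) matches neither branch and is dropped.
    return group_a, group_b


# Hypothetical responses for illustration only (not the study's data).
group_a, group_b = split_by_importance(
    [(5, 60.0), (4, 55.0), (3, 45.0), (2, 30.0), (1, 35.0)]
)
print(len(group_a), len(group_b))  # prints: 2 2
```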

There were 131 participants in Group A and 30 participants in Group B. Those in Group A had a higher SOC score (M = 54.99, SD = 6.73) than those in Group B (M = 35.40, SD = 10.34). The difference between the two groups was statistically significant (mean difference = 19.59, 95% CI [15.58, 23.61]), t(34.82) = 9.91, p < .001.
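The fractional degrees of freedom in t(34.82) are consistent with Welch’s unequal-variances t-test, which does not assume that the two groups share a common variance. Assuming that is the test used, the statistic can be reproduced from the summary values above with the Python sketch below (`welch_t` is a hypothetical helper, not a library function).

```python
import math
from typing import Tuple


def welch_t(m1: float, s1: float, n1: int,
            m2: float, s2: float, n2: int) -> Tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    v1 = s1 ** 2 / n1  # squared standard error of the first mean
    v2 = s2 ** 2 / n2  # squared standard error of the second mean
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Group A vs. Group B, using the rounded summary statistics reported above.
t, df = welch_t(54.99, 6.73, 131, 35.40, 10.34, 30)
print(f"t({df:.2f}) = {t:.2f}")
```

With these inputs the sketch gives t ≈ 9.91 on about 34.8 degrees of freedom, matching the reported result up to the rounding of the published means and standard deviations. A 95% confidence interval would additionally need a t quantile, for example `scipy.stats.t.ppf(0.975, df)`.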

By moving to a blended environment, we were able to increase flexibility in the course for both students and instructors without negatively affecting student ratings in the first two iterations. In the final iteration, however, student ratings showed a marginally significant decrease from Iteration 2 (M = 6.58) to Iteration 3 (M = 6.08). Overall, the average course rating across all iterations was “Good,” which suggests that the transition was successful. Additional research is required to determine whether the decrease was caused by our removal of the rigid feedback guidelines for instructors or by some other factor.

Because the blended format no longer required students to attend class each week, students gained more flexibility in when and where they completed their schoolwork. Also, because our lab days were opened to every section, students could choose which day of the week to attend lab for support. Instructors also gained flexibility. Instead of teaching to the middle (students with average technical abilities), instructors could devote more of their lab-day time to the students who came in for help, typically those struggling in the course. By sharing resources across sections, instructors could offer multiple technologies and projects that none of them could have supported individually, so they were no longer limited to the technologies they personally knew. Students benefited from this sharing of resources through a much larger selection of technologies to choose from for their projects.

SOC, however, was more volatile. Although students’ sense of connectedness to each other dropped significantly from the Baseline to our first iteration, there was no significant difference between the Baseline (M = 50.80) and our final iteration (M = 50.65). Students’ opinions of the importance of SOC also seemed to follow this trend, dropping in the first iteration and then gradually rising. These results leave our findings on the rigorous, personalized feedback that was the main intervention in Iteration 2 inconclusive: we cannot determine whether the feedback caused the rise in SOC in Iteration 2 or whether the increase was due to the maturation of our blended learning model.

Thus, our main conclusion from this design-based study is that SOC may decrease when a course moves to a blended environment; in this case, however, it rebounded as the skills and materials used to teach the course continued to evolve. We therefore conclude, and recommend, that blended learning can be a suitable option for project-based technology courses such as this one, offering a good compromise between the flexibility of online learning and the sense of connection and community that students need in order to have a satisfactory learning experience. However, instructors and institutions making this transition should be patient, as initial effects on students might be negative but could improve as the course and the instructors mature with this new pedagogy.

In order to identify the specific cause of the decrease in SOC from the Baseline to Iteration 1, further research should be done regarding the maturation of the model. Some of the adjustments to the course that occurred in each iteration over time included the following:

Research into these aspects could provide more insight into what specifically caused the drop and subsequent rise in SOC across the iterations.

Further research is also needed to determine how the importance participants place on SOC affects the SOC they feel in a course. Our findings suggest a possible connection between participants’ perception of SOC importance and the overall SOC they felt, as those who agreed that SOC was important gave higher SOC scores than those who disagreed. Research into whether this perception influences, or is influenced by, SOC in a classroom could provide additional insight into course design.

Age of participants is another factor that requires further research. Moore (1989) noted that interaction between students was more important for younger learners. Because the learners in our study were adults and more self-motivated, a study of SOC at different age levels would provide insight into which designs work best when transitioning younger students to a blended format.

Finally, as with all research into SOC, further research is required in different contexts. SOC may have been less important in our course because of its design, which emphasized individual projects requiring technical skills rather than collaborative and discursive ones. Other blended courses that require more interaction among peers and with the instructor may provide additional insights into context-specific blended design pedagogies.

Aragon, S. R. (2003). Creating social presence in online environments. New Directions for Adult and Continuing Education, 2003(100), 57–68. doi:10.1002/ace.119

Baker, C. (2010). The impact of instructor immediacy and presence for online student affective learning, cognition, and motivation. The Journal of Educators Online, 7(1), 1–30.

Barab, S., & Squire, K. (2004). Design-based research: Putting a stake in the ground. The Journal of the Learning Sciences, 13(1), 1–14.

Bonk, C. J., & Graham, C. R. (2006). Blended learning systems: Definition, current trends, and future directions. In C. J. Bonk & C. R. Graham (Eds.), Handbook of blended learning: Global perspectives, local designs. San Francisco, CA: Pfeiffer Publishing.

Bouhnik, D., & Marcus, T. (2006). Interaction in distance-learning courses. Journal of the American Society for Information Science and Technology, 57(3), 299–305. doi:10.1002/asi

Brown, A. L. (1992). Design experiments: Theoretical and methodological challenges in creating complex interventions in classroom settings. The Journal of the Learning Sciences, 2(2), 141–178.

Cobb, P., Confrey, J., diSessa, A., Lehrer, R., & Schauble, L. (2003). Design experiments in educational research. Educational Researcher, 32(1), 9–13. doi:10.3102/0013189X032001009

Edelson, D. C. (2002). Design research: What we learn when we engage in design. Journal of the Learning Sciences, 11(1), 105–121. doi:10.1207/S15327809JLS1101_4

Garrison, D. R. (2007). Online community of inquiry review: Social, cognitive, and teaching presence issues. Journal of Asynchronous Learning Networks, 11(1), 61–72.

Glynn, T. J. (1981). Psychological sense of community: Measurement and application. Human Relations, 34(9), 789–818. doi:10.1177/001872678103400904

Graham, C. R. (2012). Emerging practice and research in blended learning. In M. G. Moore (Ed.), Handbook of distance education (3rd ed., pp. 330–350).

Graham, C. R., Henrie, C. R., & Gibbons, A. S. (2013). Developing models and theory for blended learning research. In A. G. Picciano & C. D. Dziuban (Eds.), Blended learning: Research perspectives (pp. 13–33).

Graham, C. R., Woodfield, W., & Harrison, J. B. (2013). A framework for institutional adoption and implementation of blended learning in higher education. The Internet and Higher Education, 18, 4–14.

Hara, B. N., & Kling, R. (2001). Student distress in web-based distance education. EDUCAUSE Quarterly, 24(3), 68–69.

Hillman, D. C., Willis, D. J., & Gunawardena, C. N. (1994). Learner-interface interaction in distance education: An extension of contemporary models and strategies for practitioners. American Journal of Distance Education, 8(2), 30–42.

McMillan, D. W., & Chavis, D. M. (1986). Sense of community: A definition and theory. Journal of Community Psychology, 14(1), 6–23.

Moore, M. G. (1989). Three types of interaction. The American Journal of Distance Education, 3(2), 1–6.

Moore, M. G. (1991). Distance education theory. The American Journal of Distance Education, 5(3), 1–6.

Picciano, A. G. (2006). Blended learning: Implications for growth and access. Journal of Asynchronous Learning Networks, 10(3), 95–102.

Rovai, A. P. (2001). Building and sustaining community in asynchronous learning networks. The Internet and Higher Education, 3(4), 285–297.

Rovai, A. P. (2002a). Building sense of community at a distance. International Review of Research in Open and Distance Learning, 3(1), 1–8.

Rovai, A. P. (2002b). Development of an instrument to measure classroom community. The Internet and Higher Education, 5(3), 197–211. doi:10.1016/S1096-7516(02)00102-1

Rovai, A. P. (2002c). Sense of community, perceived cognitive learning, and persistence in asynchronous learning networks. The Internet and Higher Education, 5(4), 319–332.

Rovai, A. P., & Jordan, H. M. (2004). Blended learning and sense of community: A comparative analysis with traditional and fully online graduate courses. International Review of Research in Open and Distance Learning, 5(2), 1–13.

Sarason, S. B. (1974). The psychological sense of community: Prospects for a community psychology. San Francisco, CA: Jossey-Bass.

Shea, P., Swan, K., & Pickett, A. (2005). Developing learning community in online asynchronous college courses: The role of teaching presence. Journal of Asynchronous Learning Networks, 9(4), 59–82.

Stodel, E. J., Thompson, T. L., & MacDonald, C. J. (2006). Learners’ perspectives on what is missing from online learning: Interpretations through the community of inquiry framework. The International Review of Research in Open and Distance Learning, 7(3), 1–15.

The Design-Based Research Collective. (2003). Design-based research: An emerging paradigm for educational inquiry. Educational Researcher, 32(1), 5–8.

Wegerif, R. (1998). The social dimension of asynchronous learning networks. Journal of Asynchronous Learning Networks, 2(1), 34–49.

© Harrison and West