As the use of Web-based instruction increases in the educational and training domains, many people have recognized the importance of evaluating its effects on student outcomes such as learning, performance, and satisfaction. Often, these results are compared to those of conventional classroom instruction in order to determine which method is “better.” However, major differences in technology and presentation rather than instructional content can obscure the true relationship between Web-based instruction and these outcomes. Computer-based instruction (CBI), with more features similar to Web-based instruction, may be a more appropriate benchmark than conventional classroom instruction. Furthermore, there is little consensus as to what variables should be examined or what measures of learning are the most appropriate, making comparisons between studies difficult and inconclusive. In this article, we review the historical findings of CBI as an appropriate benchmark to Web-based instruction. In addition, we review 47 reports of evaluations of Web-based courses in higher education published between 1996 and 2002. A tabulation of the documented findings into eight characteristics is offered, along with our assessments of the experimental designs, effect sizes, and the degree to which the evaluations incorporated features unique to Web-based instruction.

Keywords: Web-based courses; benchmarks; CBI

We would like to caution readers against drawing inappropriate cause-and-effect conclusions from the results presented in this paper. A goal of this paper is to present data based on existing empirical literature. As demonstrated by our finding of only one study in which random assignment of individuals to conditions occurred, there is a tremendous need for experimental studies of Web-based instruction if we are to draw any definitive conclusions about the effectiveness of Web-based instruction in comparison to other methods. The conclusions that one can draw from the results of a meta-analysis are highly dependent on the research designs of the individual studies examined. For example, if all the studies included are correlational, as were many of the studies reviewed in this paper, associational rather than causal conclusions are more appropriate. In this paper, the terms “effect,” “effect sizes,” and “effectiveness” are used in accordance with the accepted technical language of meta-analysis and are not meant to necessarily imply the existence of causal relationships.

The World Wide Web can be used to provide instruction and instructional support. Web-based instruction offers learners unparalleled access to instructional resources, far surpassing the reach of the traditional classroom. It also makes possible learning experiences that are open, flexible, and distributed, providing opportunities for engaging, interactive, and efficient instruction (Kahn, 2001). Phrases such as “flexible navigation,” “richer context,” “learner centered,” and “social context of learning” are used in the literature to describe Web-based instruction. Furthermore, cognitive-based theories of learning have extended the design and delivery of Web-based instruction, applying the technical nomenclature to instructional practices (Bonk and Dennen, 1999). Indeed, Dills and Romiszowski (1997) have identified more than 40 instructional paradigms seeking to advance and improve the online learning experience beyond the traditional classroom.

Some researchers have argued, however, that the tried-and-true principles of instructional design, namely interaction and timely feedback, are often absent from Web-based instruction, particularly from individual Websites devised to teach (Eli-Tigi and Branch, 1997). The absence of a sturdy pedagogical underpinning for a Web-based “instructional” program can diminish an otherwise worthy opportunity to improve learning. Well-designed computer-based instruction developed in the 1970s and 1980s, for example, has been demonstrated to enhance learning outcomes when compared to classroom instruction (Kulik and Kulik, 1991). A central question, then, is just how effective is online instruction? In particular, how does it compare to both the conventional classroom and established forms of stand-alone computer-based instruction (CBI)?

For the purposes of this review, online instruction is considered to be any educational or training program distributed over the Internet or an intranet and conveyed through a browser, such as Internet Explorer™ or Netscape Navigator. Hereafter, it is referred to as Web-based instruction. The use of browsers and the Internet is a relatively new combination in instructional technology. While the effectiveness of traditional CBI has been reviewed thoroughly (Kulik, 1994; Lou, Abrami, and d’Apollonia, 2001), the effectiveness of online instruction has received little analysis. Part of the reason may be that so few cases have been detailed in the literature. This report serves, then, as an initial examination of the empirical evidence for its instructional effectiveness.

Many educational institutions and organizations are seeking to take advantage of the benefits offered by distributed learning, such as increased accessibility and improvements in learning. Learning advantages have consistently been found whenever well-designed instruction is delivered through a computer. Fletcher (2001), for example, has established the “Rule of Thirds” based on an extensive review of the empirical findings in educational and training technology. This rule advises that the use of CBI reduces the cost of instruction by about one-third, and additionally, either reduces the time of instruction by about one-third or increases the effectiveness of instruction by one-third. The analyses for this rule were based primarily on stand-alone CBI, not the contemporary use of online technologies.

Unlike the fixed resources in conventional CBI, Web-based instruction can be conveniently modified and redistributed, readily accessed, and quickly linked to related sources of knowledge, thus establishing a backbone for “anytime, anywhere” learning (Fletcher and Dodds, 2001). Compare these features to, say, a pre-Internet CD-ROM in which instructional messages were encoded in final form, availability was limited to specific computers, and immediate access to a vast array of related materials was not possible. However, many key instructional features, such as learner control and feedback, are shared between Web-based and conventional CBI. A reasonable assumption concerning the effectiveness of Web-based instruction, then, is that it should be at least “as good as” conventional forms of CBI.

Qualities shared by the two delivery media include multimedia formats, self-pacing, tailored feedback, and course management functions. Additionally, the unique features of Web-based instruction (flexible courseware modification, broad accessibility, and online links to related materials, instructors, and fellow students) should make possible improvements in learning outcomes beyond CBI. Learning outcomes from conventional CBI, when compared to conventional classroom instruction, have demonstrated effects significantly above the “no-significant-difference” threshold (Fletcher, 1990; Kulik, 1994). Furthermore, Web-based instruction shares elements of good classroom teaching that are not necessarily available in conventional CBI. Chickering and Ehrmann (1996) outlined seven ways in which technology can leverage practices from the traditional classroom. For example, good practice encourages student contact with faculty, and Web-based environments offer ways to strengthen interactions between faculty and students through email, resource sharing, and collaboration.

The measurement of effect size is simply a way of quantifying the difference between two groups. For example, if one group has had an “experimental” treatment (Web-based instruction) and the other has not (the conventional classroom), then the effect size is an indicator of the effectiveness of the Web-based treatment compared to that of the classroom. In statistical terms, effect size describes the difference between two group means divided by either the pooled standard deviation or the standard deviation of the treatment group (Glass, McGaw, and Smith, 1991). An advantage of using effect size is that numerous studies can be combined to determine an overall best estimate, or central tendency, of the effect. Generally, values of 0.2, 0.5, and 0.8 are considered to correspond to small, medium, and large effect sizes, respectively (Cohen, 1988).
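The calculation described above can be sketched in a few lines of Python. This is only an illustration, not the procedure used in any of the studies reviewed: the function names, the `denominator` option, and the two score lists are hypothetical, and the labels simply apply the conventional 0.2, 0.5, and 0.8 benchmarks cited above.

```python
from math import sqrt
from statistics import mean, stdev


def pooled_sd(treatment, comparison):
    """Pooled standard deviation of two independent groups."""
    n1, n2 = len(treatment), len(comparison)
    v1, v2 = stdev(treatment) ** 2, stdev(comparison) ** 2
    return sqrt(((n1 - 1) * v1 + (n2 - 1) * v2) / (n1 + n2 - 2))


def effect_size(treatment, comparison, denominator="pooled"):
    """Difference between group means in standard deviation units.

    denominator: "pooled", "treatment", or "comparison" selects which
    standard deviation the mean difference is divided by.
    """
    diff = mean(treatment) - mean(comparison)
    if denominator == "pooled":
        sd = pooled_sd(treatment, comparison)
    elif denominator == "treatment":
        sd = stdev(treatment)
    elif denominator == "comparison":
        sd = stdev(comparison)
    else:
        raise ValueError("denominator must be pooled, treatment, or comparison")
    return diff / sd


def cohen_label(d):
    """Label an effect size using the conventional 0.2 / 0.5 / 0.8 benchmarks."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "below small"


# Hypothetical exam scores, invented purely for illustration.
web_scores = [78, 85, 90, 72, 88]
classroom_scores = [75, 80, 85, 70, 82]
d = effect_size(web_scores, classroom_scores)
print(round(d, 2), cohen_label(d))
```

A positive value favors the first group passed to `effect_size`, and a negative value favors the second.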

There is no principled reason to expect Web-based instruction to be any less effective than traditional CBI. Both are capable of interactivity, individual feedback, and multimedia presentation. However, technical limitations with current Web-based configurations may dilute some of these advantages. Inherent limitations such as a small viewing area for video, video with a slow frame speed, or delays in responsiveness as a result of high traffic load on the Internet may restrict its current effectiveness. On the other hand, Web-based instruction offers new advantages to the learner, such as interactivity with instructors and students and quick access to supplementary online resources. As the technology improves, Web-based instruction may have an ultimate advantage.

Web-based instruction offers multiple dimensions of use in education and training environments. As with CBI, it is capable of providing direct instruction to meet individual learning objectives. Due to its networking capability, the Web can play additional roles. These include promoting and facilitating enrollment into courses, making the syllabus or program of instruction available, posting and submitting assignments, interacting with instructors and fellow students, collaborating on assignments, and building learning communities.

The Web has become a powerful tool for learning and teaching at a distance. Its inherent flexibility allows application in a variety of ways within an educational context, ranging from simple course administration and student management to teaching entire courses online. Each of these types of use works towards a different goal. These goals should be recognized when evaluating the use of the Web. For example, an instructor may hold face-to-face lectures in a classroom but post the class syllabus, assignments, and grades on the Web. In this case, it may not be appropriate to evaluate the use of the Web with respect to learning outcomes since the Web was not used in a direct instructional role.

There are a host of factors that contribute to a meaningful learning environment. In an attempt to gain a systematic understanding of these factors, Kahn (1997) developed a framework for Web-based learning, consisting of eight dimensions: 1) pedagogical; 2) technological; 3) interface design; 4) evaluation; 5) management; 6) resource support; 7) ethical; and 8) institutional. Kahn (2001) later offered a framework for placing Web-based instruction along a continuum ranging from “micro” to “macro” uses. The “micro” end of the continuum involves the use of the Web as a way to supplement or enhance conventional classroom instruction (e.g., providing students in a biology course with an interactive map of the brain to help them learn brain functions). Further along the continuum are courses that are partially delivered over the Web, such as elective modules that supplement classroom instruction. Clearly, factors beyond pedagogy such as technical reliability, interface design, and evaluation become increasingly important at this level. Finally, at the “macro” end of the continuum are complete distance learning programs and virtual universities.

Other researchers have also recognized the importance of determining the level of Web-use in a course. For example, Galloway (1998) identified three levels of Web-use. In Level 1, the Web is used to post course material with little or no online instruction. The instructor guides students to the relevant information rather than obliging the students to search for information. In Level 2, the Web is used as the medium of instruction. Course assignments, group projects, and lecture notes are posted on the Web. The teacher becomes the facilitator of knowledge, guiding the student rather than telling them what to do. In addition, there is increased student-student interaction. Courses that are offered completely online fall into Level 3. Teachers and students interact only over the Internet, and knowing how to use the technology is extremely important at this level.

Web-based instruction is still in an early stage of implementation. Nevertheless, educational institutions, private industry, the government, and the military anticipate immense growth in its use. Obstacles to realizing the Web’s full potential for learning clearly remain. These include the appropriateness of pedagogical practices (Fisher, 2000) and the bandwidth bottleneck for certain learner requests (e.g., video on demand) (Saba, 2000). From an evaluation perspective, there has been an inclination to compare Web-based instruction with conventional classroom instruction (Wisher and Champagne, 2000). However, the historical findings on the effectiveness of conventional CBI may be a more appropriate basis for comparison. An assessment of current practices thus may consider whether the capabilities of the Web are being tapped, how interpretable the findings are, and how those findings compare with conventional CBI.

Our assessment is organized as follows: A review of the historical findings of CBI as a benchmark for Web-based instruction is presented, followed by a summary of our literature review. Our review encompassed 40 articles selected from a larger set of more than 500 articles published on the topic of Web-based instruction between 1996 and 2002. A tabulation of the documented findings into eight characteristics is offered in Appendix A, along with our assessment of the experimental designs, effect sizes, and the degree to which each evaluation incorporated features unique to Web-based instruction. The selection of characteristics was based on what are generally considered indicators of overall quality.

CBI has been a significant part of educational technology, beginning with the first reported use of the computer for instructional purposes in 1957 (Saettler, 1990). Its emergence as a true multimedia delivery device occurred in the early 1980s with the coupling of videodisc players with computers. In recent years, the videodisc has been replaced by the CD-ROM. The combination of a computer controlling high quality video and/or audio segments was a compelling advancement in CBI, and the instructional effectiveness of this pairing has been well documented.

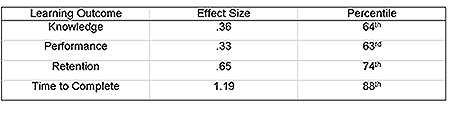

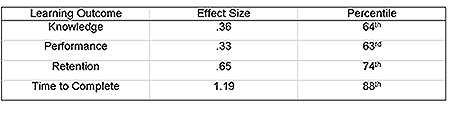

Fletcher (1990) conducted a quantitative analysis of the education and training effectiveness of interactive videodisc instruction. Specifically, empirical studies comparing interactive videodisc instruction to conventional instruction were segmented into three groups: higher education, industrial training, and military training. The various learning outcomes investigated include: 1) knowledge outcomes in terms of a student’s knowledge of facts or concepts presented in the instructional program; 2) performance outcomes which assessed a student’s skill in performing a task or procedure; 3) retention in terms of the durability of learning after an interval of no instruction; and 4) the time to complete the instruction. The effect sizes, or the difference between the mean scores of the treatment and comparison groups divided by the standard deviation of the control group, were computed for each of the 28 studies identified.

The results of the Fletcher (1990) meta-analysis are presented in Table 1, broken down by learning outcome, and in Table 2, broken down by instructional group.

Table 1. Average effect sizes for four types of knowledge outcomes for CBI

Table 2. Average effect sizes for three instructional groups using CBI

Fletcher (1990) concluded, on the basis of his analysis, that interactive video instruction was both more effective and less costly than conventional instruction.

In a later analysis of the effectiveness of CBI, Kulik (1994) took into account the conceptual and procedural differences in how the computer was used in the individual studies. In his analysis of 97 studies that compared classes that used CBI to classes that did not, Kulik (1994) computed the overall effect size as well as the effect sizes corresponding to five categories of computer use relevant to the present report: 1) tutoring; 2) managing; 3) simulation; 4) enrichment; and 5) programming.

Kulik determined the overall effect size to be .32, indicating that the average student receiving CBI performed better than the average student in a conventional class, moving from the 50th percentile to the 61st percentile. However, when categorized by computer use, the effect sizes yielded somewhat discrepant results. Only the effect size for tutoring, at .38, was noteworthy, falling between a small and a medium effect by Cohen’s (1988) benchmarks. All other effect sizes were .14 or lower.
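The translation from an effect size to a percentile shift can be sketched as follows. The sketch assumes that scores in both groups are normally distributed with equal variance, which is an assumption of this illustration rather than something stated in the analyses reviewed here; published percentile figures may differ by a point or two depending on rounding and on the method used. The function name is hypothetical.

```python
from statistics import NormalDist


def percentile_of_average_treated_student(effect_size):
    """Percentile, within the comparison group, of the average treated student.

    Assumes normally distributed scores with equal variance in both groups.
    """
    return 100 * NormalDist().cdf(effect_size)


# An effect size of zero leaves the average student at the 50th percentile;
# positive effect sizes move the average student above it.
for d in (0.0, 0.2, 0.5, 0.8):
    print(d, round(percentile_of_average_treated_student(d)))
```

Under this assumption, an effect size in the neighborhood of .3 places the average treated student in the low 60s in percentile terms.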

The effect size for computer-based programs used for tutoring (.38) is significantly higher than the rest, indicating that students who use computers for these purposes may achieve better outcomes than students who use CBI for management, simulation, enrichment, or programming purposes. In addition, it is clear from the table that basic programming and simulations had minimal effect on student performance. The conclusion of the Kulik (1994) analysis was that researchers must take into account all types of CBI when trying to assess their effects on student learning.

Finally, Liao (1999) conducted a meta-analysis of 46 studies that compared the effects on learning of hypermedia instruction (e.g., networks of related text, graphics, audio, and video) to different types of non-hypermedia instruction (e.g., CBI, text, conventional, videotape). Results indicated that, overall, the use of hypermedia in instruction results in more positive effects on student learning than non-hypermedia instruction, with an average effect size equal to 0.41. However, the effect sizes varied greatly across studies and were influenced by a number of characteristics. Effect sizes were larger for those studies that used a one-group repeated measure design and simulation. In addition, effect sizes were larger for studies that compared hypermedia instruction to videotaped instruction than for studies that compared hypermedia instruction to CBI.

While each of the studies reviewed above provides evidence of the positive effects of CBI on student learning, they also point to the complexity of the issue. Evidence of the relationship between CBI and learning is influenced by many variables including the type of media used, what CBI is being compared to, and the type of research design employed, to name just a few. These issues increase in complexity when applied to Web-based instruction, which tends to be less linear and more interactive and dynamic.

The following review is summative in nature and is limited to studies that evaluated the use of the World Wide Web (WWW) at undergraduate and graduate levels of education. The studies included in the review were examined with reference to four key features: 1) degree of interaction in the course; 2) measurement of learning outcomes; 3) experimental design used in evaluating the course; and 4) extent of Web use throughout the course. Since the purpose of this review is to assess current practices in the use and evaluation of Web-based courses, the criteria that guided the choice of studies for this review were broadly defined. The studies had to involve the use of the Web as an instructional tool either as a supplement to conventional classroom instruction or as the primary medium of instruction. In addition, studies had to include an evaluation of the Web-based components of the course.

The evaluations included in these studies fell into two broad categories. The first category of evaluation consisted of comparisons of Web-based instructional approaches to conventional classroom instruction. These evaluations could involve comparison of a control group with an experimental group derived from the same population of students or a simple comparison between a class taught at one time without the use of the Web and the same class taught at a different time using the Web. The second category of evaluations involved assessment of student performance and reactions relative to a single course.

The Educational Resources Information Center (ERIC) and Psychological Abstracts databases were searched using the following combinations of key words: “Web-based courses,” “Web-based instruction,” “Web-based courses and evaluation,” “course evaluation and Web,” “course evaluation and Internet,” “Web and distance education,” and “online course and evaluation.” Because we were trying to assess current practices in evaluating Web-based instruction, the search was restricted to the years 1996 to 2002. This search identified more than 500 qualifying studies conducted between August 2000 and July 2002. However, most of these studies concerned recommendations for the design of online courses or technology concerns rather than an evaluation of a specific course. Such studies were not included in the review. In addition, we found several relevant studies from the Journal of Asynchronous Learning, Education at a Distance, and the previous four years of the Proceedings of the Distance Teaching and Learning Conference. Researchers in the field of distance education referred us to additional useful references and citations that had not previously been identified by our search of the databases.

Appendix A summarizes the findings reported in the 47 studies derived from the original 40 articles, some of which dealt with more than one study. Although most of the studies involved courses in the physical sciences, the instructional content of the courses concerned a variety of subject matters, including philosophy, nutrition, economics, and sports science.

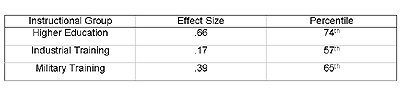

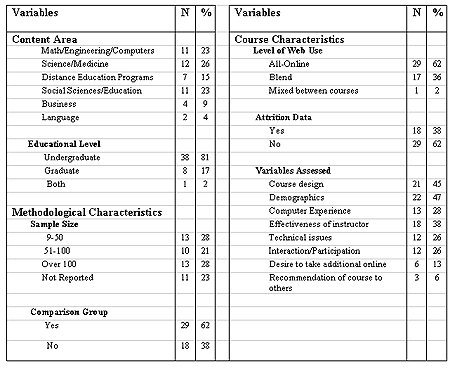

Our review of the literature on Web-based instruction is organized according to three categories: 1) study characteristics; 2) methodological characteristics; and 3) course characteristics. The analysis of these characteristics provides some insight into what questions people are asking about Web-based instruction and how well they are being answered. Descriptive statistics for these characteristics are presented in Table 3.

Table 3. Descriptive statistics for studies included in review

Content areas represented in the studies were wide-ranging. Approximately 23 percent examined the effects of Web-based instruction for teaching math, engineering, and computer courses, whereas 26 percent focused on the teaching of science and medical courses. Another 23 percent focused on the social sciences and education. In addition, about 15 percent of the studies evaluated entire programs of distance learning, which most likely comprised many types of courses. The wide variety of content areas discovered in this review demonstrates that Web-based instruction can be adapted to the requirements of students and teachers in different subject areas.

Both undergraduate and graduate students were represented in this review. Of the 47 studies, 81 percent evaluated Web-based instruction for undergraduate students, 17 percent evaluated graduate instruction, and two percent evaluated Web-based instruction for both graduate and undergraduate students. Given the differences in course content and teaching styles between undergraduate and graduate classes, it would have been interesting to assess the differential impact of Web-based instruction on student learning between the two. However, insufficient information was provided by the studies to be able to draw any conclusions on this issue. For example, many studies failed to provide means or standard deviations for learning outcome measures. In addition, many studies involving graduate students did not identify the methods used to assess student learning and performance, the course content, or the level of Web use.

The sample size of a study can significantly affect the statistical power of the underlying tests for differences. Of the 47 studies, 36 reported information about the sample sizes of participants. These ranged from 9 to 1,406. Of these 36 studies that provided sample sizes, most (64 percent) had sample sizes of fewer than 100 participants. Effect sizes were available for 10 of these studies, with a mean effect size of approximately 0.09. For studies in which the sample size exceeded 100, the mean effect size increased to 0.55. In general, the larger the sample size, the stronger the statistical power.
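The link between sample size and statistical power can be illustrated with a rough calculation. The sketch below uses a normal approximation for a two-sided comparison of two equally sized groups; the function name, the equal-group assumption, and the default alpha of .05 are choices made for this illustration and are not taken from the studies reviewed.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-group comparison of means.

    Normal approximation with equal group sizes; an exact calculation
    based on the t distribution gives slightly lower values.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    # Expected value of the test statistic under the assumed effect size.
    shift = abs(effect_size) * sqrt(n_per_group / 2)
    # Probability of landing in either rejection region.
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


# Power to detect a medium effect (0.5) at several group sizes.
for n in (10, 25, 50, 100, 200):
    print(n, round(approx_power(0.5, n), 2))
```

With a fixed effect size, the printed power rises steadily with the number of participants per group, which is the pattern the paragraph above describes.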

The majority of the studies identified for this review used a comparison group in which students took the same course face-to-face or the same course with no Web-based components. However, 41 percent of the studies simply evaluated the Web-based course without any comparison group. A similar pattern was found in evaluations of distance learning technology in training environments in which 55 percent of evaluations did not use a comparison group (Wisher and Champagne, 2000). While a comparison group is not a requirement for course evaluation, the absence of one can threaten the internal validity of the study and restrict data interpretation. Without an equivalent comparison group, it is difficult to draw conclusions about the impact of instruction using the Web on student learning, satisfaction, and other outcomes. Of the 29 studies that had a comparison group, only one (Schutte, 1996) randomly assigned students to conditions. Thus, most of the studies with comparison groups were subject to many possible confounding variables that could obscure the relationship between Web-based instruction and learning outcomes.

As demonstrated by the results of both Kulik (1994) and Liao (1999), different forms of CBI can differentially affect student outcomes. Thus, it is important to take into account how a particular medium of instruction is applied when evaluating a course. In view of the medium’s tremendous ability to distribute seemingly unlimited resources and information to anyone at any time, this is especially true of Web-based instruction. The flexibility of the Web enables it to be used for a variety of purposes, from course administration and management to complete course delivery; each of these types of use works towards a different goal.

In all of the studies, the Web was used for more than purely management purposes. Of the 47 studies, 17 evaluated “blend courses,” or courses that were a mix of both face-to-face instruction and Web-based components (e.g., posting of course syllabus and lecture notes, online tutorials and graphics, etc.). While in these “blend courses” the Web was used to fulfill many management functions, students were also required to access the Web regularly in order to be productive members of the class. This access led to different types of Web-use. The remainder of the studies involved courses that were completely online with little or no face-to-face interaction. Of the 30 studies of courses that were offered completely online, 10 evaluated student and teacher interactions, availability of instructor feedback, and technological issues.

In terms of learning outcomes, effect sizes were available for six of the “blend” courses and nine of the all-online courses. The mean effect size for the “blend” courses was 0.48, while the mean effect size for the all-online courses was 0.08. While this is not a statistically significant difference (Mann-Whitney U Test, p = .14), it does suggest that Web-based instruction may be more beneficial for student learning when used in conjunction with conventional classroom instruction. The direction of the difference is the same as in Liao’s (1999) analysis, which showed that the mean effect size for courses that used hypermedia as a supplement to conventional instruction was 0.18 standard units higher than for courses that replaced conventional instruction with hypermedia.
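For readers unfamiliar with the Mann-Whitney U test, the following sketch shows how such a comparison of two small sets of effect sizes might be computed. It is not the authors' calculation: the function names are hypothetical, the two lists are placeholder values invented for illustration rather than the effect sizes from Appendix A, and the p-value uses a plain normal approximation with no tie or continuity correction, so exact tables or a statistics package would be preferable for samples this small.

```python
from math import sqrt
from statistics import NormalDist


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    ranks = []
    for v in values:
        below = sum(1 for other in values if other < v)
        tied = sum(1 for other in values if other == v)
        ranks.append(below + (tied + 1) / 2)
    return ranks


def mann_whitney_u(group_a, group_b):
    """Return (U, approximate two-sided p-value) for two independent groups."""
    n_a, n_b = len(group_a), len(group_b)
    ranks = average_ranks(list(group_a) + list(group_b))
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a
    u = min(u_a, u_b)
    # Normal approximation to the distribution of U under the null hypothesis.
    mean_u = n_a * n_b / 2
    sd_u = sqrt(n_a * n_b * (n_a + n_b + 1) / 12)
    z = (u - mean_u) / sd_u  # never positive, because u <= mean_u
    p_value = min(1.0, 2 * NormalDist().cdf(z))
    return u, p_value


# Placeholder effect sizes, invented for illustration only.
blend = [0.9, 0.1, 0.6, -0.2, 1.1, 0.4]
all_online = [0.3, -0.4, 0.0, 0.2, -0.1, 0.5, -0.3, 0.05, 0.35]
u, p = mann_whitney_u(blend, all_online)
print(u, round(p, 3))
```

Because the test works on ranks rather than raw values, it does not require the effect sizes to be normally distributed.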

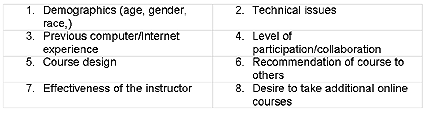

Many of the evaluation studies of Web-based instruction, and distance education as a whole, lack a guiding theoretical framework (Saba, 2000; Berge and Mrozowski, 2001). Furthermore, there is no consensus as to what variables are important to examine when evaluating Web-based courses. As online courses incorporate unique elements such as flexibility, a wide range of corresponding resources and tools, and technological considerations among others, determining the evaluation elements becomes more complicated for online courses than for face-to-face instruction. As a result, researchers have assessed a wide range of variables. For studies included in this analysis, variables assessed can be grouped into eight major categories:

Table 4. Variables grouped into eight major categories

Although many of the studies assessed the design of the Web-based course and the demographic characteristics of the participants, few evaluated the quality of interaction or collaboration in the course, the effectiveness of the instructor, or the technology itself.

It has been widely recognized that the attrition of students is a greater problem for online courses than classroom courses (Phipps and Merisotis, 1999; Terry, 2001). In addition, some research has shown that blended courses should be considered separately from completely online courses when assessing student attrition as blended courses have lower attrition rates (Bonk, 2001). However, only 14 (34 percent) of the studies reported information about attrition.

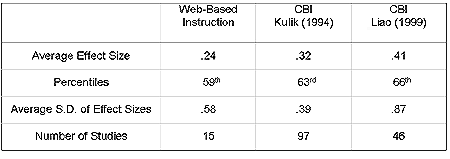

When compared to conventional classroom instruction, the learning outcomes from conventional CBI have demonstrated effect sizes significantly above the “no significant difference” threshold (Fletcher, 1990; Kulik, 1994). The original premise of this article stated that if Web-based instruction were employed effectively, and used to achieve specific learning objectives, it would lead to effect sizes that are at least comparable to CBI. Of the 15 studies in this analysis that provided sufficient information to calculate effect sizes, eight (53 percent) of the effect sizes were positive and favored the group that used Web-based instruction, while seven (47 percent) were negative and favored the group that did not use Web-based instruction. The effect sizes ranged from -.40 to 1.60. The grand mean for all 15 effect sizes was 0.24, and the grand median was 0.095. The standard deviation of 0.58 indicates wide variability of effect sizes across studies.

Because these data did not meet the assumptions of normality and homoscedasticity, non-parametric tests were performed on the mean effect sizes of the current analysis as well as the analyses conducted by Kulik (1994) and Liao (1999) to compare Web-based instruction to CBI. Results of a Kruskal-Wallis Test indicated that there was no significant difference (p = .47) in mean effect size across the three sets of analyses. The average effect sizes and their corresponding standard deviations for the studies included in this article and the previous analyses conducted by Kulik and Liao are listed in Table 5.
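A Kruskal-Wallis comparison of three sets of effect sizes can be sketched as follows. This is an illustration only and does not reproduce the analysis reported here: the function names are hypothetical, the three lists are placeholder values, no tie correction is applied, and the p-value relies on the chi-square approximation, which for three groups (two degrees of freedom) reduces to exp(-H/2).

```python
from math import exp


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    ranks = []
    for v in values:
        below = sum(1 for other in values if other < v)
        tied = sum(1 for other in values if other == v)
        ranks.append(below + (tied + 1) / 2)
    return ranks


def kruskal_wallis_three_groups(g1, g2, g3):
    """Return (H, approximate p-value) for three independent groups.

    No tie correction. The p-value is the upper tail of a chi-square
    distribution with 2 degrees of freedom, which equals exp(-H / 2).
    """
    groups = [list(g1), list(g2), list(g3)]
    pooled = [x for g in groups for x in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    # Sum the squared rank totals of each group, scaled by group size.
    total = 0.0
    start = 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    return h, exp(-h / 2)


# Placeholder effect sizes for three sets of analyses, invented for illustration.
set_a = [0.1, 0.6, -0.2, 0.9, 0.3]
set_b = [0.4, 0.2, 0.5, 0.35]
set_c = [0.7, 0.15, 0.45, 0.25, 0.55]
h, p = kruskal_wallis_three_groups(set_a, set_b, set_c)
print(round(h, 2), round(p, 2))
```

A large p-value from such a test indicates only that no difference was detected among the groups, not that the groups are equivalent.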

Table 5. Comparison of effect sizes across analyses

These results would seem to suggest that Web-based instruction is “as good” as CBI but, as will be discussed in the following section, this may be a premature judgment.

On the basis of a limited number of empirical studies, Web-based instruction appears to be an improvement over conventional classroom instruction. However, it is debatable whether Web-based instruction compares favorably to CBI. On the surface, the overall effect size is smaller, but the difference is not statistically significant. This, of course, cannot be interpreted to say that they are equivalent, but rather that there is no detectable difference. This is partly due to inconsistent and widespread variability in the findings. As the number of studies that report comparative data increases, leading possibly to a more stable central tendency in the effect size, a more reliable assessment of how well Web-based instruction compares to CBI may be possible.

There are numerous reasons why the effectiveness of Web-based instruction may not yet be fully realized. For example, many of the early adopters were faculty from a diversity of fields who were not necessarily trained in instructional design. Their comparisons were, in some cases, a first attempt at Web-based instruction compared to a highly practiced classroom rendition of the course. Another restriction may have stemmed from Internet response delays, which are not uncommon during peak usage periods, in contrast to the immediate responses possible with a stand-alone computer. With packet-based networks, variable delays cause latency problems in the receipt of learning material, particularly with graphic images. Previous research has demonstrated a slight decrement in learning due to inherent delays of transmitting complex graphics over the Internet (Wisher and Curnow, 1999).

One objective of this article was to discuss the various roles that the Web plays in educational courses and the importance of taking them into account when evaluating courses. Here, we have limited our analysis to those applications involving direct instruction through the Web. As described earlier, the Web offers many other advantages (e.g., access, flexibility, enrollment, and management) that must be factored in when determining its overall value to a learning enterprise.

How large a learning effect, in terms of an effect size, can we expect from the Web? One possibility comes from research on intelligent tutoring systems. These are knowledge-based tutors that generate customized problems, hints, and aids for the individual learner, as opposed to ad hoc, frame-oriented instruction. When compared to classroom instruction, evaluations indicate an effect size of 1.0 and higher (Woolf and Regian, 2000). If these individual learning systems are further complemented by collaborative learning tools and online mentoring from regular instructors, effect sizes on the order of two standard deviation units, as suggested by Bloom (1984), may someday be possible. The use of the Web for instruction is at an early stage of development. Until now, there has been a lack of tools for instructional developers to use, but this shortcoming is beginning to change. The potential of Web-based instruction will increase as pedagogical practices improve, standards for structured learning content advance, and bandwidth increases.
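One way to read these effect sizes is as percentile shifts. Assuming normally distributed scores with equal variance in both groups (an assumption of this sketch, not a claim from the studies reviewed), an effect size d places the average learner in the treated group at the percentile given by the standard normal cumulative distribution evaluated at d. The helper name below is hypothetical.

```python
from statistics import NormalDist


def percentile_of_average_learner(effect_size):
    """Percentile of the comparison group reached by the average treated learner.

    Assumes normal score distributions with equal variance in both groups.
    """
    return 100.0 * NormalDist().cdf(effect_size)


if __name__ == "__main__":
    examples = [
        ("Grand mean in this analysis", 0.24),
        ("Intelligent tutoring systems", 1.0),
        ("Bloom's two-sigma target", 2.0),
    ]
    for label, d in examples:
        pct = percentile_of_average_learner(d)
        print(f"{label}: d = {d:.2f}, percentile = {pct:.1f}")
```

Under these assumptions, an effect size of 1.0 corresponds to roughly the 84th percentile of the comparison group and 2.0 to roughly the 98th, which is the sense of the two standard deviation target attributed to Bloom (1984).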

Appendix A. Characteristics of Studies on Web-Based Instructional Effectiveness.

Angulo, A. J., and Bruce, M. (1999). Student perceptions of supplemental Web-based instruction. Innovative Higher Education, 24, 105 – 125.

Arvan, L., Ory, J. C., Bullock, C. D., Burnaska, K. K., and Hanson, M. (1998). The SCALE Efficiency Projects. Journal of Asynchronous Learning Networks, 2, 33 – 60.

Bee, R. H., and Usip, E. E. (1998). Differing attitudes of economics students about web-based instruction. College Student Journal, 32, 258 – 269.

Berge, Z. L., and Mrozowski, S. (2001). Review of research in distance education, 1990 to 1999. The American Journal of Distance Education, 15, 5 – 19.

Bloom, B. S. (1984). The 2-Sigma problem: The search for methods of group instruction as effective as one-on-one tutoring. Educational Researcher, 13, 4 – 16.

Bonk, C. J., and Dennen, V. P. (1999). Teaching on the Web: With a little help from my pedagogical friends. Journal of Computing in Higher Education, 11, 3 – 28.

Bonk, C. J. (2001). Online teaching in an online world. Bloomington, IN: Courseshare.com. Retrieved July 30, 2002 from: http://www.courseshare.com/reports.php

Chickering, A.W., and Ehrmann, S.C. (1996). Implementing the seven principles: Technology as Lever. American Association for Higher Education Bulletin, 3 – 6.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd Ed.). Hillsdale, NJ.: Lawrence Erlbaum Associates.

Cooper, L. W. (2001). A comparison of online and traditional computer applications classes. T.H.E. Journal, 28, 52 – 58.

Davies, R. S., and Mendenhall, R. (1998). Evaluation comparison of online and classroom instruction for HEPE 129 – Fitness and lifestyle management course. Report to Brigham Young University.

Dills, C., and Romiszowski, A. J. (1997). Instructional development paradigms. Englewood Cliffs, NJ.: Educational Technology Publications.

Eli-Tigi, M., and Branch, R. M. (1997). Designing for interaction, learner control, and feedback during Web-based learning. Educational Technology, 37, 23 – 29.

Fisher, S. G. (2000). Web-based Training: One size does not fit all. In K. Mantyla (Ed.), The 2000/2001 Distance learning yearbook. New York: McGraw-Hill.

Fletcher, J. D. (2001). Technology, the Columbus effect, and the third revolution in learning. Institute for Defense Analyses. Paper D-2562.

Fletcher, J. D. (1990). Effectiveness and cost of interactive videodisc instruction in defense training and education. Institute for Defense Analyses Paper P-2372.

Fletcher, J. D., and Dodds, P. (2001). All about ADL. In K. Mantyla and J. A. Woods (Eds.) The 2001/2002 ASTD distance learning yearbook. (p. 229-235). New York: McGraw Hill.

Fredericksen, E., Pickett, A., Shea, P., Pelz, W., and Swan, K. (2000). Student satisfaction and perceived learning with on-line courses: Principles and examples from the SUNY Learning Network. Journal of Asynchronous Learning Networks, 4, 1 – 28. (Includes separate evaluations of two courses)

Gagne, M., and Shepherd, M. (2001). Distance learning in accounting: A comparison between a distance and traditional graduate accounting class. T.H.E. Journal, 28, 58 – 65.

Galloway, G. M. (1998). A model of Internet usage for course delivery. Paper presented at the 9th Annual International Conference of the Society for Information Technology and Teacher Education (SITE): Washington DC.

Glass, G. V., McGaw, B., and Smith, M. L. (1981). Meta-analysis in social research. Beverly Hills, CA.: Sage Publications.

Green, R., and Gentemann, K. (2001). Technology in the Curriculum: An assessment of the impact of on-line courses. Retrieved July 30, 2002 from: http://assessment.gmu.edu/reports/Eng302

Johnson, S. M. (2001). Teaching introductory international relations in an entirely web-based environment. Education at a Distance, 15, 5 – 14.

Johnson, S. D., Aragon, S. R., Shaik, N., and Palma-Rivas, N. (2000). Comparative analysis of online vs. face-to-face instruction. ERIC Document No. ED 448722.

Jones, E. R. (February, 1999). A comparison of an all Web-based class to a traditional class. Paper presented at the 10th Annual International Conference of the Society for Information Technology and Teacher Education, San Antonio, TX.

Kahn, B. H. (1997). A framework for Web-based learning. Paper presented at the Instructional Technology Department, Utah State University, Logan, Utah.

Kahn, B. H. (2001). Web-based training: An introduction. In B.H. Kahn (Ed.) Web-based Training. Englewood Cliffs, NJ.: Educational Technology Publications.

Kulik, C. L., and Kulik, J. A. (1991). Effectiveness of Computer-based Instruction: An updated analysis. Computers in Human Behavior, 7, 75 – 94.

Kulik, J. A. (1994). Meta-analytic studies of findings on computer-based instruction. In E. L. Baker, and H. F. O’Neil (Eds.) Technology assessment in education and training (p. 9-33). Hillsdale, NJ.: Lawrence Erlbaum Associates.

LaRose, R., Gregg, J., and Eastin, M. (July, 1998). Audiographic Telecourses for the Web: An experiment. Paper presented at the International Communication Association, Jerusalem, Israel.

Leasure, A. R., Davis, L., and Thievon, S. L. (2000). Comparison of student outcomes and preferences in a traditional vs. World Wide Web-based baccalaureate nursing research course. Journal of Nursing Education, 39, 149 – 154.

Liao, Y. K. C. (1999). Effects of hypermedia on students’ achievement: A meta-analysis. Journal of Educational Multimedia and Hypermedia, 8, 255 – 277.

Lou, Y., Abrami, P. C., and d’Apollonia, S. (2001). Small Group Learning and Individual Learning with Technology: A meta-analysis. Review of Educational Research, 71, 449 – 521.

Magalhaes, M. G. M., and Schiel, D. (1997). A method for evaluation of a course delivered via the World Wide Web in Brazil. In M. G. Moore and G. T. Cozine (Eds.) Web-Based Communications, the Internet, and Distance Education. University Park, PA: The Pennsylvania State University.

Maki, W. S., and Maki, R. H. (2002). Multimedia comprehension skill predicts differential outcomes of web-based and lecture courses. Journal of Experimental Psychology: Applied, 8, 85 – 98.

Maki, R. H., Maki, W. S., Patterson, M., and Whittaker, P. D. (2000). Evaluation of a web-based introductory psychology course: I. Learning and satisfaction in on-line versus lecture courses. Behavior Research Methods, Instruments, and Computers, 32, 230 – 239.

McNulty, J. A., Halama, J., Dauzvardis, M. F., and Espiritu, B. (2000). Evaluation of Web-based computer-aided instruction in a basic science course. Academic Medicine, 75(1), 59 – 65.

Murphy, T. H. (2000). An evaluation of a distance education course design for general soils. Journal of Agricultural Education, 41, 103 – 113.

Navarro, P., and Shoemaker, J. (2000). Performance and perceptions of distance learners in cyberspace. In M. G. Moore and G. T. Cozine (Eds.) Web-Based Communications, the Internet, and Distance Education. University Park, PA: The Pennsylvania State University.

Phelps, J., and Reynolds, R. (1999). Formative evaluation of a web-based course in meteorology. Computers and Education, 32, 181 – 193.

Phipps, R., and Merisotis, J. (1999). What’s the difference? A review of contemporary research on the effectiveness of distance learning in higher education. Washington DC.: The Institute for Higher Education Policy.

Powers, S. M., Davis, M., and Torrence, E. (1998). Assessing the classroom environment of the virtual classroom. Paper presented at the Annual Meeting of the Mid Western Educational Research Association, Chicago, Illinois.

Ryan, M., Carlton, K. H., and Ali, N. S. (1999). Evaluation of traditional classroom teaching methods versus course delivery via the World Wide Web. Journal of Nursing Education, 38, 272 – 277.

Saba, F. (2000). Research in distance education: A status report. International Review of Research in Open and Distance Learning, 1(1). Retrieved July 30, 2002 from: http://www.irrodl.org/content/v1.1/farhad.html

Saettler, P. (1990). The evolution of American educational technology. Englewood, CO.: Libraries Unlimited.

Sandercock, G. R. H., and Shaw, G. (1999). Learners’ performance and evaluation of attitudes towards web course tools in the delivery of an applied sports science module. Asynchronous Learning Networks Magazine, 3, 1 – 10.

Schlough, S., and Bhuripanyo, S. (1998). The development and evaluation of the Internet delivery of the course “Task Analysis.” Paper presented at the 9th International Conference for Information Technology and Teacher Education (SITE), Washington D.C.

Schulman, A. H., and Sims, R. L. (1999). Learning in an online format versus an in-class format: An experimental study. T.H.E. Journal, 26, 54 – 56.

Schutte, J. G. (1996). Virtual Teaching in Higher Education: The new intellectual superhighway or just another traffic jam? California State University, Northridge. Retrieved July 30, 2002 from: http://www.csun.edu/sociology/virexp.htm.

Serban, A. M. (2000). Evaluation of Fall 1999 Online Courses. Education at a Distance, 14, 45 – 49.

Shapley, P. (2000). On-line education to develop complex reasoning skills in organic chemistry. Journal of Asynchronous Learning Networks 4(2),

Shaw, G. P., and Pieter, W. (2000). The use of asynchronous learning networks in nutrition education: Student attitude, experiences, and performance. Journal of Asynchronous Learning Networks, 4, 40 – 51.

Shuell, T. J. (April, 2000). Teaching and learning in an online environment. Paper presented at the annual meeting of the American Educational Research Association, New Orleans, Louisiana.

Stadtlander, L. M. (1998). Virtual instruction: Teaching an online graduate seminar. Teaching of Psychology, 25, 146 – 148.

Summary, R. and Summary, L. (1998). The effectiveness of the World Wide Web as an instructional tool. Paper presented at the 3rd Annual Mid-South Instructional Technology Conference, Murfreesboro, Tennessee.

Taylor, C. D., and Burnkrant, S. R. (1999). Virginia Tech Spring 1999: Online Courses. Assessment Report to the Institute for Distance and Distributed Learning. Virginia Tech University.

Terry, N. (2001). Assessing the enrollment and attrition rates for the online MBA. T.H.E. Journal, 28, 64 – 68.

Thomson, J. S., and Stringer, S. B. (1998). Evaluating for distance learning: Feedback from students and faculty. Paper presented at the 14th Annual Conference on Distance Teaching and Learning, Madison, WI.

Trier, K. K. (1999). Comparison of the effectiveness of Web-based instruction to campus instruction. Retrieved July 30, 2002 from: http://www.as1.ipfw.edu/99tohe/presentations/trier.htm.

Verbrugge, W. (1997). Distance education via the Internet: Methodology and results. Paper presented at the 30th Annual Summer Conference Proceedings of Small Computer Users in Education. North Myrtle Beach, South Carolina.

Wang, X. C., Kanfer, A., Hinn, D. M., and Arvan, L. (2001). Stretching the Boundaries: Using ALN to reach on-campus students during an off-campus summer session. Journal of Asynchronous Learning Networks, 5, 1 – 20.

Waschull, S. B. (2001). The online delivery of psychology courses: Attrition, performance, and evaluation. Teaching of Psychology, 28, 143 – 147.

Wegner, S. B., Holloway, K. C., and Garton, E. M. (1999). The effects of Internet-based instruction on student learning. Journal of Asynchronous Learning Networks, 3, 98 – 106.

White, S. E. (April, 1999). The Effectiveness of Web-based Instruction: A case study. Paper presented at the Annual Meeting of the Central States Communication Association, St. Louis, MO.

Wisher, R., and Champagne, M. (2000). Distance learning and training: An evaluation perspective. In S. Tobias and J. Fletcher (Eds.) Training and Retraining: A handbook for business, industry, government, and military. New York: Macmillan Reference USA.

Wisher, R., and Curnow, C. (1999). Perceptions and effects of image transmissions during Internet-based training. The American Journal of Distance Education, 13, 37 – 51.

Woolf, B. P., and Regian, J. W. (2000). Knowledge-based training systems and the engineering of instruction. In S. Tobias and J. Fletcher (Eds.), Training and Retraining: A handbook for business, industry, government, and the military (p. 339-356). New York: Macmillan Reference USA.